Feature importance measures the contribution of each variable within a machine learning model, often based on criteria like Gini impurity or information gain. Permutation importance evaluates feature relevance by randomly shuffling feature values and observing the impact on model performance, offering a model-agnostic and more reliable interpretation. Understanding the distinction between these methods enhances the ability to interpret model behavior and improve predictive accuracy.

Table of Comparison

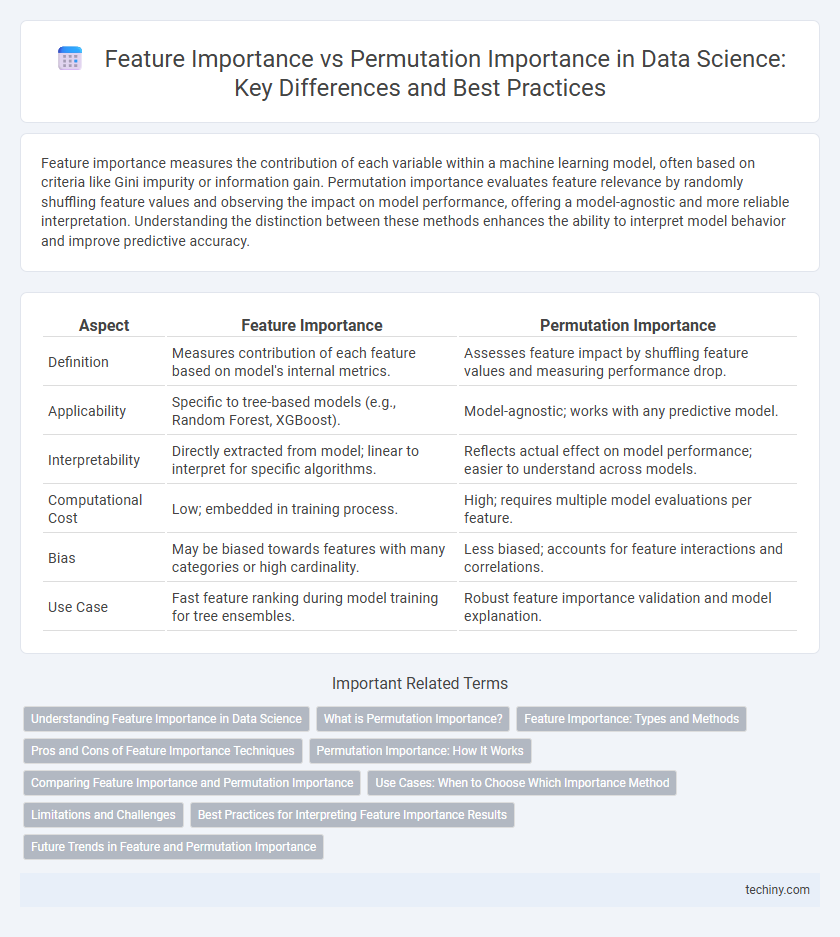

| Aspect | Feature Importance | Permutation Importance |

|---|---|---|

| Definition | Measures contribution of each feature based on model's internal metrics. | Assesses feature impact by shuffling feature values and measuring performance drop. |

| Applicability | Specific to tree-based models (e.g., Random Forest, XGBoost). | Model-agnostic; works with any predictive model. |

| Interpretability | Directly extracted from model; linear to interpret for specific algorithms. | Reflects actual effect on model performance; easier to understand across models. |

| Computational Cost | Low; embedded in training process. | High; requires multiple model evaluations per feature. |

| Bias | May be biased towards features with many categories or high cardinality. | Less biased; accounts for feature interactions and correlations. |

| Use Case | Fast feature ranking during model training for tree ensembles. | Robust feature importance validation and model explanation. |

Understanding Feature Importance in Data Science

Feature importance in data science quantifies the contribution of each input variable to model predictions, essential for interpretability and feature selection. Permutation importance measures feature significance by randomly shuffling individual feature values and observing the impact on model performance, offering a model-agnostic and unbiased evaluation method. Understanding these techniques helps data scientists identify key predictors, improve model accuracy, and enhance transparency in machine learning workflows.

What is Permutation Importance?

Permutation importance measures the significance of a feature by randomly shuffling its values and observing the impact on a model's performance metric, such as accuracy or RMSE. This technique provides a model-agnostic approach to quantify how much each feature contributes to predictive accuracy by breaking the relationship between the feature and the target variable. Unlike traditional feature importance derived from model-specific weights, permutation importance captures feature relevance by directly evaluating changes in model output after perturbation.

Feature Importance: Types and Methods

Feature importance quantifies the contribution of each feature to a predictive model's performance, with common types including Gini importance, Mean Decrease in Impurity (MDI), and Mean Decrease in Accuracy (MDA). Model-specific methods like tree-based algorithms rely on impurity reduction metrics, while model-agnostic approaches such as SHAP values and permutation importance evaluate feature effects by measuring changes in model output or error. Understanding these diverse methods enables accurate interpretation and selection of influential features for enhanced model explainability and robustness.

Pros and Cons of Feature Importance Techniques

Feature importance techniques such as mean decrease impurity (MDI) provide quick, model-specific insights but can be biased towards features with more categories or higher cardinality in tree-based models. Permutation importance, by measuring the increase in model error after shuffling feature values, offers a model-agnostic and more reliable estimate, although it is computationally intensive and can be unstable with correlated features. Both methods require careful interpretation since high importance does not necessarily imply causality, and feature dependencies may skew importance scores.

Permutation Importance: How It Works

Permutation importance measures feature significance by randomly shuffling a single feature's values and observing the impact on model performance, typically using metrics like accuracy or mean squared error. This approach quantifies the decrease in model effectiveness, indicating the feature's contribution while preserving the original data distribution. Unlike traditional feature importance derived from model coefficients, permutation importance offers a model-agnostic evaluation by directly assessing how disrupting each feature affects predictive accuracy.

Comparing Feature Importance and Permutation Importance

Feature importance measures the contribution of each feature based on the internal model parameters, often derived from tree-based algorithms like Random Forests or Gradient Boosting Machines. Permutation importance evaluates feature relevance by randomly shuffling feature values and assessing the increase in model prediction error, providing model-agnostic interpretability. Comparing both methods reveals that permutation importance offers a more reliable assessment in the presence of correlated features and non-linear relationships, while traditional feature importance is faster to compute but can be biased towards features with more splits or higher cardinality.

Use Cases: When to Choose Which Importance Method

Feature importance is ideal for models like random forests and gradient boosting, where internal metrics provide direct insights into variable contributions. Permutation importance excels in model-agnostic scenarios, assessing the impact of each feature by measuring performance degradation after shuffling feature values, making it suitable for complex or black-box models. Choosing between these methods depends on the model interpretability requirements and the specific task, such as using feature importance for initial variable selection and permutation importance for validating feature effects across diverse models.

Limitations and Challenges

Feature importance methods often suffer from bias toward variables with more levels or higher cardinality, leading to misleading interpretations in data science models. Permutation importance, while model-agnostic, can produce unstable results when features are strongly correlated, affecting the reliability of the importance scores. Both approaches face challenges in high-dimensional datasets where noise and multicollinearity obscure true feature contributions.

Best Practices for Interpreting Feature Importance Results

Feature importance measures the contribution of each variable to a predictive model, but permutation importance offers a more reliable assessment by evaluating changes in model performance after feature values are randomly shuffled. Best practices for interpreting these results include validating findings across multiple models, using permutation importance to detect correlated features' true relevance, and considering domain knowledge to avoid misleading conclusions. Properly combining these methods enhances model interpretability and supports robust, data-driven decision-making.

Future Trends in Feature and Permutation Importance

Future trends in feature importance emphasize integrating advanced interpretability techniques with deep learning models to enhance transparency and actionable insights. Permutation importance methods are evolving to address model-agnostic challenges, offering robust assessments in high-dimensional datasets and complex feature interactions. Emerging approaches leverage hybrid frameworks combining model-specific and permutation-based metrics to improve reliability and computational efficiency in real-world data science applications.

feature importance vs permutation importance Infographic

techiny.com

techiny.com