Min-Max Scaling transforms data to fit within a specific range, usually 0 to 1, preserving the original distribution shape but sensitive to outliers. Z-score Scaling standardizes data by centering it around the mean with a standard deviation of one, mitigating the impact of outliers and making it useful for algorithms assuming normally distributed data. Choosing between Min-Max and Z-score scaling depends on the dataset's characteristics and the requirements of the machine learning model.

Table of Comparison

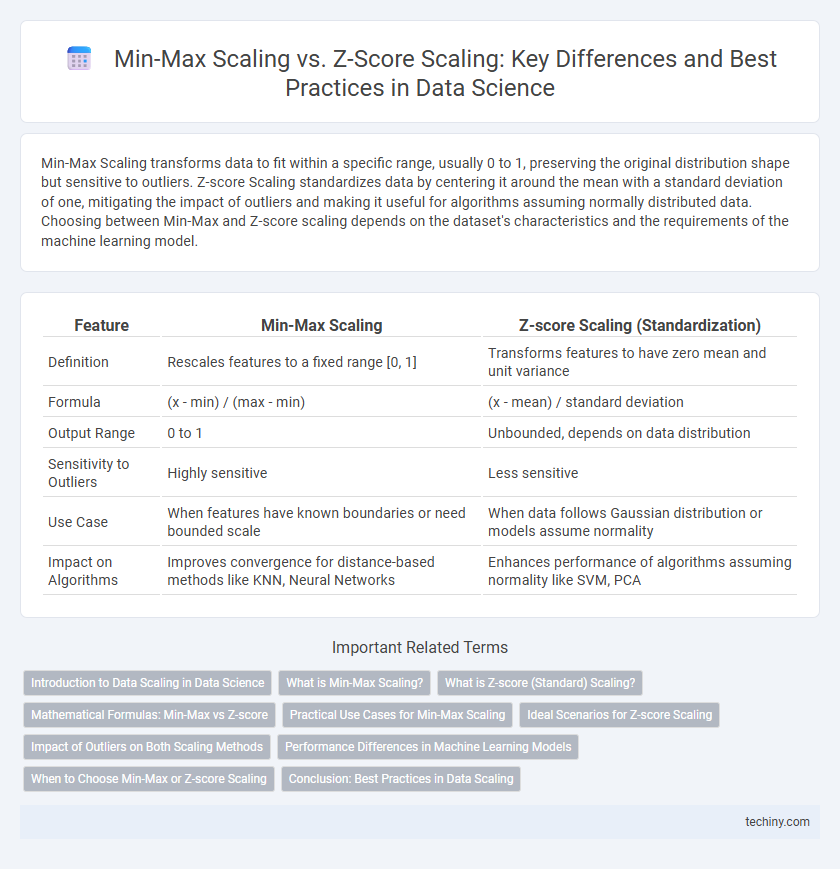

| Feature | Min-Max Scaling | Z-score Scaling (Standardization) |

|---|---|---|

| Definition | Rescales features to a fixed range [0, 1] | Transforms features to have zero mean and unit variance |

| Formula | (x - min) / (max - min) | (x - mean) / standard deviation |

| Output Range | 0 to 1 | Unbounded, depends on data distribution |

| Sensitivity to Outliers | Highly sensitive | Less sensitive |

| Use Case | When features have known boundaries or need bounded scale | When data follows Gaussian distribution or models assume normality |

| Impact on Algorithms | Improves convergence for distance-based methods like KNN, Neural Networks | Enhances performance of algorithms assuming normality like SVM, PCA |

Introduction to Data Scaling in Data Science

Data scaling techniques like Min-Max Scaling and Z-score Scaling normalize feature values to improve the performance of machine learning algorithms. Min-Max Scaling rescales data to a fixed range, typically [0, 1], preserving the relative distribution but is sensitive to outliers. Z-score Scaling standardizes data by centering around the mean with a unit standard deviation, which is robust to outliers and useful for algorithms assuming data is normally distributed.

What is Min-Max Scaling?

Min-Max Scaling is a normalization technique that transforms features by scaling the data to a fixed range, typically 0 to 1. This method subtracts the minimum value of the feature and then divides by the range, which is the difference between the maximum and minimum values. Min-Max Scaling is sensitive to outliers because it uses the extreme values of the data set to perform the transformation.

What is Z-score (Standard) Scaling?

Z-score scaling, also known as standard scaling, transforms data by subtracting the mean and dividing by the standard deviation, resulting in a distribution with a mean of zero and a standard deviation of one. This technique is essential in data science for normalizing features with different units or scales, enabling algorithms like PCA and SVM to perform more effectively. Z-score scaling is particularly useful when the dataset follows a Gaussian distribution or when outlier robustness is important.

Mathematical Formulas: Min-Max vs Z-score

Min-Max Scaling transforms data using the formula \( X' = \frac{X - X_{\min}}{X_{\max} - X_{\min}} \), rescaling values to a [0,1] range. Z-score Scaling standardizes data with the formula \( Z = \frac{X - \mu}{\sigma} \), converting values to represent the number of standard deviations from the mean. Understanding these mathematical formulas is essential for selecting the appropriate normalization technique in data preprocessing pipelines.

Practical Use Cases for Min-Max Scaling

Min-Max Scaling is ideal for data requiring a bounded range, especially in algorithms like neural networks and k-nearest neighbors that assume data within a fixed interval, typically [0,1]. It preserves the relationships of the original data distribution, making it suitable for image processing and financial data normalization where relative differences matter. This method enhances convergence speed and performance in gradient-based models by preventing features with larger magnitudes from dominating the learning process.

Ideal Scenarios for Z-score Scaling

Z-score scaling is ideal when the data contains outliers or follows a Gaussian distribution, as it standardizes features by centering them around the mean and scaling based on the standard deviation. This technique preserves the effect of outliers by assigning them appropriate negative or positive z-scores, preventing distortion in the data's variance. Models sensitive to feature scaling, such as Support Vector Machines and Principal Component Analysis, benefit from z-score normalization for improved performance and convergence.

Impact of Outliers on Both Scaling Methods

Min-Max scaling compresses all data points within a fixed range, typically 0 to 1, causing extreme outliers to skew the distribution and reduce the effectiveness of scaling for most data points. Z-score scaling standardizes data based on mean and standard deviation, making it more robust to outliers by centering data around the mean and expressing values in terms of standard deviations. Outliers significantly influence Min-Max scaling boundaries, whereas Z-score scaling mitigates outlier impact by adjusting data relative to overall variance.

Performance Differences in Machine Learning Models

Min-Max Scaling and Z-score Scaling impact machine learning model performance differently based on data distribution and algorithm sensitivity. Min-Max Scaling normalizes features to a specific range, enhancing convergence speed for gradient-based models like neural networks but may be sensitive to outliers. Z-score Scaling standardizes features by mean and standard deviation, providing robustness to outliers and improving performance in algorithms assuming Gaussian distribution, such as SVM and logistic regression.

When to Choose Min-Max or Z-score Scaling

Min-Max scaling is ideal for algorithms sensitive to feature magnitude and when data distribution is not Gaussian, as it scales features to a fixed range [0,1], preserving relative distances. Z-score scaling, or standardization, is preferable for data with outliers or approximately normal distribution, centering features by mean and scaling by standard deviation to achieve zero mean and unit variance. Choosing between Min-Max and Z-score scaling depends on the algorithm requirements, data distribution characteristics, and robustness to outliers in the dataset.

Conclusion: Best Practices in Data Scaling

Min-max scaling preserves the original data distribution by rescaling features to a fixed range, making it ideal for algorithms sensitive to magnitude such as neural networks. Z-score scaling standardizes features by centering data around zero with unit variance, improving performance in models assuming normality like logistic regression or SVM. Choosing the appropriate scaling method depends on the model's assumptions and the feature distribution, with robust preprocessing enhancing overall predictive accuracy and model convergence.

Min-Max Scaling vs Z-score Scaling Infographic

techiny.com

techiny.com