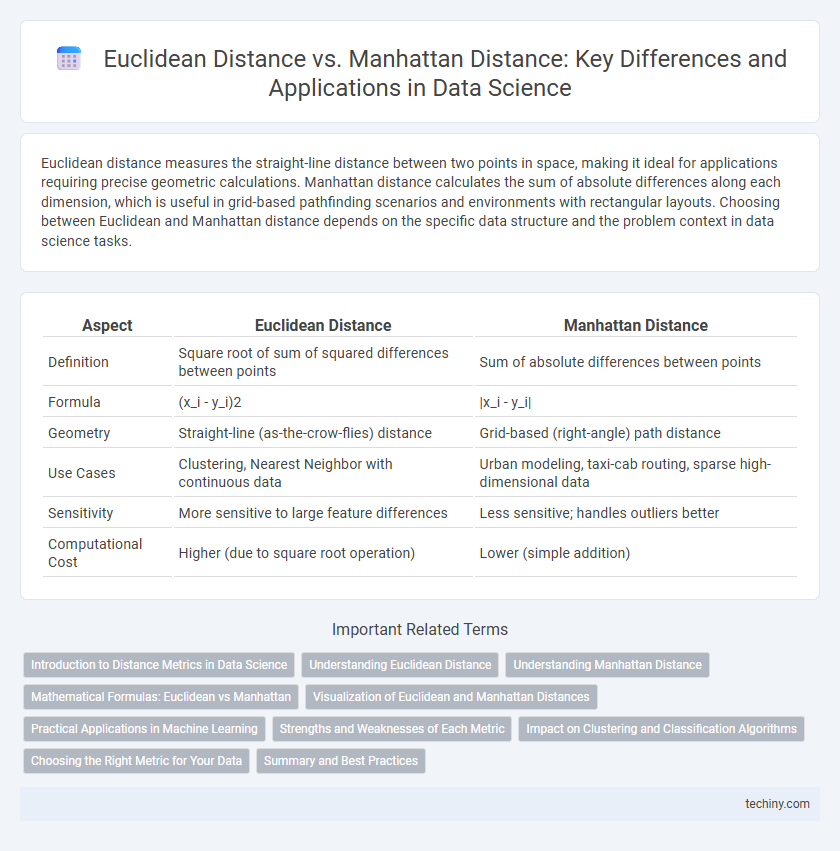

Euclidean distance measures the straight-line distance between two points in space, making it ideal for applications requiring precise geometric calculations. Manhattan distance calculates the sum of absolute differences along each dimension, which is useful in grid-based pathfinding scenarios and environments with rectangular layouts. Choosing between Euclidean and Manhattan distance depends on the specific data structure and the problem context in data science tasks.

Table of Comparison

| Aspect | Euclidean Distance | Manhattan Distance |

|---|---|---|

| Definition | Square root of sum of squared differences between points | Sum of absolute differences between points |

| Formula | (x_i - y_i)2 | |x_i - y_i| |

| Geometry | Straight-line (as-the-crow-flies) distance | Grid-based (right-angle) path distance |

| Use Cases | Clustering, Nearest Neighbor with continuous data | Urban modeling, taxi-cab routing, sparse high-dimensional data |

| Sensitivity | More sensitive to large feature differences | Less sensitive; handles outliers better |

| Computational Cost | Higher (due to square root operation) | Lower (simple addition) |

Introduction to Distance Metrics in Data Science

Euclidean distance measures the straight-line distance between two points in a multidimensional space, making it ideal for data with continuous features and geometric interpretations. Manhattan distance, also known as L1 distance, calculates the sum of absolute differences across dimensions, often used in grid-based and sparse data scenarios for its robustness to outliers. Both metrics play a critical role in clustering, nearest neighbor algorithms, and similarity analysis within data science, influencing model accuracy and computational efficiency.

Understanding Euclidean Distance

Euclidean Distance measures the straight-line distance between two points in a multidimensional space, calculated using the square root of the sum of squared differences across each dimension. This metric is widely used in clustering algorithms like K-Means and in nearest neighbor searches due to its intuitive geometric interpretation. Its sensitivity to large differences in any dimension makes it ideal for applications requiring precise measurement of magnitude differences in continuous data.

Understanding Manhattan Distance

Manhattan Distance, also known as L1 norm or taxicab distance, measures the distance between two points by summing the absolute differences of their coordinates, reflecting movement along grid-like paths. It is especially useful in high-dimensional spaces and urban grid scenarios where only orthogonal movements are possible, contrasting with Euclidean Distance's straight-line approach. This distance metric is less sensitive to outliers and better suited for discrete or sparse data in clustering and classification tasks.

Mathematical Formulas: Euclidean vs Manhattan

Euclidean distance is calculated using the formula (xi - yi)2, measuring the straight-line distance between two points in Euclidean space. Manhattan distance, defined as |xi - yi|, sums the absolute differences of their coordinates, reflecting grid-based movement. Both metrics are essential in clustering algorithms and nearest neighbor searches, with Euclidean distance emphasizing geometric proximity and Manhattan distance accounting for path-restricted navigation.

Visualization of Euclidean and Manhattan Distances

Visualizing Euclidean distance involves representing the shortest straight-line distance between points in a multidimensional space, often depicted as the hypotenuse of a right triangle in 2D or 3D plots. Manhattan distance visualization highlights the sum of absolute differences along each dimension, resembling a grid-based path similar to navigating city blocks. Plotting both distances on the same coordinate plane reveals Euclidean distance as a diagonal line and Manhattan distance as a stepwise path composed of horizontal and vertical segments, emphasizing their geometric and practical differences in data science applications.

Practical Applications in Machine Learning

Euclidean distance is widely applied in clustering algorithms such as K-means and in nearest neighbor searches where geometric similarity in continuous feature spaces is critical. Manhattan distance excels in high-dimensional feature spaces and is preferred in grid-like pathfinding problems and certain sparse data scenarios, like text mining and recommendation systems. Choosing between Euclidean and Manhattan distances significantly impacts model accuracy depending on data distribution and the nature of feature interactions.

Strengths and Weaknesses of Each Metric

Euclidean distance excels in measuring the straight-line distance between points, making it ideal for continuous data and scenarios where the shortest path matters, but it is sensitive to scale and outliers. Manhattan distance calculates distance based on grid-like paths, providing robustness to outliers and suitability for high-dimensional or discrete data, yet it may overestimate actual proximity when diagonal movement is relevant. Choosing between Euclidean and Manhattan distance depends on the data structure and the specific application, such as clustering or nearest neighbor algorithms.

Impact on Clustering and Classification Algorithms

Euclidean distance measures the straight-line distance between points, emphasizing geometric proximity, which benefits clustering algorithms like K-means by forming compact, spherical clusters. Manhattan distance calculates the sum of absolute differences along each dimension, making it more robust to outliers and better suited for high-dimensional data, often improving performance in classification tasks such as k-nearest neighbors (k-NN). The choice between these metrics directly impacts algorithm accuracy, cluster shape, and sensitivity to data scale, influencing model interpretability and effectiveness in real-world applications.

Choosing the Right Metric for Your Data

Euclidean distance measures the straight-line distance between points, making it ideal for continuous, geometric data where spatial relationships matter. Manhattan distance calculates the sum of absolute differences along each dimension, better suited for grid-like, high-dimensional, or sparse data where movement is restricted to orthogonal directions. Choosing the right metric depends on the data structure and problem context, as Euclidean distance emphasizes magnitude while Manhattan distance captures path-based proximity.

Summary and Best Practices

Euclidean distance calculates the straight-line distance between two points in a multi-dimensional space, making it ideal for continuous variables and applications requiring geometric proximity. Manhattan distance sums the absolute differences across dimensions, offering robustness in high-dimensional spaces and scenarios with grid-like path constraints. Best practices recommend using Euclidean distance for clustering and nearest neighbor algorithms with evenly scaled data, while Manhattan distance suits discrete or high-variance features and when interpretability in grid-based movements is essential.

Euclidean Distance vs Manhattan Distance Infographic

techiny.com

techiny.com