Mean imputation replaces missing data with the average value, offering a simple and quick solution but often underestimating variability and biasing results. Multiple imputation, however, generates several plausible values for each missing data point, capturing uncertainty and producing more accurate statistical estimates. Choosing between them depends on the dataset's complexity and the importance of preserving data variability in the analysis.

Table of Comparison

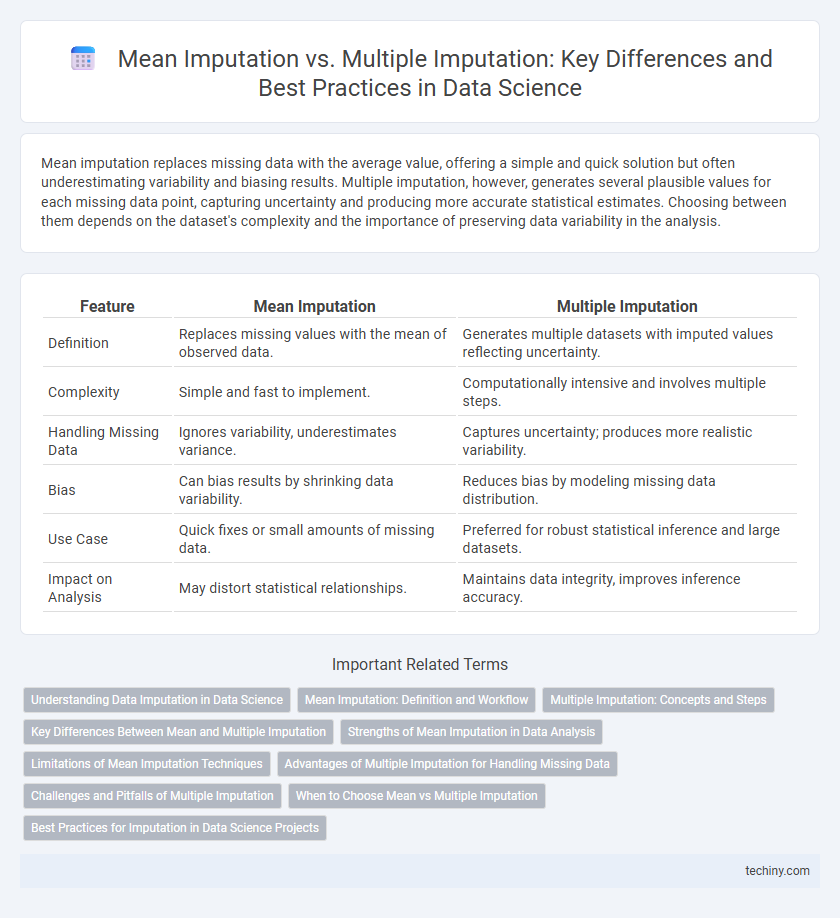

| Feature | Mean Imputation | Multiple Imputation |

|---|---|---|

| Definition | Replaces missing values with the mean of observed data. | Generates multiple datasets with imputed values reflecting uncertainty. |

| Complexity | Simple and fast to implement. | Computationally intensive and involves multiple steps. |

| Handling Missing Data | Ignores variability, underestimates variance. | Captures uncertainty; produces more realistic variability. |

| Bias | Can bias results by shrinking data variability. | Reduces bias by modeling missing data distribution. |

| Use Case | Quick fixes or small amounts of missing data. | Preferred for robust statistical inference and large datasets. |

| Impact on Analysis | May distort statistical relationships. | Maintains data integrity, improves inference accuracy. |

Understanding Data Imputation in Data Science

Mean imputation replaces missing data with the average value of a variable, offering simplicity but often underestimating variability and biasing statistical analyses. Multiple imputation generates several plausible values for missing data based on predictive models, preserving natural variance and improving inference accuracy. Understanding these imputation techniques is crucial for handling incomplete datasets and ensuring robust, reliable outcomes in data science projects.

Mean Imputation: Definition and Workflow

Mean imputation is a statistical technique used to handle missing data by replacing missing values with the mean of observed values for a specific variable, preserving dataset size and simplifying analysis. The workflow involves identifying missing data points, calculating the mean from available observations, and substituting these missing values with the calculated mean, which can lead to biased estimates and reduced variability. While mean imputation is computationally efficient, it often fails to account for the uncertainty of missing data, unlike multiple imputation techniques.

Multiple Imputation: Concepts and Steps

Multiple Imputation involves creating several complete datasets by replacing missing values with multiple sets of plausible estimates based on statistical models. This method improves the accuracy and validity of data analysis by accounting for the uncertainty inherent in missing data. Key steps include imputing missing values multiple times, analyzing each complete dataset separately, and then pooling the results to obtain final estimates.

Key Differences Between Mean and Multiple Imputation

Mean imputation replaces missing values with the average of observed data, leading to biased estimates and reduced variance, whereas multiple imputation generates several plausible values for each missing data point, preserving data variability and uncertainty. Multiple imputation applies statistical models to create multiple complete datasets, improving accuracy in parameter estimation and inference compared to the simplistic single-value approach of mean imputation. The key differences lie in the treatment of data uncertainty and the preservation of relationships among variables, where multiple imputation provides more robust and unbiased results for downstream analyses.

Strengths of Mean Imputation in Data Analysis

Mean imputation offers simplicity and speed, making it an efficient method for handling missing data in large datasets where computational resources or time are limited. It preserves the overall mean of a variable, reducing bias introduced by missing values while maintaining the variance structure in some cases. This technique is particularly effective when the proportion of missing data is low and the dataset exhibits minimal variability in the variable of interest.

Limitations of Mean Imputation Techniques

Mean imputation often leads to biased parameter estimates by reducing the variability of data and ignoring uncertainty about missing values, which can distort statistical analyses and predictive models. This technique assumes data are missing completely at random (MCAR), an assumption rarely met in real-world datasets, resulting in inaccurate or misleading conclusions. Furthermore, mean imputation can underestimate standard errors and weaken relationships between variables, compromising the robustness of data science insights.

Advantages of Multiple Imputation for Handling Missing Data

Multiple imputation improves data analysis accuracy by generating several plausible values for missing data, capturing the uncertainty inherent in incomplete datasets. It reduces bias and increases statistical power compared to mean imputation, which oversimplifies missing values by using a single mean estimate. This method provides more robust parameter estimates and valid standard errors, enhancing the reliability of inferential statistics in data science projects.

Challenges and Pitfalls of Multiple Imputation

Multiple imputation addresses missing data by creating several plausible datasets, but it presents challenges such as increased computational complexity and the need for appropriate model specification to avoid biased estimates. Incorrect assumptions about the missing data mechanism and improper pooling of results can lead to misleading inferences. Ensuring convergence and managing the variability between imputations are critical pitfalls that require careful attention during implementation.

When to Choose Mean vs Multiple Imputation

Mean imputation is suitable when dealing with small amounts of missing data in datasets with low complexity and when preserving the original dataset size is critical. Multiple imputation is more appropriate for datasets with substantial missing values and complex data structures, as it accounts for uncertainty and variability by generating several plausible estimates. Choose multiple imputation when accurate statistical inference and robust analysis are priorities, especially in predictive modeling and inferential statistics.

Best Practices for Imputation in Data Science Projects

Mean imputation offers simplicity by filling missing values with the dataset's average, but it can underestimate variability and bias results. Multiple imputation generates several plausible datasets by modeling the missing data distribution, enhancing accuracy and reflecting uncertainty. Best practices recommend using multiple imputation for robust analysis, especially when missingness is not random, while mean imputation may suit preliminary or less complex analyses.

Mean Imputation vs Multiple Imputation Infographic

techiny.com

techiny.com