Upsampling increases the number of instances in the minority class to balance imbalanced datasets, often using techniques like SMOTE to create synthetic samples. Downsampling reduces the number of instances in the majority class, which can lead to loss of valuable information but simplifies the dataset. Choosing between upsampling and downsampling depends on the dataset size, model performance, and the risk of overfitting or underfitting.

Table of Comparison

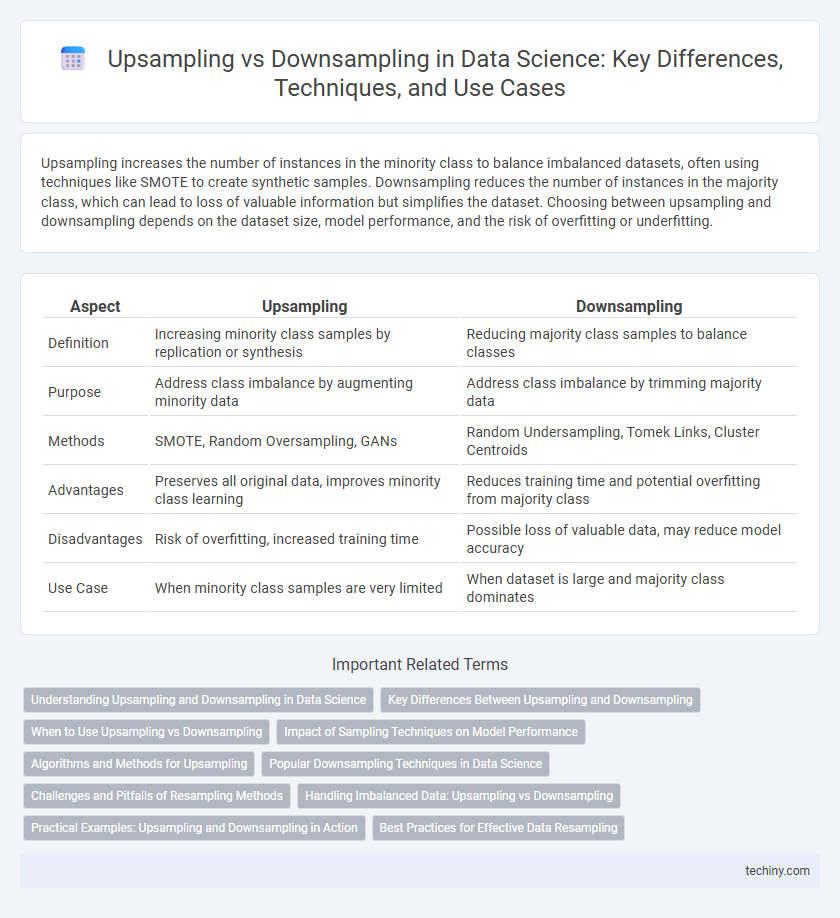

| Aspect | Upsampling | Downsampling |

|---|---|---|

| Definition | Increasing minority class samples by replication or synthesis | Reducing majority class samples to balance classes |

| Purpose | Address class imbalance by augmenting minority data | Address class imbalance by trimming majority data |

| Methods | SMOTE, Random Oversampling, GANs | Random Undersampling, Tomek Links, Cluster Centroids |

| Advantages | Preserves all original data, improves minority class learning | Reduces training time and potential overfitting from majority class |

| Disadvantages | Risk of overfitting, increased training time | Possible loss of valuable data, may reduce model accuracy |

| Use Case | When minority class samples are very limited | When dataset is large and majority class dominates |

Understanding Upsampling and Downsampling in Data Science

Upsampling increases the number of samples in a dataset by replicating or generating synthetic data points, enhancing model training on imbalanced classes. Downsampling reduces the dataset size by randomly removing samples from the majority class to balance class distribution, which helps prevent model bias toward the majority. Both techniques are crucial in data preprocessing for improving the performance and accuracy of machine learning algorithms on skewed datasets.

Key Differences Between Upsampling and Downsampling

Upsampling increases the size of a dataset by replicating or generating new data points to balance class distribution, primarily used to address class imbalance in classification tasks. Downsampling reduces the dataset size by randomly removing samples from the majority class, helping to prevent model bias towards dominant classes but risking loss of valuable information. Both techniques impact model training efficiency and accuracy, with upsampling potentially causing overfitting and downsampling possibly discarding important data patterns.

When to Use Upsampling vs Downsampling

Upsampling is best utilized in data science when dealing with imbalanced datasets where the minority class requires augmentation to improve model performance without losing information. Downsampling is preferred when the majority class is overwhelming and computational efficiency is essential, especially in large datasets, as it reduces sample size while maintaining class balance. Choosing between upsampling and downsampling depends on factors like dataset size, model complexity, and the importance of preserving minority class information.

Impact of Sampling Techniques on Model Performance

Upsampling increases the minority class size by replicating or synthesizing samples, improving model sensitivity but potentially causing overfitting. Downsampling reduces the majority class to balance the dataset, which can enhance training speed and reduce bias but may discard valuable information. The choice between upsampling and downsampling significantly impacts model accuracy, precision, recall, and overall generalization in imbalanced data scenarios.

Algorithms and Methods for Upsampling

Upsampling techniques in data science involve increasing the number of samples in a minority class to balance imbalanced datasets, crucial for improving model performance in classification tasks. Popular algorithms for upsampling include Synthetic Minority Over-sampling Technique (SMOTE), which generates synthetic samples based on feature space similarities, and Adaptive Synthetic Sampling (ADASYN), which focuses on generating samples in regions where data is sparse. These methods enhance algorithm generalization by providing a more representative training set, reducing bias toward majority classes in machine learning models.

Popular Downsampling Techniques in Data Science

Popular downsampling techniques in data science include random downsampling, which involves randomly selecting a subset of the majority class to balance the dataset, and stratified downsampling, which maintains the original class distribution proportions during the reduction process. Cluster-based downsampling groups similar instances using clustering algorithms like K-means to retain representative samples from each cluster, preserving important data characteristics. These methods help mitigate class imbalance issues, improving model performance while reducing computational cost.

Challenges and Pitfalls of Resampling Methods

Resampling methods like upsampling and downsampling are crucial in handling imbalanced datasets but often introduce challenges such as overfitting in upsampling due to repeated data points and potential loss of important information in downsampling by discarding samples. These techniques can also affect the generalization ability of machine learning models, leading to biased performance metrics. Careful validation and the use of hybrid or ensemble resampling strategies are essential to mitigate pitfalls like increased variance and reduced model robustness.

Handling Imbalanced Data: Upsampling vs Downsampling

Handling imbalanced data in data science involves techniques like upsampling and downsampling to balance class distributions for improved model performance. Upsampling increases minority class samples by replicating or generating synthetic data, such as using SMOTE, to prevent model bias toward the majority class. Downsampling reduces majority class instances to match the minority count, which can simplify training but risks information loss, making the choice dependent on dataset size and application requirements.

Practical Examples: Upsampling and Downsampling in Action

In data science, upsampling techniques increase minority class samples to balance datasets, commonly applied in fraud detection to amplify rare fraudulent transactions for model training. Downsampling reduces majority class instances, often used in churn prediction to prevent models from biasing toward predominant non-churn cases. Practical implementations of both methods enhance model accuracy and robustness by addressing class imbalance in datasets.

Best Practices for Effective Data Resampling

Effective data resampling in data science involves choosing upsampling techniques like SMOTE to generate synthetic minority class samples, enhancing model training on imbalanced datasets. Downsampling methods, such as random undersampling, reduce the majority class size to balance classes but risk losing valuable information. Best practices recommend combining resampling with cross-validation and feature selection to maintain data integrity and improve model generalization.

upsampling vs downsampling Infographic

techiny.com

techiny.com