Mean Squared Error (MSE) and Mean Absolute Error (MAE) are common metrics used to evaluate regression models in data science. MSE penalizes larger errors more heavily due to squaring, making it sensitive to outliers, while MAE provides a straightforward average of absolute errors, offering robustness against outliers. Choosing between MSE and MAE depends on the specific use case and whether larger errors need stricter penalization or a more balanced error evaluation is preferred.

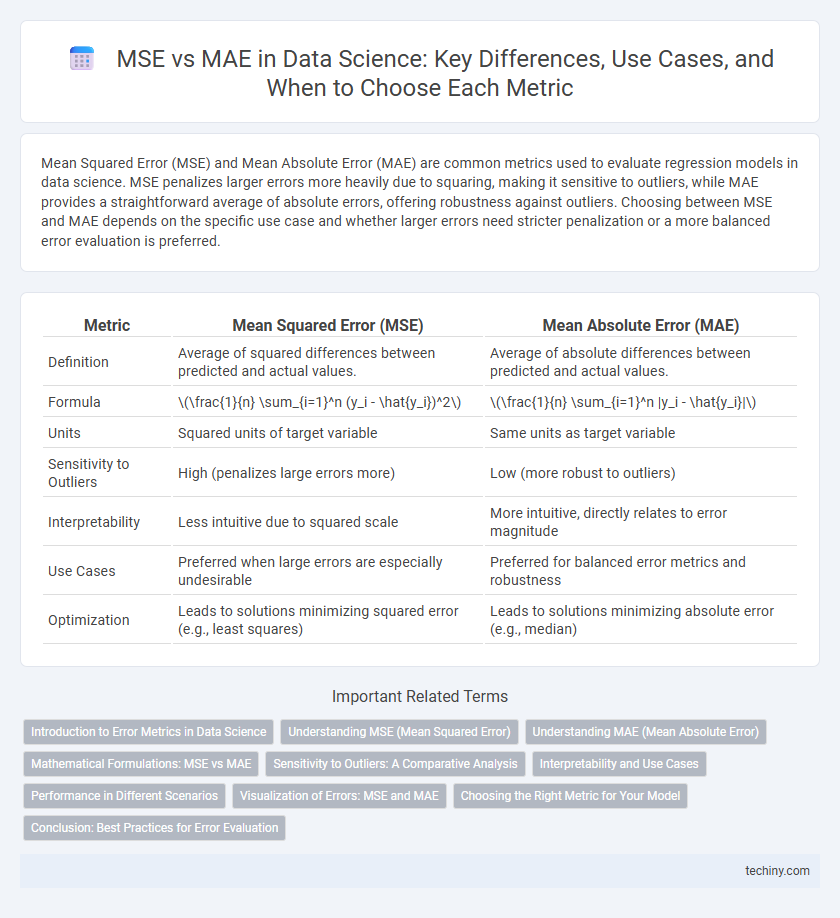

Table of Comparison

| Metric | Mean Squared Error (MSE) | Mean Absolute Error (MAE) |
|---|---|---|
| Definition | Average of squared differences between predicted and actual values. | Average of absolute differences between predicted and actual values. |
| Formula | \(\frac{1}{n} \sum_{i=1}^n (y_i - \hat{y}_i)^2\) | \(\frac{1}{n} \sum_{i=1}^n \lvert y_i - \hat{y}_i \rvert\) |
| Units | Squared units of target variable | Same units as target variable |
| Sensitivity to Outliers | High (penalizes large errors more) | Low (more robust to outliers) |
| Interpretability | Less intuitive due to squared scale | More intuitive, directly relates to error magnitude |
| Use Cases | Preferred when large errors are especially undesirable | Preferred for balanced error metrics and robustness |
| Optimization | Leads to solutions minimizing squared error (e.g., least squares) | Leads to solutions minimizing absolute error (e.g., median) |
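
The Optimization row can be checked numerically. The sketch below is illustrative only: the sample `values`, the candidate grid, and the helper names `mse_of_constant` and `mae_of_constant` are assumptions made for this example. It searches for the single constant prediction that minimizes each metric and compares the result with the mean and the median of the data.

```python
from statistics import mean, median


def mse_of_constant(values, c):
    """MSE obtained when every value is predicted by the constant c."""
    return sum((v - c) ** 2 for v in values) / len(values)


def mae_of_constant(values, c):
    """MAE obtained when every value is predicted by the constant c."""
    return sum(abs(v - c) for v in values) / len(values)


# Hypothetical sample with one extreme value.
values = [1.0, 2.0, 3.0, 4.0, 100.0]

# Brute-force search over a grid of candidate constants: 0.0, 0.1, ..., 100.0.
candidates = [i / 10 for i in range(0, 1001)]
best_for_mse = min(candidates, key=lambda c: mse_of_constant(values, c))
best_for_mae = min(candidates, key=lambda c: mae_of_constant(values, c))

print(best_for_mse, mean(values))    # 22.0 22.0
print(best_for_mae, median(values))  # 3.0 3.0
```

On this sample the constant that minimizes MSE is the mean, which the extreme value drags upward, while the constant that minimizes MAE is the median, which stays with the bulk of the data.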

Introduction to Error Metrics in Data Science

MSE and MAE both quantify the difference between predicted and actual values, but they aggregate the residuals differently. MSE squares each residual before averaging, so a few large errors can dominate the score; MAE averages the absolute residuals, so each error contributes in proportion to its size. Which one to use depends on how costly large errors are in the regression task at hand.

Understanding MSE (Mean Squared Error)

Mean Squared Error (MSE) is the average of the squared differences between predicted and actual values. Because each residual is squared, an error twice as large contributes four times as much to the score, so MSE penalizes large deviations far more than small ones. This matters for models where occasional large misses are costly. MSE is also smooth and differentiable everywhere, which makes it a convenient objective for gradient-based training of regression models.
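
One way to see how squaring shifts the emphasis onto large errors is to compare the per-sample derivatives of the two losses with respect to the prediction. This is a standard calculus result, written here in the notation used elsewhere in this article:

\[
\frac{\partial}{\partial \hat{y}_i} (y_i - \hat{y}_i)^2 = -2\,(y_i - \hat{y}_i),
\qquad
\frac{\partial}{\partial \hat{y}_i} \lvert y_i - \hat{y}_i \rvert = -\operatorname{sign}(y_i - \hat{y}_i) \quad \text{for } y_i \neq \hat{y}_i .
\]

The squared-error derivative grows with the size of the residual, so large errors pull hardest on the model. The absolute-error derivative has constant magnitude and is undefined at zero residual.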

Understanding MAE (Mean Absolute Error)

Mean Absolute Error (MAE) measures the average magnitude of errors in a set of predictions, without considering their direction. It is the average of the absolute differences between predicted and actual values, which gives an interpretable error value in the same units as the target variable. It is particularly useful when each error should count in proportion to its size and outliers should not disproportionately influence the metric.

Mathematical Formulations: MSE vs MAE

Mean Squared Error (MSE) calculates the average of the squares of the errors, given by \( \text{MSE} = \frac{1}{n} \sum_{i=1}^n (y_i - \hat{y}_i)^2 \), where \( y_i \) represents actual values and \( \hat{y}_i \) predicted values. Mean Absolute Error (MAE) computes the average of absolute differences between actual and predicted values, defined as \( \text{MAE} = \frac{1}{n} \sum_{i=1}^n |y_i - \hat{y}_i| \). MSE penalizes larger errors more heavily due to squaring, while MAE treats all errors linearly, impacting model evaluation in regression analysis.
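
The two formulas translate directly into code. The following is a minimal sketch using only the Python standard library; the function names `mse` and `mae` and the sample values are chosen for this illustration and are not taken from any particular library.

```python
def mse(y_true, y_pred):
    """Mean of squared differences between actual and predicted values."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean of absolute differences between actual and predicted values."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(y - p) for y, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical actual and predicted values; residuals are 1, 0, -2, -1.
y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.0, 5.0, 4.0, 8.0]

mse_value = mse(y_true, y_pred)  # (1 + 0 + 4 + 1) / 4
mae_value = mae(y_true, y_pred)  # (1 + 0 + 2 + 1) / 4
print(mse_value, mae_value)      # 1.5 1.0
```

The residual of size 2 accounts for two thirds of the squared-error total but only half of the absolute-error total.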

Sensitivity to Outliers: A Comparative Analysis

Mean Squared Error (MSE) is highly sensitive to outliers because it penalizes errors quadratically, so a handful of extreme residuals can dominate the score. Mean Absolute Error (MAE) penalizes errors linearly, which limits the influence of extreme values. MAE is therefore preferable when a dataset contains many outliers that should not drive the evaluation, whereas MSE suits cases where large errors are genuinely costly and should be driven down.
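
A small experiment makes the difference concrete. In this sketch the residuals are made-up numbers and the helpers `mse_from_residuals` and `mae_from_residuals` are hypothetical names for this example. One ordinary residual is replaced with an extreme one, and the growth of each metric is compared.

```python
def mse_from_residuals(residuals):
    """Mean of squared residuals."""
    return sum(r ** 2 for r in residuals) / len(residuals)


def mae_from_residuals(residuals):
    """Mean of absolute residuals."""
    return sum(abs(r) for r in residuals) / len(residuals)


# Hypothetical residuals from a well-behaved model.
clean = [1.0, -1.0, 2.0, -2.0, 1.0]
# Same residuals, but the last one is replaced by a single outlier.
with_outlier = clean[:-1] + [20.0]

mse_growth = mse_from_residuals(with_outlier) / mse_from_residuals(clean)
mae_growth = mae_from_residuals(with_outlier) / mae_from_residuals(clean)

print(round(mse_growth, 1))  # 37.3
print(round(mae_growth, 1))  # 3.7
```

With these numbers a single outlier inflates MSE roughly ten times more than it inflates MAE.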

Interpretability and Use Cases

Because MSE is expressed in squared units of the target, its value is harder to read directly, but its emphasis on large errors suits applications where big misses must be penalized, such as regression models in finance or meteorology. MAE is in the same units as the target, so it reads directly as the typical size of an error, which gives clearer insight in fields like healthcare or real estate price prediction. The choice comes down to whether avoiding occasional large errors matters more than keeping the typical error small.

Performance in Different Scenarios

MSE penalizes larger errors more heavily because of squaring, which helps when large errors must be minimized but makes it sensitive to outliers. MAE weights errors linearly, giving a more robust measure on datasets with outliers or heavy-tailed, non-normal error distributions. As training objectives, minimizing MSE pulls predictions toward the mean of the target, while minimizing MAE pulls them toward the median, so models fit with MAE tend to be more stable when errors vary widely.

Visualization of Errors: MSE and MAE

Plots make the difference between the two metrics easy to see. In a residual plot or error histogram, a few points far from zero account for most of the MSE, because their squared residuals dwarf the rest, while the same points contribute to MAE only in proportion to their distance from zero. Comparing the two views side by side shows MSE's sensitivity to extreme values against MAE's linear treatment of deviations.
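
As a rough, text-only stand-in for such a plot, the sketch below prints how much of each metric's total comes from each residual. It assumes no plotting library is available; the residuals are invented for illustration and `error_shares` is a hypothetical helper name.

```python
def error_shares(residuals):
    """Return (residual, share of absolute error, share of squared error)."""
    abs_total = sum(abs(r) for r in residuals)
    sq_total = sum(r ** 2 for r in residuals)
    if abs_total == 0:
        raise ValueError("all residuals are zero; shares are undefined")
    return [(r, abs(r) / abs_total, r ** 2 / sq_total) for r in residuals]


# Hypothetical residuals: four small errors and one large one.
residuals = [0.5, -1.0, 1.5, -0.5, 8.0]
shares = error_shares(residuals)

for r, abs_share, sq_share in shares:
    # Bar length is proportional to the share, on a 40-character scale.
    print(f"{r:6.1f}  MAE share {abs_share:6.1%} {'#' * round(abs_share * 40)}")
    print(f"        MSE share {sq_share:6.1%} {'#' * round(sq_share * 40)}")
```

In the printed bars, the largest residual takes up a visibly bigger share of the squared-error total than of the absolute-error total, which is the effect a residual plot or histogram would highlight.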

Choosing the Right Metric for Your Model

MSE and MAE both evaluate regression models, but they answer different questions. Choose MSE when large errors are particularly undesirable and should dominate the assessment; it squares the errors, so it is sensitive to outliers. Choose MAE when every error should count in proportion to its size; it is easy to interpret and robust to outliers.

Conclusion: Best Practices for Error Evaluation

MSE is more sensitive to large errors and is the better choice when large misses must be penalized heavily. MAE is more robust because it weights errors linearly, which suits applications where the typical error size matters more than rare extremes. Reporting both gives a fuller picture: two models with similar MAE but very different MSE differ mainly in their largest errors.
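
Reporting the two metrics side by side can be sketched as follows. The helper `regression_report` and both sets of predictions are hypothetical and exist only for this example.

```python
def regression_report(y_true, y_pred):
    """Return MSE and MAE together for one set of predictions."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    residuals = [y - p for y, p in zip(y_true, y_pred)]
    n = len(residuals)
    return {
        "mse": sum(r ** 2 for r in residuals) / n,
        "mae": sum(abs(r) for r in residuals) / n,
    }


actual = [10.0, 12.0, 9.0, 11.0]
model_a = [11.0, 11.0, 10.0, 10.0]  # every prediction is off by 1
model_b = [10.0, 12.0, 9.0, 15.0]   # three exact predictions, one off by 4

report_a = regression_report(actual, model_a)
report_b = regression_report(actual, model_b)
print(report_a)  # {'mse': 1.0, 'mae': 1.0}
print(report_b)  # {'mse': 4.0, 'mae': 1.0}
```

Both hypothetical models have the same MAE, so MAE alone cannot tell them apart. The higher MSE of the second model reveals that its error is concentrated in a single large miss.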

techiny.com
