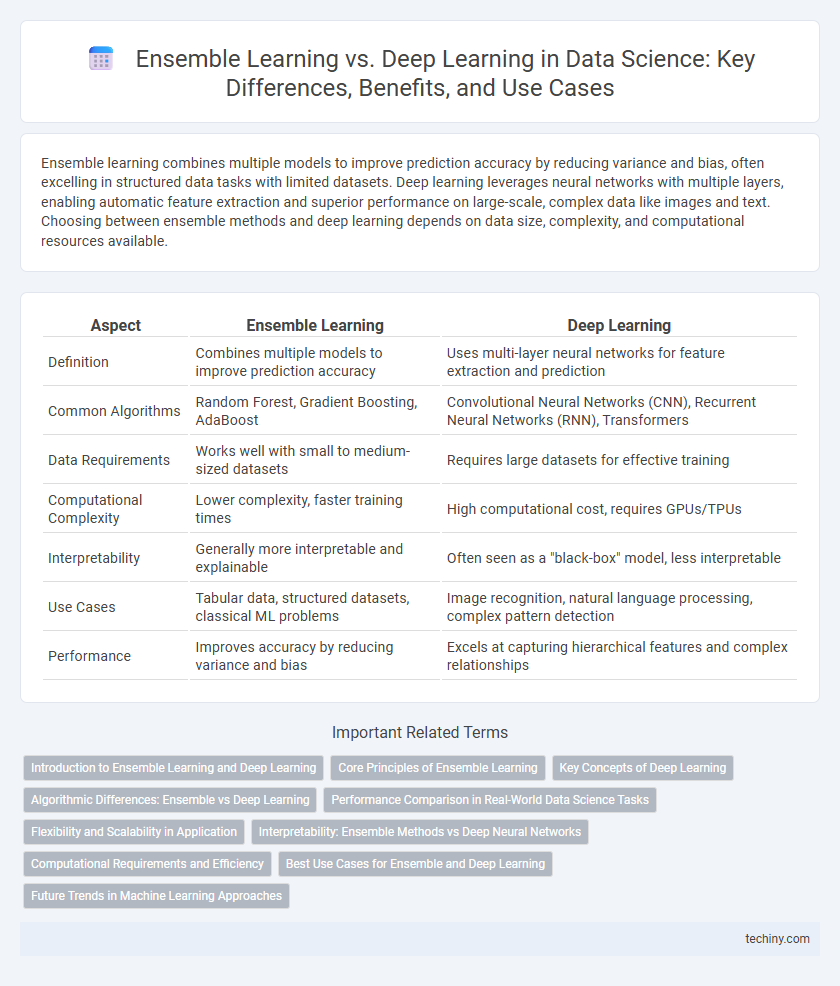

Ensemble learning combines multiple models to improve prediction accuracy by reducing variance and bias, often excelling in structured data tasks with limited datasets. Deep learning leverages neural networks with multiple layers, enabling automatic feature extraction and superior performance on large-scale, complex data like images and text. Choosing between ensemble methods and deep learning depends on data size, complexity, and computational resources available.

Table of Comparison

| Aspect | Ensemble Learning | Deep Learning |

|---|---|---|

| Definition | Combines multiple models to improve prediction accuracy | Uses multi-layer neural networks for feature extraction and prediction |

| Common Algorithms | Random Forest, Gradient Boosting, AdaBoost | Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNN), Transformers |

| Data Requirements | Works well with small to medium-sized datasets | Requires large datasets for effective training |

| Computational Complexity | Lower complexity, faster training times | High computational cost, requires GPUs/TPUs |

| Interpretability | Generally more interpretable and explainable | Often seen as a "black-box" model, less interpretable |

| Use Cases | Tabular data, structured datasets, classical ML problems | Image recognition, natural language processing, complex pattern detection |

| Performance | Improves accuracy by reducing variance and bias | Excels at capturing hierarchical features and complex relationships |

Introduction to Ensemble Learning and Deep Learning

Ensemble learning combines multiple machine learning models to improve predictive performance by reducing variance and bias, often using methods such as bagging, boosting, and stacking. Deep learning leverages artificial neural networks with multiple layers to automatically learn hierarchical feature representations from large datasets, excelling in complex pattern recognition tasks. Both approaches enhance model accuracy but differ fundamentally in architecture, with ensemble learning aggregating diverse base learners and deep learning focusing on deep neural network optimization.

Core Principles of Ensemble Learning

Ensemble learning combines multiple base models to improve overall predictive performance by reducing variance, bias, or enhancing stability compared to individual models. Core principles include diversity among base learners, which ensures that errors are uncorrelated, and aggregation methods such as bagging, boosting, and stacking that consolidate predictions into a final output. This approach contrasts with deep learning's reliance on layered neural networks to automatically extract hierarchical feature representations from data.

Key Concepts of Deep Learning

Deep learning utilizes artificial neural networks with multiple layers to model complex patterns in large datasets, enabling advanced feature extraction and hierarchical representation. Key concepts include convolutional layers for image data, recurrent architectures for sequential data, and backpropagation for efficient training of deep models. This approach excels in tasks requiring high-level abstraction, such as speech recognition and natural language processing.

Algorithmic Differences: Ensemble vs Deep Learning

Ensemble learning combines multiple base models such as decision trees, support vector machines, or logistic regression to improve predictive performance by aggregating their outputs through techniques like bagging, boosting, or stacking. Deep learning relies on neural networks with multiple layers, enabling hierarchical feature extraction and end-to-end learning from raw data. Ensemble algorithms optimize diversity among learners to reduce model variance and bias, while deep learning models focus on adaptive representation learning through backpropagation and non-linear activation functions.

Performance Comparison in Real-World Data Science Tasks

Ensemble learning often outperforms individual deep learning models in terms of generalization and robustness on real-world data science tasks by combining multiple weak learners to reduce variance and bias. Deep learning models excel with large, high-dimensional datasets by capturing complex patterns through hierarchical feature extraction but may overfit smaller datasets. Performance comparison depends heavily on dataset size, interpretability requirements, and computational resources, with ensemble methods like Random Forests and Gradient Boosting frequently achieving competitive accuracy in structured data scenarios.

Flexibility and Scalability in Application

Ensemble learning offers greater flexibility by combining multiple models to improve prediction accuracy across diverse datasets, making it ideal for varying feature spaces and heterogeneous data sources. Deep learning excels in scalability, effectively handling large-scale and high-dimensional data through layered neural architectures that automatically extract complex patterns. Both approaches adapt to different application needs, with ensemble methods providing modular flexibility and deep learning enabling scalable end-to-end learning pipelines.

Interpretability: Ensemble Methods vs Deep Neural Networks

Ensemble learning techniques, such as random forests and gradient boosting, offer higher interpretability due to their ability to provide feature importance and decision tree visualizations. Deep neural networks, characterized by complex architectures and numerous parameters, often act as black boxes, making it challenging to explain individual predictions. Techniques like SHAP and LIME help interpret deep learning models but generally lag behind ensemble methods in transparency and ease of understanding.

Computational Requirements and Efficiency

Ensemble learning methods generally demand higher computational resources due to the need for training multiple base models, leading to increased memory usage and processing time compared to deep learning models. Deep learning leverages GPU acceleration and specialized hardware to optimize matrix operations, achieving higher efficiency in large-scale data scenarios. However, ensemble models often provide better robustness and interpretability at the cost of computational overhead, whereas deep learning models excel in automated feature extraction with improved scalability.

Best Use Cases for Ensemble and Deep Learning

Ensemble learning excels in structured data tasks such as fraud detection, credit scoring, and risk assessment by combining multiple models to enhance predictive accuracy and reduce overfitting. Deep learning is best suited for unstructured data applications including image recognition, natural language processing, and speech analysis due to its ability to automatically extract complex feature hierarchies from raw data. Choosing ensemble methods is ideal when interpretability and performance on tabular datasets are crucial, while deep learning is preferred for large-scale datasets with high-dimensional inputs and complex patterns.

Future Trends in Machine Learning Approaches

Ensemble learning techniques are evolving to improve model robustness and interpretability by combining diverse algorithms, enhancing predictive accuracy in complex datasets. Deep learning advancements focus on developing more efficient architectures like transformers and self-supervised learning, which reduce reliance on labeled data and expand applicability across domains. Future machine learning trends emphasize hybrid models merging ensemble strategies with deep neural networks to leverage complementary strengths for scalable, adaptable AI solutions.

ensemble learning vs deep learning Infographic

techiny.com

techiny.com