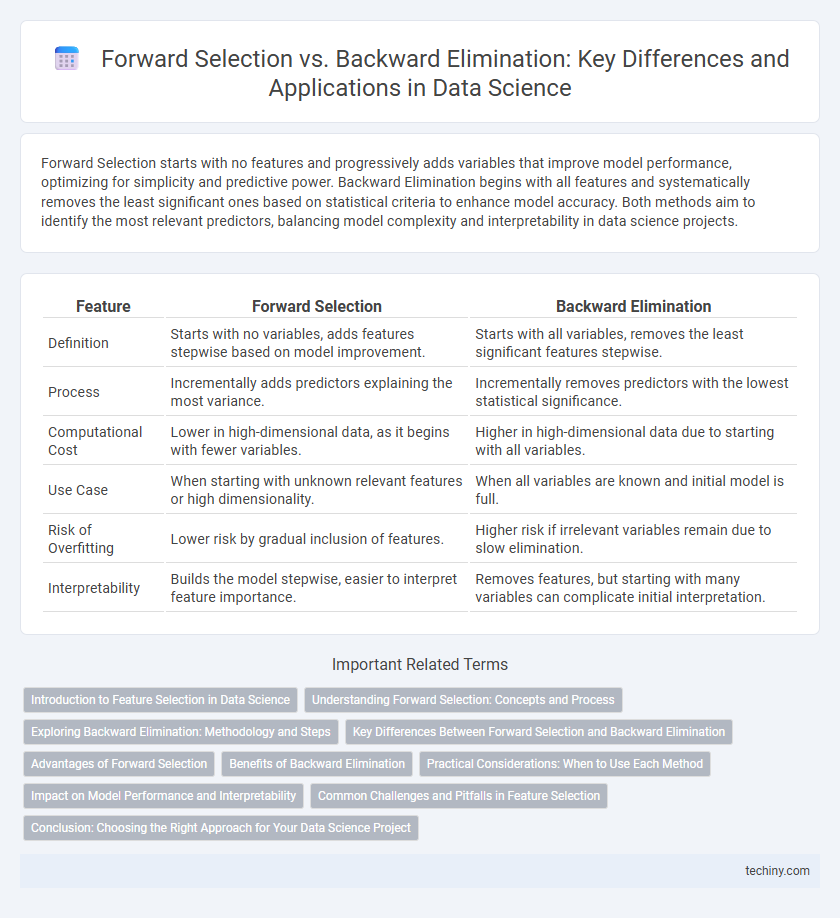

Forward Selection starts with no features and progressively adds variables that improve model performance, optimizing for simplicity and predictive power. Backward Elimination begins with all features and systematically removes the least significant ones based on statistical criteria to enhance model accuracy. Both methods aim to identify the most relevant predictors, balancing model complexity and interpretability in data science projects.

Table of Comparison

| Feature | Forward Selection | Backward Elimination |

|---|---|---|

| Definition | Starts with no variables, adds features stepwise based on model improvement. | Starts with all variables, removes the least significant features stepwise. |

| Process | Incrementally adds predictors explaining the most variance. | Incrementally removes predictors with the lowest statistical significance. |

| Computational Cost | Lower in high-dimensional data, as it begins with fewer variables. | Higher in high-dimensional data due to starting with all variables. |

| Use Case | When starting with unknown relevant features or high dimensionality. | When all variables are known and initial model is full. |

| Risk of Overfitting | Lower risk by gradual inclusion of features. | Higher risk if irrelevant variables remain due to slow elimination. |

| Interpretability | Builds the model stepwise, easier to interpret feature importance. | Removes features, but starting with many variables can complicate initial interpretation. |

Introduction to Feature Selection in Data Science

Feature selection in data science improves model performance by identifying the most relevant variables from large datasets. Forward selection begins with no features, progressively adding those that enhance model accuracy, while backward elimination starts with all features, removing the least significant ones step-by-step. Both methods reduce overfitting, improve interpretability, and decrease computational cost by selecting optimal feature subsets.

Understanding Forward Selection: Concepts and Process

Forward selection in data science is a stepwise regression technique that begins with an empty model and iteratively adds predictors based on their statistical significance and improvement in model performance. This method evaluates each candidate variable's contribution to reducing prediction error, ensuring only relevant features are included to enhance model accuracy. The process continues until no additional variables significantly improve the model, optimizing feature selection while preventing overfitting.

Exploring Backward Elimination: Methodology and Steps

Backward Elimination is a stepwise regression technique that begins with all candidate variables and iteratively removes the least significant predictor based on p-values or a chosen criterion like AIC until only statistically significant variables remain. This method helps reduce model complexity and multicollinearity by systematically excluding features that contribute the least to model performance. By evaluating each step's impact on model metrics such as R-squared or adjusted R-squared, it ensures the final model balances simplicity and predictive power.

Key Differences Between Forward Selection and Backward Elimination

Forward Selection initiates model building with no variables and progressively adds the most significant predictors based on statistical criteria, optimizing model simplicity and interpretability. Backward Elimination starts with all candidate features and iteratively removes the least significant variables, ensuring comprehensive consideration of all potential predictors at the outset. Key differences include the starting point of variable inclusion, computational efficiency, and the risk of excluding relevant variables early in Forward Selection versus retaining redundant features longer in Backward Elimination.

Advantages of Forward Selection

Forward Selection offers the advantage of reduced computational complexity by starting with an empty model and adding features incrementally, making it efficient for high-dimensional datasets. It helps prevent overfitting by including only the most statistically significant variables, improving model interpretability. This method allows early stopping once an optimal subset is identified, saving time compared to exhaustive search techniques.

Benefits of Backward Elimination

Backward Elimination simplifies model selection by starting with all features, ensuring no important variables are initially excluded, which enhances the chances of identifying the most significant predictors. This method reduces overfitting by systematically removing less relevant variables, leading to a more interpretable and generalizable model. It is particularly effective in datasets with many features, improving computational efficiency and model accuracy.

Practical Considerations: When to Use Each Method

Forward selection is preferred when dealing with a large number of features and limited computational resources, as it starts with no variables and adds them incrementally based on improvement criteria. Backward elimination suits smaller feature sets because it begins with all variables and removes the least significant ones, requiring more initial computation but potentially identifying better interactions. In practice, forward selection is advantageous for exploratory analysis or when feature relevance is unknown, while backward elimination is ideal when model complexity needs to be reduced from an established comprehensive set.

Impact on Model Performance and Interpretability

Forward selection incrementally adds features based on their statistical significance, often resulting in simpler models with high interpretability but potentially missing interactions among variables, which can impact predictive performance. Backward elimination starts with all candidate features and systematically removes the least significant ones, typically producing models that balance complexity and accuracy but may include redundant variables, complicating interpretation. Both methods impact model performance by influencing bias-variance trade-offs, where forward selection favors sparsity and interpretability, while backward elimination may enhance prediction by retaining more features.

Common Challenges and Pitfalls in Feature Selection

Forward Selection often struggles with overlooking important features due to its greedy nature, potentially leading to suboptimal models by stopping early. Backward Elimination faces challenges with computational cost, especially in high-dimensional datasets, as it evaluates the removal of each feature iteratively. Both methods risk multicollinearity issues and model overfitting without proper cross-validation and regularization techniques.

Conclusion: Choosing the Right Approach for Your Data Science Project

Forward Selection is ideal for datasets with a large number of features and limited computational resources, as it incrementally adds the most significant variables, reducing model complexity efficiently. Backward Elimination suits scenarios where starting with a full model is feasible, enabling the removal of less important features to improve interpretability and performance. Selecting between these methods depends on dataset size, feature relevance, and computational constraints, ensuring optimal model accuracy and resource utilization.

Forward Selection vs Backward Elimination Infographic

techiny.com

techiny.com