XGBoost and LightGBM are powerful gradient boosting frameworks widely used in data science for their efficiency and accuracy. XGBoost excels with its robust handling of sparse data and strong regularization techniques, while LightGBM offers faster training speed and lower memory usage by leveraging histogram-based algorithms and leaf-wise tree growth. Choosing between them depends on the specific dataset size, feature characteristics, and the need for speed versus interpretability in predictive modeling tasks.

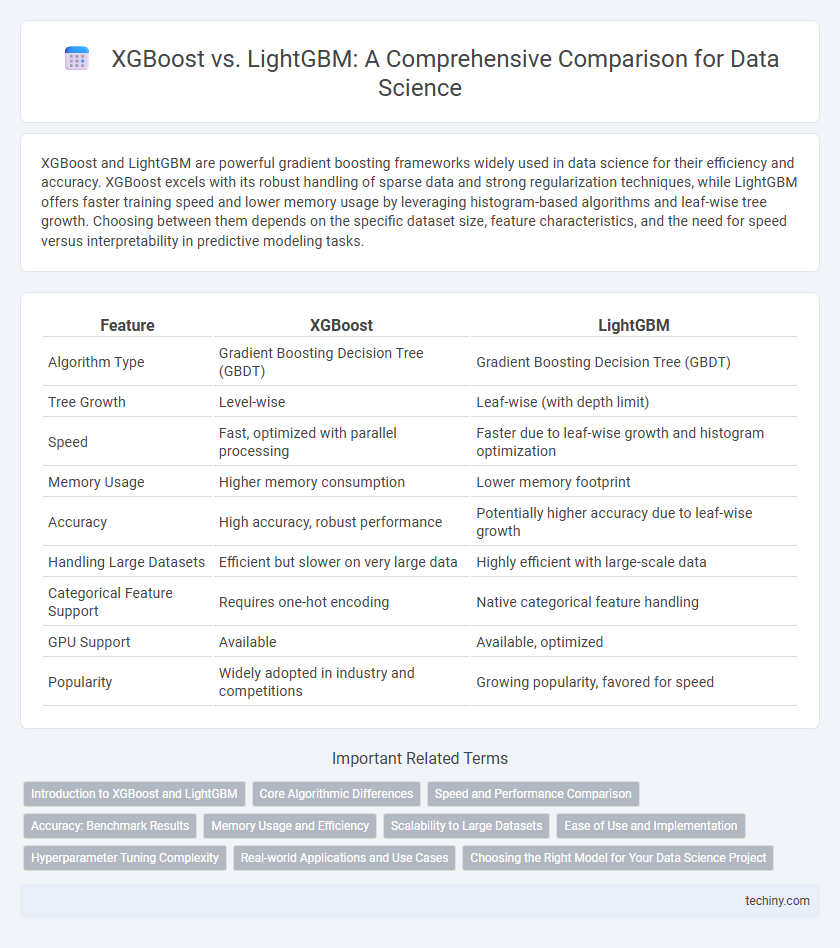

Table of Comparison

| Feature | XGBoost | LightGBM |

|---|---|---|

| Algorithm Type | Gradient Boosting Decision Tree (GBDT) | Gradient Boosting Decision Tree (GBDT) |

| Tree Growth | Level-wise | Leaf-wise (with depth limit) |

| Speed | Fast, optimized with parallel processing | Faster due to leaf-wise growth and histogram optimization |

| Memory Usage | Higher memory consumption | Lower memory footprint |

| Accuracy | High accuracy, robust performance | Potentially higher accuracy due to leaf-wise growth |

| Handling Large Datasets | Efficient but slower on very large data | Highly efficient with large-scale data |

| Categorical Feature Support | Requires one-hot encoding | Native categorical feature handling |

| GPU Support | Available | Available, optimized |

| Popularity | Widely adopted in industry and competitions | Growing popularity, favored for speed |

Introduction to XGBoost and LightGBM

XGBoost and LightGBM are advanced gradient boosting frameworks widely used in data science for their high predictive accuracy and efficiency. XGBoost excels with its regularization techniques and parallel processing, making it suitable for various complex datasets. LightGBM uses a leaf-wise growth approach and histogram-based algorithms, enabling faster training speed and lower memory usage, especially with large-scale data.

Core Algorithmic Differences

XGBoost relies on a level-wise tree growth approach, constructing trees by expanding all nodes at the same depth simultaneously, which often leads to more balanced trees but higher computational cost. LightGBM employs a leaf-wise tree growth strategy with a best-first approach, prioritizing splitting the leaf with the highest loss reduction, resulting in deeper trees and improved accuracy but increased risk of overfitting. Furthermore, LightGBM uses histogram-based decision trees with gradient-based one-side sampling (GOSS) and exclusive feature bundling (EFB) to enhance efficiency, while XGBoost primarily focuses on exact greedy algorithms for split finding.

Speed and Performance Comparison

XGBoost and LightGBM are leading gradient boosting frameworks widely used in data science for predictive modeling tasks. LightGBM often outperforms XGBoost in training speed due to its histogram-based algorithm and leaf-wise tree growth, enabling faster handling of large datasets and higher efficiency in memory usage. Performance metrics typically show comparable accuracy, but LightGBM can achieve better results on large-scale data with complex features, while XGBoost remains robust and effective for smaller datasets.

Accuracy: Benchmark Results

Benchmark results reveal that XGBoost and LightGBM exhibit competitive accuracy across various datasets, with LightGBM often outperforming XGBoost in large-scale scenarios due to its leaf-wise tree growth strategy. Studies report LightGBM achieving higher accuracy scores on classification and regression tasks, especially with high-dimensional data, while XGBoost maintains robustness and slightly better stability in small to medium datasets. Both algorithms leverage gradient boosting, but LightGBM's histogram-based approach enables efficient handling of categorical features, enhancing its predictive performance in complex data environments.

Memory Usage and Efficiency

XGBoost and LightGBM are both powerful gradient boosting frameworks widely used in data science for predictive modeling, with LightGBM typically demonstrating superior memory efficiency due to its leaf-wise tree growth and histogram-based algorithms. LightGBM uses less memory by employing exclusive feature bundling and gradient-based one-side sampling, which significantly reduces data size without sacrificing accuracy. Although XGBoost supports pruning and weighted quantile sketch, LightGBM's optimized data structure and parallel learning make it more efficient for large-scale datasets and resource-constrained environments.

Scalability to Large Datasets

XGBoost and LightGBM are both powerful gradient boosting frameworks optimized for large datasets, but LightGBM offers superior scalability due to its histogram-based algorithm and leaf-wise tree growth, which reduces memory usage and computational cost. LightGBM's efficient parallel and GPU learning capabilities allow it to handle massive datasets with millions of examples more quickly than XGBoost, which relies on a level-wise growth method. Scalability in LightGBM is further enhanced by its support for distributed training across multiple machines, making it a preferred choice for large-scale data science projects.

Ease of Use and Implementation

XGBoost offers straightforward implementation with extensive documentation and a wide array of tutorials, making it accessible for beginners and experts alike. LightGBM provides a seamless integration with major machine learning frameworks, featuring automatic handling of categorical features that simplifies preprocessing. Both libraries support parallel and distributed computing, but LightGBM's optimized memory usage often leads to faster training times in large-scale datasets.

Hyperparameter Tuning Complexity

XGBoost requires careful hyperparameter tuning due to its numerous parameters like max_depth, eta, and subsample, which directly impact model performance and overfitting. LightGBM offers faster training but involves complex parameters such as num_leaves and min_data_in_leaf, demanding extensive experimentation to optimize for accuracy and speed. Both frameworks benefit significantly from grid search or Bayesian optimization techniques to balance tuning complexity and predictive power.

Real-world Applications and Use Cases

XGBoost and LightGBM are widely used in real-world data science projects due to their high performance in classification and regression tasks. XGBoost is preferred in scenarios requiring robust handling of missing data and interpretability, such as fraud detection and credit scoring. LightGBM excels in large-scale applications with high-dimensional datasets, like click-through rate prediction and large-scale recommendation systems, due to its faster training speed and lower memory usage.

Choosing the Right Model for Your Data Science Project

XGBoost and LightGBM are both powerful gradient boosting frameworks widely used in data science for structured data modeling, with XGBoost excelling in smaller datasets due to its robustness and detailed tree pruning, while LightGBM offers faster training speed and lower memory usage, making it ideal for large-scale data. Choosing the right model depends on the dataset size, feature characteristics, and performance requirements; LightGBM supports categorical features natively and handles high-dimensional data efficiently, whereas XGBoost provides better handling of sparse data and missing values. Evaluating cross-validation scores, computational resources, and model interpretability is crucial to align the choice with project goals and deployment constraints.

XGBoost vs LightGBM Infographic

techiny.com

techiny.com