Early stopping and dropout are two popular regularization techniques used to prevent overfitting in data science models. Early stopping halts training when the model's performance on a validation set begins to degrade, ensuring the model does not learn noise from the training data. Dropout randomly deactivates neurons during training, promoting robustness by forcing the model to learn redundant representations and reducing co-adaptation of features.

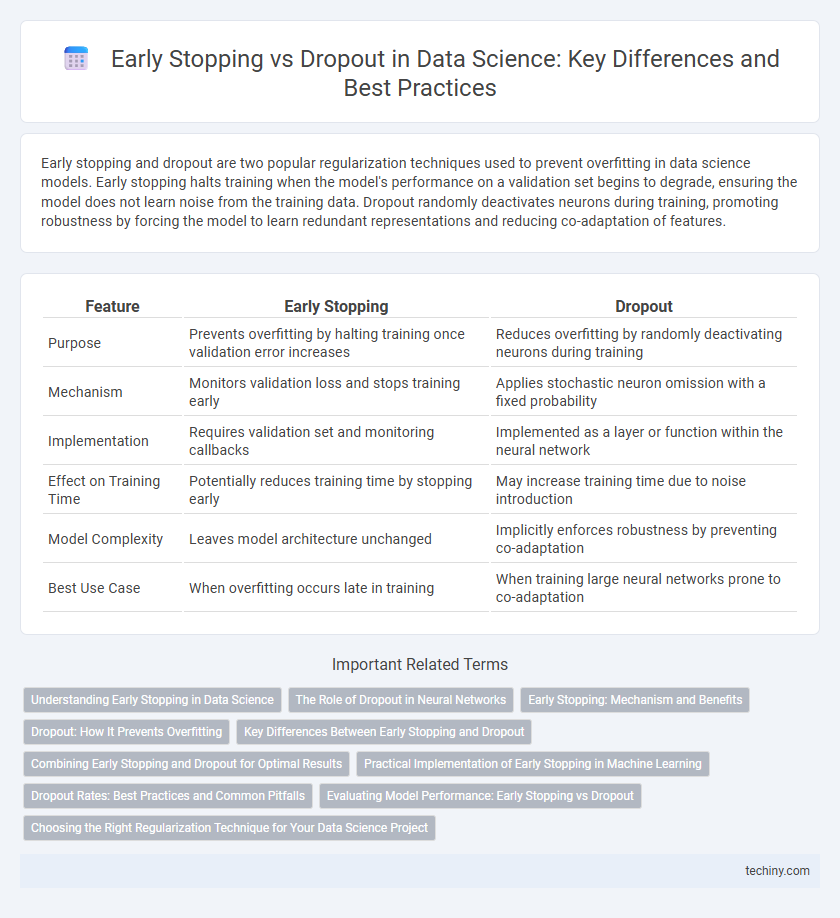

Table of Comparison

| Feature | Early Stopping | Dropout |

|---|---|---|

| Purpose | Prevents overfitting by halting training once validation error increases | Reduces overfitting by randomly deactivating neurons during training |

| Mechanism | Monitors validation loss and stops training early | Applies stochastic neuron omission with a fixed probability |

| Implementation | Requires validation set and monitoring callbacks | Implemented as a layer or function within the neural network |

| Effect on Training Time | Potentially reduces training time by stopping early | May increase training time due to noise introduction |

| Model Complexity | Leaves model architecture unchanged | Implicitly enforces robustness by preventing co-adaptation |

| Best Use Case | When overfitting occurs late in training | When training large neural networks prone to co-adaptation |

Understanding Early Stopping in Data Science

Early stopping is a regularization technique in data science that halts model training once performance on a validation set begins to degrade, preventing overfitting and saving computational resources. Unlike dropout, which randomly deactivates neurons during training to reduce model complexity, early stopping monitors validation loss or accuracy to determine the optimal stopping point. This method is especially effective in iterative algorithms like gradient descent, ensuring the model generalizes well to unseen data without relying on network architecture changes.

The Role of Dropout in Neural Networks

Dropout in neural networks serves as a powerful regularization technique by randomly deactivating neurons during training, which prevents overfitting and enhances model generalization. Unlike early stopping that halts training based on validation performance, dropout modifies the network structure dynamically to create an ensemble effect within a single model. This stochastic neuron omission helps neural networks learn more robust features and improves predictive accuracy on unseen data.

Early Stopping: Mechanism and Benefits

Early stopping monitors model performance on a validation set during training and halts the process when performance degrades, preventing overfitting. This mechanism reduces computational cost by avoiding unnecessary epochs while ensuring model generalization. Early stopping is particularly effective in iterative algorithms like neural networks, where prolonged training often leads to diminishing returns and overfitting.

Dropout: How It Prevents Overfitting

Dropout prevents overfitting in neural networks by randomly deactivating a fraction of neurons during training, which forces the model to learn redundant representations and improves generalization. This technique reduces reliance on specific nodes, effectively creating an ensemble of subnetworks that enhance robustness against noise and variance in the data. Dropout's impact is particularly significant in deep learning architectures, where complex models are prone to memorizing training data without capturing underlying patterns.

Key Differences Between Early Stopping and Dropout

Early stopping and dropout are regularization techniques used to prevent overfitting in machine learning models. Early stopping monitors the model's performance on a validation set during training and halts training when performance deteriorates, whereas dropout randomly deactivates neurons during training to reduce co-adaptation and improve generalization. Early stopping directly controls training duration based on model behavior, while dropout modifies the network architecture dynamically to promote robustness.

Combining Early Stopping and Dropout for Optimal Results

Combining early stopping and dropout enhances model generalization by preventing both overfitting and underfitting in neural networks. Early stopping monitors validation loss to halt training when performance degrades, while dropout randomly deactivates neurons during training to reduce co-adaptation. This synergy leverages dropout's regularization with early stopping's adaptive training time, optimizing model accuracy and robustness.

Practical Implementation of Early Stopping in Machine Learning

Early stopping is a regularization technique used during neural network training to prevent overfitting by halting the training process once the model's performance on a validation set stops improving. Practically, early stopping requires monitoring validation loss or accuracy after each epoch and terminating training when these metrics degrade or plateau for a predefined number of epochs, known as the patience parameter. This method is computationally efficient and often preferable over dropout when training time and model convergence stability are priorities.

Dropout Rates: Best Practices and Common Pitfalls

Dropout rates typically range from 0.2 to 0.5, balancing model regularization and training efficiency to prevent overfitting in neural networks. Setting dropout rates too high can cause underfitting by excessively reducing the model's capacity, while rates that are too low may fail to provide sufficient regularization. Best practices recommend tuning dropout rates through cross-validation, considering model complexity and dataset size to optimize generalization performance.

Evaluating Model Performance: Early Stopping vs Dropout

Early stopping monitors validation loss to halt training once performance degrades, effectively preventing overfitting and saving computational resources. Dropout randomly deactivates neurons during training, promoting generalization by reducing co-adaptation of features and enhancing model robustness. Combining early stopping with dropout often yields optimal model performance by balancing bias and variance while minimizing overfitting risks.

Choosing the Right Regularization Technique for Your Data Science Project

Choosing the right regularization technique is crucial for improving model generalization and preventing overfitting in data science projects. Early stopping halts training once the validation error plateaus, saving computational resources and maintaining model performance, while dropout randomly disables neurons during training to promote robustness by preventing co-adaptations. Selecting between early stopping and dropout depends on dataset size, model complexity, and training stability, with early stopping favored for quicker convergence and dropout for enhancing model resilience in deep neural networks.

early stopping vs drop out Infographic

techiny.com

techiny.com