Logistic regression excels in binary classification by modeling the probability of class membership with a straightforward linear decision boundary, making it interpretable and efficient for linearly separable data. Support Vector Machines (SVM) enhance classification performance through maximizing the margin between classes and effectively handling non-linear separability with kernel functions. While logistic regression offers probabilistic outputs, SVM focuses on finding optimal hyperplanes, often resulting in better accuracy for complex datasets.

Table of Comparison

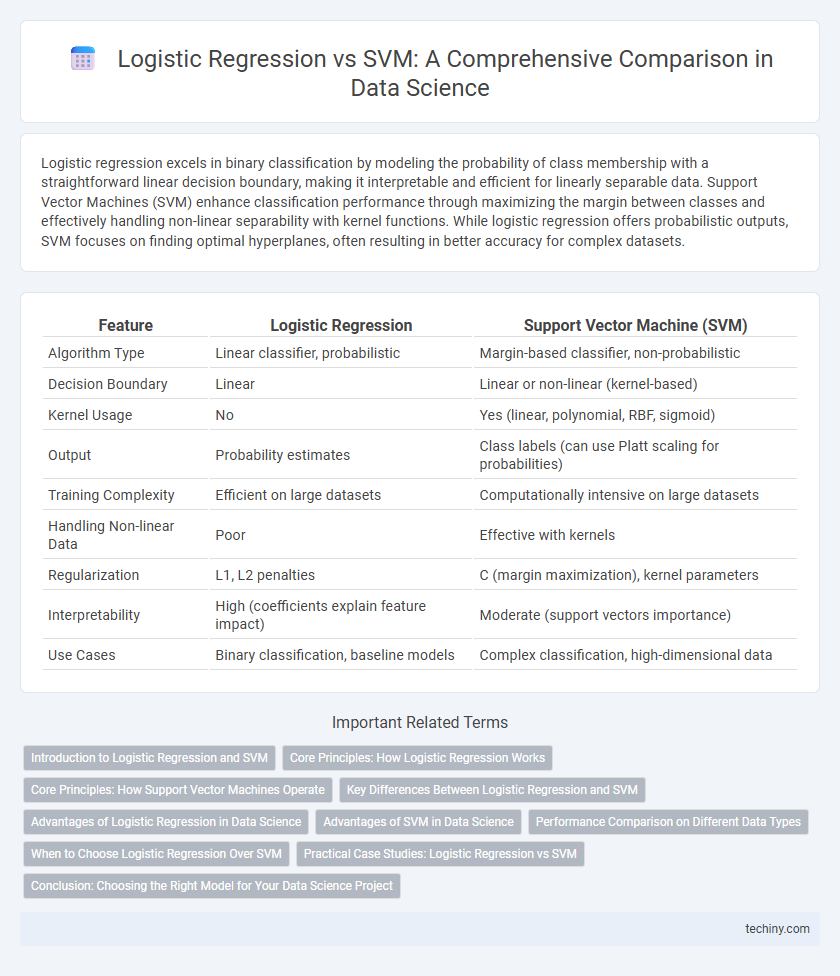

| Feature | Logistic Regression | Support Vector Machine (SVM) |

|---|---|---|

| Algorithm Type | Linear classifier, probabilistic | Margin-based classifier, non-probabilistic |

| Decision Boundary | Linear | Linear or non-linear (kernel-based) |

| Kernel Usage | No | Yes (linear, polynomial, RBF, sigmoid) |

| Output | Probability estimates | Class labels (can use Platt scaling for probabilities) |

| Training Complexity | Efficient on large datasets | Computationally intensive on large datasets |

| Handling Non-linear Data | Poor | Effective with kernels |

| Regularization | L1, L2 penalties | C (margin maximization), kernel parameters |

| Interpretability | High (coefficients explain feature impact) | Moderate (support vectors importance) |

| Use Cases | Binary classification, baseline models | Complex classification, high-dimensional data |

Introduction to Logistic Regression and SVM

Logistic regression is a statistical model used for binary classification by estimating the probability of an outcome based on input features through a logistic function. Support Vector Machines (SVM) are supervised learning algorithms that find the optimal hyperplane to separate classes in high-dimensional space, maximizing the margin between data points of different categories. Both techniques are fundamental in classification tasks, with logistic regression providing probabilistic outputs and SVM excelling in complex, non-linear decision boundaries using kernel methods.

Core Principles: How Logistic Regression Works

Logistic regression models the probability of a binary outcome using the logistic function, transforming a linear combination of input features into a value between 0 and 1. It estimates model parameters by maximizing the likelihood function through techniques like gradient descent, ensuring the best fit to the observed data. This approach offers interpretable coefficients, providing insights into the relationship between variables and the predicted probability.

Core Principles: How Support Vector Machines Operate

Support Vector Machines (SVM) operate by finding the optimal hyperplane that maximizes the margin between different classes in a high-dimensional feature space, effectively separating the data points. Unlike logistic regression, which estimates probabilities using a logistic function, SVM focuses on maximizing the margin to ensure better generalization, especially in cases of non-linearly separable data through the use of kernel functions. This margin maximization principle allows SVMs to handle complex classification tasks with high accuracy and robustness against overfitting.

Key Differences Between Logistic Regression and SVM

Logistic Regression predicts probabilities using a linear decision boundary optimized by maximizing the likelihood, making it suitable for linearly separable data and probabilistic interpretation. Support Vector Machine (SVM) constructs a hyperplane that maximizes the margin between classes, leveraging kernel functions to handle non-linear separability and focusing on support vectors rather than the entire dataset. Unlike Logistic Regression, SVM prioritizes margin maximization over probability estimation, often resulting in better performance on complex, high-dimensional datasets.

Advantages of Logistic Regression in Data Science

Logistic regression offers advantages in data science due to its simplicity, interpretability, and efficiency with large datasets. It provides probabilistic outputs, making it easier to understand and communicate model predictions compared to SVM's binary classification. Logistic regression performs well with linearly separable data and requires less computational power, making it suitable for real-time applications and large-scale problems.

Advantages of SVM in Data Science

Support Vector Machines (SVM) excel in handling high-dimensional datasets and complex, nonlinear relationships through the kernel trick, making them highly effective in various data science applications such as image recognition and text classification. SVMs provide robust performance by maximizing the margin between classes, which enhances generalization and reduces overfitting compared to logistic regression. Their ability to work well with small to medium-sized datasets and their effectiveness in cases with clear margin separation give SVM a distinct advantage in predictive modeling tasks.

Performance Comparison on Different Data Types

Logistic regression excels in linearly separable data with balanced classes, offering interpretable probability estimates and faster training times. Support Vector Machines (SVM) outperform when handling high-dimensional or non-linear data by utilizing kernel functions to find optimal separating hyperplanes. For noisy datasets, logistic regression tends to be more robust, while SVM may overfit unless carefully regularized.

When to Choose Logistic Regression Over SVM

Logistic regression is preferred over SVM when interpretability and probabilistic outputs are essential, such as in medical diagnosis or credit scoring where understanding feature influence is critical. It performs well with linearly separable data and smaller datasets, offering faster training times and less computational complexity compared to SVM. Logistic regression is also more effective when the dataset includes noise and outliers, as SVM's margin maximization can be overly sensitive in such scenarios.

Practical Case Studies: Logistic Regression vs SVM

Logistic Regression excels in binary classification tasks where interpretability and probabilistic outputs are crucial, such as in medical diagnosis and credit scoring case studies. Support Vector Machines (SVM) often outperform logistic regression in high-dimensional spaces with clear margin separation, making them ideal for text classification and image recognition scenarios. Practical applications show logistic regression's simplicity benefits model deployment while SVM's robustness handles complex, non-linear boundaries effectively.

Conclusion: Choosing the Right Model for Your Data Science Project

Logistic regression excels in interpretability and is ideal for linearly separable data with a clear probabilistic framework, making it suitable for straightforward classification tasks. Support Vector Machines (SVM) offer powerful performance on both linear and non-linear datasets by using kernel functions, making them effective for complex data distributions and high-dimensional spaces. Selecting the right model depends on the trade-off between interpretability, computational cost, and data characteristics such as feature dimensionality and linearity.

logistic regression vs SVM Infographic

techiny.com

techiny.com