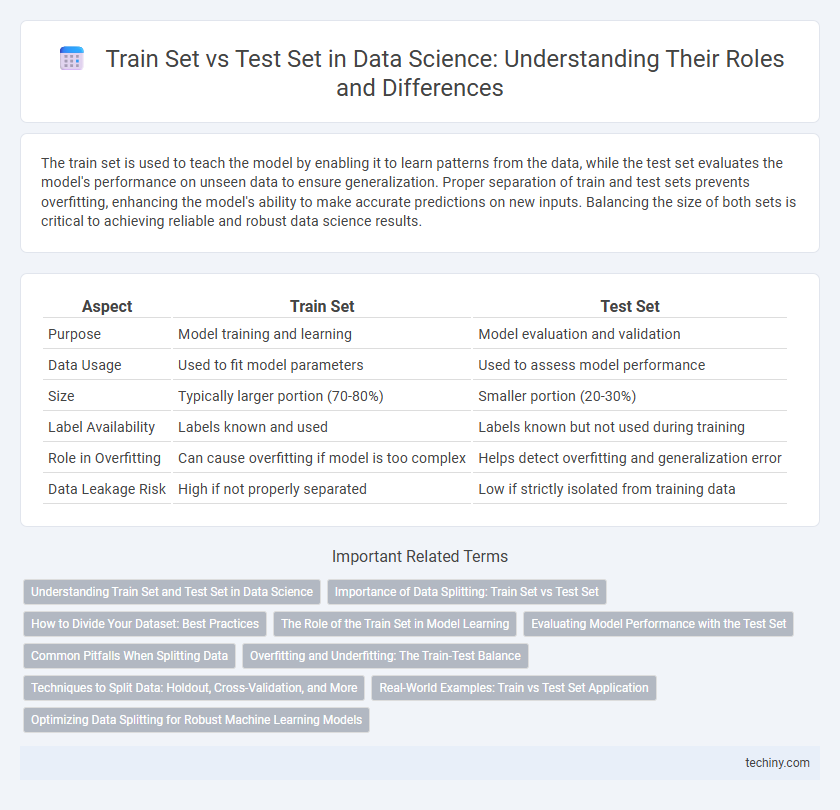

The train set is used to teach the model by enabling it to learn patterns from the data, while the test set evaluates the model's performance on unseen data to ensure generalization. Proper separation of train and test sets prevents overfitting, enhancing the model's ability to make accurate predictions on new inputs. Balancing the size of both sets is critical to achieving reliable and robust data science results.

Table of Comparison

| Aspect | Train Set | Test Set |

|---|---|---|

| Purpose | Model training and learning | Model evaluation and validation |

| Data Usage | Used to fit model parameters | Used to assess model performance |

| Size | Typically larger portion (70-80%) | Smaller portion (20-30%) |

| Label Availability | Labels known and used | Labels known but not used during training |

| Role in Overfitting | Can cause overfitting if model is too complex | Helps detect overfitting and generalization error |

| Data Leakage Risk | High if not properly separated | Low if strictly isolated from training data |

Understanding Train Set and Test Set in Data Science

The train set in data science consists of labeled data used to build and optimize machine learning models by enabling algorithms to learn patterns and relationships. The test set serves as unseen data to evaluate the model's generalization capabilities and performance metrics such as accuracy, precision, and recall. Proper separation of train and test sets prevents overfitting and ensures reliable validation of predictive models.

Importance of Data Splitting: Train Set vs Test Set

Effective data splitting into train and test sets is crucial for developing reliable machine learning models, as it ensures unbiased evaluation and generalization performance. The train set is used to fit the model, learning patterns and relationships within the data, while the test set assesses the model's performance on unseen data, preventing overfitting. Proper splitting techniques, such as stratified sampling, maintain class distribution and enhance model robustness across real-world applications.

How to Divide Your Dataset: Best Practices

Dividing your dataset effectively involves allocating roughly 70-80% of the data to the train set and 20-30% to the test set to ensure robust model training and evaluation. Employ stratified sampling when dealing with imbalanced classes to maintain representative class distributions across both sets. Cross-validation techniques, such as k-fold cross-validation, further enhance model reliability by repeatedly partitioning the train set for validation without contaminating the test set.

The Role of the Train Set in Model Learning

The train set plays a critical role in model learning by providing the algorithm with labeled data to identify patterns and relationships within the dataset. This enables the model to adjust its internal parameters, minimizing error through techniques such as gradient descent and loss function optimization. Effective training on a representative train set ensures the model can generalize well when exposed to unseen data in the test set.

Evaluating Model Performance with the Test Set

Evaluating model performance with the test set provides an unbiased estimate of how the model generalizes to new, unseen data by measuring metrics such as accuracy, precision, recall, and F1 score. The test set acts as a final checkpoint, ensuring that the model does not overfit the training data and confirming its predictive power in real-world scenarios. Proper evaluation with the test set is critical for validating model robustness and guiding further model refinement or deployment decisions.

Common Pitfalls When Splitting Data

Common pitfalls when splitting data into train and test sets include data leakage, where information from the test set inadvertently influences the training process, leading to overly optimistic performance metrics. Another frequent issue is an imbalanced split that fails to maintain the underlying distribution of classes or features, which can cause biased model evaluation. Ignoring temporal or grouped dependencies during splitting can also result in unrealistic generalization performance due to correlated samples appearing in both sets.

Overfitting and Underfitting: The Train-Test Balance

Overfitting occurs when a model learns noise and details from the train set, resulting in high accuracy on training data but poor performance on the test set. Underfitting happens when the model is too simple to capture underlying patterns in the train set, leading to low accuracy on both train and test data. Balancing the train-test split ensures the model generalizes well, avoiding overfitting and underfitting by providing representative and sufficient data for training and validation.

Techniques to Split Data: Holdout, Cross-Validation, and More

Techniques to split data into train and test sets significantly impact model performance and generalization in data science. The Holdout method partitions the dataset into distinct training and testing portions, providing a straightforward evaluation but with variability risks in small datasets. Cross-validation, such as k-fold, enhances robustness by iteratively training and testing on different subsets, reducing bias and variance and ensuring the model's reliability on unseen data.

Real-World Examples: Train vs Test Set Application

Real-world applications of train and test sets in data science reveal critical differences in model evaluation and performance. In fraud detection systems, the train set comprises historical transaction data used to develop the model, while the test set includes recent, unseen transactions to assess accuracy and detect emerging fraud patterns. Similarly, in medical diagnosis, models trained on patient records from one hospital are tested on records from another to ensure generalization across diverse populations.

Optimizing Data Splitting for Robust Machine Learning Models

Effective data splitting separates the dataset into a train set for model learning and a test set for unbiased evaluation, ensuring generalization. Optimizing the ratio, commonly 70-80% train and 20-30% test, balances sufficient training data with reliable performance assessment. Stratified sampling maintains class distribution, improving robustness in imbalanced datasets and preventing overfitting.

train set vs test set Infographic

techiny.com

techiny.com