Recall and sensitivity both measure the ability of a model to correctly identify positive cases, often used interchangeably in data science. Recall quantifies the proportion of true positives detected out of all actual positives, highlighting the model's effectiveness in capturing relevant instances. High sensitivity ensures fewer false negatives, making it critical in domains like medical diagnostics where missing a positive case can have severe consequences.

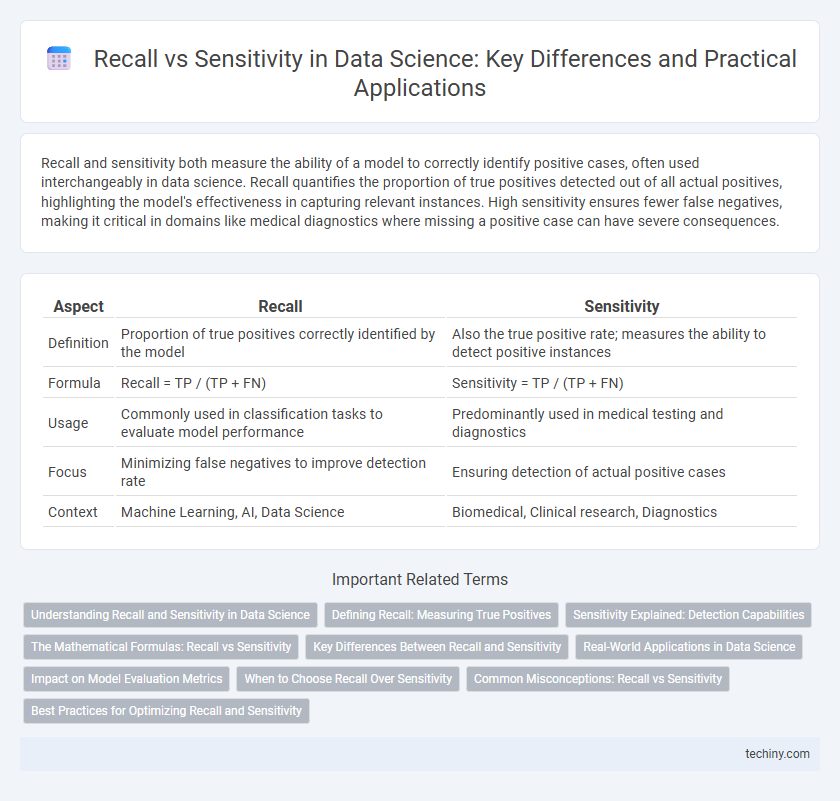

Table of Comparison

| Aspect | Recall | Sensitivity |

|---|---|---|

| Definition | Proportion of true positives correctly identified by the model | Also the true positive rate; measures the ability to detect positive instances |

| Formula | Recall = TP / (TP + FN) | Sensitivity = TP / (TP + FN) |

| Usage | Commonly used in classification tasks to evaluate model performance | Predominantly used in medical testing and diagnostics |

| Focus | Minimizing false negatives to improve detection rate | Ensuring detection of actual positive cases |

| Context | Machine Learning, AI, Data Science | Biomedical, Clinical research, Diagnostics |

Understanding Recall and Sensitivity in Data Science

Recall and sensitivity both measure a model's ability to identify true positives accurately in data science classification tasks. Recall quantifies the proportion of actual positive instances correctly detected, while sensitivity is often used interchangeably but specifically emphasizes true positive rate in medical and diagnostic analytics. Optimizing recall or sensitivity is crucial when false negatives have significant consequences, ensuring high detection rates of critical events or conditions.

Defining Recall: Measuring True Positives

Recall, also known as sensitivity, measures the proportion of true positives correctly identified by a classification model out of all actual positive cases. It quantifies the model's ability to detect relevant instances, focusing on minimizing false negatives. High recall is crucial in applications like medical diagnosis and fraud detection, where missing positive cases can have severe consequences.

Sensitivity Explained: Detection Capabilities

Sensitivity, also known as recall, measures a data science model's ability to correctly identify true positive cases within a dataset, highlighting the detection capabilities for relevant instances. High sensitivity is crucial in scenarios such as medical diagnosis or fraud detection, where missing positive cases can have significant consequences. By maximizing sensitivity, models ensure that most actual positive events are captured, improving overall reliability in critical detection tasks.

The Mathematical Formulas: Recall vs Sensitivity

Recall and sensitivity are mathematically equivalent metrics used to evaluate classification performance, both calculated as True Positives divided by the sum of True Positives and False Negatives (TP / (TP + FN)). These formulas quantify the ability of a model to correctly identify positive instances from the actual positive cases in a dataset. Understanding this equivalence helps data scientists interpret model performance consistently across different contexts.

Key Differences Between Recall and Sensitivity

Recall and sensitivity are often used interchangeably in data science, yet recall specifically measures the proportion of true positive cases correctly identified by a model, focusing on the completeness of positive predictions. Sensitivity emphasizes the model's ability to correctly detect actual positives out of all true positive instances, highlighting the model's robustness in identifying relevant cases. The key difference lies in the application context: recall is commonly used in information retrieval, whereas sensitivity is primarily referenced in medical diagnosis and classification tasks.

Real-World Applications in Data Science

Recall, also known as sensitivity, measures the proportion of true positives correctly identified by a model, crucial in data science applications such as medical diagnosis and fraud detection where missing positive cases can have severe consequences. High recall ensures that critical instances, like disease presence or fraudulent transactions, are detected, reducing false negatives and improving decision-making accuracy. Data scientists often optimize models for recall when the cost of overlooking positive instances outweighs false positives, enhancing reliability in real-world scenarios.

Impact on Model Evaluation Metrics

Recall, also known as sensitivity, measures the proportion of actual positives correctly identified by a model, directly affecting the evaluation of classification tasks in data science. High recall is crucial in applications such as medical diagnosis and fraud detection, where missing positive cases can lead to significant consequences. Balancing recall with precision impacts metrics like F1-score and ROC-AUC, providing a comprehensive view of model performance.

When to Choose Recall Over Sensitivity

Recall and sensitivity are often used interchangeably in data science, both measuring the true positive rate of a model. Choose recall over sensitivity when the priority is to minimize false negatives, such as in medical diagnostics or fraud detection, where missing a positive case has severe consequences. High recall ensures that most relevant instances are captured, even if it means accepting a higher false positive rate.

Common Misconceptions: Recall vs Sensitivity

Recall and sensitivity are often used interchangeably in data science, but subtle differences exist depending on context; recall typically refers to the proportion of true positives correctly identified in classification tasks, while sensitivity often emphasizes the same metric in medical diagnostics. A common misconception is that these metrics apply universally without adjustment, yet recall may prioritize retrieval in information systems, whereas sensitivity highlights detection accuracy of positive cases in clinical settings. Understanding the domain-specific application ensures accurate interpretation and effective model evaluation.

Best Practices for Optimizing Recall and Sensitivity

Optimizing recall and sensitivity in data science involves balancing model threshold settings and addressing class imbalances through techniques like SMOTE or cost-sensitive learning. Implementing stratified cross-validation ensures reliable evaluation of recall across diverse subsets, while feature selection and hyperparameter tuning enhance the model's ability to detect true positives. Continuous monitoring of recall alongside precision and F1-score facilitates effective identification and mitigation of false negatives in real-world applications.

recall vs sensitivity Infographic

techiny.com

techiny.com