Lasso regression performs feature selection by shrinking some coefficients to exactly zero, making it ideal for models requiring sparsity and interpretability. Ridge regression, on the other hand, shrinks coefficients towards zero but never exactly zero, which helps in handling multicollinearity and stabilizing estimates without eliminating features. Both methods add regularization to linear models, with lasso using L1 penalty and ridge using L2 penalty, balancing bias and variance to improve prediction accuracy.

Table of Comparison

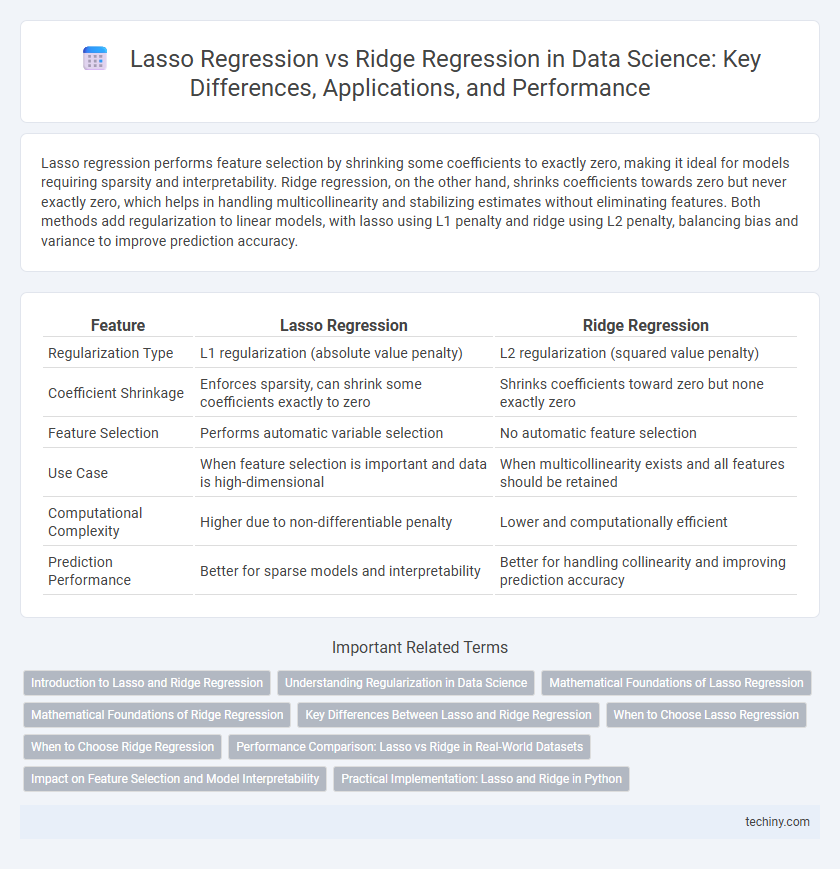

| Feature | Lasso Regression | Ridge Regression |
|---|---|---|
| Regularization Type | L1 regularization (absolute value penalty) | L2 regularization (squared value penalty) |
| Coefficient Shrinkage | Enforces sparsity, can shrink some coefficients exactly to zero | Shrinks coefficients toward zero but none exactly zero |
| Feature Selection | Performs automatic variable selection | No automatic feature selection |
| Use Case | When feature selection is important and data is high-dimensional | When multicollinearity exists and all features should be retained |
| Computational Complexity | Higher due to non-differentiable penalty | Lower and computationally efficient |
| Prediction Performance | Better for sparse models and interpretability | Better for handling collinearity and improving prediction accuracy |

Introduction to Lasso and Ridge Regression

Lasso regression applies L1 regularization, promoting sparsity by shrinking some coefficients exactly to zero, effectively performing feature selection. Ridge regression uses L2 regularization, which penalizes the sum of squared coefficients to reduce model complexity and multicollinearity without eliminating variables. Both techniques improve model generalization by controlling overfitting through different regularization mechanisms.

Understanding Regularization in Data Science

Lasso regression applies L1 regularization, which promotes sparsity by shrinking some coefficients exactly to zero, making it effective for feature selection in high-dimensional datasets. Ridge regression uses L2 regularization, distributing penalty across coefficients to reduce their magnitude without eliminating variables, thus handling multicollinearity and improving model stability. Both methods prevent overfitting by adding a complexity penalty to the loss function, essential for building robust predictive models in data science.

Mathematical Foundations of Lasso Regression

Lasso regression minimizes the residual sum of squares subject to the sum of the absolute values of the coefficients being no greater than a constant, which amounts to L1 regularization. This constraint leads to sparse solutions by shrinking some coefficients exactly to zero, facilitating variable selection in high-dimensional data. In contrast to ridge regression, which uses L2 regularization and shrinks coefficients continuously without setting them to zero, lasso's formulation delivers regularization and feature selection simultaneously.
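Written out, the constrained problem described above and its equivalent penalized form look roughly as follows. The symbols are chosen here for illustration: $\beta$ is the coefficient vector, $t$ the bound on the L1 norm, and $\lambda$ the penalty weight. The last line covers only the special case of an orthonormal design ($X^\top X = I$), an assumption added to make the thresholding behavior visible.

```latex
\begin{aligned}
\hat{\beta}^{\text{lasso}} &= \arg\min_{\beta} \; \lVert y - X\beta \rVert_2^2
  \quad \text{subject to} \quad \sum_{j=1}^{p} |\beta_j| \le t, \\
\hat{\beta}^{\text{lasso}} &= \arg\min_{\beta} \; \lVert y - X\beta \rVert_2^2
  + \lambda \sum_{j=1}^{p} |\beta_j|, \\
\hat{\beta}_j^{\text{lasso}} &= \operatorname{sign}\!\big(\hat{\beta}_j^{\text{OLS}}\big)\,
  \max\!\big(|\hat{\beta}_j^{\text{OLS}}| - \tfrac{\lambda}{2},\, 0\big)
  \quad \text{when } X^\top X = I.
\end{aligned}
```

In that orthonormal case, any coefficient whose least-squares estimate is smaller in magnitude than $\lambda/2$ is set exactly to zero, which is where the sparsity comes from.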

Mathematical Foundations of Ridge Regression

Ridge regression minimizes the sum of squared residuals with an added L2 penalty on the coefficients, expressed mathematically as minimizing $\lVert y - X\beta \rVert_2^2 + \lambda \lVert \beta \rVert_2^2$, where $\lambda$ controls the regularization strength. This penalization shrinks coefficients toward zero but never sets them exactly to zero, helping mitigate multicollinearity and overfitting in high-dimensional datasets. Unlike lasso regression, which uses an L1 penalty promoting sparsity, ridge regression's continuous shrinkage improves model stability while retaining all predictors.
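Because the ridge objective is smooth, it has a closed-form minimizer. The sketch below solves the normal equations with NumPy, assuming no intercept term and synthetic data. The function name `ridge_closed_form`, the argument `lam` (the regularization strength), and the data are all illustrative choices, not part of any library.

```python
import numpy as np

def ridge_closed_form(X, y, lam):
    """Minimize ||y - X b||^2 + lam * ||b||^2 via (X^T X + lam I) b = X^T y."""
    n_features = X.shape[1]
    A = X.T @ X + lam * np.eye(n_features)
    # Solving the linear system is more stable than forming an explicit inverse.
    return np.linalg.solve(A, X.T @ y)

# Synthetic data (assumed for illustration only).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
true_b = np.array([2.0, -1.0, 0.5])
y = X @ true_b + rng.normal(scale=0.1, size=50)

b_ols = ridge_closed_form(X, y, lam=0.0)     # lam = 0 reduces to least squares
b_ridge = ridge_closed_form(X, y, lam=10.0)  # lam > 0 shrinks the coefficients

print("OLS coefficients:  ", b_ols)
print("Ridge coefficients:", b_ridge)
```

The ridge coefficients come out smaller in norm than the least-squares ones, but none of them is exactly zero.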

Key Differences Between Lasso and Ridge Regression

Lasso regression performs feature selection by imposing an L1 penalty that can shrink some coefficients to zero, effectively reducing model complexity. Ridge regression applies an L2 penalty that shrinks coefficients towards zero but never exactly zero, maintaining all features in the model. Lasso is preferred for sparsity and interpretability, while Ridge is better suited for multicollinearity and models where all predictors contribute.
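The difference is easy to see on a small synthetic problem. The following sketch assumes scikit-learn is installed and uses made-up data in which only the first two of ten features influence the target. The `alpha` values are arbitrary illustrative choices, not recommendations.

```python
import numpy as np
from sklearn.linear_model import Lasso, Ridge

# Synthetic data: 10 features, only the first two matter (an assumption).
rng = np.random.default_rng(42)
n_samples, n_features = 100, 10
X = rng.normal(size=(n_samples, n_features))
true_coef = np.zeros(n_features)
true_coef[:2] = [3.0, -2.0]
y = X @ true_coef + rng.normal(scale=0.5, size=n_samples)

lasso = Lasso(alpha=0.2).fit(X, y)
ridge = Ridge(alpha=1.0).fit(X, y)

# Count coefficients that are exactly zero under each penalty.
n_zero_lasso = int(np.sum(lasso.coef_ == 0))
n_zero_ridge = int(np.sum(ridge.coef_ == 0))

print("Lasso coefficients:", np.round(lasso.coef_, 3))
print("Ridge coefficients:", np.round(ridge.coef_, 3))
print("Exact zeros (lasso, ridge):", n_zero_lasso, n_zero_ridge)
```

Note that `alpha` is scaled differently in the two classes (scikit-learn's `Lasso` divides the squared error by twice the number of samples), so the same numeric value does not mean the same amount of shrinkage.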

When to Choose Lasso Regression

Lasso regression is ideal when feature selection is crucial, because it can shrink some coefficients to zero, removing irrelevant variables and enhancing model interpretability. It is particularly useful in high-dimensional datasets where many predictors are redundant or noisy. By promoting sparsity, lasso reduces overfitting and can improve prediction accuracy when only a few predictors truly matter. Among strongly correlated features, however, it tends to keep one and drop the others somewhat arbitrarily, so the selected set should be interpreted with care.

When to Choose Ridge Regression

Choose ridge regression when dealing with multicollinearity in high-dimensional datasets due to its L2 regularization, which shrinks coefficients without eliminating predictors. It performs well when many features have small to moderate effects, helping to stabilize estimates and reduce model complexity without losing information. Ridge regression is preferred over lasso when the goal is to improve prediction accuracy rather than feature selection.
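A minimal sketch of the multicollinearity case, assuming scikit-learn and two synthetic features that are near-duplicates of each other. The data and `alpha=1.0` are illustrative assumptions. Ordinary least squares is included only as a baseline for comparison.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, Ridge

# Two almost identical features (an assumed, deliberately extreme setup).
rng = np.random.default_rng(0)
n_samples = 100
x1 = rng.normal(size=n_samples)
x2 = x1 + rng.normal(scale=0.01, size=n_samples)  # near-copy of x1
X = np.column_stack([x1, x2])
y = 3.0 * x1 + rng.normal(scale=0.5, size=n_samples)

ols = LinearRegression().fit(X, y)
ridge = Ridge(alpha=1.0).fit(X, y)

# OLS can split the effect between x1 and x2 in an unstable way;
# ridge spreads it across both and keeps the coefficients small.
print("OLS coefficients:  ", np.round(ols.coef_, 3))
print("Ridge coefficients:", np.round(ridge.coef_, 3))
print("Sum of ridge coefficients:", round(float(ridge.coef_.sum()), 3))
```

Both ridge coefficients stay nonzero and their sum stays close to the combined effect of the duplicated signal, which is the stabilizing behavior described above.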

Performance Comparison: Lasso vs Ridge in Real-World Datasets

Lasso regression excels in feature selection by driving some coefficients to zero, enhancing model interpretability in sparse datasets, whereas Ridge regression shrinks coefficients toward zero without eliminating variables, maintaining all features in the model. In real-world datasets with multicollinearity, Ridge often outperforms Lasso by stabilizing coefficient estimates and reducing variance, leading to improved prediction accuracy. Performance comparison through cross-validation typically shows Lasso's advantage in high-dimensional, sparse settings, while Ridge yields better results when many correlated features contribute to the outcome.
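A comparison of this kind can be set up with k-fold cross-validation. The sketch below assumes scikit-learn, uses a synthetic dataset from `make_regression` as a stand-in for real data, and fixes `alpha=1.0` for both models purely for illustration. Which model scores higher depends on the data and on how `alpha` is tuned.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, Ridge
from sklearn.model_selection import cross_val_score

# Stand-in dataset: 50 features, 5 of them informative (an assumption).
X, y = make_regression(
    n_samples=200, n_features=50, n_informative=5, noise=10.0, random_state=0
)

models = {"lasso": Lasso(alpha=1.0), "ridge": Ridge(alpha=1.0)}
cv_r2 = {}
for name, model in models.items():
    # 5-fold cross-validated R^2 for each model.
    scores = cross_val_score(model, X, y, cv=5, scoring="r2")
    cv_r2[name] = scores
    print(f"{name}: mean R^2 = {scores.mean():.3f} (std {scores.std():.3f})")
```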

Impact on Feature Selection and Model Interpretability

Lasso regression performs feature selection by shrinking some coefficients to zero, eliminating less important variables and producing simpler, sparser models that are easier to interpret. Ridge regression, on the other hand, shrinks coefficients toward zero without setting any exactly to zero, retaining all features and often improving prediction accuracy at the cost of less straightforward interpretation. The choice between lasso and ridge regression depends on whether the priority lies in identifying key predictors or in maintaining all features for a more stable but complex model.
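How many features lasso keeps depends on the penalty strength. The following sketch, assuming scikit-learn and synthetic data with four relevant features out of eight, counts the nonzero coefficients over a hand-picked list of `alpha` values. The grid is illustrative only.

```python
import numpy as np
from sklearn.linear_model import Lasso

# Synthetic data: the last four features have no effect (an assumption).
rng = np.random.default_rng(1)
X = rng.normal(size=(100, 8))
true_coef = np.array([3.0, -2.0, 1.0, 0.5, 0.0, 0.0, 0.0, 0.0])
y = X @ true_coef + rng.normal(scale=0.5, size=100)

alphas = [0.001, 0.01, 0.1, 1.0, 100.0]
nonzero_counts = []
for alpha in alphas:
    coef = Lasso(alpha=alpha, max_iter=10_000).fit(X, y).coef_
    nonzero_counts.append(int(np.count_nonzero(coef)))

for alpha, count in zip(alphas, nonzero_counts):
    print(f"alpha={alpha:>7}: {count} nonzero coefficients")
```

With a very small `alpha` the fit is close to least squares and most features survive, while a sufficiently large `alpha` removes every feature and leaves only the intercept.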

Practical Implementation: Lasso and Ridge in Python

Lasso regression in Python can be implemented using the `Lasso` class from `scikit-learn`, which applies L1 regularization to enforce sparsity, effectively performing feature selection by shrinking some coefficients to zero. Ridge regression is available via the `Ridge` class in the same library and uses L2 regularization to penalize large coefficients, reducing model complexity and multicollinearity without eliminating features entirely. Both methods require tuning the regularization parameter `alpha` using techniques like cross-validation (`GridSearchCV` or `RandomizedSearchCV`) to optimize model performance and prevent overfitting.
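A minimal sketch of that workflow is shown below, assuming scikit-learn and a synthetic dataset from `make_regression`. The standardization step is an addition not mentioned above: both penalties depend on feature scale, so scaling first is a common choice. The `alpha` grid and the fold count are arbitrary illustrative values.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, Ridge
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Stand-in dataset (an assumption, replace with real data).
X, y = make_regression(
    n_samples=200, n_features=20, n_informative=5, noise=10.0, random_state=0
)

alpha_grid = np.logspace(-3, 2, 6)  # 0.001, 0.01, ..., 100
best_alpha = {}
for name, estimator in [("lasso", Lasso(max_iter=10_000)), ("ridge", Ridge())]:
    # make_pipeline names each step after its lowercased class name.
    pipe = make_pipeline(StandardScaler(), estimator)
    step_name = pipe.steps[-1][0]
    search = GridSearchCV(
        pipe,
        param_grid={f"{step_name}__alpha": alpha_grid},
        cv=5,
        scoring="neg_mean_squared_error",
    )
    search.fit(X, y)
    best_alpha[name] = search.best_params_[f"{step_name}__alpha"]
    print(f"{name}: best alpha = {best_alpha[name]}, CV MSE = {-search.best_score_:.2f}")
```

scikit-learn also provides `LassoCV` and `RidgeCV`, which run the search over `alpha` internally and can be more convenient when `alpha` is the only hyperparameter being tuned.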

lasso regression vs ridge regression Infographic

techiny.com