Cronbach's Alpha measures the internal consistency of a set of items, such as the questions that make up a survey scale, by assessing how closely related the items are as a group. This makes it central to judging the reliability of survey data. Cohen's Kappa evaluates inter-rater reliability by quantifying how much two raters agree beyond what chance alone would produce, which makes it important for categorical classification and labeling tasks. Understanding how the two differ supports data validation and quality assurance in data science projects.

Table of Comparison

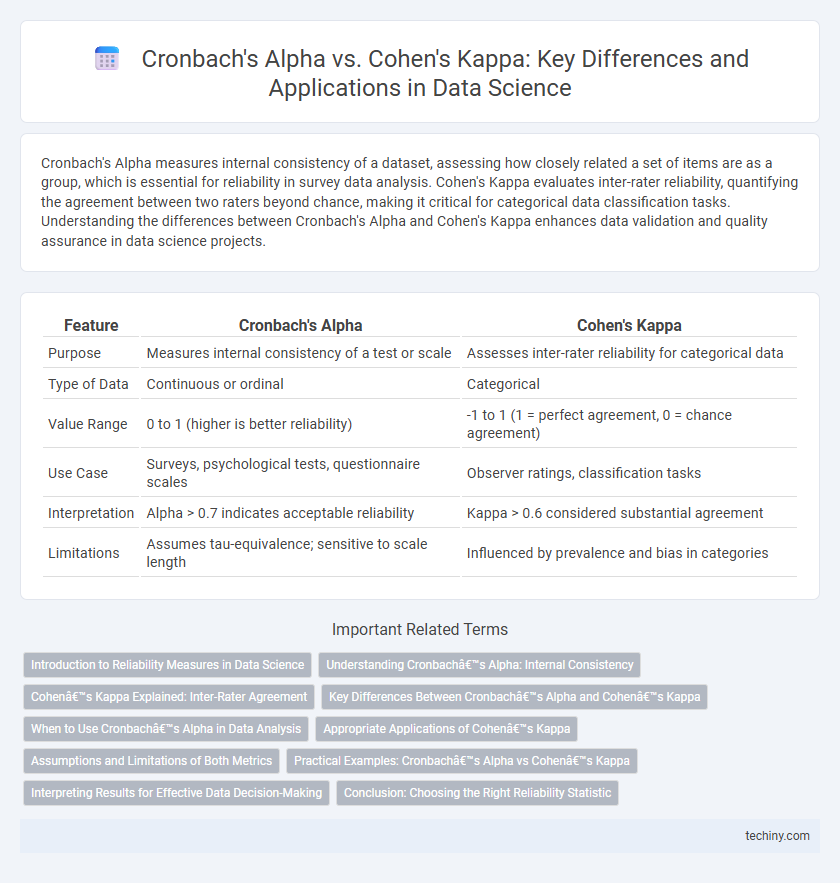

| Feature | Cronbach's Alpha | Cohen's Kappa |
|---|---|---|
| Purpose | Measures internal consistency of a test or scale | Assesses inter-rater reliability for categorical data |
| Type of Data | Continuous or ordinal item scores | Categorical ratings from two raters |
| Value Range | Usually 0 to 1 (higher = more reliable); can be negative if items covary negatively | -1 to 1 (1 = perfect agreement, 0 = chance-level agreement) |
| Use Case | Surveys, psychological tests, questionnaire scales | Observer ratings, classification tasks |
| Interpretation | Alpha > 0.7 commonly treated as acceptable reliability | Kappa > 0.6 commonly considered substantial agreement |
| Limitations | Assumes tau-equivalence; sensitive to the number of items | Influenced by category prevalence and rater bias |

Introduction to Reliability Measures in Data Science

Cronbach's Alpha quantifies internal consistency by measuring how closely related a set of items are as a group, and is often used to assess the reliability of survey instruments. Cohen's Kappa evaluates inter-rater reliability by correcting the observed agreement between two raters (or two classification methods) for the agreement expected by chance. Both metrics help data scientists check that data collection and annotation processes are reliable and consistent.

Understanding Cronbach’s Alpha: Internal Consistency

Cronbach's Alpha measures the internal consistency of a psychometric instrument by assessing how closely related a set of items are as a group. Its value usually falls between 0 and 1, although it can be negative when items covary negatively (often a sign that reverse-worded items were not recoded). It is widely used in data science to evaluate survey and test reliability, with values above 0.7 generally treated as acceptable internal consistency. Unlike Cohen's Kappa, which assesses inter-rater reliability for categorical data, Cronbach's Alpha focuses on the coherence of continuous or ordinal items within a single test.
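The standard formula is $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i \sigma_i^2}{\sigma_X^2}\right)$, where $k$ is the number of items, $\sigma_i^2$ is the variance of item $i$, and $\sigma_X^2$ is the variance of respondents' total scores. A minimal plain-Python sketch of that formula follows, assuming a complete respondents-by-items matrix with no missing answers; the function name `cronbach_alpha` and the survey scores are hypothetical, for illustration only.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items score matrix.

    scores[r][i] is respondent r's answer to item i. Assumes no missing
    values; sample variances are used throughout (the n - 1 factor cancels).
    """
    if not scores:
        raise ValueError("need at least one respondent")
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    if any(len(row) != k for row in scores):
        raise ValueError("every respondent must answer every item")

    # Variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*scores)]
    # Variance of each respondent's summed scale score.
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")

    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical 5-respondent, 3-item Likert data (1-5 scale).
survey = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 3],
]
print(round(cronbach_alpha(survey), 3))  # 0.913
```

Here the item variances sum to 3.6 and the total-score variance is 9.2, so alpha is 1.5 × (1 − 3.6 / 9.2) ≈ 0.913: respondents who score high on one item tend to score high on the others.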

Cohen’s Kappa Explained: Inter-Rater Agreement

Cohen's Kappa is a statistical measure of inter-rater agreement for categorical items that accounts for agreement occurring by chance. Unlike Cronbach's Alpha, which assesses internal consistency among scale items, Cohen's Kappa quantifies the agreement between exactly two raters or observers. It ranges from -1 to 1: 1 indicates perfect agreement, 0 indicates agreement no better than chance, and negative values indicate less agreement than chance would produce. This makes it a standard tool in data science for validating labeling consistency in classification tasks.
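Kappa compares the observed agreement $p_o$ with the agreement $p_e$ expected if each rater assigned categories independently at their own marginal rates: $\kappa = \frac{p_o - p_e}{1 - p_e}$. The sketch below computes the unweighted statistic in plain Python for two equal-length label lists; the function name `cohens_kappa` and the spam/ham labels are hypothetical. For production use, scikit-learn's `sklearn.metrics.cohen_kappa_score` computes the same unweighted statistic.

```python
from collections import Counter
from collections.abc import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa for two raters labeling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same, non-empty set of items")
    n = len(rater_a)

    # Observed agreement: share of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: for each category, P(A picks it) * P(B picks it).
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[c] * counts_b[c] for c in counts_a) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")

    return (p_o - p_e) / (1 - p_e)


# Hypothetical labels from two annotators on ten emails.
a = ["spam", "spam", "ham", "ham", "spam", "ham", "ham", "spam", "ham", "ham"]
b = ["spam", "ham", "ham", "ham", "spam", "ham", "spam", "spam", "ham", "ham"]
print(round(cohens_kappa(a, b), 3))  # 0.583
```

The annotators agree on 8 of 10 emails ($p_o = 0.8$), but with 4 spam and 6 ham labels each, chance alone would give $p_e = 0.52$, so kappa drops to about 0.583.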

Key Differences Between Cronbach’s Alpha and Cohen’s Kappa

Cronbach's Alpha measures internal consistency or reliability of a set of scale or test items, indicating how closely related the items are as a group. Cohen's Kappa assesses inter-rater reliability by quantifying agreement between two raters or annotators on categorical data, adjusting for chance agreement. While Cronbach's Alpha applies to continuous or ordinal data within a test, Cohen's Kappa is primarily used for categorical labels in classification tasks.

When to Use Cronbach’s Alpha in Data Analysis

Cronbach's Alpha is appropriate when assessing the internal consistency of a scale composed of multiple Likert-type items, indicating how closely related the items are as a group. It fits continuous or ordinal data from psychometric tests, surveys, or questionnaires in which several item responses are combined into a single score. Unlike Cohen's Kappa, which evaluates inter-rater agreement for categorical data, Cronbach's Alpha focuses on how consistently respondents answer across the items of one scale.

Appropriate Applications of Cohen’s Kappa

Cohen's Kappa is well suited to assessing inter-rater reliability when two raters classify the same items into mutually exclusive categories. Unlike Cronbach's Alpha, which measures the internal consistency of multi-item scales, Cohen's Kappa corrects for agreement occurring by chance, which makes it a natural fit for nominal labels in classification tasks; for ordinal categories, a weighted version of kappa is commonly used so that near-misses count as partial agreement. It is especially appropriate in fields such as medical diagnosis, content analysis, and survey research, where consistent categorical judgments are critical.

Assumptions and Limitations of Both Metrics

Cronbach's Alpha assumes tau-equivalence, meaning every item measures the same underlying construct with equal true-score loadings (error variances may differ). When that assumption fails but errors are uncorrelated, alpha is a lower bound on reliability and tends to underestimate it. Alpha also rises with the number of items, so a long scale can reach a high alpha even if it is multidimensional; a high alpha alone does not show that a scale measures a single construct. Cohen's Kappa assumes that two raters classify the same items independently into the same fixed set of mutually exclusive categories. Its value depends on category prevalence and rater bias, so with highly imbalanced categories kappa can be low even when raw agreement is high. Both metrics require careful interpretation within their assumptions to avoid misleading conclusions in data science applications.
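The prevalence effect and the scale-length effect can both be seen numerically. The sketch below is plain Python with hypothetical function names and counts. It first computes kappa from a 2×2 agreement table for two raters who agree on 90% of items in both cases, once with balanced categories and once with a rare "no" category. It then uses standardized alpha (alpha computed on standardized items), $\alpha_{std} = \frac{k\bar{r}}{1 + (k-1)\bar{r}}$ with $\bar{r}$ the mean inter-item correlation, to show alpha climbing as items are added while $\bar{r}$ stays fixed.

```python
def kappa_from_2x2(both_yes: int, a_yes_b_no: int, a_no_b_yes: int, both_no: int) -> float:
    """Cohen's kappa from a 2x2 agreement table for two raters."""
    n = both_yes + a_yes_b_no + a_no_b_yes + both_no
    p_o = (both_yes + both_no) / n
    a_yes = both_yes + a_yes_b_no  # rater A's "yes" total
    b_yes = both_yes + a_no_b_yes  # rater B's "yes" total
    # Chance agreement from each rater's marginal yes/no rates.
    p_e = (a_yes * b_yes + (n - a_yes) * (n - b_yes)) / n**2
    return (p_o - p_e) / (1 - p_e)


def standardized_alpha(k: int, mean_r: float) -> float:
    """Standardized alpha for k items with mean inter-item correlation mean_r."""
    return k * mean_r / (1 + (k - 1) * mean_r)


# Prevalence effect: 90% raw agreement in both hypothetical tables.
balanced = kappa_from_2x2(45, 5, 5, 45)   # each rater says "yes" on half the items
imbalanced = kappa_from_2x2(85, 5, 5, 5)  # each rater says "yes" on 90% of the items
print(round(balanced, 3), round(imbalanced, 3))  # 0.8 0.444

# Scale-length effect: same mean inter-item correlation (0.3), more items.
# Prints: 5 0.682, then 10 0.811, then 20 0.896
for k in (5, 10, 20):
    print(k, round(standardized_alpha(k, 0.3), 3))
```

In the imbalanced table, chance agreement is already 0.82, so the same 90% agreement leaves little room above chance and kappa falls to about 0.44.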

Practical Examples: Cronbach’s Alpha vs Cohen’s Kappa

Cronbach's Alpha measures internal consistency among the items in a scale and is commonly applied in psychometric assessments to check that survey questions yield consistent results. Cohen's Kappa evaluates inter-rater agreement for categorical data and is often used in classification tasks or medical diagnosis to quantify consistency between two evaluators (extensions such as Fleiss' kappa handle more than two). For example, Cronbach's Alpha can assess how coherent the items of a customer satisfaction survey are, while Cohen's Kappa can verify diagnostic agreement between two radiologists interpreting the same medical images.
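The radiologist case can be worked by hand. Assume, purely for illustration, that two radiologists read 100 images: both call 40 positive and 45 negative, only radiologist 1 calls 10 positive, and only radiologist 2 calls 5 positive. Radiologist 1 therefore calls 50 images positive and 50 negative, while radiologist 2 calls 45 positive and 55 negative:

$$
p_o = \frac{40 + 45}{100} = 0.85, \qquad
p_e = \frac{50}{100}\cdot\frac{45}{100} + \frac{50}{100}\cdot\frac{55}{100} = 0.225 + 0.275 = 0.50
$$

$$
\kappa = \frac{p_o - p_e}{1 - p_e} = \frac{0.85 - 0.50}{1 - 0.50} = 0.70
$$

In this hypothetical case, 85% raw agreement corresponds to a kappa of 0.70 once chance agreement is removed.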

Interpreting Results for Effective Data Decision-Making

Cronbach's Alpha measures the internal consistency of survey scales; values above 0.7 are commonly treated as acceptable reliability for data-driven decisions. Cohen's Kappa assesses inter-rater agreement for categorical data; values above 0.6 are conventionally described as substantial agreement. These cutoffs are rules of thumb rather than strict standards, but applying them consistently helps evaluate data quality and supports more reliable insights in data science projects.
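The thresholds can be encoded as small helpers so they are applied the same way across a project. This sketch assumes the 0.7 rule of thumb for alpha (treating exactly 0.7 as acceptable, a common convention) and the widely used Landis and Koch (1977) benchmark bands for kappa, from which the "substantial" label for values above 0.6 comes. The function names are hypothetical.

```python
def alpha_is_acceptable(alpha: float, threshold: float = 0.7) -> bool:
    """Rule-of-thumb check: alpha at or above 0.7 is commonly called acceptable."""
    return alpha >= threshold


def kappa_band(kappa: float) -> str:
    """Landis and Koch (1977) benchmark label for a kappa value."""
    if kappa < 0:
        return "poor"
    # (inclusive upper bound, label), checked in ascending order.
    bands = [
        (0.20, "slight"),
        (0.40, "fair"),
        (0.60, "moderate"),
        (0.80, "substantial"),
    ]
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "almost perfect"


print(alpha_is_acceptable(0.913), kappa_band(0.583), kappa_band(0.70))
# True moderate substantial
```

Keeping the cutoffs in one place also makes it easy to tighten them (for example, requiring a higher alpha for high-stakes instruments) without hunting through analysis code.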

Conclusion: Choosing the Right Reliability Statistic

Choosing between Cronbach's Alpha and Cohen's Kappa depends on the data type and research objective; Cronbach's Alpha is ideal for measuring internal consistency of continuous or ordinal scales, while Cohen's Kappa excels in assessing inter-rater reliability for categorical data. Researchers must consider the scale structure and measurement level to ensure the selected statistic accurately reflects reliability. Proper application of these metrics enhances the validity and interpretability of data science models and research findings.

Cronbach’s Alpha vs Cohen’s Kappa Infographic

techiny.com
