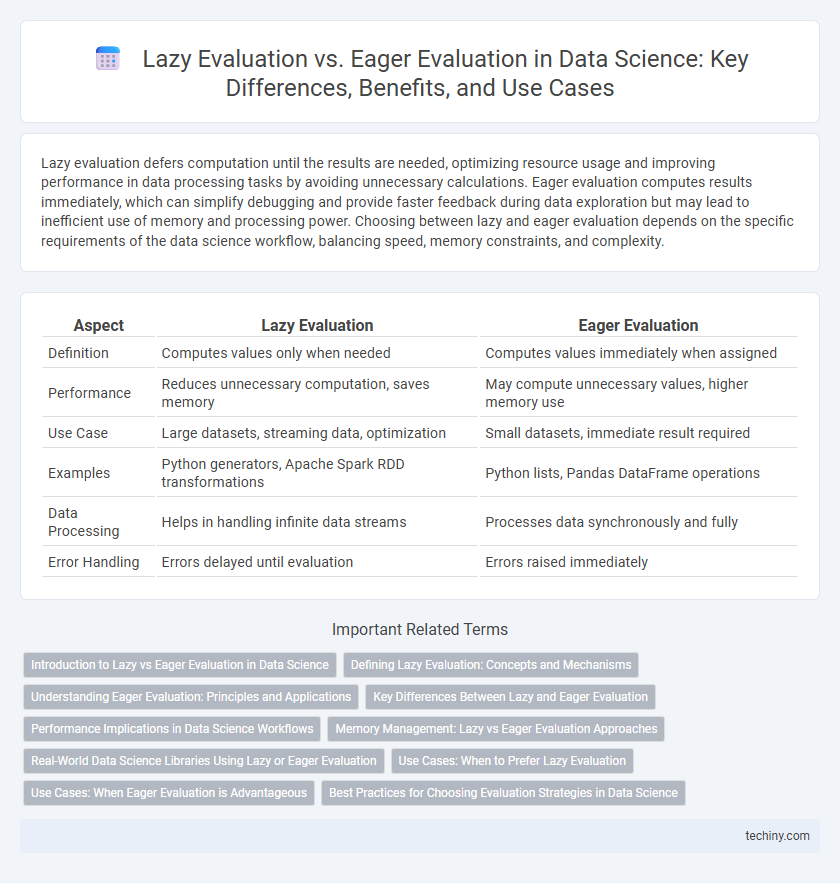

Lazy evaluation defers computation until the results are needed, optimizing resource usage and improving performance in data processing tasks by avoiding unnecessary calculations. Eager evaluation computes results immediately, which can simplify debugging and provide faster feedback during data exploration but may lead to inefficient use of memory and processing power. Choosing between lazy and eager evaluation depends on the specific requirements of the data science workflow, balancing speed, memory constraints, and complexity.

Table of Comparison

| Aspect | Lazy Evaluation | Eager Evaluation |

|---|---|---|

| Definition | Computes values only when needed | Computes values immediately when assigned |

| Performance | Reduces unnecessary computation, saves memory | May compute unnecessary values, higher memory use |

| Use Case | Large datasets, streaming data, optimization | Small datasets, immediate result required |

| Examples | Python generators, Apache Spark RDD transformations | Python lists, Pandas DataFrame operations |

| Data Processing | Helps in handling infinite data streams | Processes data synchronously and fully |

| Error Handling | Errors delayed until evaluation | Errors raised immediately |

Introduction to Lazy vs Eager Evaluation in Data Science

Lazy evaluation in data science defers computation until the results are needed, optimizing resource use and improving efficiency when handling large datasets or complex pipelines. Eager evaluation processes data immediately, providing instant outputs but potentially increasing memory consumption and computation time. Understanding the trade-offs between lazy and eager evaluation is crucial for optimizing performance in data analysis, machine learning workflows, and big data processing.

Defining Lazy Evaluation: Concepts and Mechanisms

Lazy evaluation defers the computation of expressions until their values are actually needed, optimizing performance by avoiding unnecessary calculations. This mechanism relies on thunks, which are placeholders for delayed computations, enabling efficient management of memory and processing resources. By leveraging lazy evaluation, data science workflows can handle large datasets and complex transformations without immediate resource consumption, improving scalability.

Understanding Eager Evaluation: Principles and Applications

Eager evaluation in data science executes expressions immediately, optimizing computational efficiency by producing results as soon as operations are called. This approach is crucial in environments requiring prompt data processing, such as real-time analytics and machine learning pipelines. Understanding eager evaluation principles enhances performance tuning by minimizing latency and resource overhead in data workflows.

Key Differences Between Lazy and Eager Evaluation

Lazy evaluation delays computation until the result is needed, optimizing performance by avoiding unnecessary calculations and conserving memory. Eager evaluation computes results immediately, ensuring faster access at the cost of higher upfront resource usage. Key differences include execution timing, resource management, and suitability for infinite data structures or large datasets.

Performance Implications in Data Science Workflows

Lazy evaluation defers computation until results are needed, reducing memory usage and improving performance in large-scale data processing by avoiding unnecessary calculations. Eager evaluation executes operations immediately, which can increase memory consumption and slow down workflows when handling extensive datasets. Optimizing between lazy and eager evaluation strategies enhances efficiency in data science pipelines by aligning computational load with resource availability and task requirements.

Memory Management: Lazy vs Eager Evaluation Approaches

Lazy evaluation defers computation until the result is needed, significantly reducing memory usage by avoiding the creation of intermediate data structures. Eager evaluation computes values immediately, which can lead to higher memory consumption due to storing all intermediate results upfront. Efficient memory management in data science often favors lazy evaluation for large datasets to optimize resource utilization and performance.

Real-World Data Science Libraries Using Lazy or Eager Evaluation

Real-world data science libraries such as TensorFlow and Dask leverage lazy evaluation to optimize computation by deferring execution until results are needed, enhancing performance and resource management for large datasets. In contrast, libraries like NumPy and Pandas employ eager evaluation, executing operations immediately to provide faster feedback and simpler debugging during interactive data analysis. Choosing between lazy and eager evaluation in data science workflows hinges on balancing computational efficiency with responsiveness based on dataset size and use case complexity.

Use Cases: When to Prefer Lazy Evaluation

Lazy evaluation is ideal for handling large datasets or infinite data streams, where computing every element upfront is resource-intensive or impractical. It excels in scenarios involving complex data pipelines, enabling deferred computation that conserves memory and improves responsiveness by processing only necessary elements on demand. Use cases include real-time data analysis, interactive data visualization, and iterative algorithms in machine learning workflows that benefit from on-the-fly computation.

Use Cases: When Eager Evaluation is Advantageous

Eager evaluation is advantageous in data science scenarios requiring immediate computation results, such as real-time analytics pipelines where low latency is critical. It benefits batch processing tasks involving large datasets by ensuring computations are completed upfront, optimizing memory usage and simplifying debugging. Use cases like feature engineering in machine learning workflows often prefer eager evaluation to validate data transformations instantly.

Best Practices for Choosing Evaluation Strategies in Data Science

Selecting the appropriate evaluation strategy in data science depends on the complexity and size of datasets, where lazy evaluation defers computations until necessary, optimizing memory usage and enhancing performance in large-scale data processing. Eager evaluation executes operations immediately, which simplifies debugging and provides faster feedback for smaller datasets or iterative development. Best practices recommend leveraging lazy evaluation for big data workflows with complex transformations, while adopting eager evaluation for exploratory analysis and rapid prototyping to balance efficiency and responsiveness.

Lazy evaluation vs eager evaluation Infographic

techiny.com

techiny.com