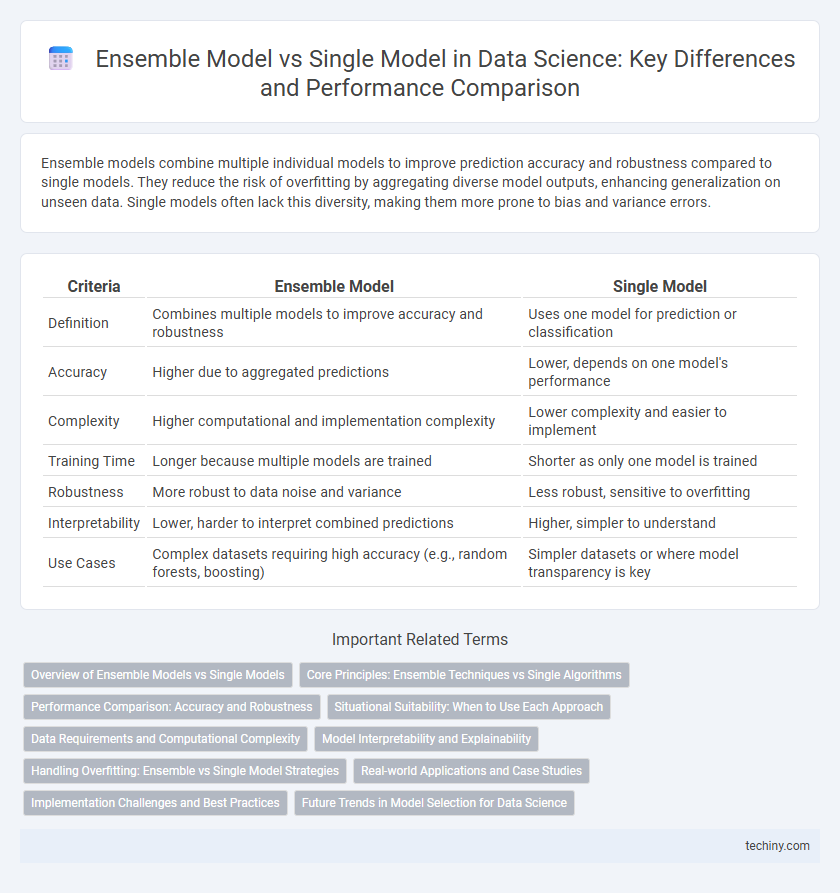

Ensemble models combine multiple individual models to improve prediction accuracy and robustness compared to single models. They reduce the risk of overfitting by aggregating diverse model outputs, enhancing generalization on unseen data. Single models often lack this diversity, making them more prone to bias and variance errors.

Table of Comparison

| Criteria | Ensemble Model | Single Model |

|---|---|---|

| Definition | Combines multiple models to improve accuracy and robustness | Uses one model for prediction or classification |

| Accuracy | Higher due to aggregated predictions | Lower, depends on one model's performance |

| Complexity | Higher computational and implementation complexity | Lower complexity and easier to implement |

| Training Time | Longer because multiple models are trained | Shorter as only one model is trained |

| Robustness | More robust to data noise and variance | Less robust, sensitive to overfitting |

| Interpretability | Lower, harder to interpret combined predictions | Higher, simpler to understand |

| Use Cases | Complex datasets requiring high accuracy (e.g., random forests, boosting) | Simpler datasets or where model transparency is key |

Overview of Ensemble Models vs Single Models

Ensemble models combine multiple individual models, such as decision trees or neural networks, to improve prediction accuracy by reducing variance and bias compared to single models. Single models rely on one algorithm, which may lead to overfitting or underfitting depending on data complexity, whereas ensembles like Random Forests or Gradient Boosting aggregate diverse predictions to enhance robustness. This results in ensemble methods outperforming single models in tasks like classification and regression, especially with large, complex datasets.

Core Principles: Ensemble Techniques vs Single Algorithms

Ensemble models leverage multiple learning algorithms to improve predictive performance by combining their outputs, reducing variance and bias compared to single models that rely on one algorithm's predictions. Techniques such as bagging, boosting, and stacking enhance robustness and accuracy by aggregating diverse model predictions into a consensus outcome. Single algorithms provide simplicity and interpretability but often lack the adaptability and error reduction capabilities inherent in ensemble techniques.

Performance Comparison: Accuracy and Robustness

Ensemble models typically demonstrate higher accuracy and robustness compared to single models by combining multiple algorithms to reduce overfitting and variance. Techniques like Random Forests and Gradient Boosting aggregate predictions, enhancing generalization on diverse datasets and improving resistance to noise. Single models may perform well on specific tasks but often lack the stability and consistent performance that ensembles achieve through diverse hypothesis averaging.

Situational Suitability: When to Use Each Approach

Ensemble models excel in scenarios requiring high accuracy and robustness due to their ability to combine multiple algorithms, effectively reducing variance and bias. Single models are preferable when interpretability, computational efficiency, and speed are critical, especially in real-time applications or when training data is limited. Choosing between ensemble and single models depends on the complexity of the problem, available resources, and specific performance goals within data science projects.

Data Requirements and Computational Complexity

Ensemble models generally require larger datasets to effectively combine the predictions of multiple base learners, increasing their data requirement compared to single models that can perform well on smaller datasets. The computational complexity of ensemble methods is significantly higher due to the need to train, validate, and aggregate multiple models, while single models typically demand less processing power and training time. This trade-off between improved accuracy and resource consumption is crucial for selecting the appropriate model type in data science projects.

Model Interpretability and Explainability

Ensemble models combine multiple algorithms to enhance predictive accuracy but often sacrifice model interpretability and explainability due to their complexity. Single models, particularly simpler algorithms like decision trees or linear regression, provide clearer insights into feature importance and decision pathways, facilitating easier explanation to stakeholders. Prioritizing explainability is essential in domains such as healthcare or finance where transparency directly impacts trust and regulatory compliance.

Handling Overfitting: Ensemble vs Single Model Strategies

Ensemble models reduce overfitting by combining multiple base learners, which averages out errors and increases generalization compared to single models. Techniques like bagging, boosting, and stacking create diverse model sets that mitigate variance and bias, effectively balancing model complexity and robustness. Single models often require regularization methods such as L1/L2 penalties or early stopping to control overfitting but generally lack the adaptive error correction capabilities inherent in ensembles.

Real-world Applications and Case Studies

Ensemble models consistently outperform single models in real-world applications such as fraud detection, healthcare diagnostics, and customer churn prediction by combining multiple algorithms to improve accuracy and robustness. Case studies in finance demonstrate that ensemble techniques like Random Forest and Gradient Boosting reduce false positives significantly compared to single decision trees. In medical imaging, ensembles have enhanced predictive performance, leading to earlier and more reliable disease detection, boosting overall clinical decision-making.

Implementation Challenges and Best Practices

Implementing ensemble models involves managing increased computational complexity and ensuring proper tuning of diverse base learners to prevent overfitting. Effective strategies include leveraging cross-validation for robust model selection and utilizing parallel processing to accelerate training. Best practices emphasize careful feature engineering, consistent data preprocessing, and monitoring model interpretability to balance performance gains with practical deployment constraints.

Future Trends in Model Selection for Data Science

Ensemble models are increasingly favored in data science due to their superior accuracy and robustness by integrating multiple algorithms, reducing overfitting risks common in single models. Future trends emphasize hybrid ensemble techniques combining neural networks and traditional algorithms to optimize predictive performance across complex datasets. Advances in automated machine learning (AutoML) will further streamline model selection, leveraging ensemble strategies to enhance adaptability and scalability in diverse data environments.

ensemble model vs single model Infographic

techiny.com

techiny.com