ETL (Extract, Transform, Load) involves extracting data from sources, transforming it into a suitable format, and then loading it into the target system, optimizing for processing before storage. ELT (Extract, Load, Transform) extracts and loads raw data into the target system first, where transformation occurs, leveraging the processing power of modern data warehouses. ELT offers greater flexibility and scalability for handling large volumes of complex data compared to traditional ETL workflows.

Table of Comparison

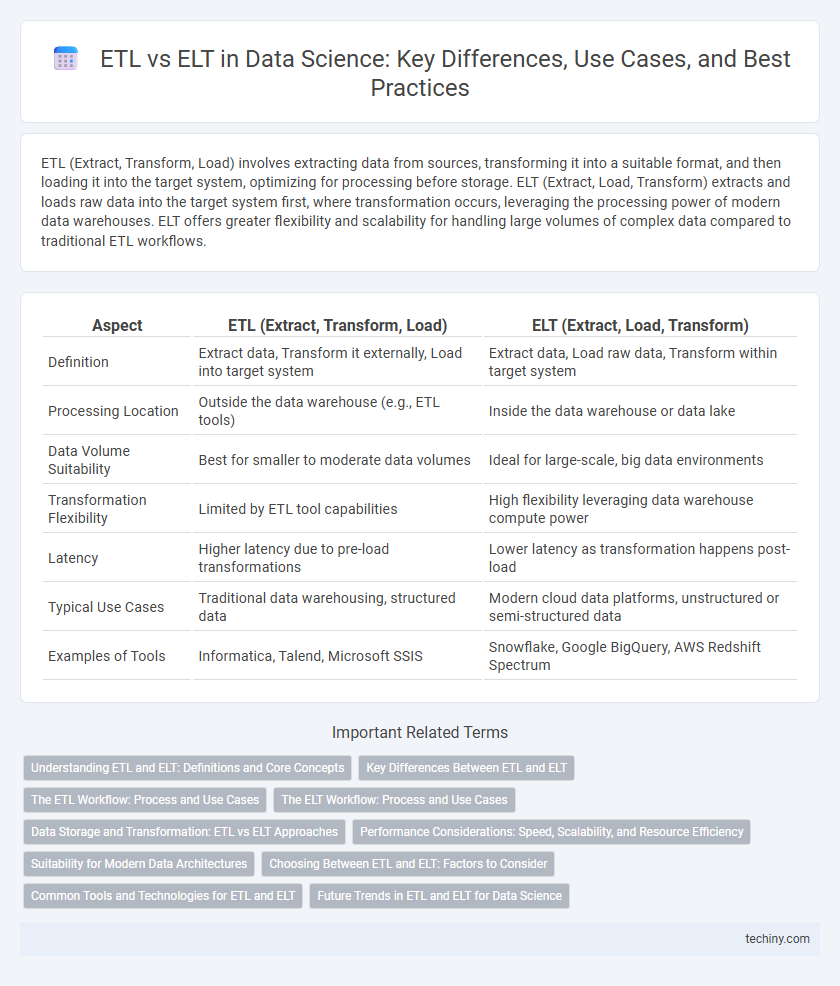

| Aspect | ETL (Extract, Transform, Load) | ELT (Extract, Load, Transform) |

|---|---|---|

| Definition | Extract data, Transform it externally, Load into target system | Extract data, Load raw data, Transform within target system |

| Processing Location | Outside the data warehouse (e.g., ETL tools) | Inside the data warehouse or data lake |

| Data Volume Suitability | Best for smaller to moderate data volumes | Ideal for large-scale, big data environments |

| Transformation Flexibility | Limited by ETL tool capabilities | High flexibility leveraging data warehouse compute power |

| Latency | Higher latency due to pre-load transformations | Lower latency as transformation happens post-load |

| Typical Use Cases | Traditional data warehousing, structured data | Modern cloud data platforms, unstructured or semi-structured data |

| Examples of Tools | Informatica, Talend, Microsoft SSIS | Snowflake, Google BigQuery, AWS Redshift Spectrum |

Understanding ETL and ELT: Definitions and Core Concepts

ETL (Extract, Transform, Load) is a data integration process where data is extracted from sources, transformed into a suitable format, and then loaded into a target database or data warehouse. ELT (Extract, Load, Transform) differs by loading raw data directly into the target system for transformation within the destination environment, often leveraging the processing power of modern cloud-based data platforms. Understanding these core concepts is crucial for optimizing data workflows, as ETL suits structured, predefined transformations, while ELT offers flexibility and scalability for handling large volumes of diverse data.

Key Differences Between ETL and ELT

ETL (Extract, Transform, Load) moves data from sources to a staging area for transformation before loading it into the target database, ideal for structured data and traditional data warehouses. ELT (Extract, Load, Transform) loads raw data directly into a data lake or cloud-based repository, performing transformations within the target system, optimizing for scalability and big data analytics. The primary difference lies in transformation timing and processing location, with ETL prioritizing pre-load data cleansing, while ELT leverages the computational power of modern data platforms post-load.

The ETL Workflow: Process and Use Cases

The ETL workflow involves extracting data from various sources, transforming it to fit operational needs, and loading it into a data warehouse or storage system. This process is essential for data cleansing, integration, and structuring before analysis, making it ideal for traditional BI applications and structured data environments. Use cases include financial reporting, compliance data management, and legacy system integrations where data quality and consistency are critical.

The ELT Workflow: Process and Use Cases

The ELT workflow involves extracting data from source systems, loading it directly into a target data repository, such as a cloud data warehouse, and then transforming it using the processing power of the destination platform. This approach optimizes performance by leveraging scalable cloud resources for data transformation tasks, enabling faster analytics and real-time data processing. Common use cases include big data analytics, machine learning pipelines, and situations requiring agile data integration with minimal latency.

Data Storage and Transformation: ETL vs ELT Approaches

ETL (Extract, Transform, Load) performs data transformation before loading data into storage, optimizing for structured data warehouses and reducing storage costs by storing only processed data. ELT (Extract, Load, Transform) loads raw data directly into scalable data lakes or cloud storage, enabling flexible, schema-on-read transformations using powerful processing engines like Apache Spark. ELT's approach leverages modern cloud architectures for real-time analytics and supports diverse data formats, while ETL remains efficient for traditional, highly curated data pipelines.

Performance Considerations: Speed, Scalability, and Resource Efficiency

ETL processes often lag in speed due to the intensive data transformation before loading, whereas ELT leverages the target system's computational power to perform transformations, enhancing speed and scalability. ELT benefits from modern cloud data warehouses' elastic resources, offering superior resource efficiency by scaling dynamically based on workload demands. Performance considerations favor ELT for large volumes and complex transformations, while ETL may suffice for smaller, simpler datasets where resource limitations exist.

Suitability for Modern Data Architectures

ELT is more suitable for modern data architectures because it leverages cloud-based data warehouses with scalable storage and processing capabilities, enabling faster and more flexible data transformations directly within the destination system. ETL involves transforming data before loading, which can limit scalability and slow down processing in large volumes typical of big data environments. Cloud-native platforms like Snowflake, Google BigQuery, and Azure Synapse optimize ELT processes to handle complex analytics and real-time data integration efficiently.

Choosing Between ETL and ELT: Factors to Consider

Choosing between ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) depends on data volume, system architecture, and processing speed requirements. ETL is ideal for smaller datasets requiring complex transformations before loading into data warehouses, while ELT suits large-scale data lakes and cloud environments where raw data is loaded first for flexible, on-demand transformation. Consider data latency, infrastructure costs, and scalability to optimize workflow efficiency and analytical performance.

Common Tools and Technologies for ETL and ELT

Common tools for ETL processes include Apache NiFi, Talend, and Informatica, which excel at extracting, transforming, and loading data from multiple sources into data warehouses. ELT workflows often utilize platforms like Apache Spark, Azure Data Factory, and Google BigQuery that enable data transformation post-loading within scalable cloud environments. Both approaches rely on databases such as Snowflake and Amazon Redshift, which support high-performance querying and storage for structured and semi-structured data.

Future Trends in ETL and ELT for Data Science

Future trends in ETL and ELT for data science emphasize automation with AI-driven data transformation and real-time data processing to enhance efficiency and accuracy. Cloud-native ETL/ELT platforms are gaining prominence, enabling scalable, flexible infrastructure to handle growing data volumes and diverse data sources. Integration of machine learning models within ETL/ELT pipelines is becoming standard, optimizing data cleansing, enrichment, and feature engineering for advanced analytics.

ETL vs ELT Infographic

techiny.com

techiny.com