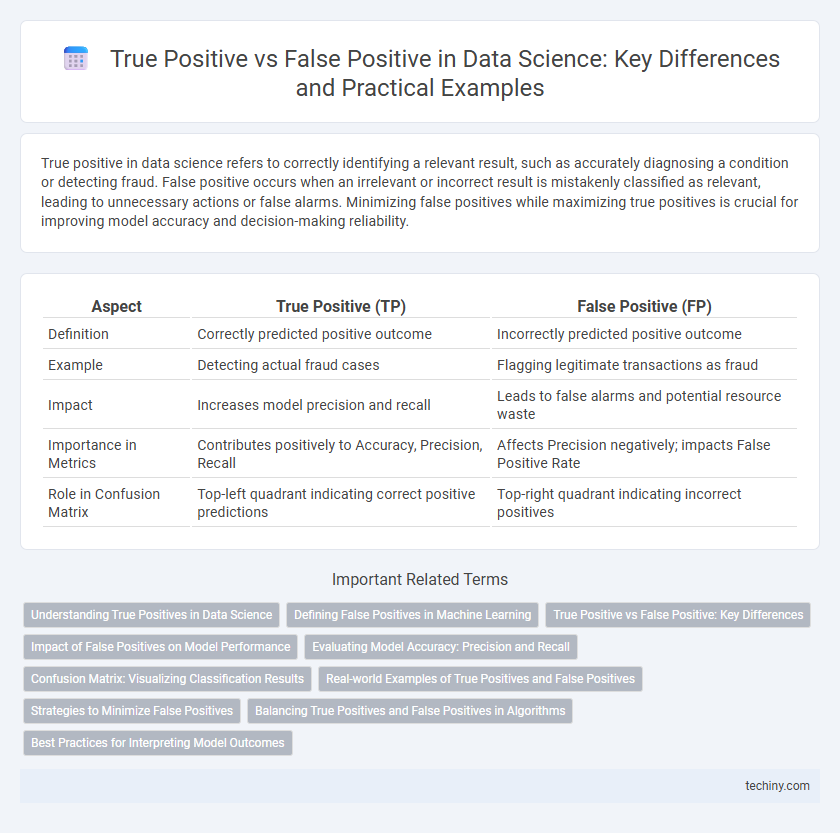

True positive in data science refers to correctly identifying a relevant result, such as accurately diagnosing a condition or detecting fraud. False positive occurs when an irrelevant or incorrect result is mistakenly classified as relevant, leading to unnecessary actions or false alarms. Minimizing false positives while maximizing true positives is crucial for improving model accuracy and decision-making reliability.

Table of Comparison

| Aspect | True Positive (TP) | False Positive (FP) |

|---|---|---|

| Definition | Correctly predicted positive outcome | Incorrectly predicted positive outcome |

| Example | Detecting actual fraud cases | Flagging legitimate transactions as fraud |

| Impact | Increases model precision and recall | Leads to false alarms and potential resource waste |

| Importance in Metrics | Contributes positively to Accuracy, Precision, Recall | Affects Precision negatively; impacts False Positive Rate |

| Role in Confusion Matrix | Top-left quadrant indicating correct positive predictions | Top-right quadrant indicating incorrect positives |

Understanding True Positives in Data Science

True positives in data science represent instances where a model correctly identifies a positive condition, such as detecting a disease in a patient who truly has it. Accurate measurement of true positives is crucial for evaluating the sensitivity and effectiveness of classification algorithms. Optimizing true positives helps enhance decision-making processes by reducing missed detections and improving overall predictive performance.

Defining False Positives in Machine Learning

False positives in machine learning occur when a model incorrectly predicts the positive class for an instance that actually belongs to the negative class, leading to misleading conclusions. This type of error impacts metrics such as precision and can cause significant issues in applications like medical diagnosis or fraud detection. Understanding and minimizing false positives is crucial for optimizing model performance and ensuring reliable decision-making.

True Positive vs False Positive: Key Differences

True positives occur when a data science model correctly identifies a positive case, confirming the presence of the targeted attribute or condition. False positives represent incorrect positive identifications where the model signals a condition that is not actually present, leading to potential errors in decision-making. Understanding the balance between true positives and false positives is critical for optimizing model precision and ensuring reliable predictive performance in data science applications.

Impact of False Positives on Model Performance

False positives in data science models lead to inflated error rates, reducing overall model precision and trustworthiness. High false positive rates can result in unnecessary actions, increased operational costs, and customer dissatisfaction, particularly in domains like healthcare and fraud detection. Minimizing false positives through rigorous threshold tuning and validation techniques is crucial for optimizing model performance and maintaining accurate predictive outcomes.

Evaluating Model Accuracy: Precision and Recall

True positive refers to correctly predicted positive instances, while false positive indicates incorrectly predicted positives, both critical for evaluating model accuracy. Precision measures the proportion of true positives among all positive predictions, highlighting the model's correctness in positive labeling. Recall assesses the ratio of true positives to actual positives, reflecting the model's ability to identify all relevant instances.

Confusion Matrix: Visualizing Classification Results

The confusion matrix is a vital tool in data science for visualizing classification results by displaying true positives, false positives, true negatives, and false negatives. True positives indicate correctly predicted positive cases, while false positives represent instances where negative samples were incorrectly classified as positive. Analyzing these metrics within the confusion matrix helps optimize model performance by balancing precision and recall, reducing misclassification costs.

Real-world Examples of True Positives and False Positives

In medical diagnostics, a true positive occurs when a test correctly identifies a patient with a disease, such as detecting cancer through a confirmed biopsy result, while a false positive mistakenly indicates disease presence in a healthy individual, leading to unnecessary treatments. In spam email filtering, true positives accurately classify unwanted emails as spam, effectively protecting users from phishing attacks, whereas false positives wrongly label legitimate emails as spam, potentially causing missed important communications. Fraud detection systems experience true positives when fraudulent transactions are successfully flagged, reducing financial losses, whereas false positives generate alerts for legitimate activities, creating inefficiencies and customer dissatisfaction.

Strategies to Minimize False Positives

Implement rigorous threshold tuning based on precision-recall trade-offs to minimize false positives effectively in data science models. Employ cross-validation techniques and incorporate domain-specific knowledge to enhance model discrimination capabilities. Utilize ensemble methods and anomaly detection algorithms to reduce the likelihood of incorrectly classifying negative instances as positive.

Balancing True Positives and False Positives in Algorithms

Balancing true positives and false positives is critical for optimizing algorithm performance in data science, as high true positive rates improve model sensitivity while minimizing false positives reduces erroneous alerts. Techniques such as precision-recall trade-offs, ROC curve analysis, and threshold tuning help achieve an optimal balance suited to specific applications. Proper balance enhances decision-making accuracy in domains like medical diagnosis, fraud detection, and spam filtering.

Best Practices for Interpreting Model Outcomes

True positive results accurately identify relevant cases, enhancing model reliability and decision-making effectiveness. False positives occur when the model incorrectly flags negative instances as positive, leading to potential resource wastage and reduced trust in predictions. Best practices for interpreting model outcomes include validating with confusion matrices, adjusting classification thresholds, and employing precision-recall trade-offs to balance sensitivity and specificity.

true positive vs false positive Infographic

techiny.com

techiny.com