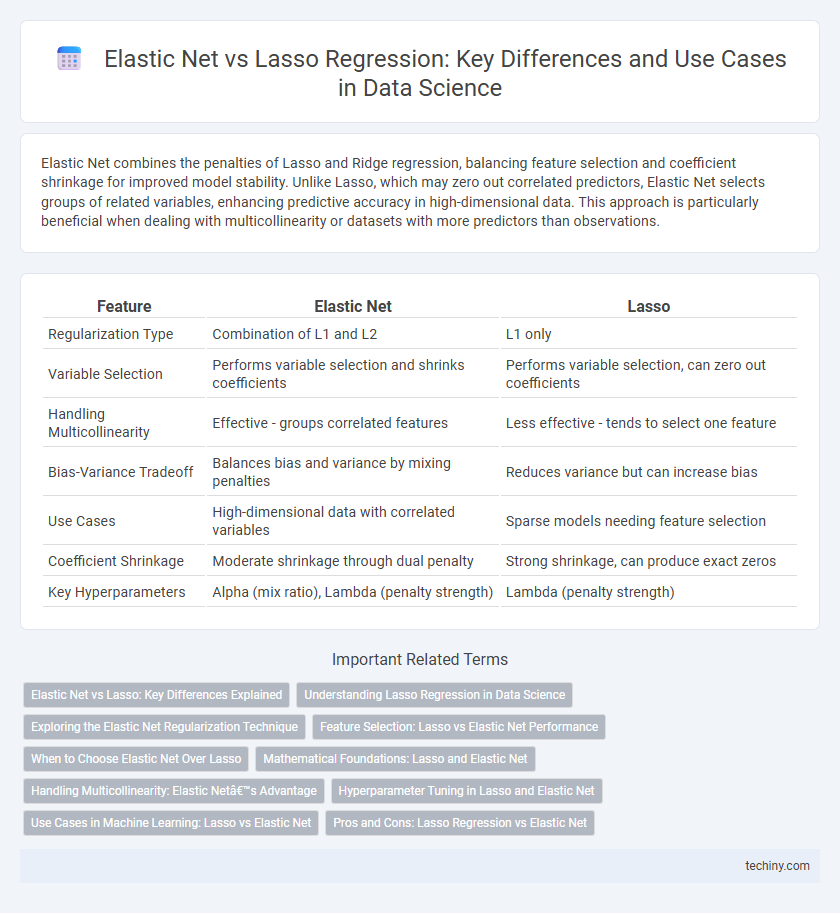

Elastic Net combines the penalties of Lasso and Ridge regression, balancing feature selection and coefficient shrinkage for improved model stability. Unlike Lasso, which may zero out correlated predictors, Elastic Net selects groups of related variables, enhancing predictive accuracy in high-dimensional data. This approach is particularly beneficial when dealing with multicollinearity or datasets with more predictors than observations.

Table of Comparison

| Feature | Elastic Net | Lasso |

|---|---|---|

| Regularization Type | Combination of L1 and L2 | L1 only |

| Variable Selection | Performs variable selection and shrinks coefficients | Performs variable selection, can zero out coefficients |

| Handling Multicollinearity | Effective - groups correlated features | Less effective - tends to select one feature |

| Bias-Variance Tradeoff | Balances bias and variance by mixing penalties | Reduces variance but can increase bias |

| Use Cases | High-dimensional data with correlated variables | Sparse models needing feature selection |

| Coefficient Shrinkage | Moderate shrinkage through dual penalty | Strong shrinkage, can produce exact zeros |

| Key Hyperparameters | Alpha (mix ratio), Lambda (penalty strength) | Lambda (penalty strength) |

Elastic Net vs Lasso: Key Differences Explained

Elastic Net combines the penalties of Lasso and Ridge regression, addressing multicollinearity and selecting groups of correlated variables more effectively than Lasso, which uses only L1 regularization to enforce sparsity. Unlike Lasso, which can arbitrarily select one feature from a group of correlated variables, Elastic Net balances feature selection and coefficient shrinkage, improving model stability and predictive performance. This makes Elastic Net especially suitable for high-dimensional data where multicollinearity is prevalent, offering a more flexible and robust alternative to Lasso in regularized regression tasks.

Understanding Lasso Regression in Data Science

Lasso regression in data science is a linear model that performs both variable selection and regularization by applying an L1 penalty to the coefficients, effectively shrinking some coefficients to zero and thus producing sparse models. It is particularly useful for high-dimensional datasets where feature selection is critical to prevent overfitting and enhance model interpretability. Unlike elastic net, which combines L1 and L2 penalties, lasso focuses solely on L1 regularization, making it ideal for scenarios requiring automatic feature elimination.

Exploring the Elastic Net Regularization Technique

Elastic Net regularization combines the strengths of Lasso (L1) and Ridge (L2) penalties, controlling both feature selection and coefficient shrinkage, which improves model stability and predictive accuracy especially in datasets with highly correlated features. Unlike Lasso that may arbitrarily select one feature from a group, Elastic Net encourages grouping effects, retaining multiple correlated features and reducing overfitting. This makes Elastic Net particularly effective for high-dimensional data in data science applications such as genomics, finance, and text mining.

Feature Selection: Lasso vs Elastic Net Performance

Lasso regression excels in feature selection by shrinking some coefficients to zero, effectively performing variable selection in high-dimensional data. Elastic Net combines L1 and L2 regularization, balancing between feature selection and coefficient shrinkage, which improves predictive performance when predictors are highly correlated. Studies show Elastic Net outperforms Lasso in scenarios with multicollinearity, maintaining model sparsity while capturing group effects among variables.

When to Choose Elastic Net Over Lasso

Elastic Net is preferred over Lasso when dealing with datasets containing highly correlated features, as it balances L1 and L2 regularization to select groups of correlated variables rather than arbitrarily picking one. Elastic Net performs better when the number of predictors exceeds the number of observations or when the underlying model is assumed to be sparse but with correlated coefficients. Use Elastic Net to improve prediction accuracy and feature selection stability in complex high-dimensional data scenarios common in genomics and finance.

Mathematical Foundations: Lasso and Elastic Net

Lasso regression minimizes the residual sum of squares with an L1 penalty, promoting sparsity by shrinking some coefficients exactly to zero, which aids feature selection in high-dimensional data. Elastic Net combines L1 (lasso) and L2 (ridge) penalties, balancing variable selection and regularization to handle multicollinearity and select groups of correlated features. The Elastic Net objective function includes both the absolute value and squared magnitude of coefficients, enabling improved prediction accuracy and model stability in complex datasets.

Handling Multicollinearity: Elastic Net’s Advantage

Elastic Net combines L1 and L2 regularization, making it superior in handling multicollinearity compared to Lasso, which uses only L1 regularization. By blending these penalties, Elastic Net effectively selects correlated features while maintaining model stability and reducing variance. This dual approach allows Elastic Net to overcome Lasso's limitation of arbitrarily selecting only one feature from a group of correlated predictors.

Hyperparameter Tuning in Lasso and Elastic Net

Hyperparameter tuning in Lasso involves optimizing the alpha parameter to balance between feature selection and regularization strength, minimizing overfitting while retaining relevant variables. Elastic Net tuning requires adjusting both alpha and l1_ratio parameters, where alpha controls regularization magnitude and l1_ratio balances L1 (Lasso) and L2 (Ridge) penalties, effectively handling correlated features. Grid search and cross-validation are common techniques used to identify the optimal combination of these hyperparameters for improved model performance.

Use Cases in Machine Learning: Lasso vs Elastic Net

Lasso regression excels in feature selection within high-dimensional datasets by enforcing sparsity, making it ideal for models requiring interpretability and reducing multicollinearity. Elastic Net combines L1 and L2 penalties, balancing feature selection with coefficient shrinkage, which enhances performance on correlated variables and complex datasets. Use Elastic Net for genomic data or finance applications where groups of correlated predictors are present, while Lasso suits scenarios demanding simpler, more interpretable models with fewer predictors.

Pros and Cons: Lasso Regression vs Elastic Net

Lasso regression excels in feature selection by shrinking some coefficients to zero, making it ideal for sparse datasets but can struggle with highly correlated variables. Elastic Net combines Lasso and Ridge penalties, offering a balanced approach that handles multicollinearity better by selecting groups of correlated features while maintaining sparsity. However, Elastic Net requires tuning two hyperparameters, increasing model complexity compared to Lasso's simpler single-parameter approach.

elastic net vs lasso Infographic

techiny.com

techiny.com