SGDClassifier excels in handling large-scale and sparse datasets by implementing stochastic gradient descent, making it highly efficient for online learning and incremental updates. LogisticRegression provides a more robust and stable solution for smaller datasets with its maximum likelihood estimation and supports a wide range of regularization options. Choosing between them depends on dataset size, complexity, and the need for real-time model updates.

Table of Comparison

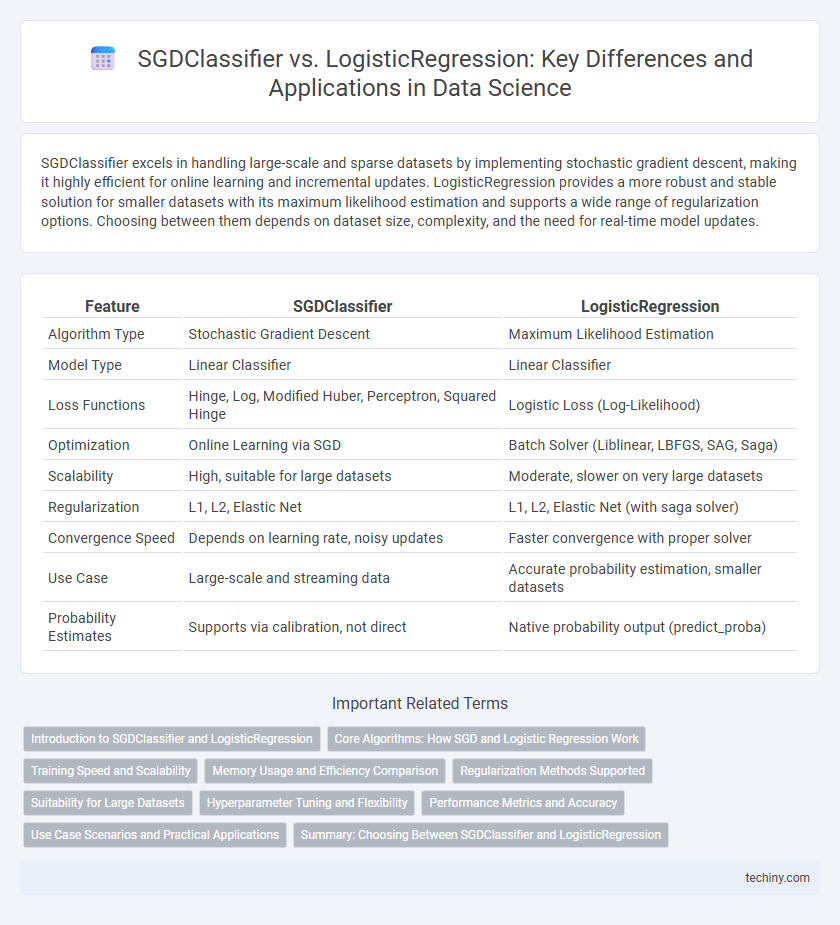

| Feature | SGDClassifier | LogisticRegression |

|---|---|---|

| Algorithm Type | Stochastic Gradient Descent | Maximum Likelihood Estimation |

| Model Type | Linear Classifier | Linear Classifier |

| Loss Functions | Hinge, Log, Modified Huber, Perceptron, Squared Hinge | Logistic Loss (Log-Likelihood) |

| Optimization | Online Learning via SGD | Batch Solver (Liblinear, LBFGS, SAG, Saga) |

| Scalability | High, suitable for large datasets | Moderate, slower on very large datasets |

| Regularization | L1, L2, Elastic Net | L1, L2, Elastic Net (with saga solver) |

| Convergence Speed | Depends on learning rate, noisy updates | Faster convergence with proper solver |

| Use Case | Large-scale and streaming data | Accurate probability estimation, smaller datasets |

| Probability Estimates | Supports via calibration, not direct | Native probability output (predict_proba) |

Introduction to SGDClassifier and LogisticRegression

SGDClassifier employs stochastic gradient descent for efficient large-scale linear classification, making it suitable for datasets with high-dimensional features and streaming data. LogisticRegression optimizes the logistic loss function using various solvers, delivering probabilistic outputs for binary and multi-class classification tasks. Both models support regularization techniques such as L1 and L2 to prevent overfitting and improve generalization.

Core Algorithms: How SGD and Logistic Regression Work

SGDClassifier employs Stochastic Gradient Descent to minimize the loss function by iteratively updating model parameters using a single training sample or a small batch, enabling scalability and faster convergence on large datasets. LogisticRegression directly optimizes the logistic loss function through deterministic algorithms like Newton-Raphson or coordinate descent to find the global minimum and interpret coefficients probabilistically. While LogisticRegression often provides more precise parameter estimates, SGDClassifier is preferred for handling massive data and online learning scenarios due to its computational efficiency and flexibility.

Training Speed and Scalability

SGDClassifier offers faster training speed than LogisticRegression, especially on large-scale datasets due to its stochastic gradient descent optimization, which processes data in mini-batches. LogisticRegression, while more stable and accurate with smaller datasets, scales poorly as the number of samples and features increases, causing longer training times. For massive datasets with high dimensionality, SGDClassifier's scalability and efficiency make it the preferred choice in data science workflows.

Memory Usage and Efficiency Comparison

SGDClassifier utilizes stochastic gradient descent, making it highly memory-efficient and suitable for large-scale datasets due to incremental learning capabilities, whereas LogisticRegression typically requires more memory as it relies on batch optimization methods. In terms of efficiency, SGDClassifier converges faster on massive datasets with sparse features, while LogisticRegression provides more stable and accurate results on smaller to medium datasets due to its solver options like liblinear or lbfgs. Choosing between the two depends largely on dataset size, feature sparsity, and computational resource constraints.

Regularization Methods Supported

SGDClassifier supports multiple regularization methods, including L1, L2, and elastic net penalties, which provide flexibility for controlling model complexity and preventing overfitting. LogisticRegression also offers L1, L2, and elastic net regularizations, but its implementation is optimized for smaller datasets and convergence stability. Both classifiers enable tuning of regularization strength through hyperparameters, impacting bias-variance trade-off during model training.

Suitability for Large Datasets

SGDClassifier excels in handling large-scale datasets due to its efficient implementation of stochastic gradient descent, enabling faster training on massive data. LogisticRegression, while providing more interpretable results, typically requires more memory and CPU resources, making it less suitable for extremely large datasets without optimization techniques like minibatch processing. For big data applications in data science, SGDClassifier offers superior scalability and speed, particularly when real-time or incremental learning is needed.

Hyperparameter Tuning and Flexibility

SGDClassifier offers extensive hyperparameter tuning options such as learning rate schedules, loss functions, and penalty types, enabling fine-grained control over model training for large-scale, sparse datasets. LogisticRegression provides a more straightforward hyperparameter set, mainly focusing on regularization strength and solver choice, which simplifies tuning but may limit flexibility in complex scenarios. The adaptability of SGDClassifier makes it suitable for online learning and streaming data, while LogisticRegression excels in stable environments requiring reliable probabilities and interpretability.

Performance Metrics and Accuracy

SGDClassifier often excels in large-scale datasets by offering faster training times with stochastic gradient descent, while LogisticRegression provides more stable and interpretable coefficients using batch optimization. Accuracy for LogisticRegression typically surpasses SGDClassifier on smaller or cleaner datasets due to its deterministic convergence. Performance metrics such as precision, recall, and F1-score depend heavily on parameter tuning and data preprocessing, with LogisticRegression generally showing more consistent results in balanced classification tasks.

Use Case Scenarios and Practical Applications

SGDClassifier is ideal for large-scale datasets and real-time applications due to its efficient stochastic gradient descent optimization, making it suitable for online learning and high-dimensional sparse data typical in text classification and spam detection. LogisticRegression performs well in small to medium-sized datasets where interpretability and probabilistic outputs are important, often used in medical diagnosis and credit scoring. Both models handle binary classification tasks but differ in scalability and application scope, influencing their choice based on dataset size and performance requirements.

Summary: Choosing Between SGDClassifier and LogisticRegression

SGDClassifier offers scalable and efficient training for large datasets using stochastic gradient descent, making it ideal for online learning and high-dimensional data. LogisticRegression provides more robust optimization with built-in solvers, better suited for smaller to medium-sized datasets requiring precise probability estimates. Selecting between SGDClassifier and LogisticRegression depends on dataset size, computational constraints, and the need for model interpretability and probability outputs.

SGDClassifier vs LogisticRegression Infographic

techiny.com

techiny.com