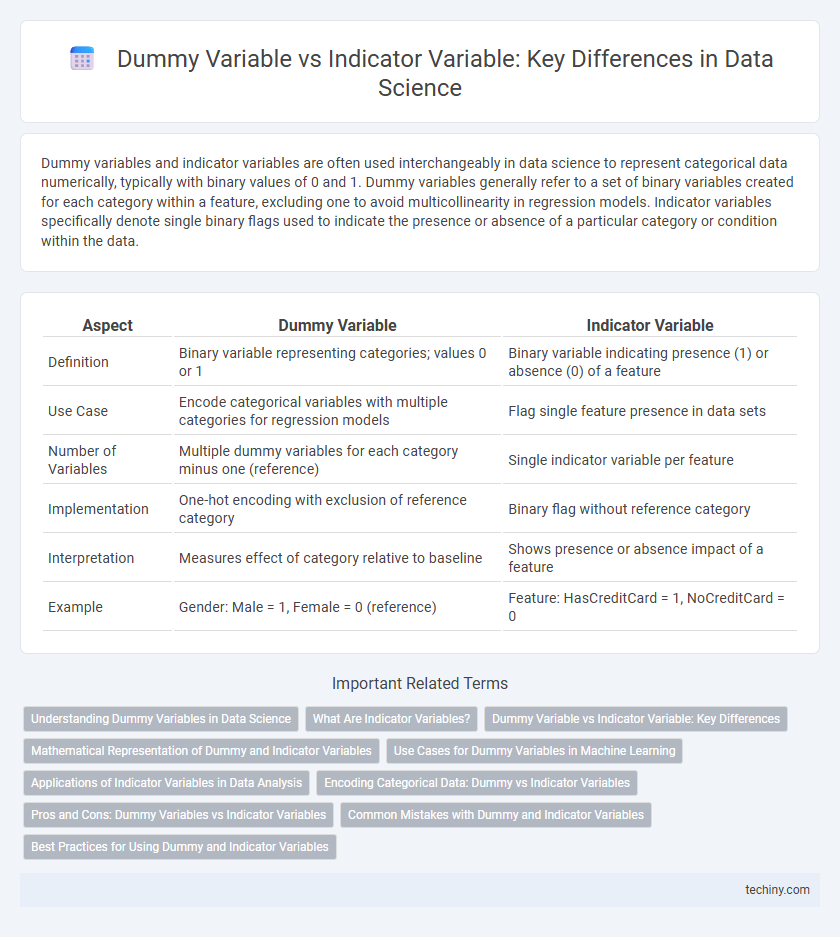

Dummy variables and indicator variables are often used interchangeably in data science to represent categorical data numerically, typically with binary values of 0 and 1. Dummy variables generally refer to a set of binary variables created for each category within a feature, excluding one to avoid multicollinearity in regression models. Indicator variables specifically denote single binary flags used to indicate the presence or absence of a particular category or condition within the data.

Table of Comparison

| Aspect | Dummy Variable | Indicator Variable |

|---|---|---|

| Definition | Binary variable representing categories; values 0 or 1 | Binary variable indicating presence (1) or absence (0) of a feature |

| Use Case | Encode categorical variables with multiple categories for regression models | Flag single feature presence in data sets |

| Number of Variables | Multiple dummy variables for each category minus one (reference) | Single indicator variable per feature |

| Implementation | One-hot encoding with exclusion of reference category | Binary flag without reference category |

| Interpretation | Measures effect of category relative to baseline | Shows presence or absence impact of a feature |

| Example | Gender: Male = 1, Female = 0 (reference) | Feature: HasCreditCard = 1, NoCreditCard = 0 |

Understanding Dummy Variables in Data Science

Dummy variables in data science are binary variables representing categorical data with two or more categories, enabling mathematical models to process qualitative information. Each category is converted into a separate variable containing values of 0 or 1, indicating the absence or presence of the category in the data point. Understanding the creation and use of dummy variables is essential for regression analysis, classification models, and feature engineering.

What Are Indicator Variables?

Indicator variables, also known as binary or dummy variables, represent categorical data in numerical form by assigning values of 0 or 1 to indicate the absence or presence of a specific attribute. These variables are essential in data science for encoding qualitative features, enabling machine learning algorithms to process non-numeric data effectively. By transforming categories into indicator variables, models can capture the influence of different groups or conditions on predictive outcomes.

Dummy Variable vs Indicator Variable: Key Differences

Dummy variables and indicator variables both represent categorical data in numerical form for statistical modeling, yet dummy variables typically convert categories into multiple binary columns while indicator variables often represent a single binary flag for presence or absence. The key difference lies in their usage: dummy variables encode each category into separate binary variables to avoid ordinal implication, whereas indicator variables handle binary classification tasks or specific attribute detection. Understanding these distinctions aids in proper model specification, reduces multicollinearity, and improves interpretability in regression and machine learning algorithms.

Mathematical Representation of Dummy and Indicator Variables

Dummy variables, also known as binary variables, are mathematically represented as \( D_i = \{0,1\} \), where \( D_i = 1 \) indicates the presence of a category and \( 0 \) its absence. Indicator variables follow the same binary structure but are often used to denote the membership of an observation in one specific category within multiple categories, typically represented as \( I_{ij} = \{0,1\} \) for the \( j^{th} \) category of the \( i^{th} \) observation. Both dummy and indicator variables serve as fundamental tools in regression models to convert categorical data into a numerical format suitable for mathematical analysis.

Use Cases for Dummy Variables in Machine Learning

Dummy variables are crucial in machine learning for converting categorical data into numerical format, enabling algorithms to process non-numeric inputs effectively. They allow models to identify and leverage distinct categories, such as gender, nationality, or product types, improving prediction accuracy and interpretability. Common use cases include regression analysis, decision trees, and clustering, where capturing category-specific effects enhances model performance.

Applications of Indicator Variables in Data Analysis

Indicator variables, commonly known as dummy variables, play a crucial role in regression models by converting categorical data into a numerical format that algorithms can interpret. These variables enable the inclusion of qualitative factors, such as gender or region, in predictive models, facilitating the analysis of their impact on the dependent variable. In machine learning and statistical analysis, indicator variables enhance model flexibility and interpretability by representing group membership or binary conditions.

Encoding Categorical Data: Dummy vs Indicator Variables

Encoding categorical data often involves dummy variables and indicator variables, both used to convert categories into numerical formats for machine learning models. Dummy variables typically use k-1 binary variables for a categorical feature with k levels to avoid multicollinearity, especially in regression analysis. Indicator variables, also known as one-hot encoding, represent each category with a separate binary feature, preserving all categories but potentially increasing dimensionality.

Pros and Cons: Dummy Variables vs Indicator Variables

Dummy variables and indicator variables both encode categorical data numerically for statistical models, but dummy variables typically represent k-1 categories to avoid multicollinearity, whereas indicator variables represent each category individually as binary flags. Dummy variables reduce redundancy and simplify model interpretation, but can risk omitting information if incorrectly specified, while indicator variables preserve all category distinctions but may introduce multicollinearity and increase model complexity. Choosing between them depends on the modeling goal, dataset dimensionality, and the need for interpretability versus completeness in categorical representation.

Common Mistakes with Dummy and Indicator Variables

Confusing dummy variables with indicator variables often leads to improper coding in regression models, causing multicollinearity or omitted variable bias. A common mistake is failing to drop one dummy variable category, resulting in the dummy variable trap, which inflates variance and distorts coefficient estimates. Misinterpreting indicator variables as continuous rather than binary can also skew predictions and reduce model accuracy in data science applications.

Best Practices for Using Dummy and Indicator Variables

Best practices for using dummy and indicator variables in data science emphasize clear, consistent encoding of categorical data to ensure model interpretability and accuracy. Remove one dummy variable from each categorical group to avoid multicollinearity, known as the "dummy variable trap," which strengthens regression model stability. Normalize naming conventions and document variable transformations to maintain reproducibility and facilitate collaboration across data teams.

dummy variable vs indicator variable Infographic

techiny.com

techiny.com