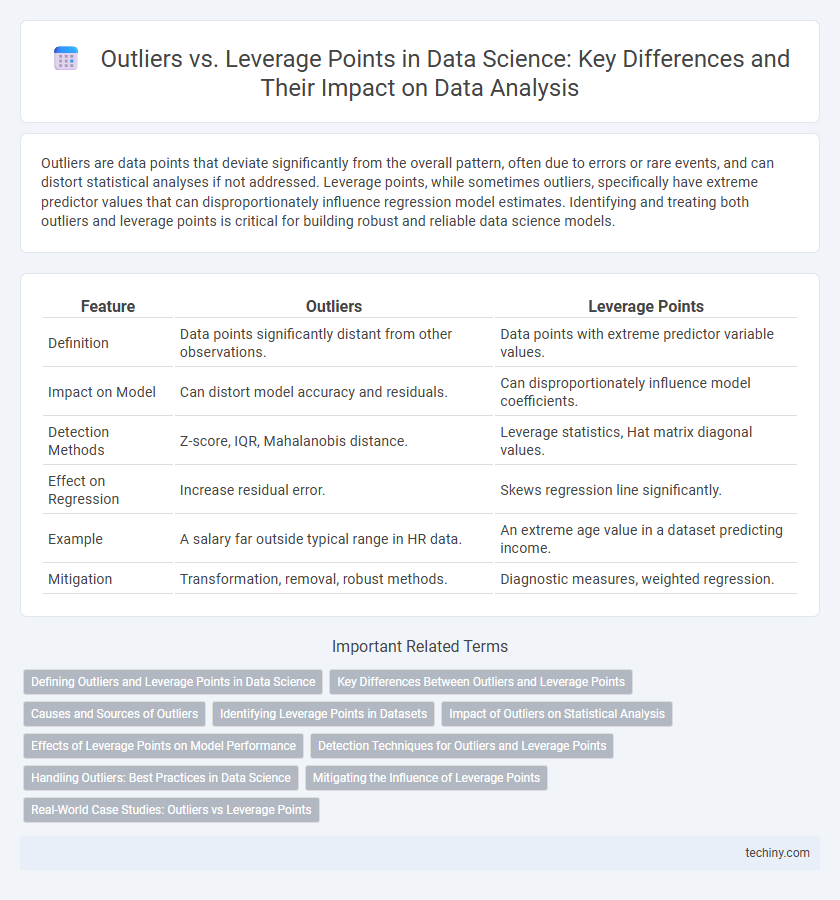

Outliers are data points that deviate significantly from the overall pattern, often due to errors or rare events, and can distort statistical analyses if not addressed. Leverage points, while sometimes outliers, specifically have extreme predictor values that can disproportionately influence regression model estimates. Identifying and treating both outliers and leverage points is critical for building robust and reliable data science models.

Table of Comparison

| Feature | Outliers | Leverage Points |

|---|---|---|

| Definition | Data points significantly distant from other observations. | Data points with extreme predictor variable values. |

| Impact on Model | Can distort model accuracy and residuals. | Can disproportionately influence model coefficients. |

| Detection Methods | Z-score, IQR, Mahalanobis distance. | Leverage statistics, Hat matrix diagonal values. |

| Effect on Regression | Increase residual error. | Skews regression line significantly. |

| Example | A salary far outside typical range in HR data. | An extreme age value in a dataset predicting income. |

| Mitigation | Transformation, removal, robust methods. | Diagnostic measures, weighted regression. |

Defining Outliers and Leverage Points in Data Science

Outliers in data science are data points that deviate significantly from the overall pattern of a dataset, often indicating variability, errors, or novel phenomena. Leverage points refer to observations with extreme predictor variable values that can disproportionately influence the outcome of regression analysis. Identifying and understanding these distinct data anomalies is crucial for robust statistical modeling and accurate data interpretation.

Key Differences Between Outliers and Leverage Points

Outliers are data points that deviate significantly from the overall pattern of the dataset, often identified by their rare or extreme values in the response variable. Leverage points reside far from the mean of the predictor variables, influencing the regression model disproportionately due to their location in the feature space. While outliers can distort model accuracy by affecting residuals, leverage points impact model stability and parameter estimates by exerting strong influence on the fitted regression line.

Causes and Sources of Outliers

Outliers in data science often arise from measurement errors, data entry mistakes, or natural variability in the dataset, significantly impacting model accuracy. Sources include instrumental inaccuracies, environmental changes during data collection, and unexpected events causing deviations. Identifying these outliers is crucial for robust statistical modeling and data analysis workflows.

Identifying Leverage Points in Datasets

Leverage points are observations in a dataset with extreme predictor values that can disproportionately influence the fit of a regression model. Identifying leverage points involves calculating the leverage statistic (hat values) from the hat matrix, with values significantly higher than the average leverage (2p/n or 3p/n, where p is the number of predictors and n the sample size) indicating potential leverage points. Proper detection of leverage points is essential for robust regression analysis, as these points can distort parameter estimates and predictive accuracy.

Impact of Outliers on Statistical Analysis

Outliers significantly distort statistical analysis by skewing central tendency measures like mean and standard deviation, leading to misleading conclusions. They can inflate error variance, reduce model accuracy, and affect hypothesis testing by altering p-values and confidence intervals. Identifying and managing outliers is crucial to maintain the integrity of regression models and ensure reliable predictive performance in data science.

Effects of Leverage Points on Model Performance

Leverage points significantly impact model performance by disproportionately influencing the regression line, often skewing coefficients and inflating error metrics. These high-leverage observations, located far from the mean of predictor variables, can distort model fit and reduce predictive accuracy if not properly identified and managed. Robust regression techniques or leverage diagnostics like Cook's distance are essential to mitigate their effect and enhance model reliability.

Detection Techniques for Outliers and Leverage Points

Detection techniques for outliers in data science include statistical methods such as z-scores, box plots, and the IQR method, which identify data points that deviate significantly from the majority of observations. Leverage points are detected using influence measures like Cook's distance, hat matrix diagonal values, and DFFITS, which assess the impact of individual data points on regression models. Robust regression methods and visualization tools, such as scatter plots with confidence ellipses, complement these techniques by highlighting unusual observations that may distort model accuracy.

Handling Outliers: Best Practices in Data Science

Handling outliers in data science involves identifying data points that significantly deviate from the overall pattern using statistical tests like Z-score or IQR. Robust methods such as trimming, winsorizing, or transforming data using log or Box-Cox transformations help mitigate outlier impact without losing valuable information. Leveraging visualization techniques like box plots and scatter plots aids in the intuitive detection and understanding of outliers for better model accuracy and reliability.

Mitigating the Influence of Leverage Points

Mitigating the influence of leverage points involves identifying observations with extreme predictor values that can disproportionately affect regression models. Techniques such as robust regression, leverage diagnostics like Cook's distance, and data transformation reduce leverage impact by limiting the undue influence of these points on model parameters. Regularly assessing leverage and residuals ensures model stability and improves predictive accuracy in data science applications.

Real-World Case Studies: Outliers vs Leverage Points

In real-world data science case studies, outliers are data points that deviate drastically from the overall pattern, potentially indicating errors or rare events, while leverage points possess extreme predictor values that can disproportionately influence regression models. For example, in financial fraud detection, outliers might represent anomalous transactions, whereas leverage points could skew predictive models, leading to unreliable risk assessments. Understanding the distinction between these enables data scientists to apply appropriate techniques like robust regression or data transformation to improve model accuracy and interpretability.

Outliers vs Leverage Points Infographic

techiny.com

techiny.com