Homoscedasticity occurs when the variance of the errors (residuals) remains constant across all levels of the independent variables, which is what makes the usual OLS standard errors and hypothesis tests valid. Heteroscedasticity arises when that variance changes with the level of an independent variable: the OLS coefficient estimates remain unbiased but become inefficient, and the conventional standard errors are biased. Detecting heteroscedasticity is therefore crucial for accurate model interpretation, and correcting for it often calls for techniques such as weighted least squares or robust standard errors.

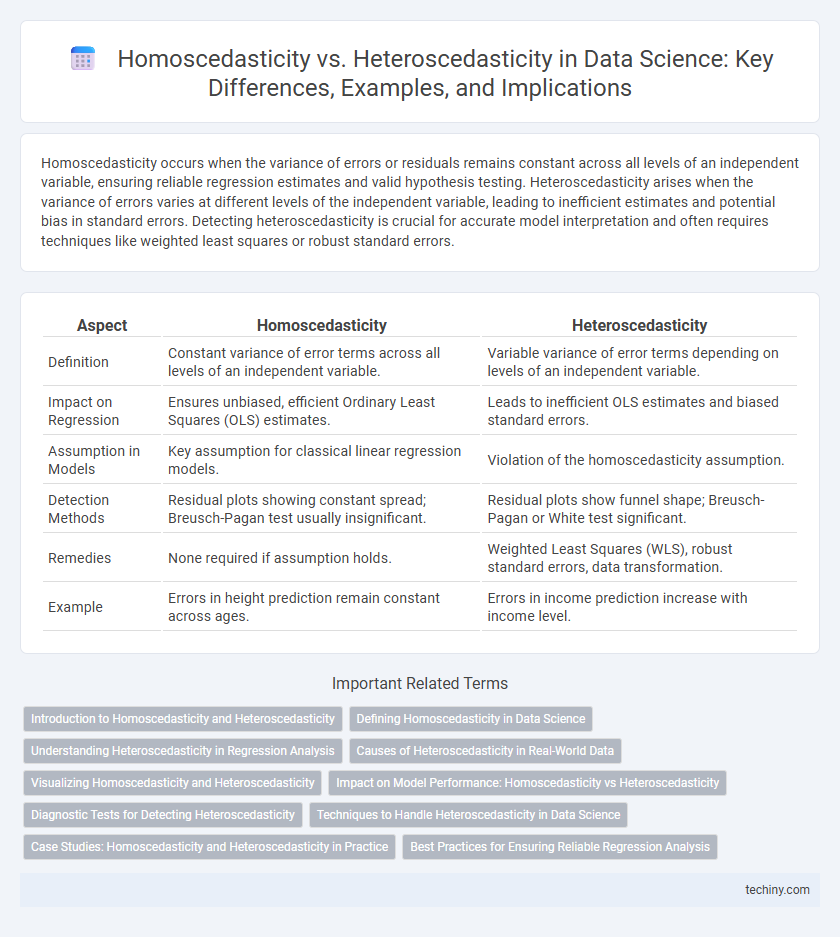

Table of Comparison

| Aspect | Homoscedasticity | Heteroscedasticity |
|---|---|---|
| Definition | Constant variance of error terms across all levels of the independent variables. | Variance of error terms changes with the level of one or more independent variables. |
| Impact on Regression | OLS estimates are unbiased and efficient, and the usual standard errors are valid. | OLS estimates remain unbiased but are inefficient, and the usual standard errors are biased. |
| Assumption in Models | Key assumption of the classical linear regression model. | Violation of the homoscedasticity assumption. |
| Detection Methods | Residual plots show a constant spread; Breusch-Pagan test is not significant. | Residual plots show a funnel shape; Breusch-Pagan or White test is significant. |
| Remedies | None required if the assumption holds. | Weighted Least Squares (WLS), robust standard errors, data transformation. |
| Example | The spread of errors in height prediction stays roughly constant across ages. | The spread of errors in income prediction increases with income level. |

Introduction to Homoscedasticity and Heteroscedasticity

Homoscedasticity refers to the condition in regression analysis where the variance of the residuals is constant across all levels of the independent variable, ensuring reliable parameter estimates and valid hypothesis tests. Heteroscedasticity occurs when residual variances differ at various values of the independent variable, potentially leading to inefficient estimates and distorted statistical inferences. Detecting and addressing these variance patterns through diagnostic tests like the Breusch-Pagan test is essential for robust predictive modeling in data science.

Defining Homoscedasticity in Data Science

Homoscedasticity in data science refers to the condition where the variance of the errors or residuals in a regression model is constant across all levels of the independent variables. Under this assumption the model's predictions are equally precise throughout the range of the data, which is critical for valid hypothesis testing and confidence interval estimation. Checking for homoscedasticity usually involves visual inspection of residual plots or statistical tests like the Breusch-Pagan test; a non-significant result means there is no evidence against constant variance, not proof of it.

Understanding Heteroscedasticity in Regression Analysis

Heteroscedasticity in regression analysis occurs when the variance of the residuals or errors is not constant across all levels of the independent variables, violating a key assumption of classical linear regression models. This non-constant error variance leaves the coefficient estimates unbiased but inefficient and biases the conventional standard errors, which undermines the reliability of hypothesis tests and confidence intervals. Detecting heteroscedasticity often involves visual inspection of residual plots or formal tests like the Breusch-Pagan and White tests, and addressing it may require techniques such as weighted least squares or robust standard errors to restore valid inference.
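
The two conditions can be written compactly for a simple linear model. The notation here (y_i, x_i, the coefficients, and the error term) is introduced only for illustration and is not taken from the article's source.

```latex
y_i = \beta_0 + \beta_1 x_i + \varepsilon_i, \qquad \mathbb{E}[\varepsilon_i \mid x_i] = 0

\text{Homoscedasticity:}\quad \operatorname{Var}(\varepsilon_i \mid x_i) = \sigma^2 \quad \text{for every } i

\text{Heteroscedasticity:}\quad \operatorname{Var}(\varepsilon_i \mid x_i) = \sigma_i^2, \quad \text{with } \sigma_i^2 \text{ varying across observations}
```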

Causes of Heteroscedasticity in Real-World Data

Heteroscedasticity in real-world data often arises from factors such as measurement accuracy that varies across different ranges of a dataset and the presence of outliers or extreme values that increase variance unevenly. Economic data frequently exhibit heteroscedasticity because spending varies more among higher-income households than among lower-income ones, while financial time series show volatility clustering, which produces non-constant error variances over time. Identifying these causes is critical in regression analysis to ensure robust model estimation and valid inference.

Visualizing Homoscedasticity and Heteroscedasticity

Visualizing homoscedasticity involves plotting residuals against predicted values to observe a consistent spread of residuals, indicating constant variance. In contrast, heteroscedasticity is identified by a funnel-shaped or patterned spread in residual plots, revealing changing variance across levels of an independent variable. Effective visualization techniques like scatter plots and residual plots are crucial for diagnosing variance patterns in regression analysis.
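
As a numerical companion to a residual plot, the sketch below summarizes what the eye looks for: the spread of residuals within successive bins of fitted values. It is a minimal illustration on simulated data, using only the Python standard library; the helper names `fit_line` and `residual_spread_by_bin`, the bin count, and the simulated coefficients are all assumptions made for this example.

```python
import random
import statistics

def fit_line(x, y):
    """Ordinary least squares for y = a + b*x; returns (intercept, slope)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope

def residual_spread_by_bin(x, y, n_bins=4):
    """Standard deviation of residuals within equal-count bins of fitted values."""
    a, b = fit_line(x, y)
    # Pair each fitted value with its residual, then sort by fitted value.
    pairs = sorted((a + b * xi, yi - (a + b * xi)) for xi, yi in zip(x, y))
    size = len(pairs) // n_bins
    return [
        statistics.pstdev([r for _, r in pairs[k * size:(k + 1) * size]])
        for k in range(n_bins)
    ]

rng = random.Random(0)  # fixed seed so the simulation is repeatable
x = [rng.uniform(1, 10) for _ in range(400)]
y_homo = [2 + 3 * xi + rng.gauss(0, 1.0) for xi in x]         # constant spread
y_hetero = [2 + 3 * xi + rng.gauss(0, 0.5 * xi) for xi in x]  # spread grows with x

spread_homo = residual_spread_by_bin(x, y_homo)
spread_hetero = residual_spread_by_bin(x, y_hetero)
print("homoscedastic bins:  ", [round(s, 2) for s in spread_homo])
print("heteroscedastic bins:", [round(s, 2) for s in spread_hetero])
```

Roughly equal values across bins correspond to the even band of a homoscedastic residual plot, while steadily increasing values correspond to the funnel shape.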

Impact on Model Performance: Homoscedasticity vs Heteroscedasticity

Homoscedasticity, meaning constant error variance across all levels of the independent variables, is the condition under which OLS is the most efficient linear unbiased estimator and its conventional standard errors are valid. Heteroscedasticity does not bias the coefficient estimates themselves, but it makes them less precise than they could be and biases the conventional standard errors, so test statistics and confidence intervals can be too optimistic or too conservative. Detecting and addressing heteroscedasticity through techniques like weighted least squares or robust standard errors is crucial for maintaining inference validity.
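
To make the effect on standard errors concrete, the following sketch compares the classical OLS standard error of a slope with White's heteroscedasticity-consistent (HC0) version on simulated data. The function name `slope_standard_errors`, the sample size, and the choice of an error spread proportional to x squared are assumptions chosen for illustration, not values from the article.

```python
import math
import random
import statistics

def slope_standard_errors(x, y):
    """Return (slope, classical SE, HC0 robust SE) for y = a + b*x fitted by OLS."""
    n = len(x)
    mx, my = statistics.fmean(x), statistics.fmean(y)
    dx = [xi - mx for xi in x]
    sxx = sum(d * d for d in dx)
    slope = sum(d * (yi - my) for d, yi in zip(dx, y)) / sxx
    intercept = my - slope * mx
    resid = [yi - intercept - slope * xi for xi, yi in zip(x, y)]
    # Classical formula: one pooled error variance for every observation.
    s2 = sum(e * e for e in resid) / (n - 2)
    se_classical = math.sqrt(s2 / sxx)
    # White (HC0) formula: each observation contributes its own squared residual.
    se_robust = math.sqrt(sum((d * e) ** 2 for d, e in zip(dx, resid))) / sxx
    return slope, se_classical, se_robust

rng = random.Random(1)  # fixed seed so the simulation is repeatable
x = [rng.uniform(1, 10) for _ in range(2000)]
# Assumed data-generating process: error spread grows with x squared.
y = [2 + 3 * xi + rng.gauss(0, 0.2 * xi ** 2) for xi in x]

slope, se_classical, se_robust = slope_standard_errors(x, y)
print(f"slope={slope:.3f}  classical SE={se_classical:.4f}  HC0 SE={se_robust:.4f}")
```

In this setup the classical formula understates the uncertainty of the slope, which is why the robust figure comes out larger; with other variance patterns the classical figure can instead be too large.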

Diagnostic Tests for Detecting Heteroscedasticity

Diagnostic tests for detecting heteroscedasticity in data science include the Breusch-Pagan test, White test, and Goldfeld-Quandt test, each evaluating whether the variance of residuals is constant across observations. The Breusch-Pagan test regresses the squared residuals on the independent variables and compares the resulting statistic to a chi-square distribution to assess whether the error variance depends on those variables. The White test adds squares and cross-products of the regressors to that auxiliary regression, so it can detect heteroscedasticity without specifying a particular form. The Goldfeld-Quandt test orders the observations by a variable suspected of driving the variance, splits them into two subsets, and compares the residual variances of the subsets with an F-test.
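
The Breusch-Pagan idea can be sketched in a few lines for the single-regressor case. This is a hand-rolled illustration of the studentized (Koenker) form of the statistic, LM = n times the R-squared of the auxiliary regression, and not a replacement for a tested library routine; the names `ols_fit` and `breusch_pagan` and the simulated data are assumptions for this example.

```python
import math
import random
import statistics

def ols_fit(x, y):
    """Fit y = a + b*x by OLS; return (fitted values, R-squared)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    fitted = [intercept + slope * xi for xi in x]
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return fitted, 1.0 - ss_res / ss_tot

def breusch_pagan(x, y):
    """Studentized Breusch-Pagan LM statistic and p-value for one regressor."""
    fitted, _ = ols_fit(x, y)
    squared_resid = [(yi - fi) ** 2 for yi, fi in zip(y, fitted)]
    # Auxiliary regression: do the squared residuals depend on x?
    _, r2_aux = ols_fit(x, squared_resid)
    lm = len(x) * max(r2_aux, 0.0)  # guard against tiny negative rounding error
    # With one regressor, LM is compared to chi-square(1), whose upper-tail
    # probability equals erfc(sqrt(lm / 2)).
    return lm, math.erfc(math.sqrt(lm / 2.0))

rng = random.Random(2)  # fixed seed so the simulation is repeatable
x = [rng.uniform(1, 10) for _ in range(500)]
y_homo = [2 + 3 * xi + rng.gauss(0, 1.0) for xi in x]
y_hetero = [2 + 3 * xi + rng.gauss(0, 0.5 * xi) for xi in x]

lm_homo, p_homo = breusch_pagan(x, y_homo)
lm_hetero, p_hetero = breusch_pagan(x, y_hetero)
print(f"constant variance: LM={lm_homo:.2f}, p={p_homo:.3f}")
print(f"growing variance:  LM={lm_hetero:.2f}, p={p_hetero:.3g}")
```

A small p-value is evidence that the error variance depends on x. With several regressors the auxiliary regression includes all of them and the chi-square degrees of freedom equal their count, which this single-regressor sketch does not cover.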

Techniques to Handle Heteroscedasticity in Data Science

Techniques to handle heteroscedasticity in data science include weighted least squares (WLS) regression, which assigns each observation a weight inversely proportional to its error variance so that noisier observations count for less. Transformations of the dependent variable such as log, square root, or Box-Cox can stabilize the variance and reduce heteroscedasticity. Robust (heteroscedasticity-consistent) standard errors leave the OLS coefficient estimates unchanged but provide valid standard errors for inference, while generalized least squares (GLS) models the error variance structure directly to recover efficient estimates.
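
The efficiency gain from WLS can be illustrated with a small simulation. The sketch below assumes the error standard deviation is proportional to x, so weights of 1/x² are inversely proportional to the error variance; in real data the variance structure is not known and has to be estimated or argued for. The function name `wls_line` and all simulation settings are assumptions for this example.

```python
import random
import statistics

def wls_line(x, y, w):
    """Weighted least squares for y = a + b*x; returns (intercept, slope)."""
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw
    my = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    return my - slope * mx, slope

rng = random.Random(7)  # fixed seed so the simulation is repeatable
ols_slopes, wls_slopes = [], []
for _ in range(300):
    x = [rng.uniform(1, 10) for _ in range(100)]
    # Assumed data-generating process: true slope 3, error sd proportional to x.
    y = [2 + 3 * xi + rng.gauss(0, 0.5 * xi) for xi in x]
    ols_slopes.append(wls_line(x, y, [1.0] * len(x))[1])            # equal weights = OLS
    wls_slopes.append(wls_line(x, y, [1.0 / xi ** 2 for xi in x])[1])

print("OLS slope: mean", round(statistics.fmean(ols_slopes), 3),
      "sd", round(statistics.stdev(ols_slopes), 3))
print("WLS slope: mean", round(statistics.fmean(wls_slopes), 3),
      "sd", round(statistics.stdev(wls_slopes), 3))
```

Both estimators center on the true slope, which reflects that OLS stays unbiased under heteroscedasticity, but the WLS estimates vary less from sample to sample when the weights match the variance pattern.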

Case Studies: Homoscedasticity and Heteroscedasticity in Practice

In practice, roughly constant error variance across all levels of the independent variables supports reliable regression inference in fields such as finance and healthcare. Heteroscedasticity, often observed in real estate price modeling or income prediction, signals varying error variances that necessitate techniques like weighted least squares or robust standard errors to achieve accurate inference. Understanding these variance patterns is crucial for selecting appropriate diagnostic tests and adjusting models to avoid misleading standard errors and incorrect conclusions.

Best Practices for Ensuring Reliable Regression Analysis

Ensuring reliable regression analysis involves checking for homoscedasticity, where the variance of errors remains constant across all levels of the independent variables, because this is what validates the conventional standard errors and confidence intervals. When heteroscedasticity is present, robust standard errors should be used to correct the biased standard errors, or weighted least squares should be used to recover efficient estimates. Diagnostic tools like the Breusch-Pagan test and residual plots help detect heteroscedasticity early, improving the reliability of inference.


techiny.com
