Quantum computing leverages the probabilistic nature of quantum states: measurement outcomes are not fixed but occur with probabilities given by the squared magnitudes of the wavefunction's amplitudes. Unlike a classical deterministic output, a quantum measurement collapses the superposition into one of the possible states, which affects both algorithm success rates and error rates. Because of this intrinsic randomness, quantum programs are typically run many times and their outcomes sampled to extract a reliable solution, a fundamental difference from classical deterministic computation.

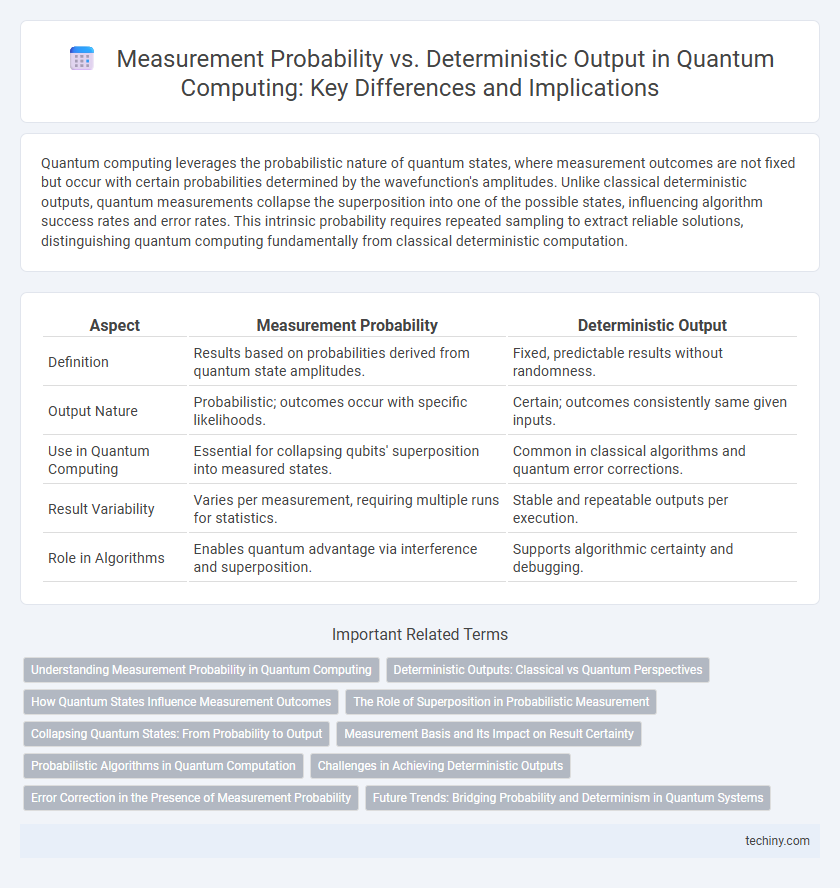

Table of Comparison

| Aspect | Measurement Probability | Deterministic Output |
|---|---|---|
| Definition | Results follow probabilities derived from quantum state amplitudes. | Fixed, predictable results with no randomness. |
| Output Nature | Probabilistic; each outcome occurs with a specific likelihood. | Certain; the same inputs always give the same outcome. |
| Use in Quantum Computing | Governs how measurement collapses a qubit's superposition into a definite state. | Common in classical algorithms and in quantum error correction. |
| Result Variability | Varies from one measurement to the next, so multiple runs are needed to build statistics. | Stable and repeatable from one execution to the next. |
| Role in Algorithms | Quantum algorithms shape these probabilities through superposition and interference to gain an advantage. | Supports algorithmic certainty and straightforward debugging. |

Understanding Measurement Probability in Quantum Computing

Measurement probability in quantum computing quantifies the likelihood of obtaining a specific outcome when a qubit in superposition is observed. Measurement collapses the qubit into a definite state, and the probability of each outcome equals the squared magnitude of the corresponding amplitude in the state vector (the Born rule). For a qubit in the state α|0⟩ + β|1⟩, the outcome 0 occurs with probability |α|² and the outcome 1 with probability |β|², where |α|² + |β|² = 1. This inherent uncertainty, absent from deterministic classical outputs, is crucial for interpreting quantum algorithm results and for designing error mitigation strategies in quantum circuits.
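The Born rule can be illustrated with a short simulation. The sketch below uses only the Python standard library; the function names `born_probabilities` and `sample_measurements`, the example amplitudes, and the shot count are hypothetical choices for illustration, not part of any quantum SDK. It computes the outcome probabilities of a single qubit and then draws simulated measurements to show how observed frequencies approach those probabilities.

```python
import math
import random
from collections import Counter


def born_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Return (P(0), P(1)) for the single-qubit state alpha|0> + beta|1>."""
    p0 = abs(alpha) ** 2  # Born rule: probability = |amplitude|^2
    p1 = abs(beta) ** 2
    if not math.isclose(p0 + p1, 1.0, rel_tol=1e-9):
        raise ValueError(f"state is not normalized: total probability {p0 + p1}")
    return p0, p1


def sample_measurements(alpha: complex, beta: complex, shots: int,
                        rng: random.Random) -> Counter:
    """Simulate `shots` independent computational-basis measurements."""
    p0, _ = born_probabilities(alpha, beta)
    return Counter(0 if rng.random() < p0 else 1 for _ in range(shots))


# Amplitudes sqrt(0.8) and i*sqrt(0.2): the phase i does not change the probabilities.
alpha = math.sqrt(0.8)
beta = 1j * math.sqrt(0.2)
print(born_probabilities(alpha, beta))
counts = sample_measurements(alpha, beta, shots=10_000, rng=random.Random(42))
print(counts[0] / 10_000, counts[1] / 10_000)
```

With 10,000 shots the observed fraction of 0s should land close to 0.8 but will rarely match it exactly; that residual spread is why quantum results are reported as statistics over many runs.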

Deterministic Outputs: Classical vs Quantum Perspectives

Deterministic outputs in classical computing come from fixed algorithms that produce the same result every time they receive the same input. Quantum computations, in contrast, rarely yield deterministic outputs: measurement outcomes are probabilistic whenever the measured state is a superposition, and entanglement can correlate those outcomes across qubits. A measurement is certain only when the qubit is already in one of the measurement's basis states. Where a classical bit is always definitely 0 or 1, a qubit's state is described by probability amplitudes, and only the probabilities of its outcomes are fixed.
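The contrast can be made concrete with a toy comparison. This sketch assumes an ideal, noise-free qubit simulated by the hypothetical helpers `classical_and` and `measure_qubit`. A classical gate repeated 1,000 times yields a single value, a qubit prepared in the basis state |0⟩ also yields a single value, and a qubit in the equal superposition (|0⟩ + |1⟩)/√2 yields both outcomes.

```python
import math
import random


def classical_and(a: int, b: int) -> int:
    """A classical logic gate: identical inputs always give the identical output."""
    return a & b


def measure_qubit(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Measure alpha|0> + beta|1> in the computational basis (Born rule)."""
    return 0 if rng.random() < abs(alpha) ** 2 else 1


rng = random.Random(7)
runs = 1_000

classical_results = {classical_and(1, 1) for _ in range(runs)}
basis_state_results = {measure_qubit(1, 0, rng) for _ in range(runs)}  # |0>: certain outcome
plus_state_results = {                                                 # (|0> + |1>)/sqrt(2)
    measure_qubit(1 / math.sqrt(2), 1 / math.sqrt(2), rng) for _ in range(runs)
}

print(classical_results, basis_state_results, plus_state_results)  # {1} {0} {0, 1}
```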

How Quantum States Influence Measurement Outcomes

Quantum states can exist in superposition, a weighted combination of basis states, so measuring a qubit produces outcomes with certain probabilities rather than a deterministic output. The probability of each outcome is the squared magnitude of the amplitude of the corresponding basis state, and together these probabilities form the state's outcome distribution, which always sums to 1. On measurement the wavefunction collapses to one definite outcome, so the properties of the quantum state directly determine the statistics of the results.
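For more than one qubit, the same rule assigns a probability to every bitstring. This sketch assumes a state written as a dictionary from basis bitstrings to amplitudes, a representation chosen for readability rather than taken from any library; `outcome_distribution` and `sample` are hypothetical names. The example is the two-qubit Bell state (|00⟩ + |11⟩)/√2, whose individual outcomes are random but always agree.

```python
import math
import random
from collections import Counter


def outcome_distribution(state: dict[str, complex]) -> dict[str, float]:
    """Map each basis bitstring to |amplitude|^2 (the Born rule)."""
    probs = {bits: abs(amp) ** 2 for bits, amp in state.items()}
    total = sum(probs.values())
    if not math.isclose(total, 1.0, rel_tol=1e-9):
        raise ValueError(f"state is not normalized (total probability {total})")
    return probs


def sample(state: dict[str, complex], shots: int, rng: random.Random) -> Counter:
    """Draw `shots` simulated measurement results from the outcome distribution."""
    probs = outcome_distribution(state)
    outcomes = list(probs)
    weights = [probs[o] for o in outcomes]
    return Counter(rng.choices(outcomes, weights=weights, k=shots))


# Bell state: "01" and "10" have zero amplitude, so the two qubits always agree.
bell = {"00": 1 / math.sqrt(2), "11": 1 / math.sqrt(2)}
print(outcome_distribution(bell))
print(sample(bell, shots=1_000, rng=random.Random(1)))
```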

The Role of Superposition in Probabilistic Measurement

Superposition allows a quantum bit (qubit) to exist in a combination of the |0⟩ and |1⟩ states at once, which is why measuring it yields probabilities rather than a deterministic outcome. The probability of each result is the squared magnitude of the corresponding component of the qubit's state vector. Quantum algorithms combine superposition with interference, which can cancel or reinforce amplitudes, and for certain problems this lets them outperform the best known classical deterministic methods.
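Superposition and interference can both be seen with a single gate. The sketch below assumes a qubit stored as a pair of amplitudes and uses hypothetical helper names; it applies the standard Hadamard gate H to |0⟩ to create an equal superposition, then applies H again. The two paths to |1⟩ carry opposite signs and cancel, so the outcome becomes certain again.

```python
import math

INV_SQRT2 = 1 / math.sqrt(2)


def hadamard(state: tuple[float, float]) -> tuple[float, float]:
    """Apply H to a single-qubit state given as (amp0, amp1)."""
    a0, a1 = state
    return (INV_SQRT2 * (a0 + a1), INV_SQRT2 * (a0 - a1))


def probabilities(state: tuple[float, float]) -> tuple[float, ...]:
    """Born-rule probabilities for the outcomes 0 and 1."""
    return tuple(abs(a) ** 2 for a in state)


zero = (1.0, 0.0)
plus = hadamard(zero)   # equal superposition of |0> and |1>
back = hadamard(plus)   # the two contributions to |1> cancel (destructive interference)

print(probabilities(plus))  # both close to 0.5
print(probabilities(back))  # close to (1.0, 0.0): a certain outcome again
```

This cancellation is the interference that quantum algorithms exploit; a classical fair coin flip applied twice would stay random.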

Collapsing Quantum States: From Probability to Output

Quantum computing relies on the collapse of quantum states: a qubit in superposition has measurement probabilities rather than a deterministic outcome. Upon measurement, the state collapses to an eigenstate of the measured observable, with a probability equal to the squared magnitude of that component's amplitude. Immediately measuring the collapsed state again in the same basis returns the same result. This collapse converts probabilistic quantum information into a classical binary output, which is how quantum algorithms extract meaningful solutions from inherently uncertain quantum phenomena.
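Collapse can be modeled as a projective measurement in the computational basis. In this sketch, which assumes an ideal noise-free measurement and uses the hypothetical function `measure_and_collapse`, the function returns both the classical bit and the post-measurement state; measuring that collapsed state again returns the first outcome every time.

```python
import math
import random


def measure_and_collapse(state: tuple[float, float],
                         rng: random.Random) -> tuple[int, tuple[float, float]]:
    """Projectively measure (amp0, amp1) in the computational basis.

    Returns the classical bit and the post-measurement state. The collapsed
    state is the basis state matching the outcome; any global phase is
    dropped because it has no observable effect.
    """
    p0 = abs(state[0]) ** 2
    if rng.random() < p0:
        return 0, (1.0, 0.0)
    return 1, (0.0, 1.0)


rng = random.Random(3)
state = (math.sqrt(0.3), math.sqrt(0.7))  # P(0) = 0.3, P(1) = 0.7 before measurement
first, collapsed = measure_and_collapse(state, rng)

# Re-measuring the collapsed state in the same basis always repeats the first result.
repeats = [measure_and_collapse(collapsed, rng)[0] for _ in range(100)]
print(first, collapsed, set(repeats))
```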

Measurement Basis and Its Impact on Result Certainty

The measurement basis strongly influences the probability distribution of observed outcomes, because the same state collapses differently depending on the basis chosen. When the state is one of the basis vectors of the chosen measurement, the outcome is certain: the state (|0⟩ + |1⟩)/√2 always yields "+" when measured in the X basis, but gives 0 or 1 with equal probability in the standard (Z) basis. Changing the measurement basis can therefore shift the outcome distribution from maximally uncertain to nearly deterministic, directly affecting the reliability and interpretability of quantum computations.
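The basis dependence can be checked numerically. This sketch assumes single-qubit states stored as amplitude pairs and uses hypothetical helper names; measuring in the X basis is computed as projection onto |+⟩ and |−⟩, which is equivalent to applying a Hadamard gate before a standard measurement.

```python
import math

INV_SQRT2 = 1 / math.sqrt(2)


def z_basis_probabilities(state: tuple[complex, complex]) -> tuple[float, float]:
    """P(0), P(1) when measuring (amp0, amp1) in the computational (Z) basis."""
    a0, a1 = state
    return abs(a0) ** 2, abs(a1) ** 2


def x_basis_probabilities(state: tuple[complex, complex]) -> tuple[float, float]:
    """P(+), P(-) when measuring (amp0, amp1) in the X basis.

    Uses <+|psi> = (a0 + a1)/sqrt(2) and <-|psi> = (a0 - a1)/sqrt(2),
    the same amplitudes a Hadamard gate would produce before a Z measurement.
    """
    a0, a1 = state
    return abs(INV_SQRT2 * (a0 + a1)) ** 2, abs(INV_SQRT2 * (a0 - a1)) ** 2


plus = (INV_SQRT2, INV_SQRT2)
print(z_basis_probabilities(plus))  # roughly (0.5, 0.5): maximally uncertain
print(x_basis_probabilities(plus))  # roughly (1.0, 0.0): certain outcome "+"
```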

Probabilistic Algorithms in Quantum Computation

Probabilistic algorithms in quantum computation exploit superposition, letting a register of qubits carry amplitudes for many states at once and producing measurement probabilities instead of deterministic outputs. These algorithms use interference to amplify the probability of correct results and suppress incorrect ones, and they often need repeated runs to infer the answer statistically. For specific problems they offer large speedups over known classical algorithms: a superpolynomial speedup for factoring (Shor's algorithm) and a quadratic speedup for unstructured search (Grover's algorithm).
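Amplitude amplification can be sketched with a classical simulation of Grover's search. The sketch assumes one marked item among `n_items`, real amplitudes, and the textbook oracle (sign flip) and diffusion (reflection about the mean) steps. It also computes how many independent repetitions are needed so that at least one run succeeds with probability at least a chosen target, using k = ⌈ln(1 − target) / ln(1 − p)⌉. The function names, the 8-item example, and the 0.99 target are illustrative assumptions.

```python
import math


def grover_success_probability(n_items: int, marked: int, iterations: int) -> float:
    """Classically simulate Grover's search and return P(measuring the marked item)."""
    amps = [1 / math.sqrt(n_items)] * n_items   # uniform superposition over all items
    for _ in range(iterations):
        amps[marked] = -amps[marked]            # oracle: flip the marked item's sign
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]     # diffusion: reflect every amplitude about the mean
    return amps[marked] ** 2


def runs_needed(p_success: float, target: float = 0.99) -> int:
    """Independent repetitions so that at least one succeeds with probability >= target."""
    if p_success <= 0.0:
        raise ValueError("p_success must be positive")
    if p_success >= 1.0:
        return 1
    return math.ceil(math.log(1 - target) / math.log(1 - p_success))


n = 8
k = math.floor(math.pi / 4 * math.sqrt(n))     # standard near-optimal iteration count (2 here)
p = grover_success_probability(n, marked=5, iterations=k)
print(f"{k} iterations: P(success) = {p:.3f}, runs for a 99% chance of success = {runs_needed(p)}")
```

For 8 items, two Grover iterations raise the marked item's probability from 1/8 to about 0.945, so two repetitions already exceed the 0.99 target. A classical search over N unsorted items needs on the order of N checks, while Grover's algorithm needs on the order of √N iterations.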

Challenges in Achieving Deterministic Outputs

Quantum computing inherently relies on probabilistic measurement outcomes, so deterministic outputs are difficult to achieve consistently. Decoherence and noise further increase error rates, lowering measurement fidelity and demanding sophisticated error correction techniques. Scaling quantum processors while keeping precise control over every qubit is essential for reducing this uncertainty and approaching deterministic computation in practical applications.
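A simple noise model shows how decoherence and readout errors erode even an ideally deterministic outcome. This sketch assumes only energy relaxation with an exponential T1 decay during an idle period, followed by a symmetric readout error; the T1 value, the error rate, and the function name `prob_read_one` are hypothetical, chosen for illustration rather than taken from any device.

```python
import math


def prob_read_one(idle_us: float, t1_us: float, p_flip: float) -> float:
    """P(reading 1) for a qubit prepared in |1>, under a simple assumed noise model.

    Model: energy relaxation (amplitude damping) with time constant T1 during an
    idle period, then a symmetric readout error that flips the reported bit with
    probability p_flip.
    """
    p_still_one = math.exp(-idle_us / t1_us)   # excited-state population after the idle time
    return p_still_one * (1 - p_flip) + (1 - p_still_one) * p_flip


T1_US = 100.0    # hypothetical relaxation time in microseconds
P_FLIP = 0.02    # hypothetical readout error rate
for idle in (0.0, 10.0, 50.0):
    print(f"idle {idle:5.1f} us -> P(read 1) = {prob_read_one(idle, T1_US, P_FLIP):.3f}")
```

Under these assumed parameters, the ideally certain outcome 1 is read correctly only 98% of the time with no idle period, and only about 60% of the time after 50 µs.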

Error Correction in the Presence of Measurement Probability

Measurement probability introduces inherent uncertainty into quantum computations, and hardware noise adds errors on top of it, so reliable output states require robust quantum error correction. Quantum error correction codes such as the surface code encode each logical qubit in many physical qubits and use repeated syndrome (parity-check) measurements to detect and correct errors, including faulty measurements, without directly measuring the encoded information. Improving measurement fidelity and implementing fault-tolerant protocols remain critical for lowering error rates and obtaining reliable, effectively deterministic outputs from quantum computations.
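The surface code itself is too large for a short example, so this sketch substitutes a much simpler scheme: a three-qubit repetition code against bit flips, decoded by majority vote. It assumes independent bit-flip errors with probability p on each physical qubit and ignores phase errors and the syndrome-measurement circuitry, so it only illustrates the core idea of redundancy; the function names are hypothetical. The logical error rate is 3p²(1 − p) + p³, which is smaller than p whenever p < 1/2.

```python
import random


def logical_error_exact(p: float) -> float:
    """Majority vote over 3 copies fails when 2 or 3 copies flip."""
    return 3 * p**2 * (1 - p) + p**3


def logical_error_monte_carlo(p: float, trials: int, rng: random.Random) -> float:
    """Estimate the same failure rate by sampling independent bit flips."""
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))  # number of flipped physical qubits
        if flips >= 2:                                    # majority vote decodes the wrong value
            failures += 1
    return failures / trials


rng = random.Random(0)
for p in (0.01, 0.1, 0.3):
    estimate = logical_error_monte_carlo(p, trials=50_000, rng=rng)
    print(f"p = {p:.2f}: exact logical error {logical_error_exact(p):.5f}, simulated {estimate:.5f}")
```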

Future Trends: Bridging Probability and Determinism in Quantum Systems

Future work in quantum computing aims to bridge measurement probability and deterministic output through better quantum error correction and hybrid quantum-classical algorithms. Advances in qubit coherence and noise reduction are critical for making measurements more reliable and predictable. Machine learning techniques are also being explored for tasks such as calibration and error handling, which may make quantum outcomes more predictable and support practical applications in cryptography and optimization.

Measurement Probability vs Deterministic Output Infographic

techiny.com
