Quantum bits, or qubits, differ fundamentally from classical bits: a qubit can exist in a superposition of the states 0 and 1, described by two complex amplitudes, until it is measured. Unlike classical bits, which always hold a single binary value, qubits exploit quantum phenomena such as superposition, entanglement, and interference, which persist only while the system remains coherent. For certain problems, such as factoring large integers, this lets quantum algorithms run far faster than the best known classical algorithms.

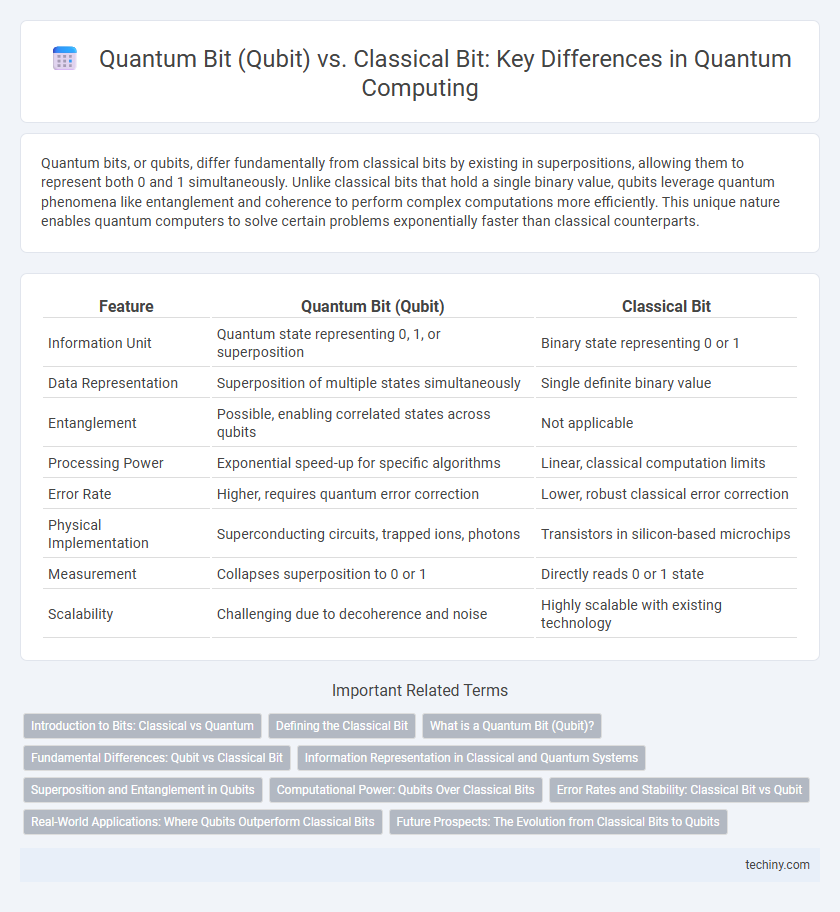

Table of Comparison

| Feature | Quantum Bit (Qubit) | Classical Bit |
|---|---|---|
| Information Unit | Quantum state that can be 0, 1, or a superposition of both | Binary state representing 0 or 1 |
| Data Representation | Superposition described by complex amplitudes for 0 and 1 | Single definite binary value |
| Entanglement | Possible, enabling correlated states across qubits | Not applicable |
| Processing Power | Large (in some cases exponential) speed-ups for specific algorithms, such as factoring | No quantum speed-up; bounded by classical algorithmic complexity |
| Error Rate | Higher; requires quantum error correction | Very low; mature error-correction techniques |
| Physical Implementation | Superconducting circuits, trapped ions, photons | Transistors in silicon-based microchips |
| Measurement | Collapses the superposition to 0 or 1, with probabilities set by the amplitudes | Reads the stored 0 or 1 without disturbing it |
| Scalability | Challenging due to decoherence and noise | Highly scalable with existing technology |

Introduction to Bits: Classical vs Quantum

Classical bits represent information as either 0 or 1, forming the foundation of traditional digital computing. Quantum bits, or qubits, exploit quantum phenomena such as superposition and entanglement: a single qubit can be in a superposition of 0 and 1, and a register of n qubits is described by 2^n amplitudes. The fundamental difference is that quantum algorithms can manipulate these amplitudes through interference, which for specific problems yields speed-ups that no known classical method matches.

Defining the Classical Bit

A classical bit is the fundamental unit of information in traditional computing: at any given time it holds exactly one value, 0 or 1. Classical computers store bits as physical states, such as voltage levels in transistors or charge in memory cells, and process them with Boolean logic. Unlike a qubit, a classical bit cannot be in a superposition, so it is limited to two discrete states and cannot be entangled with other bits.
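As a point of comparison for the qubit examples that follow, the sketch below models a classical bit in Python. The `ClassicalBit` class is a hypothetical illustration, not part of any library; it captures the two properties described above: the bit always holds exactly 0 or 1, and reading it is deterministic and leaves it unchanged.

```python
from dataclasses import dataclass


@dataclass
class ClassicalBit:
    """Hypothetical model of a classical bit: always exactly 0 or 1."""

    value: int = 0

    def __post_init__(self) -> None:
        if self.value not in (0, 1):
            raise ValueError("a classical bit holds only 0 or 1")

    def flip(self) -> None:
        """Logical NOT: 0 becomes 1 and 1 becomes 0."""
        self.value ^= 1

    def read(self) -> int:
        """Reading is deterministic and does not change the stored value."""
        return self.value


bit = ClassicalBit(0)
bit.flip()
print(bit.read(), bit.read())  # 1 1 (repeated reads always agree)
```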

What is a Quantum Bit (Qubit)?

A quantum bit (qubit) is the fundamental unit of quantum information. Unlike a classical bit, which stores either 0 or 1, a qubit can exist in a superposition of both states, written α|0⟩ + β|1⟩, where the complex amplitudes α and β satisfy |α|² + |β|² = 1. Qubits rely on quantum-mechanical principles such as superposition, entanglement, and interference, which must be preserved through coherence. These properties let quantum computers tackle problems in cryptography, optimization, and simulation, some of which are believed to be intractable for classical computers.
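The definition above can be made concrete with a minimal sketch that stores a single qubit as a pair of complex amplitudes (α, β). The helper names `make_qubit` and `probabilities` are hypothetical; the sketch normalizes the amplitudes so that |α|² + |β|² = 1 and applies the Born rule, under which measuring gives 0 with probability |α|² and 1 with probability |β|².

```python
import math


def make_qubit(alpha: complex, beta: complex):
    """Return the normalized state alpha|0> + beta|1> as a pair of amplitudes."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    if norm == 0:
        raise ValueError("at least one amplitude must be non-zero")
    return (alpha / norm, beta / norm)


def probabilities(state):
    """Born rule: P(0) = |alpha|^2 and P(1) = |beta|^2."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)


# Equal superposition: both amplitudes are 1/sqrt(2).
plus = make_qubit(1, 1)
print(probabilities(plus))  # approximately (0.5, 0.5)

# Amplitudes may be complex; this relative phase leaves the probabilities unchanged.
plus_i = make_qubit(1, 1j)
print(probabilities(plus_i))  # also approximately (0.5, 0.5)
```

A qubit therefore carries more than a probability: two states with the same measurement statistics can differ in phase, and that phase matters once gates make amplitudes interfere.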

Fundamental Differences: Qubit vs Classical Bit

A quantum bit (qubit) differs fundamentally from a classical bit because it can exist in a superposition of 0 and 1, whereas a classical bit always holds exactly one of the two values. Qubits use quantum-mechanical effects such as entanglement and interference, which allow quantum computers to solve certain problems, though not all, much faster than classical computers. Measuring a qubit is probabilistic: it yields 0 or 1 with probabilities given by the squared magnitudes of its amplitudes, whereas reading a classical bit deterministically returns its stored value. The core advantage of quantum computing comes from superposition, entanglement, and interference working together, not from this randomness alone.
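To illustrate the probabilistic measurement described above, here is a small classical simulation. The `measure` function is a hypothetical helper that samples an outcome with the Born-rule probabilities and returns the collapsed state; a fixed seed keeps runs reproducible. Measuring many freshly prepared superposition states gives roughly even counts, while re-measuring an already collapsed state always repeats the first result.

```python
import random


def measure(state, rng):
    """Measure a qubit (alpha, beta) in the computational basis.

    Returns (outcome, post-measurement state): 0 with probability |alpha|^2,
    otherwise 1, and the state collapses to |0> or |1> accordingly.
    """
    alpha, _beta = state
    if rng.random() < abs(alpha) ** 2:
        return 0, (1 + 0j, 0j)
    return 1, (0j, 1 + 0j)


rng = random.Random(42)  # fixed seed so the run is reproducible
plus = (2 ** -0.5, 2 ** -0.5)  # equal superposition of |0> and |1>

# Measuring many freshly prepared superposition states gives roughly 50/50 outcomes.
counts = [0, 0]
for _ in range(10_000):
    outcome, _ = measure(plus, rng)
    counts[outcome] += 1
print(counts)  # roughly [5000, 5000]; exact numbers depend on the seed

# After one measurement the state has collapsed, so measuring again repeats the result.
first, collapsed = measure(plus, rng)
second, _ = measure(collapsed, rng)
print(first == second)  # True
```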

Information Representation in Classical and Quantum Systems

Quantum bits (qubits) represent information differently from classical bits. A register of n classical bits holds exactly one of its 2^n possible values at any time, whereas the state of n qubits is a superposition described by 2^n complex amplitudes, one for each of those values, for as long as coherence is maintained. Entanglement means this joint state generally cannot be broken down into independent states for each qubit. Measuring the register, however, still returns only n classical bits, so quantum algorithms must use interference to make the desired answer likely. This richer state space underpins the computational power of quantum systems on suitable problems.
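The growth from n bits to 2^n amplitudes can be seen by combining single-qubit states with the tensor (Kronecker) product. In this minimal sketch, `kron` is a hypothetical plain-Python helper acting on lists of amplitudes, and each qubit is placed in an equal superposition; the basis ordering assumes the first qubit is the most significant bit.

```python
from itertools import product


def kron(a, b):
    """Tensor (Kronecker) product of two state vectors given as lists of amplitudes."""
    return [x * y for x in a for y in b]


plus = [2 ** -0.5, 2 ** -0.5]  # one qubit in equal superposition

state = [1.0]  # start from an empty register
for n in range(1, 4):
    state = kron(state, plus)
    print(f"{n} qubit(s) -> {len(state)} amplitudes")
# 1 qubit(s) -> 2 amplitudes
# 2 qubit(s) -> 4 amplitudes
# 3 qubit(s) -> 8 amplitudes

# Each amplitude belongs to one 3-bit basis string; here all are equally weighted.
for bits, amp in zip(product("01", repeat=3), state):
    print("".join(bits), round(abs(amp) ** 2, 3))
# prints 000 0.125, 001 0.125, ..., 111 0.125 (each outcome has probability 1/8)
```

Although eight amplitudes describe the register, a single measurement returns just one of the eight 3-bit strings.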

Superposition and Entanglement in Qubits

Quantum bits (qubits) use superposition to exist in a combination of the states 0 and 1 until they are measured. Entanglement, a uniquely quantum property, links qubits so that their measurement outcomes are correlated regardless of the distance between them. These correlations can be stronger than any classical system can reproduce, as Bell-inequality experiments demonstrate, although they cannot be used to send information faster than light. Together, superposition and entanglement give quantum computers computational resources that traditional binary systems lack.
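The standard way to create entanglement between two qubits is a Hadamard gate followed by a CNOT gate, which produces the Bell state (|00⟩ + |11⟩)/√2. The sketch below simulates this on an ordinary computer using a four-entry amplitude list ordered |00⟩, |01⟩, |10⟩, |11⟩; the function names are hypothetical. Sampling shows that the two qubits' results always agree, even though each individual result is random.

```python
import math
import random

SQRT1_2 = 1 / math.sqrt(2)


def hadamard_on_first(state):
    """Apply a Hadamard gate to the first qubit of [a00, a01, a10, a11]."""
    a00, a01, a10, a11 = state
    return [SQRT1_2 * (a00 + a10), SQRT1_2 * (a01 + a11),
            SQRT1_2 * (a00 - a10), SQRT1_2 * (a01 - a11)]


def cnot(state):
    """CNOT with the first qubit as control: swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def sample(state, rng):
    """Measure both qubits and return the outcome as a 2-character bit string."""
    probs = [abs(a) ** 2 for a in state]
    index = rng.choices(range(4), weights=probs)[0]
    return format(index, "02b")


bell = cnot(hadamard_on_first([1, 0, 0, 0]))  # start in |00>
print([round(a, 3) for a in bell])  # [0.707, 0.0, 0.0, 0.707]

rng = random.Random(7)
outcomes = [sample(bell, rng) for _ in range(1000)]
print(set(outcomes))  # only '00' and '11' appear: the two qubits always agree
```

Each qubit on its own still looks like a fair coin; the correlation only becomes visible when both sets of results are compared, which is why it cannot carry a message.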

Computational Power: Qubits Over Classical Bits

Qubits harness superposition and entanglement, so a quantum computer acts on a state that spans many basis states at once. This is not the same as running every possible computation in parallel: a measurement returns only one outcome, and useful algorithms rely on interference to amplify the correct answers. Classical computers also process data in parallel, but simulating n qubits by brute force on classical hardware requires storing 2^n amplitudes. For specific algorithms, such as Shor's algorithm for factoring, this yields speed-ups beyond any known classical method; for optimization problems, the size of any quantum advantage remains an open research question.
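One way to quantify the size of a qubit register's state is to compute the memory a classical computer needs to store it in full. This sketch assumes 16 bytes per amplitude (a double-precision complex number); the function name `statevector_bytes` is hypothetical. It shows why brute-force classical simulation becomes impractical at around 50 qubits, though it does not by itself prove a quantum speed-up for any particular problem.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed for a full state vector of 2**n complex amplitudes.

    The default of 16 bytes per amplitude assumes double-precision complex
    numbers (two 8-byte floats); other precisions scale the result linearly.
    """
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (10, 30, 50):
    print(f"{n} qubits -> {statevector_bytes(n):,} bytes")
# 10 qubits -> 16,384 bytes
# 30 qubits -> 17,179,869,184 bytes
# 50 qubits -> 18,014,398,509,481,984 bytes
# i.e. 16 KiB, 16 GiB and 16 PiB respectively
```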

Error Rates and Stability: Classical Bit vs Qubit

Classical bits exhibit low error rates and high stability thanks to mature semiconductor technology, reliably holding 0 or 1 with little interference. Qubits are far more fragile: interactions with their environment cause decoherence, which destroys superposition and entanglement, so today's qubits have high error rates and remain coherent only for short times. Ongoing advances in quantum error correction codes and longer qubit coherence times aim to close this gap, which is essential for practical quantum computing applications.
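Error correction works by spreading one logical bit across several physical ones. The sketch below shows the simplest case, the classical 3-bit repetition code with majority-vote decoding, assuming each copy flips independently with probability p. Decoding fails only if two or three copies flip, so the logical error rate is 3p²(1 − p) + p³ = 3p² − 2p³, which is smaller than p whenever p < 0.5. Quantum codes such as the 3-qubit bit-flip code share this arithmetic for independent bit flips, but they cannot simply copy states and must also handle phase errors, so this is only the classical analogue; the function names are hypothetical.

```python
import random


def logical_error_rate(p: float) -> float:
    """Exact failure probability of a 3-bit repetition code with majority vote.

    Decoding fails when 2 or 3 of the 3 copies flip: 3p^2(1-p) + p^3 = 3p^2 - 2p^3.
    """
    return 3 * p**2 - 2 * p**3


def simulate(p: float, trials: int, rng: random.Random) -> float:
    """Monte Carlo estimate: encode 0 as 000, flip each bit with prob. p, majority-decode."""
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))
        if flips >= 2:  # the majority now reads 1, so the logical 0 is lost
            failures += 1
    return failures / trials


rng = random.Random(1)
for p in (0.01, 0.1):
    print(p, logical_error_rate(p), simulate(p, 100_000, rng))
# exact rates are 0.000298 and 0.028; the simulated estimates land close to them
```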

Real-World Applications: Where Qubits Outperform Classical Bits

Qubits use superposition and entanglement to run certain computations much faster than classical bits allow, with potential impact on cryptography, optimization, and drug discovery. Shor's algorithm, for example, factors large integers in polynomial time, far faster than the best known classical methods. A sufficiently large, error-corrected quantum computer could therefore break widely used public-key schemes such as RSA, which is driving the adoption of quantum-resistant (post-quantum) encryption that runs on ordinary computers. In materials science and financial modeling, researchers are exploring quantum simulators and algorithms for problems that are intractable for classical computers, although most of these applications are still experimental.
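Shor's algorithm reduces factoring an integer N to finding the order r of a number a modulo N, meaning the smallest r with aʳ ≡ 1 (mod N). Only the order-finding step needs a quantum computer; the rest is classical arithmetic. In the sketch below, order finding is replaced by brute force, which takes exponential time and is only feasible for toy values such as N = 15. The function names are hypothetical, and the sketch illustrates the classical reduction, not a quantum implementation.

```python
import math


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    Shor's algorithm performs this step on a quantum computer; brute force
    takes time exponential in the bit length of n.
    """
    if math.gcd(a, n) != 1:
        raise ValueError("a must be coprime to n")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(a: int, n: int):
    """Classical post-processing from Shor's algorithm.

    Returns a non-trivial factor pair, or None when this choice of a fails
    (odd order, or a**(r/2) == -1 mod n); in that case another a is tried.
    """
    r = find_order(a, n)
    if r % 2 == 1:
        return None
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None
    p = math.gcd(half - 1, n)
    if 1 < p < n:
        return p, n // p
    return None


print(find_order(7, 15))  # 4
print(factor_from_order(7, 15))  # (3, 5)
```

For a = 7 the order is 4, so 7² mod 15 = 4, and gcd(4 − 1, 15) = 3 reveals the factor pair 3 × 5.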

Future Prospects: The Evolution from Classical Bits to Qubits

Qubits harness quantum superposition and entanglement, offering, for specific problems, processing power that classical bits limited to the binary states 0 and 1 cannot match. Future advances in quantum error correction and scalable qubit architectures could have a transformative impact on cryptography, optimization, and machine learning. The move from classical bits to qubits represents a paradigm shift in computation that may reshape many technology industries.

Quantum Bit (Qubit) vs Classical Bit Infographic

techiny.com