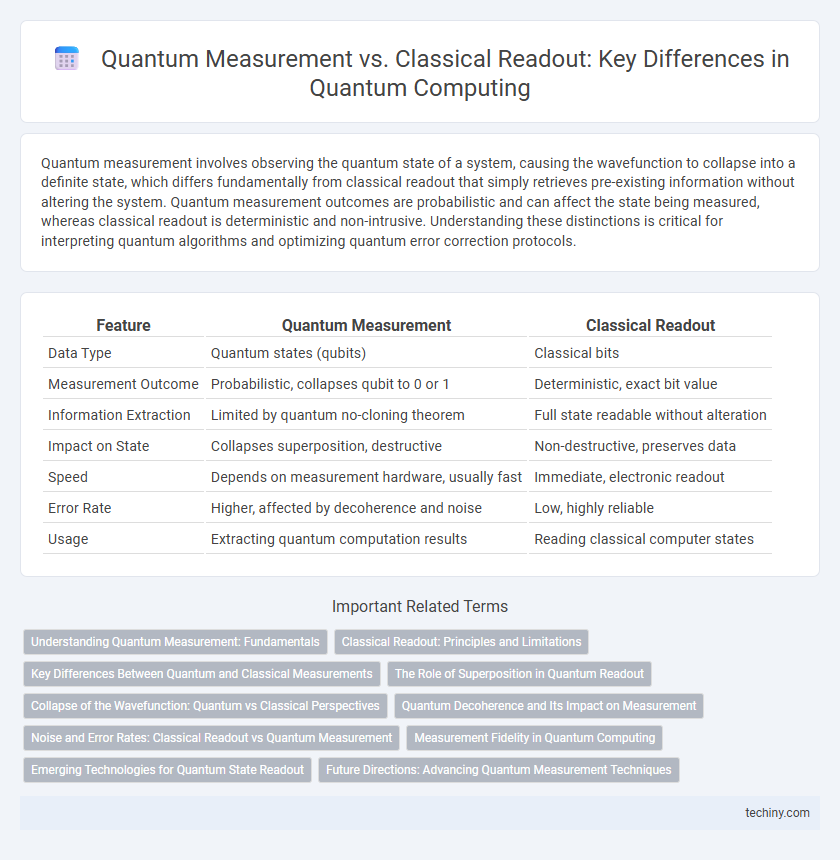

Quantum measurement involves observing the quantum state of a system, causing the wavefunction to collapse into a definite state, which differs fundamentally from classical readout that simply retrieves pre-existing information without altering the system. Quantum measurement outcomes are probabilistic and can affect the state being measured, whereas classical readout is deterministic and non-intrusive. Understanding these distinctions is critical for interpreting quantum algorithms and optimizing quantum error correction protocols.

Table of Comparison

| Feature | Quantum Measurement | Classical Readout |
|---|---|---|
| Data Type | Quantum states (qubits) | Classical bits |
| Measurement Outcome | Probabilistic, collapses qubit to 0 or 1 | Deterministic, exact bit value |
| Information Extraction | Limited by quantum no-cloning theorem | Full state readable without alteration |
| Impact on State | Collapses superposition, destructive | Non-destructive, preserves data |
| Speed | Depends on measurement hardware, usually fast | Immediate, electronic readout |
| Error Rate | Higher, affected by decoherence and noise | Low, highly reliable |
| Usage | Extracting quantum computation results | Reading classical computer states |

Understanding Quantum Measurement: Fundamentals

Quantum measurement differs fundamentally from classical readout: it collapses a quantum state into a definite classical outcome, with probabilities set by the wavefunction. Classical systems are deterministic, whereas quantum measurement outcomes follow the Born rule: each outcome occurs with probability equal to the squared magnitude of its amplitude in the superposition. The measurement also disturbs the system, making it an irreversible operation that changes the state after observation.
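The Born rule and collapse described above can be illustrated with a minimal sketch. It assumes a single qubit measured in the computational basis, and the function name `measure` is made up for this example rather than taken from any quantum library.

```python
import random

def measure(state, rng=random):
    """Measure one qubit in the computational basis (illustrative helper).

    `state` is a pair of amplitudes (a0, a1) with |a0|^2 + |a1|^2 = 1.
    Returns (outcome, post_measurement_state).
    """
    a0, a1 = state
    p0 = abs(a0) ** 2  # Born rule: probability of reading 0
    outcome = 0 if rng.random() < p0 else 1
    # Collapse: the state becomes the basis state matching the outcome.
    collapsed = (1 + 0j, 0j) if outcome == 0 else (0j, 1 + 0j)
    return outcome, collapsed

plus = (2 ** -0.5, 2 ** -0.5)  # equal superposition of 0 and 1
first, after = measure(plus)   # random: 0 or 1 with probability 1/2 each
second, _ = measure(after)     # measuring the collapsed state repeats the result
```

The first result is random, but the second always matches it, because the superposition no longer exists after the first measurement.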

Classical Readout: Principles and Limitations

Classical readout in quantum computing relies on converting quantum information into classical signals through measurement devices such as photodetectors or superconducting amplifiers. This process is constrained by noise, limited detection efficiency, and finite bandwidth, which hamper the accuracy and speed of extracting quantum states. These limitations lead to reduced fidelity in quantum state discrimination, impacting overall system performance and scalability.

Key Differences Between Quantum and Classical Measurements

Quantum measurement fundamentally differs from classical readout by collapsing the quantum state into a definite outcome, whereas classical measurements simply reveal pre-existing information without altering the state. Quantum measurements are probabilistic, providing outcomes based on probability amplitudes, while classical readouts yield deterministic values. Furthermore, quantum measurement outcomes can affect subsequent states due to wavefunction collapse, unlike classical measurements that are non-invasive.

The Role of Superposition in Quantum Readout

Superposition lets a qubit occupy a weighted combination of 0 and 1 before measurement, but a single readout still returns only one classical bit per qubit. The probability amplitudes are not read out directly; the outcome probabilities are estimated statistically by preparing and measuring the same state many times. Superposition and entanglement still matter for computational power, because quantum algorithms arrange interference so that the desired answers appear with high probability at readout.
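Because each readout yields a single bit, outcome probabilities have to be estimated from many repetitions ("shots"). The sketch below simulates this with the standard library. The names `sample_counts` and `estimate_p1` are hypothetical, and the true probability `p1` is supplied by hand rather than derived from a real device.

```python
import random

def sample_counts(p1, shots, seed=0):
    """Simulate `shots` readouts of a qubit that yields 1 with probability p1."""
    rng = random.Random(seed)
    ones = sum(1 for _ in range(shots) if rng.random() < p1)
    return {"0": shots - ones, "1": ones}

def estimate_p1(counts):
    """Estimate the probability of reading 1 from the observed counts."""
    total = counts["0"] + counts["1"]
    return counts["1"] / total

counts = sample_counts(p1=0.3, shots=20000)
p1_hat = estimate_p1(counts)  # close to 0.3, but not exact
```

The statistical error of the estimate shrinks roughly as one over the square root of the number of shots, which is why algorithm results are usually reported over many repetitions.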

Collapse of the Wavefunction: Quantum vs Classical Perspectives

Quantum measurement fundamentally involves the collapse of the wavefunction, where a quantum system's superposition state instantaneously reduces to a single eigenstate upon observation, reflecting inherent probabilistic outcomes. In contrast, classical readout operates on deterministically measurable states without altering the system's underlying state, preserving classical information integrity. The wavefunction collapse embodies the core distinction, emphasizing quantum mechanics' non-deterministic nature versus classical systems' predictable measurement outcomes.

Quantum Decoherence and Its Impact on Measurement

Decoherence is the loss of superposition and entanglement through uncontrolled interaction with the environment, and it limits computational fidelity. Measurement deliberately destroys the coherence of the measured qubit, while unwanted decoherence before or during readout corrupts the result. Classical readout of binary states has no coherence to lose, but classical bits also lack the superposition that quantum algorithms rely on. Understanding and mitigating decoherence during quantum measurement is critical for lowering error rates and improving qubit readout accuracy.
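One way to see the effect of decoherence on a measurement is a simple pure-dephasing model. This is an assumption made for illustration, not a description of any particular device. In this model a qubit prepared in an equal superposition keeps its 50/50 populations, while its coherence decays exponentially with a time constant `t2`.

```python
import math

def coherence(t, t2):
    """Off-diagonal density-matrix element of an equal superposition after time t."""
    return 0.5 * math.exp(-t / t2)

def prob_plus(t, t2):
    """Probability of finding the qubit still in the original superposition
    when it is measured in the superposition (X) basis after time t."""
    return 0.5 + coherence(t, t2)

for t in (0.0, 25.0, 50.0, 200.0):  # times in the same units as t2
    print(t, round(prob_plus(t, t2=50.0), 3))
```

At `t = 0` the superposition is recovered with certainty. At long times the probability approaches 0.5, which is indistinguishable from a random classical bit.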

Noise and Error Rates: Classical Readout vs Quantum Measurement

Quantum measurement collapses qubits into definite states and is subject to noise sources and error rates that classical readout systems do not face. Classical readout relies on well-established electronic amplifiers and sensors, which typically show lower noise and more predictable error rates than quantum measurement. Improving quantum measurement therefore requires error correction and mitigation techniques that suppress this noise and bring fidelity closer to the reliability of classical readout.
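As a small illustration of handling readout noise, the sketch below applies a basic readout-error mitigation step, which is a simpler technique than full quantum error correction. It assumes the two misassignment probabilities are already known from calibration: `e0` for reading 1 when 0 was prepared, and `e1` for reading 0 when 1 was prepared. The function names are hypothetical.

```python
def observed_fraction(p1, e0, e1):
    """Fraction of shots read as 1 when the true probability of 1 is p1."""
    return p1 * (1.0 - e1) + (1.0 - p1) * e0

def mitigate(f1, e0, e1):
    """Invert the noise model to recover the true probability of 1."""
    p1 = (f1 - e0) / (1.0 - e0 - e1)
    return min(1.0, max(0.0, p1))  # clip to a valid probability

noisy = observed_fraction(p1=0.3, e0=0.02, e1=0.05)
recovered = mitigate(noisy, e0=0.02, e1=0.05)
```

With finite shot counts the inversion amplifies statistical noise slightly, so the correction helps most when `e0` and `e1` are small and well calibrated.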

Measurement Fidelity in Quantum Computing

Measurement fidelity in quantum computing quantifies how accurately a measurement reports the state a qubit was actually in. High-fidelity quantum measurement minimizes errors arising from decoherence and noise, ensuring reliable qubit state discrimination, which is crucial for quantum error correction and algorithmic performance. Advances in superconducting qubits and ion traps have achieved measurement fidelities exceeding 99%. That is still below the reliability of classical bit readout, but high enough to support progress toward scalable quantum computation.
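One commonly used figure of merit is the assignment fidelity, computed from calibration runs in which the qubit is prepared in a known state and then read out. The sketch below uses that definition. The counts are invented for illustration and are not measured data.

```python
def assignment_fidelity(prep0_read1, prep0_total, prep1_read0, prep1_total):
    """Assignment fidelity F = 1 - (P(1|0) + P(0|1)) / 2."""
    p_1_given_0 = prep0_read1 / prep0_total  # prepared 0, read 1
    p_0_given_1 = prep1_read0 / prep1_total  # prepared 1, read 0
    return 1.0 - 0.5 * (p_1_given_0 + p_0_given_1)

# Hypothetical calibration: 1000 shots per prepared state.
fidelity = assignment_fidelity(prep0_read1=5, prep0_total=1000,
                               prep1_read0=15, prep1_total=1000)
```

With these made-up counts the two error rates are 0.5% and 1.5%, giving an assignment fidelity of 0.99.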

Emerging Technologies for Quantum State Readout

Emerging technologies for quantum state readout include dispersive readout of superconducting qubits, which enables faster, higher-fidelity measurement. Innovations such as quantum non-demolition measurements and single-photon detectors enhance the precision and scalability of quantum measurement systems. These advances significantly reduce readout errors, which is critical for implementing fault-tolerant quantum computing architectures.
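In dispersive readout, each shot is commonly reduced to a point in the in-phase/quadrature (IQ) plane, and the qubit state is assigned by deciding which cluster the point belongs to. The sketch below is a toy model of that step. The cluster centers, the Gaussian noise width `sigma`, and all function names are assumptions chosen for illustration, not parameters of real hardware.

```python
import random

CENTER_0 = (-1.0, 0.0)  # assumed IQ cluster center for state 0
CENTER_1 = (1.0, 0.0)   # assumed IQ cluster center for state 1

def simulate_iq(state, rng, sigma=0.5):
    """Return one noisy IQ point for a qubit prepared in `state`."""
    ci, cq = CENTER_0 if state == 0 else CENTER_1
    return (rng.gauss(ci, sigma), rng.gauss(cq, sigma))

def classify(point):
    """Assign the state whose cluster center is nearest to the point."""
    d0 = (point[0] - CENTER_0[0]) ** 2 + (point[1] - CENTER_0[1]) ** 2
    d1 = (point[0] - CENTER_1[0]) ** 2 + (point[1] - CENTER_1[1]) ** 2
    return 0 if d0 <= d1 else 1

def readout_accuracy(shots=2000, seed=1):
    """Fraction of shots assigned to the state that was prepared."""
    rng = random.Random(seed)
    correct = 0
    for k in range(shots):
        prepared = k % 2  # alternate between preparing 0 and 1
        if classify(simulate_iq(prepared, rng)) == prepared:
            correct += 1
    return correct / shots

accuracy = readout_accuracy()
```

Widening the separation between the clusters or reducing `sigma` raises the accuracy. Physically, that corresponds to a stronger readout signal or a lower-noise amplifier.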

Future Directions: Advancing Quantum Measurement Techniques

Advancing quantum measurement techniques involves developing minimally disturbing, high-fidelity readout methods that avoid unnecessary loss of qubit coherence while minimizing errors. Innovations in quantum non-demolition (QND) measurements and real-time feedback control systems are critical for scalable quantum computing architectures. Future research prioritizes integrating machine learning algorithms to optimize measurement protocols and enhance the precision of quantum state discrimination.
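One reason QND measurements are attractive is that an ideal QND readout can be repeated on the same qubit, and the repeated results can be combined by majority vote. The sketch below computes the resulting error rate under two simplifying assumptions: the measurement is perfectly QND, and each readout errs independently with the same probability `e`. The function name is hypothetical.

```python
from math import comb

def majority_vote_error(e, n):
    """Probability that a majority of n independent readouts are wrong."""
    if n % 2 == 0:
        raise ValueError("use an odd number of readouts to avoid ties")
    k_min = n // 2 + 1  # smallest number of wrong readouts that forms a majority
    return sum(comb(n, k) * e ** k * (1 - e) ** (n - k)
               for k in range(k_min, n + 1))

single = majority_vote_error(0.1, 1)  # one readout: error stays at 0.1
triple = majority_vote_error(0.1, 3)  # three readouts: error drops to about 0.028
```

In practice the gain is smaller, because each extra readout takes time during which the qubit can relax, and real errors are not fully independent.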

Quantum Measurement vs Classical Readout Infographic

techiny.com
