Bit Error Rate (BER) measures the ratio of erroneous bits to total transmitted bits in telecommunications systems, reflecting the accuracy of individual bit transmission. Frame Error Rate (FER) evaluates the frequency of incorrectly received frames or packets, providing insight into higher-layer data integrity. Both BER and FER are critical metrics for assessing communication reliability, with BER focusing on bit-level errors and FER emphasizing the impact on complete data frames.

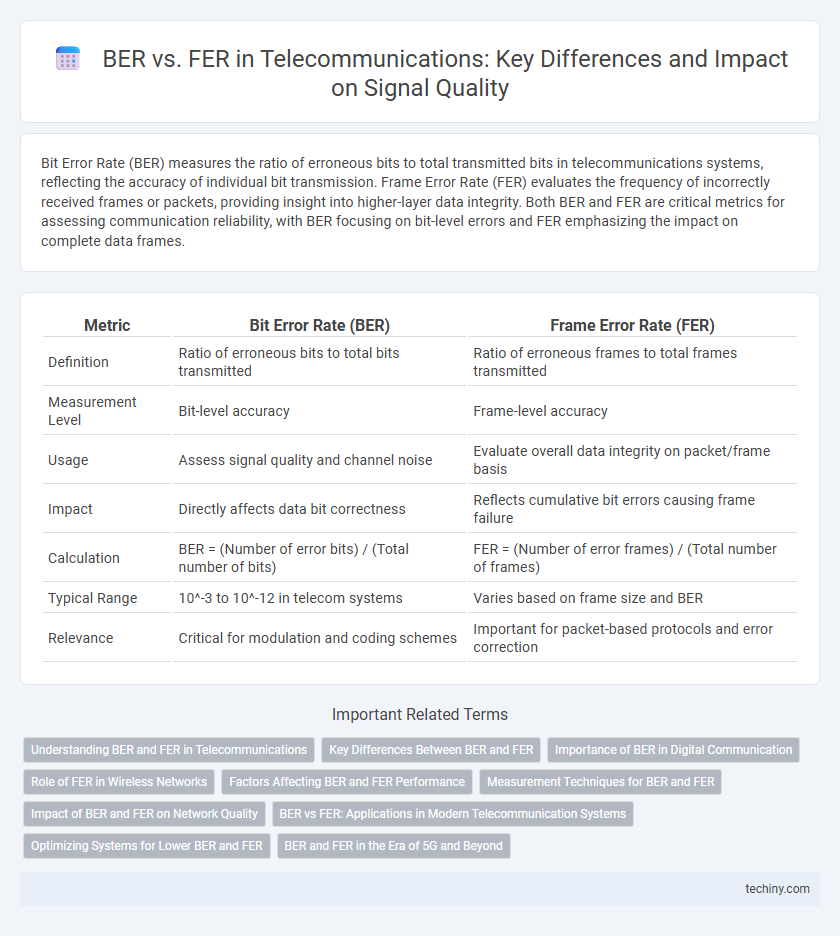

Table of Comparison

| Metric | Bit Error Rate (BER) | Frame Error Rate (FER) |
|---|---|---|
| Definition | Ratio of erroneous bits to total bits transmitted | Ratio of erroneous frames to total frames transmitted |
| Measurement Level | Bit-level accuracy | Frame-level accuracy |
| Usage | Assess signal quality and channel noise | Evaluate overall data integrity on packet/frame basis |
| Impact | Directly affects data bit correctness | Reflects cumulative bit errors causing frame failure |
| Calculation | BER = (Number of error bits) / (Total number of bits) | FER = (Number of error frames) / (Total number of frames) |
| Typical Range | 10^-3 to 10^-12 in telecom systems | Varies based on frame size and BER |
| Relevance | Critical for modulation and coding schemes | Important for packet-based protocols and error correction |

Understanding BER and FER in Telecommunications

Bit Error Rate (BER) is the number of bit errors divided by the total number of transmitted bits, reflecting the accuracy of data transmission in telecommunications. Frame Error Rate (FER) is the proportion of data frames received with errors relative to the total frames sent, where a frame counts as errored if it contains at least one bit error that was not corrected. FER therefore emphasizes the reliability of packetized communication. Both BER and FER are critical metrics for assessing network quality, optimizing signal processing, and improving error correction algorithms in modern digital communication systems.
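The two ratios can be illustrated with a minimal Python sketch. It assumes the transmitted bits are known at the receiver, that frames have a fixed size, and that a frame is errored when any of its bits differs; the function names are hypothetical and chosen only for this example.

```python
def bit_error_rate(sent_bits, received_bits):
    """Fraction of bit positions where the received bit differs from the sent bit."""
    if len(sent_bits) != len(received_bits):
        raise ValueError("bit sequences must have equal length")
    if not sent_bits:
        raise ValueError("bit sequences must be non-empty")
    errors = sum(s != r for s, r in zip(sent_bits, received_bits))
    return errors / len(sent_bits)


def frame_error_rate(sent_bits, received_bits, frame_size):
    """Fraction of fixed-size frames that contain at least one bit error."""
    if len(sent_bits) != len(received_bits):
        raise ValueError("bit sequences must have equal length")
    if frame_size <= 0 or not sent_bits or len(sent_bits) % frame_size != 0:
        raise ValueError("length must be a positive multiple of frame_size")
    n_frames = len(sent_bits) // frame_size
    bad_frames = 0
    for start in range(0, len(sent_bits), frame_size):
        end = start + frame_size
        if sent_bits[start:end] != received_bits[start:end]:
            bad_frames += 1
    return bad_frames / n_frames


# Four frames of four bits each; frames 2 and 4 are corrupted (3 bit errors total).
sent = [0, 1, 1, 0,  1, 0, 0, 1,  1, 1, 0, 0,  0, 0, 1, 1]
recv = [0, 1, 1, 0,  1, 1, 0, 1,  1, 1, 0, 0,  0, 1, 1, 0]

print(bit_error_rate(sent, recv))       # 0.1875 (3 of 16 bits)
print(frame_error_rate(sent, recv, 4))  # 0.5    (2 of 4 frames)
```

Note that the last frame has two bit errors but still counts as a single errored frame, which is why FER cannot be derived from the bit error count alone.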

Key Differences Between BER and FER

BER (Bit Error Rate) measures the rate of erroneous bits received in a digital communication system, reflecting the integrity of individual bits during transmission. FER (Frame Error Rate) evaluates the frequency of entire frames or packets received with errors, representing the impact of errors on larger data units. The key difference lies in BER assessing errors at the bit level, while FER provides a broader perspective by counting errors at the frame level, crucial for understanding overall communication quality in telecommunications.
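The link between the two metrics can be made concrete under a simplifying assumption: if bit errors are independent and any single bit error causes the frame to fail (no error correction), a frame of N bits is received correctly with probability (1 - BER)^N, so FER = 1 - (1 - BER)^N. Real channels with burst errors or FEC will deviate from this. The sketch below, with a hypothetical function name, evaluates that relationship.

```python
import math


def fer_from_ber(ber, frame_bits):
    """Approximate FER for independent bit errors and no error correction.

    Uses log1p/expm1 so that very small BER values do not lose precision.
    """
    if not 0.0 <= ber <= 1.0:
        raise ValueError("ber must be between 0 and 1")
    if frame_bits <= 0:
        raise ValueError("frame_bits must be positive")
    if ber == 1.0:
        return 1.0  # every bit is wrong, so every frame fails
    # 1 - (1 - ber) ** frame_bits, computed in a numerically stable way
    return -math.expm1(frame_bits * math.log1p(-ber))


# The same BER produces a higher FER as frames get longer.
for size in (100, 1500, 12000):
    print(size, fer_from_ber(1e-6, size))
```

For small BER the result is close to N × BER, which is why the frame size matters as much as the bit error rate when reading an FER figure.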

Importance of BER in Digital Communication

Bit Error Rate (BER) is a critical metric in digital communication that quantifies bit errors either per unit of time or, more commonly, as a fraction of the bits transmitted, and it directly reflects data integrity and system reliability. Unlike Frame Error Rate (FER), which measures errors at the frame level, BER gives granular insight into signal quality and how well the chosen modulation performs on the channel, which informs the choice of error correction strategy and the amount of coding overhead required. Maintaining a low BER is essential for optimizing network performance, minimizing retransmissions, and ensuring robust end-to-end communication in telecommunication systems.

Role of FER in Wireless Networks

Frame Error Rate (FER) plays a critical role in wireless networks by measuring the frequency of incorrectly received data frames, directly impacting communication reliability. Unlike Bit Error Rate (BER), which focuses on individual bit errors, FER assesses the integrity of entire frames, making it more relevant for evaluating performance in packet-based wireless systems. High FER indicates severe degradation in link quality, prompting adaptive error correction and retransmission mechanisms to maintain optimal network throughput and connectivity.

Factors Affecting BER and FER Performance

Bit Error Rate (BER) and Frame Error Rate (FER) performance in telecommunications are influenced by factors such as signal-to-noise ratio (SNR), modulation schemes, and channel conditions including multipath fading and interference. Error correction techniques also have a significant effect: forward error correction (FEC) corrects bit errors at the receiver, while interleaving spreads burst errors across many codewords so that FEC can correct them. Hardware quality, transmission distance, and mobility patterns also play critical roles in determining the overall reliability and efficiency of communication systems.
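To show how strongly SNR drives BER, the following sketch evaluates the textbook result for uncoded BPSK over an additive white Gaussian noise (AWGN) channel, BER = 0.5 · erfc(sqrt(Eb/N0)). The choice of BPSK and AWGN is an assumption made for illustration; fading, interference, and other modulation schemes give different curves.

```python
import math


def bpsk_ber_awgn(ebn0_db):
    """Theoretical BER of uncoded BPSK on an AWGN channel.

    ebn0_db is the energy-per-bit to noise-density ratio (Eb/N0) in dB.
    """
    ebn0_linear = 10 ** (ebn0_db / 10)
    return 0.5 * math.erfc(math.sqrt(ebn0_linear))


# BER falls steeply as Eb/N0 improves.
for ebn0_db in (0, 4, 8, 10):
    print(f"{ebn0_db:>2} dB -> BER {bpsk_ber_awgn(ebn0_db):.3e}")
```

A few decibels of extra Eb/N0 change the BER by orders of magnitude in this model, which is why link budget and interference have such a large effect on both metrics.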

Measurement Techniques for BER and FER

Bit Error Rate (BER) and Frame Error Rate (FER) measurement techniques in telecommunications involve analyzing the accuracy of transmitted data by comparing received signals against known reference patterns. BER measurement typically utilizes pseudo-random binary sequences (PRBS) and error detectors to count bit mismatches over a defined interval, while FER measurement evaluates entire data frames to detect frame-level errors using cyclic redundancy checks (CRC) or checksum validations. Employing oscilloscopes, error analyzers, and protocol testers enhances precision in capturing error statistics, essential for optimizing communication system performance and reliability.
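Both measurement approaches can be sketched in a few lines of Python. The example below assumes a PRBS7 test pattern (polynomial x^7 + x^6 + 1) for the bit-level check and a CRC-32 appended as a 4-byte trailer for the frame-level check; the frame layout and helper names are hypothetical, and real test equipment and protocols define their own patterns and check sequences.

```python
import zlib


def prbs7(n_bits, seed=0x7F):
    """Generate n_bits of a PRBS7 sequence (x^7 + x^6 + 1), period 127."""
    state = seed & 0x7F
    if state == 0:
        raise ValueError("seed must be non-zero")
    bits = []
    for _ in range(n_bits):
        new_bit = ((state >> 6) ^ (state >> 5)) & 1
        bits.append(new_bit)
        state = ((state << 1) | new_bit) & 0x7F
    return bits


def count_bit_errors(reference, received):
    """Count positions where the received bits differ from the reference."""
    return sum(a != b for a, b in zip(reference, received))


def make_frame(payload: bytes) -> bytes:
    """Append a CRC-32 of the payload as a 4-byte big-endian trailer."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def frame_ok(frame: bytes) -> bool:
    """Recompute the CRC-32 and compare it with the trailer."""
    payload, trailer = frame[:-4], frame[-4:]
    return zlib.crc32(payload).to_bytes(4, "big") == trailer


# Bit-level test: the receiver regenerates the same PRBS and counts mismatches.
reference = prbs7(1000)
received = list(reference)
for position in (10, 500, 999):  # inject three bit errors
    received[position] ^= 1
print("BER:", count_bit_errors(reference, received) / len(reference))

# Frame-level test: a single flipped bit makes the CRC check fail.
good = make_frame(b"example payload")
bad = bytearray(good)
bad[3] ^= 0x01
frames = [good, bytes(bad), good, good]
print("FER:", sum(not frame_ok(f) for f in frames) / len(frames))
```

The PRBS approach needs the receiver to know the test pattern, so it suits out-of-service link testing, while the CRC approach works on live traffic because each frame carries its own check value.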

Impact of BER and FER on Network Quality

Bit Error Rate (BER) and Frame Error Rate (FER) are critical metrics influencing network quality in telecommunications. A high BER indicates degraded signal integrity and leads to more retransmissions and higher latency, while an elevated FER means more frames are discarded, causing packet loss and reduced throughput. Both metrics together determine overall network reliability, impacting user experience and system efficiency in wireless and wired communication systems.
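The effect of FER on retransmissions and throughput can be estimated with a simplified model. The sketch below assumes an idealized retransmission scheme in which every errored frame is resent until it succeeds, frame errors are independent, and acknowledgement traffic and timeouts are ignored; the function names and parameters are illustrative only.

```python
def expected_transmissions(fer):
    """Average number of sends needed per delivered frame (geometric model)."""
    if not 0.0 <= fer < 1.0:
        raise ValueError("fer must be in [0, 1)")
    return 1.0 / (1.0 - fer)


def arq_goodput(link_rate_bps, fer, payload_bits, overhead_bits):
    """Useful payload rate after framing overhead and retransmissions."""
    if payload_bits <= 0 or overhead_bits < 0:
        raise ValueError("payload_bits must be positive, overhead_bits non-negative")
    framing_efficiency = payload_bits / (payload_bits + overhead_bits)
    return link_rate_bps * framing_efficiency * (1.0 - fer)


# A 1 Mbit/s link with 900 payload bits and 100 overhead bits per frame.
for fer in (0.0, 0.01, 0.1, 0.5):
    print(
        f"FER {fer:<4} -> {expected_transmissions(fer):.2f} sends/frame, "
        f"{arq_goodput(1_000_000, fer, 900, 100):,.0f} bit/s goodput"
    )
```

Under these assumptions a FER of 0.5 doubles the average number of transmissions per frame and halves the goodput, before any added latency from waiting on retransmissions is counted.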

BER vs FER: Applications in Modern Telecommunication Systems

Bit Error Rate (BER) and Frame Error Rate (FER) serve crucial roles in assessing the reliability of digital communication systems, with BER measuring the rate of individual bit errors and FER indicating the occurrence of errors within entire frames. Modern telecommunication systems, including 5G networks and satellite communications, leverage BER metrics for fine-grained channel quality analysis, while FER is pivotal in evaluating the effectiveness of error correction protocols and overall system robustness. Accurate BER and FER measurements enable adaptive modulation schemes, enhancing data throughput and ensuring minimal transmission errors in high-speed, real-time applications.

Optimizing Systems for Lower BER and FER

Optimizing telecommunications systems for lower Bit Error Rate (BER) and Frame Error Rate (FER) involves implementing advanced error correction codes such as LDPC and Turbo codes, which let the receiver correct bit errors before they cause a frame to fail. Adaptive modulation and coding schemes dynamically adjust the modulation order and code rate based on channel conditions, reducing both BER and FER effectively. Network designers also leverage channel estimation and equalization techniques to compensate for channel distortion and intersymbol interference, ensuring higher data accuracy and system reliability.
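Adaptive modulation and coding can be sketched as a table lookup: the transmitter picks the most efficient scheme whose SNR requirement is met by the current channel estimate. The SNR thresholds and efficiency values below are made-up illustrative numbers, not taken from any standard, and the table and function names are hypothetical.

```python
# (minimum SNR in dB, scheme name, information bits per symbol)
# Thresholds are illustrative assumptions; real systems derive them from
# a target BER or FER for each modulation and coding combination.
MCS_TABLE = [
    (2.0, "QPSK 1/2", 1.0),
    (8.0, "16-QAM 1/2", 2.0),
    (14.0, "64-QAM 2/3", 4.0),
    (20.0, "256-QAM 3/4", 6.0),
]


def select_mcs(snr_db, margin_db=0.0):
    """Return (name, bits_per_symbol) of the fastest usable scheme, or None.

    margin_db backs off from the measured SNR to keep the error rate low
    when the channel estimate is noisy or the channel changes quickly.
    """
    chosen = None
    for min_snr_db, name, bits_per_symbol in MCS_TABLE:
        if snr_db - margin_db >= min_snr_db:
            chosen = (name, bits_per_symbol)
    return chosen


print(select_mcs(15.0))                 # ('64-QAM 2/3', 4.0)
print(select_mcs(15.0, margin_db=3.0))  # ('16-QAM 1/2', 2.0)
print(select_mcs(0.0))                  # None: channel too poor for any entry
```

The margin parameter shows the basic trade-off: a larger back-off lowers BER and FER at the cost of throughput, while a smaller one does the opposite.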

BER and FER in the Era of 5G and Beyond

Bit Error Rate (BER) and Frame Error Rate (FER) remain critical performance metrics in 5G wireless communications, directly impacting the reliability and efficiency of data transmission over massive MIMO and millimeter-wave channels. Advanced error correction techniques such as LDPC and Polar codes significantly reduce BER and FER, enabling ultra-reliable low-latency communication (URLLC) applications and massive IoT connectivity. Optimizing BER and FER is essential for maintaining signal integrity in the complex propagation environments and high data rates characteristic of 5G and beyond networks.

BER vs FER Infographic

techiny.com
