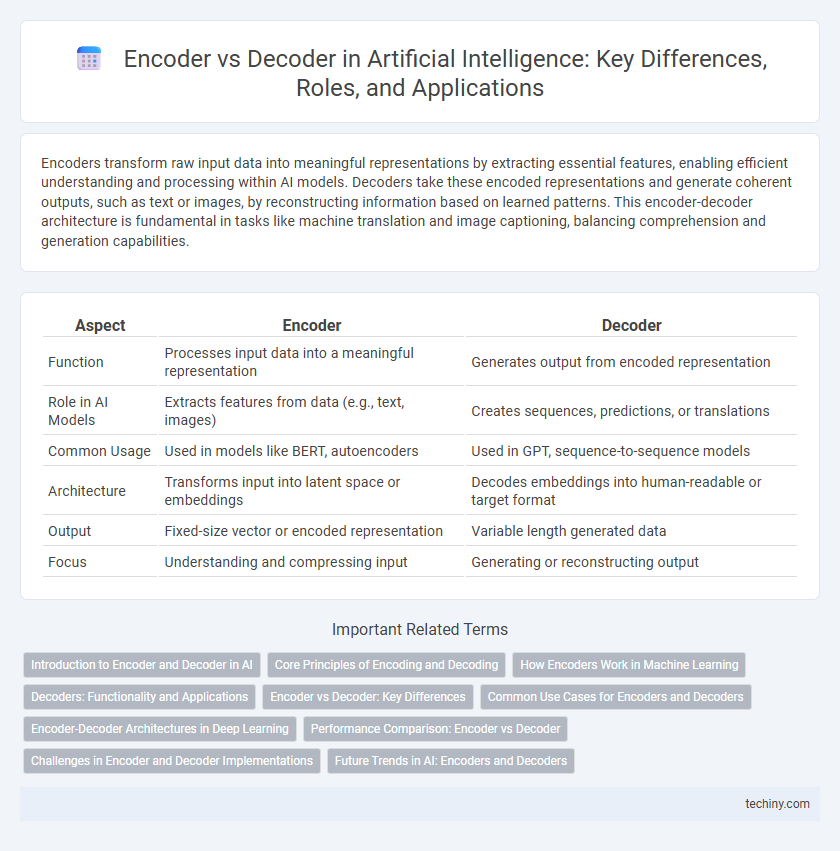

Encoders transform raw input data into meaningful representations by extracting essential features, enabling efficient understanding and processing within AI models. Decoders take these encoded representations and generate coherent outputs, such as text or images, by reconstructing information based on learned patterns. This encoder-decoder architecture is fundamental in tasks like machine translation and image captioning, balancing comprehension and generation capabilities.

Table of Comparison

| Aspect | Encoder | Decoder |
|---|---|---|
| Function | Processes input data into a meaningful representation | Generates output from encoded representation |
| Role in AI Models | Extracts features from data (e.g., text, images) | Creates sequences, predictions, or translations |
| Common Usage | Used in models like BERT, autoencoders | Used in GPT, sequence-to-sequence models |
| Architecture | Transforms input into latent space or embeddings | Decodes embeddings into human-readable or target format |
| Output | Fixed-size vector or encoded representation | Variable length generated data |
| Focus | Understanding and compressing input | Generating or reconstructing output |

Introduction to Encoder and Decoder in AI

Encoders and decoders are fundamental components of artificial intelligence models, particularly in natural language processing tasks such as machine translation. The encoder transforms input data into a dense representation that captures its essential features and context: a single fixed-length vector in early recurrent models, or one contextual vector per input token in transformers. The decoder then generates the output sequence from this encoded representation. Transformer architectures stack multiple encoder and decoder layers to improve efficiency and accuracy on tasks such as text generation, language understanding, and speech recognition.

Core Principles of Encoding and Decoding

Encoding transforms raw input data into compact, meaningful representations by extracting essential features and reducing dimensionality, facilitating efficient processing and understanding. Decoding reconstructs original or target information from encoded data by interpreting latent representations to generate coherent outputs. Both processes rely on neural architectures like transformers, where encoder layers capture contextual embeddings, and decoder layers generate predictions based on these embeddings, enabling tasks such as machine translation and text generation.

How Encoders Work in Machine Learning

Encoders in machine learning transform raw input data into a compact, meaningful representation, often called an embedding, that captures essential features while reducing dimensionality. This encoded representation facilitates tasks such as classification, clustering, and anomaly detection by making complex data more interpretable and manageable for algorithms. Encoder architectures like convolutional neural networks (CNNs) or transformers systematically extract hierarchical patterns and contextual information from data, enhancing model performance and generalization.
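As a rough illustration of turning raw input into an embedding, the sketch below mean-pools token vectors into a single fixed-size vector. The `EMBEDDINGS` table, its values, and the `encode` function are hypothetical stand-ins for what a trained model would learn; real encoders such as CNNs or transformers are far more elaborate.

```python
from typing import Dict, List

# Hypothetical 3-dimensional embedding table (assumption for illustration);
# a trained model would learn these values.
EMBEDDINGS: Dict[str, List[float]] = {
    "the": [0.1, 0.0, 0.2],
    "cat": [0.9, 0.3, 0.1],
    "sat": [0.2, 0.8, 0.4],
    "<unk>": [0.0, 0.0, 0.0],
}


def encode(tokens: List[str]) -> List[float]:
    """Mean-pool token embeddings into one fixed-size vector."""
    if not tokens:
        raise ValueError("need at least one token")
    # Unknown tokens fall back to the <unk> vector.
    vectors = [EMBEDDINGS.get(t, EMBEDDINGS["<unk>"]) for t in tokens]
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


short = encode(["cat"])
longer = encode(["the", "cat", "sat"])
print(len(short), len(longer))  # both vectors have the same dimensionality
```

Whatever the input length, the result has the same number of dimensions, which is what lets downstream classifiers or clustering algorithms consume it directly.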

Decoders: Functionality and Applications

Decoders in artificial intelligence transform encoded data representations back into interpretable outputs, playing a crucial role in tasks such as language translation, image generation, and speech synthesis. Leveraging architectures like transformers and recurrent neural networks, decoders sequentially generate meaningful sequences by predicting the next element in a series. This functionality underpins applications including text generation, machine translation, and chatbot responses, enabling more natural and coherent AI-driven communication.
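The next-element prediction described above can be sketched as a greedy decoding loop. The `NEXT_TOKEN_SCORES` table is a hypothetical stand-in for a trained decoder's output layer, and for brevity it conditions only on the previous token; a real decoder would also condition on the full prefix and on the encoder's representation.

```python
from typing import Dict, List

# Hypothetical next-token scores (assumption for illustration).
NEXT_TOKEN_SCORES: Dict[str, Dict[str, float]] = {
    "<bos>": {"hello": 0.7, "world": 0.3},
    "hello": {"world": 0.8, "<eos>": 0.2},
    "world": {"<eos>": 0.9, "hello": 0.1},
}


def greedy_decode(max_len: int = 10) -> List[str]:
    """Generate tokens one at a time, always taking the highest-scoring next token."""
    tokens = ["<bos>"]
    for _ in range(max_len):
        scores = NEXT_TOKEN_SCORES[tokens[-1]]
        next_token = max(scores, key=scores.get)
        if next_token == "<eos>":
            break  # the model signalled the end of the sequence
        tokens.append(next_token)
    return tokens[1:]  # drop the <bos> marker


print(greedy_decode())  # ['hello', 'world']
```

Greedy selection is only one possible strategy; sampling or beam search would replace the `max` call while the surrounding loop stays the same.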

Encoder vs Decoder: Key Differences

Encoders transform input data into a compact, meaningful representation by extracting essential features, while decoders turn this encoded form into output: a reconstruction of the original data in an autoencoder, or new content in the target domain for tasks like translation or image generation. The encoder compresses information into latent space, optimizing for feature extraction, whereas the decoder focuses on generating output that closely matches the input or the target domain. Understanding this distinction is crucial for designing architectures such as autoencoders, transformers, and sequence-to-sequence models in artificial intelligence.
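The compress-then-reconstruct split can be seen in a toy linear example, loosely in the spirit of an autoencoder. The fixed `DIRECTION` vector is an assumption standing in for learned weights; the sketch only shows that information outside the latent space is lost on the way back.

```python
import math
from typing import List

# Hypothetical unit-length direction (assumption); a trained autoencoder
# would learn its encoder and decoder weights from data.
DIRECTION: List[float] = [1 / math.sqrt(2), 1 / math.sqrt(2)]


def encode(point: List[float]) -> float:
    """Compress a 2-D point to one latent number (projection onto DIRECTION)."""
    return sum(p * d for p, d in zip(point, DIRECTION))


def decode(latent: float) -> List[float]:
    """Map the latent number back into 2-D space."""
    return [latent * d for d in DIRECTION]


def reconstruction_error(point: List[float]) -> float:
    """Euclidean distance between a point and its encode/decode round trip."""
    restored = decode(encode(point))
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(point, restored)))


on_axis = [2.0, 2.0]   # lies along DIRECTION, so almost nothing is lost
off_axis = [2.0, 0.0]  # has a component the single latent number cannot store
print(reconstruction_error(on_axis), reconstruction_error(off_axis))
```

The first error is close to zero and the second is not, which is the trade-off an encoder makes when it reduces dimensionality.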

Common Use Cases for Encoders and Decoders

Encoders are commonly used in natural language processing tasks such as text classification, sentiment analysis, and feature extraction, where transforming input data into meaningful latent representations is crucial. Decoders excel in generative tasks including machine translation, image captioning, and speech synthesis, producing coherent output sequences from encoded information. Both are integral to sequence-to-sequence models, but their roles differ: encoders compress and encode input features, while decoders generate contextually relevant outputs.

Encoder-Decoder Architectures in Deep Learning

Encoder-decoder architectures in deep learning use the encoder to transform input data into a representation that captures its essential features and context. The decoder then generates the output by interpreting this encoded representation, enabling tasks like machine translation, image captioning, and text summarization. Early sequence-to-sequence models compressed the whole input into a single fixed-dimensional vector, which becomes a bottleneck for long inputs. Attention mechanisms relax this by letting the decoder weigh every encoder state at each output step, which handles variable-length sequences more effectively and improves output quality.
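To make the attention mechanism concrete, here is a minimal sketch of unscaled dot-product attention over a list of encoder states. The function names and the example vectors are illustrative assumptions; production models use scaled, multi-head variants with learned projections.

```python
import math
from typing import List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: List[float],
           encoder_states: List[List[float]]) -> Tuple[List[float], List[float]]:
    """Return (weights, context) for dot-product attention."""
    scores = [sum(q * h for q, h in zip(query, state)) for state in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    # The context vector is the weighted average of the encoder states.
    context = [sum(w * state[i] for w, state in zip(weights, encoder_states))
               for i in range(dim)]
    return weights, context


# Three encoder states: the input could be any length.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
query = [0.0, 2.0]  # hypothetical decoder state at the current step
weights, context = attend(query, encoder_states)
print(weights, context)
```

The context vector always has the dimensionality of one encoder state, regardless of how many states there are, so the decoder can consume inputs of any length.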

Performance Comparison: Encoder vs Decoder

Encoders excel at transforming input data into dense, information-rich representations, which benefits tasks built on feature extraction such as image recognition and natural language understanding. Decoders specialize in generating coherent output sequences from encoded representations, which suits applications like language translation and text generation. Because the two solve different problems, they are measured differently rather than ranked against each other: encoder-based models are typically scored on accuracy or F1 for classification, while decoders are scored on fluency and contextual relevance, for example with perplexity or BLEU.
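Two metrics commonly used for these respective goals are classification accuracy (for a model built on an encoder) and perplexity (for a decoder's generated or scored text). The sketch below computes both from hand-written inputs; the labels and probabilities are made-up values for illustration, not results from any model.

```python
import math
from typing import List, Sequence


def accuracy(predicted: Sequence[str], expected: Sequence[str]) -> float:
    """Fraction of predictions that match the expected labels."""
    if len(predicted) != len(expected) or not expected:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(p == e for p, e in zip(predicted, expected))
    return correct / len(expected)


def perplexity(token_probs: List[float]) -> float:
    """exp of the mean negative log-probability assigned to each reference token."""
    if not token_probs:
        raise ValueError("need at least one probability")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


# Made-up example values (assumptions for illustration only).
print(accuracy(["pos", "neg", "pos", "pos"], ["pos", "neg", "neg", "pos"]))
print(perplexity([0.25, 0.25, 0.25, 0.25]))
```

Higher accuracy is better, while lower perplexity is better: a perplexity of 4 means the model was, on average, as uncertain as if it were choosing uniformly among four tokens.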

Challenges in Encoder and Decoder Implementations

Encoder implementations often face challenges with handling long-range dependencies and maintaining context fidelity in sequential data, leading to potential information loss during compression. Decoders struggle with generating coherent and contextually accurate outputs, especially when exposed to ambiguous or noisy input representations from the encoder. Optimizing these components requires balancing model complexity with computational efficiency to ensure real-time processing without sacrificing performance.

Future Trends in AI: Encoders and Decoders

Future trends in AI emphasize the integration of advanced encoder-decoder architectures to enhance natural language understanding and generation. Transformers with improved encoder-decoder modules enable more efficient processing of multimodal data, driving innovations in conversational AI and automated reasoning. Research focuses on optimizing these components for scalability and real-time applications, expanding their impact across industries.


techiny.com
