Neural Turing Machines combine neural networks with external memory resources, enabling complex algorithmic tasks by learning how to read from and write to memory, which enhances their ability to handle sequential data with dynamic storage. Transformer models rely on self-attention mechanisms to process input data in parallel, making them highly efficient for natural language processing and large-scale pattern recognition without explicit memory management. While Neural Turing Machines excel in tasks requiring memory manipulation and algorithmic reasoning, Transformers dominate tasks with extensive contextual dependencies due to their scalability and parallel processing capabilities.

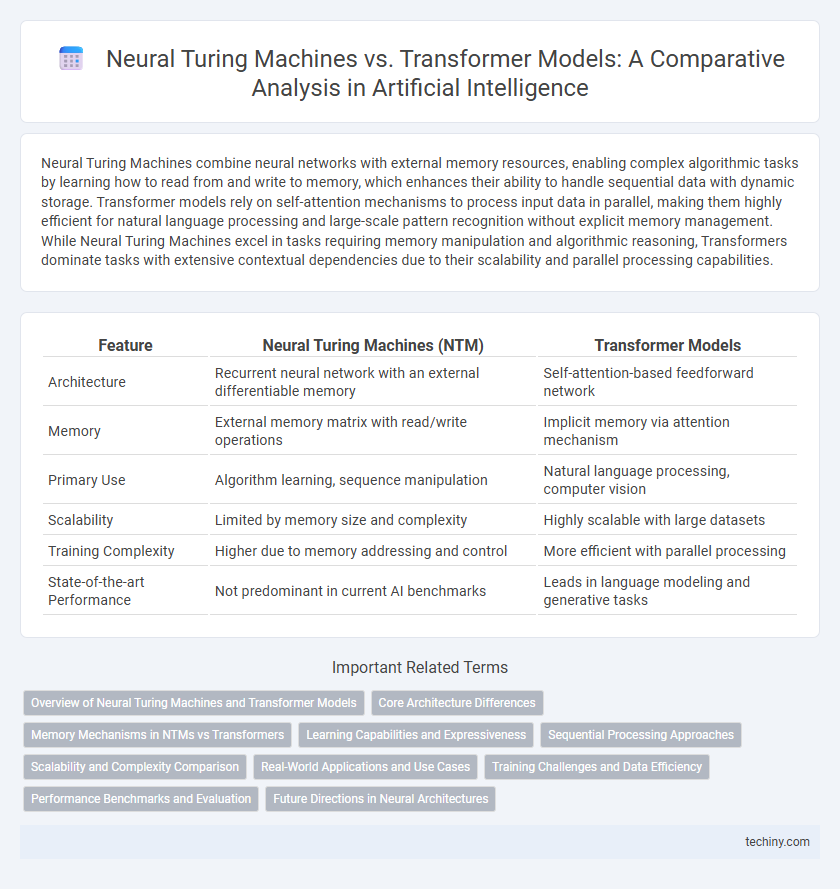

Table of Comparison

| Feature | Neural Turing Machines (NTM) | Transformer Models |

|---|---|---|

| Architecture | Recurrent neural network with an external differentiable memory | Self-attention-based feedforward network |

| Memory | External memory matrix with read/write operations | Implicit memory via attention mechanism |

| Primary Use | Algorithm learning, sequence manipulation | Natural language processing, computer vision |

| Scalability | Limited by memory size and complexity | Highly scalable with large datasets |

| Training Complexity | Higher due to memory addressing and control | More efficient with parallel processing |

| State-of-the-art Performance | Not predominant in current AI benchmarks | Leads in language modeling and generative tasks |

Overview of Neural Turing Machines and Transformer Models

Neural Turing Machines combine neural networks with an external memory bank, enabling them to learn algorithms and store complex data structures efficiently. Transformer models leverage self-attention mechanisms to process and generate sequences in parallel, achieving state-of-the-art results in natural language processing tasks. Both architectures represent significant advances in artificial intelligence by enhancing learning capacity and information retention through distinct mechanisms.

Core Architecture Differences

Neural Turing Machines integrate a neural network controller with an external memory matrix, enabling differentiable read-write operations that mimic Turing machine capabilities for algorithmic tasks. Transformer models rely on self-attention mechanisms to capture global dependencies within input sequences, eliminating the need for external memory by leveraging multi-head attention and positional encoding. The core architectural difference lies in Neural Turing Machines' explicit memory manipulation versus Transformers' implicit memory through attention, impacting their respective strengths in sequence processing and generalization.

Memory Mechanisms in NTMs vs Transformers

Neural Turing Machines utilize an external memory matrix that enables dynamic read-write operations, allowing for flexible storage and retrieval of information essential for algorithmic tasks. Transformer models rely on self-attention mechanisms that create context-dependent, weighted representations of input tokens without explicit external memory storage. This fundamental difference results in NTMs excelling in tasks requiring precise memory manipulation, while Transformers are optimized for capturing global dependencies through implicit memory in attention layers.

Learning Capabilities and Expressiveness

Neural Turing Machines (NTMs) enhance learning capabilities by combining neural networks with external memory, enabling the model to read from and write to memory cells for complex algorithmic tasks. Transformer models leverage self-attention mechanisms, providing superior expressiveness in capturing long-range dependencies and parallel processing of sequential data. While NTMs excel in explicit memory manipulation, Transformers dominate in large-scale natural language understanding and generation tasks due to their scalable architecture and contextual representation power.

Sequential Processing Approaches

Neural Turing Machines (NTMs) integrate a neural network with external memory, enabling sequential data processing through read-write operations akin to a traditional Turing machine, which supports tasks requiring algorithmic manipulation of sequences. Transformer models utilize self-attention mechanisms to process entire input sequences simultaneously, capturing long-range dependencies without relying on sequential processing steps, resulting in greater parallelization and efficiency. The fundamental difference lies in NTMs' step-by-step memory retrieval versus transformers' holistic, non-sequential attention-driven computation.

Scalability and Complexity Comparison

Neural Turing Machines combine neural networks with external memory to handle complex algorithmic tasks, but their scalability is limited by the difficulty of training and managing memory access. Transformer models excel in scalability due to their parallelizable self-attention mechanisms, allowing them to efficiently process large sequences despite increased complexity in model size. Comparing complexity, Transformers generally require more computational resources but offer superior scalability and adaptability across diverse AI applications compared to Neural Turing Machines.

Real-World Applications and Use Cases

Neural Turing Machines excel in tasks requiring complex algorithmic reasoning and memory manipulation, such as program synthesis and reinforcement learning in robotics. Transformer models dominate natural language processing applications, powering machine translation, text generation, and sentiment analysis with unparalleled efficiency. Real-world implementations leverage Transformers for large-scale data processing, while Neural Turing Machines enable adaptive systems in environments demanding dynamic memory usage.

Training Challenges and Data Efficiency

Neural Turing Machines (NTMs) face significant training challenges due to their complex memory addressing mechanisms, which often result in difficulty converging and require extensive computational resources. Transformer models, leveraging self-attention mechanisms, demonstrate higher data efficiency by effectively capturing long-range dependencies with fewer training iterations and larger parallelization capabilities. Despite their advantages, transformers demand substantial large-scale datasets and carefully tuned hyperparameters to optimize performance and prevent overfitting.

Performance Benchmarks and Evaluation

Neural Turing Machines (NTMs) excel in tasks requiring algorithmic generalization and memory-intensive computations, showing superior performance on sequential data with complex dependencies. Transformer models outperform NTMs in large-scale natural language processing benchmarks such as GLUE and SuperGLUE, demonstrating higher accuracy and faster training times due to their parallel attention mechanisms. Evaluation metrics including perplexity, BLEU scores, and task-specific accuracy highlight Transformers' scalability and robustness, while NTMs remain valuable for specialized applications demanding dynamic memory manipulation.

Future Directions in Neural Architectures

Neural Turing Machines integrate external memory with neural networks, enabling complex data manipulation and algorithmic tasks, while Transformer models leverage self-attention mechanisms for parallel processing and scalability in natural language understanding. Future directions in neural architectures focus on hybrid models that combine the explicit memory capabilities of Neural Turing Machines with the efficient attention-based learning of Transformers to enhance reasoning and generalization. Research aims to develop adaptive architectures that dynamically allocate computational resources, improving performance on diverse AI challenges such as long-term sequence modeling and real-time decision-making.

Neural Turing Machines vs Transformer Models Infographic

techiny.com

techiny.com