Word embedding captures semantic relationships by representing words as dense vectors in continuous space, enabling machines to understand the context and similarity between words. One hot encoding, in contrast, creates sparse vectors with binary values that fail to account for word meaning or relationships, leading to less efficient and scalable natural language processing models. Embeddings improve performance in tasks like sentiment analysis and machine translation by providing richer linguistic information compared to one hot encoding.

Table of Comparison

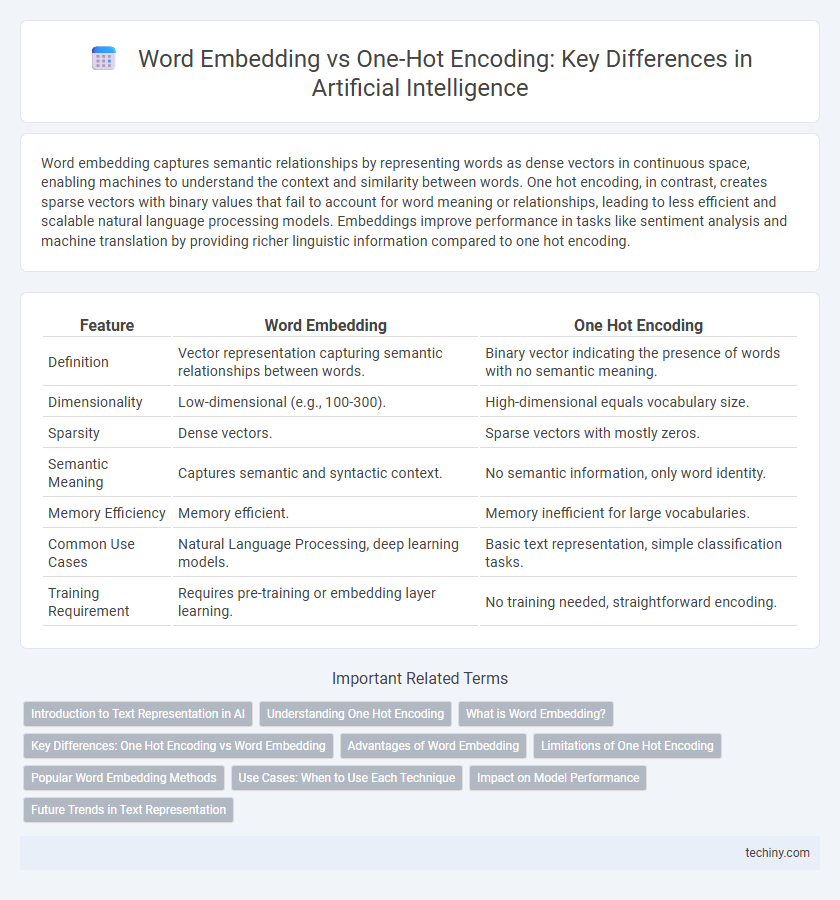

| Feature | Word Embedding | One Hot Encoding |

|---|---|---|

| Definition | Vector representation capturing semantic relationships between words. | Binary vector indicating the presence of words with no semantic meaning. |

| Dimensionality | Low-dimensional (e.g., 100-300). | High-dimensional equals vocabulary size. |

| Sparsity | Dense vectors. | Sparse vectors with mostly zeros. |

| Semantic Meaning | Captures semantic and syntactic context. | No semantic information, only word identity. |

| Memory Efficiency | Memory efficient. | Memory inefficient for large vocabularies. |

| Common Use Cases | Natural Language Processing, deep learning models. | Basic text representation, simple classification tasks. |

| Training Requirement | Requires pre-training or embedding layer learning. | No training needed, straightforward encoding. |

Introduction to Text Representation in AI

Word embedding transforms text into dense, continuous vector spaces capturing semantic relationships between words, unlike one-hot encoding which represents words as sparse, binary vectors without context. One-hot vectors increase dimensionality and sparsity, making them less efficient for modeling complex language patterns. Word embeddings such as Word2Vec and GloVe enable AI models to understand synonyms, analogies, and word similarities, improving natural language processing tasks.

Understanding One Hot Encoding

One Hot Encoding is a fundamental technique in natural language processing where each word is represented as a binary vector, with a single high (1) value indicating the presence of a word and zeros elsewhere, enabling simple and interpretable word representation. Unlike Word Embedding, One Hot Encoding does not capture semantic relationships or contextual meaning between words, resulting in sparse and high-dimensional vectors. This method is effective for small vocabularies but becomes inefficient and less meaningful for large-scale language models due to the lack of semantic information.

What is Word Embedding?

Word embedding is a technique in artificial intelligence that transforms words into dense vectors of real numbers, capturing semantic relationships and contextual similarities between words. Unlike one-hot encoding, which represents words as sparse vectors with no inherent relationship, word embeddings enable models to understand nuances such as synonyms and analogies. Popular algorithms for generating embeddings include Word2Vec, GloVe, and FastText, which improve natural language processing tasks by providing meaningful word representations.

Key Differences: One Hot Encoding vs Word Embedding

One Hot Encoding represents words as sparse vectors with a single high bit and no inherent semantic meaning, leading to high dimensionality and inefficiency in capturing word relationships. Word Embedding transforms words into dense, low-dimensional vectors that capture semantic similarities and contextual relevance, improving performance in natural language processing tasks. Unlike One Hot Encoding, Word Embeddings enable models to understand and infer the meaning and relationships between words through continuous vector spaces.

Advantages of Word Embedding

Word embedding captures semantic relationships between words by representing them as dense vectors in continuous vector space, enabling models to understand context and word similarity. Unlike one hot encoding, which creates sparse and high-dimensional vectors without semantic meaning, word embeddings reduce dimensionality and improve computational efficiency. This results in enhanced performance in natural language processing tasks such as sentiment analysis, machine translation, and text classification.

Limitations of One Hot Encoding

One hot encoding creates sparse vectors with high dimensionality, leading to increased memory usage and computational inefficiency in AI models. It fails to capture semantic relationships between words, treating each word as an independent entity without context. This limitation reduces the effectiveness of natural language processing tasks compared to dense, context-aware embeddings like Word2Vec or GloVe.

Popular Word Embedding Methods

Popular word embedding methods such as Word2Vec, GloVe, and FastText capture semantic relationships by converting words into dense vectors, enabling better understanding of context compared to sparse one-hot encoding. Word2Vec leverages continuous bag-of-words and skip-gram models to predict word similarity, while GloVe uses matrix factorization based on word co-occurrence statistics. FastText extends these techniques by incorporating subword information, effectively handling rare and out-of-vocabulary words.

Use Cases: When to Use Each Technique

Word embedding is ideal for natural language processing tasks requiring semantic understanding, such as sentiment analysis, machine translation, and chatbots, because it captures word meaning and context in dense vector representations. One hot encoding suits simpler tasks like text classification with limited vocabularies or feature extraction where interpretability and categorical distinction are essential. Choose word embedding for deep learning models needing contextual word relationships, while one hot encoding is effective for traditional machine learning algorithms handling discrete, non-sequential data.

Impact on Model Performance

Word Embedding significantly enhances model performance by capturing semantic relationships and contextual similarities between words, leading to better generalization in natural language processing tasks. One Hot Encoding, despite its simplicity, results in sparse and high-dimensional vectors that do not convey any semantic meaning, often causing models to require more data and training time to perform well. Consequently, Word Embedding enables more efficient learning and improved accuracy in complex AI models compared to One Hot Encoding.

Future Trends in Text Representation

Future trends in text representation emphasize advanced word embedding techniques like contextual embeddings from transformer models such as BERT and GPT, offering richer semantic understanding compared to traditional one-hot encoding. These embeddings capture dynamic word meanings based on context, enabling more accurate language models and improved natural language processing tasks. Emerging approaches integrate multimodal data and leverage self-supervised learning to further enhance text representation efficiency and scalability.

Word Embedding vs One Hot Encoding Infographic

techiny.com

techiny.com