Precision measures how accurate a model's positive predictions are: it is the ratio of true positives to all predicted positives, so high precision means few false positives. Recall measures how completely a model finds the relevant instances: it is the ratio of true positives to all actual positives, so high recall means few false negatives. Balancing precision and recall is central to tuning a model for a specific task, such as fraud detection or medical diagnosis.

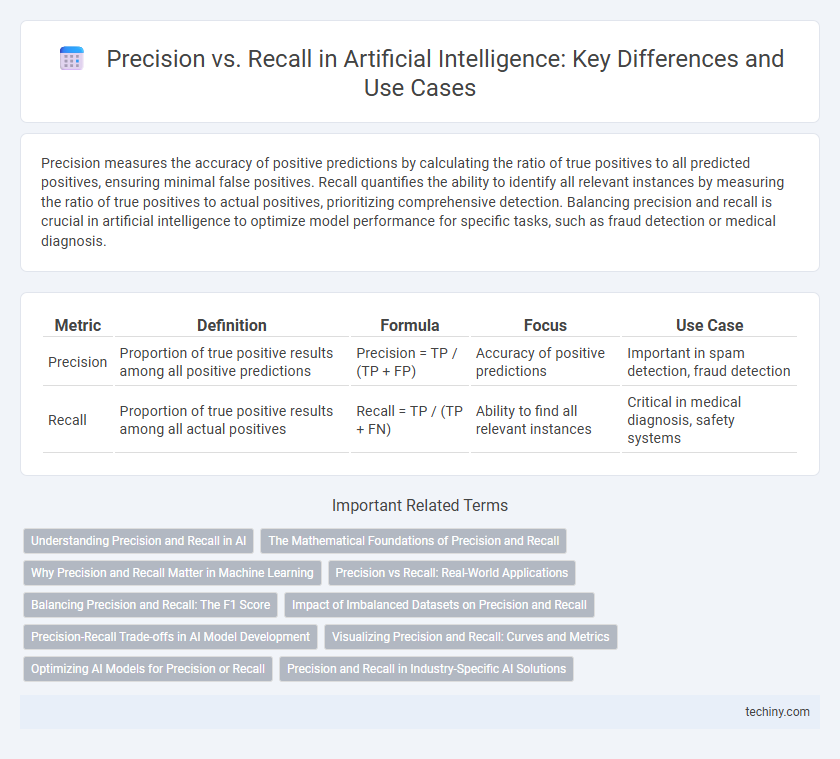

Table of Comparison

| Metric | Definition | Formula | Focus | Use Case |
|---|---|---|---|---|
| Precision | Proportion of true positive results among all positive predictions | Precision = TP / (TP + FP) | Accuracy of positive predictions | Important in spam detection, fraud detection |
| Recall | Proportion of true positive results among all actual positives | Recall = TP / (TP + FN) | Ability to find all relevant instances | Critical in medical diagnosis, safety systems |

Understanding Precision and Recall in AI

In artificial intelligence, precision is the proportion of predicted positives that are actually positive, and recall is the proportion of actual positives that the model detects. The two usually pull against each other: making a model more willing to predict the positive class tends to raise recall and lower precision, and making it more cautious does the opposite. Balancing them matters most in applications like medical diagnosis or fraud detection, where both false positives and false negatives carry significant consequences.

The Mathematical Foundations of Precision and Recall

Precision quantifies the ratio of true positive predictions to the total predicted positives, mathematically expressed as TP / (TP + FP). Recall measures the ratio of true positive predictions to all actual positives, denoted by TP / (TP + FN). These metrics derive from the confusion matrix and serve as fundamental indicators for evaluating classification models in artificial intelligence.
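The two formulas can be checked with a few lines of Python. This is a minimal sketch rather than a reference implementation: the function name `precision_recall` and the example counts are illustrative assumptions, and returning 0.0 when a denominator is zero is one convention among several.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (precision, recall) from confusion-matrix counts."""
    # Precision = TP / (TP + FP). Assumed convention: 0.0 if nothing was predicted positive.
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    # Recall = TP / (TP + FN). Assumed convention: 0.0 if there are no actual positives.
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return precision, recall


# Hypothetical counts: 80 true positives, 20 false positives, 40 false negatives.
precision, recall = precision_recall(tp=80, fp=20, fn=40)
print(f"precision={precision:.3f} recall={recall:.3f}")  # precision=0.800 recall=0.667
```

With these counts, 80 of the 100 positive predictions are correct (precision 0.8), while 80 of the 120 actual positives are found (recall about 0.667).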

Why Precision and Recall Matter in Machine Learning

Precision is the ratio of true positives to the sum of true and false positives, so a high value means the model raises few false alarms. Recall is the ratio of true positives to the sum of true positives and false negatives, so a high value means the model misses few actual positives. Both matter in machine learning applications such as medical diagnosis and fraud detection, where false positives and false negatives each carry significant consequences and a single accuracy figure cannot show which kind of error a model is making.

Precision vs Recall: Real-World Applications

Precision matters most where a false positive is costly, as in fraud detection and spam detection, where a wrongly flagged transaction or message disrupts a legitimate user. Recall matters most where a missed case is costly, as in medical diagnosis and search engines, where failing to surface a relevant case or result defeats the purpose of the system. The right balance depends on the domain's tolerance for false positives versus false negatives, and models should be tuned to that tolerance rather than to a generic target.

Balancing Precision and Recall: The F1 Score

The F1 score is the harmonic mean of precision and recall, F1 = 2 × (Precision × Recall) / (Precision + Recall), which gives a single metric that accounts for both false positives and false negatives. It is especially useful on imbalanced datasets, where reporting precision or recall alone can give a misleading picture of performance. Because the harmonic mean is pulled toward the smaller of the two values, a model cannot score well on F1 by excelling at only one of them.
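As a small illustration of how the harmonic mean behaves, the sketch below computes F1 for a balanced and a lopsided pair of values. The function name `f1_score` and the input values are assumptions chosen for the example, and returning 0.0 when both inputs are zero is an assumed convention.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # Assumed convention when both metrics are zero.
    return 2 * precision * recall / (precision + recall)


# Hypothetical values: one balanced model, one with high precision but poor recall.
balanced = f1_score(0.80, 0.80)
lopsided = f1_score(0.95, 0.10)
print(f"balanced F1={balanced:.3f}, lopsided F1={lopsided:.3f}")
```

The lopsided pair has an arithmetic mean of 0.525, but its F1 is only about 0.181, which is why F1 penalizes a model that trades one metric away entirely.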

Impact of Imbalanced Datasets on Precision and Recall

Imbalanced datasets strongly affect precision and recall, and models trained on them often end up with high precision but low recall, or the reverse, because one class dominates the data. When positive cases are rare, recall tends to suffer because the model leans toward the majority class and misses true positives, while precision can look high simply because the model makes very few positive predictions and reserves them for the most obvious cases. Techniques such as resampling, synthetic data generation, and cost-sensitive learning can mitigate these effects and improve the balance between precision and recall.
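One way to see the effect of class imbalance is to hold a classifier's behavior fixed and vary only how rare the positive class is. The sketch below does this under stated assumptions: the true positive rate (0.90), the false positive rate (0.05), and the function name `precision_at_prevalence` are hypothetical values picked for illustration, not measurements from any real model.

```python
def precision_at_prevalence(prevalence: float, tpr: float, fpr: float) -> float:
    """Precision implied by a fixed TPR and FPR at a given positive-class prevalence."""
    tp = tpr * prevalence          # Expected fraction of all samples that are true positives.
    fp = fpr * (1.0 - prevalence)  # Expected fraction of all samples that are false positives.
    return tp / (tp + fp) if (tp + fp) > 0 else 0.0


# Same hypothetical classifier, three different class balances.
for prevalence in (0.5, 0.1, 0.01):
    p = precision_at_prevalence(prevalence, tpr=0.90, fpr=0.05)
    print(f"prevalence={prevalence:.2f} precision={p:.3f}")
```

Recall stays at 0.90 throughout because it depends only on the actual positives, but precision falls from about 0.947 at 50% prevalence to about 0.667 at 10% and about 0.154 at 1%, since the many negatives generate false positives that outnumber the few true positives.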

Precision-Recall Trade-offs in AI Model Development

Precision and recall are core metrics in AI model evaluation: precision measures the accuracy of positive predictions, and recall measures the model's ability to identify all relevant instances. Tuning the precision-recall trade-off means deciding how to weigh false positives against false negatives for the application at hand, for example prioritizing precision in fraud detection to reduce false alarms, or favoring recall in medical diagnosis so that conditions are not missed. Tools such as precision-recall curves, F1 scores, and threshold adjustment help developers tune models to specific performance objectives while limiting the risks of misclassification.

Visualizing Precision and Recall: Curves and Metrics

Precision-Recall (PR) curves plot precision against recall across decision thresholds, making the trade-off between false positives and false negatives visible for a given model. The Area Under the Curve (AUC) of a PR curve summarizes performance across all thresholds in a single number, which is particularly informative on imbalanced datasets. Confusion matrices complement these visual tools by listing the counts of true positives, false positives, true negatives, and false negatives at one chosen threshold.
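The points on a PR curve can be computed by sweeping a threshold over the model's scores. The following is a minimal pure-Python sketch under stated assumptions: `pr_curve`, `average_precision`, the labels, and the scores are all illustrative, and the step-wise sum used for the area is one common way to summarize the curve rather than the only definition.

```python
def pr_curve(y_true: list[int], scores: list[float]) -> list[tuple[float, float, float]]:
    """Return (threshold, precision, recall) for each distinct score, highest threshold first."""
    total_pos = sum(y_true)
    points = []
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 0)
        precision = tp / (tp + fp)  # tp + fp >= 1: at least one score meets its own threshold.
        recall = tp / total_pos if total_pos else 0.0
        points.append((threshold, precision, recall))
    return points


def average_precision(points: list[tuple[float, float, float]]) -> float:
    """Step-wise area under the PR curve: sum of (recall increase) * precision."""
    area, prev_recall = 0.0, 0.0
    for _, precision, recall in points:
        area += (recall - prev_recall) * precision
        prev_recall = recall
    return area


# Hypothetical labels (1 = positive) and model scores.
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
points = pr_curve(y_true, scores)
for threshold, precision, recall in points:
    print(f"threshold={threshold:.1f} precision={precision:.2f} recall={recall:.2f}")
print(f"average precision={average_precision(points):.3f}")
```

Lowering the threshold never decreases recall, while precision can move in either direction, which is why PR curves typically have a sawtooth shape.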

Optimizing AI Models for Precision or Recall

Optimizing an AI model for precision means tuning it to minimize false positives so that its positive predictions are highly reliable, which matters in applications like fraud detection or spam filtering. Optimizing for recall means capturing as many true positives as possible and reducing false negatives, which matters in scenarios such as disease screening or security threat identification. Striking the balance relies on techniques like threshold adjustment, cross-validation, and analysis of performance metrics to align model outputs with specific business objectives and risk tolerances.
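Threshold adjustment can be sketched as a simple search: among the thresholds that meet a required precision, pick the lowest one, since it gives the highest recall. The code below is an illustrative sketch, not a production procedure; `pick_threshold`, the `min_precision` parameter, and the data are assumptions, and in practice the threshold would be chosen on held-out validation data.

```python
def predict(scores: list[float], threshold: float) -> list[int]:
    """Label a sample positive when its score meets the threshold."""
    return [1 if s >= threshold else 0 for s in scores]


def precision_recall(y_true: list[int], y_pred: list[int]) -> tuple[float, float]:
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return precision, recall


def pick_threshold(y_true, scores, min_precision):
    """Return (threshold, precision, recall) for the lowest threshold meeting min_precision.

    Recall cannot increase as the threshold rises, so the lowest qualifying
    threshold is also the qualifying threshold with the highest recall.
    Returns None if no candidate threshold reaches the target.
    """
    for threshold in sorted(set(scores)):  # Ascending: first hit is the lowest threshold.
        precision, recall = precision_recall(y_true, predict(scores, threshold))
        if precision >= min_precision:
            return threshold, precision, recall
    return None


# Hypothetical labels (1 = positive) and model scores.
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
print(pick_threshold(y_true, scores, min_precision=0.75))
print(pick_threshold(y_true, scores, min_precision=0.90))
```

On this toy data, a precision target of 0.75 is met at threshold 0.6 with every positive found, while raising the target to 0.90 pushes the threshold to 0.8 and recall drops to two of the three positives.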

Precision and Recall in Industry-Specific AI Solutions

In industry-specific AI solutions, high precision keeps false positives low so that critical decisions rest on accurate detections, such as fraud identification in finance or defect detection in manufacturing. High recall means the system captures nearly all relevant instances, which is vital in healthcare AI, where a missed diagnosis can be more costly than a false alarm. Balancing the two according to each sector's costs of error improves both reliability and operational efficiency.

Precision vs Recall Infographic

techiny.com
