Data sparsity refers to the scarcity of available data points in a dataset, which limits the effectiveness of training AI models and reduces their ability to generalize. Data imbalance occurs when certain classes or categories dominate the dataset, causing models to become biased towards the majority class and perform poorly on minority classes. Addressing both data sparsity and data imbalance is critical for building robust AI systems that deliver accurate and fair predictions across diverse scenarios.

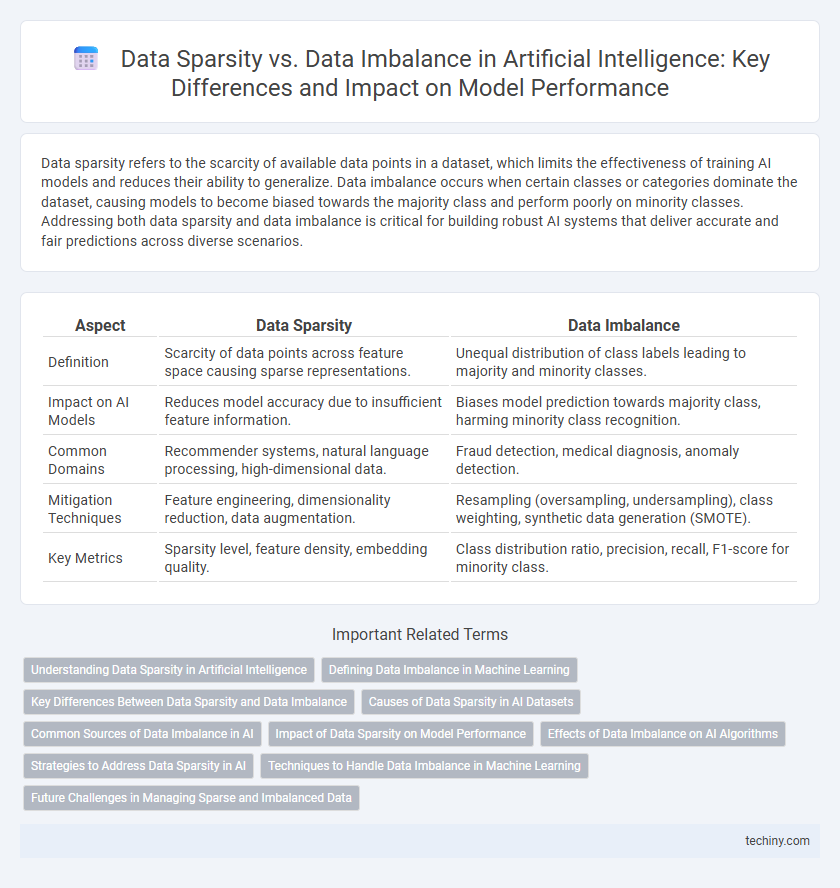

Table of Comparison

| Aspect | Data Sparsity | Data Imbalance |

|---|---|---|

| Definition | Scarcity of data points across feature space causing sparse representations. | Unequal distribution of class labels leading to majority and minority classes. |

| Impact on AI Models | Reduces model accuracy due to insufficient feature information. | Biases model prediction towards majority class, harming minority class recognition. |

| Common Domains | Recommender systems, natural language processing, high-dimensional data. | Fraud detection, medical diagnosis, anomaly detection. |

| Mitigation Techniques | Feature engineering, dimensionality reduction, data augmentation. | Resampling (oversampling, undersampling), class weighting, synthetic data generation (SMOTE). |

| Key Metrics | Sparsity level, feature density, embedding quality. | Class distribution ratio, precision, recall, F1-score for minority class. |

Understanding Data Sparsity in Artificial Intelligence

Data sparsity in artificial intelligence refers to situations where the available dataset has a large number of features with many missing or zero values, hindering model performance and generalization. This challenge often arises in high-dimensional spaces like natural language processing or recommender systems, where sparse data limits learning efficiency and prediction accuracy. Addressing data sparsity requires specialized techniques such as dimensionality reduction, feature engineering, or embedding methods to ensure robust AI model development and improved decision-making.

Defining Data Imbalance in Machine Learning

Data imbalance in machine learning refers to a disproportionate distribution of classes within a dataset, where one class significantly outnumbers others, leading to biased model performance. This issue often results in poor predictive accuracy for minority classes, as standard algorithms tend to favor the majority class. Addressing data imbalance involves techniques such as resampling, synthetic data generation, and cost-sensitive learning to ensure robust and fair model training.

Key Differences Between Data Sparsity and Data Imbalance

Data sparsity refers to the condition where a dataset contains a high proportion of missing or zero-valued entries, leading to insufficient information for robust model training. Data imbalance occurs when the distribution of classes or categories within a dataset is uneven, causing biased learning toward the majority class. Key differences include data sparsity impacting feature completeness, while data imbalance affects class representation and model performance metrics.

Causes of Data Sparsity in AI Datasets

Data sparsity in AI datasets primarily arises from insufficient data collection, leading to a high proportion of missing or zero-valued entries, especially in domains like natural language processing and recommender systems. Rare events or infrequent user interactions contribute significantly to this scarcity, causing sparse feature representations that hinder model learning. Furthermore, limited access to comprehensive, high-quality data due to privacy concerns, cost constraints, and data fragmentation exacerbates the sparsity challenge in AI applications.

Common Sources of Data Imbalance in AI

Common sources of data imbalance in AI include underrepresented classes due to natural rarity or data collection biases, skewed annotation efforts favoring certain categories, and sampling methods that do not reflect the true distribution of the population. These imbalances often arise from privacy constraints limiting access to sensitive data or imbalanced user interactions generating uneven datasets. Addressing these challenges is critical for improving model fairness, robustness, and overall performance in machine learning applications.

Impact of Data Sparsity on Model Performance

Data sparsity significantly degrades model performance by limiting the availability of representative samples, causing difficulty in capturing underlying patterns and increasing the risk of overfitting. Sparse datasets reduce the effectiveness of feature learning, leading to poor generalization on unseen data in machine learning and deep learning models. Addressing sparsity through techniques like data augmentation, dimensionality reduction, or transfer learning is crucial for enhancing model robustness and predictive accuracy.

Effects of Data Imbalance on AI Algorithms

Data imbalance in AI algorithms leads to biased model predictions by overrepresenting majority classes, resulting in poor generalization for minority classes. This imbalance often causes skewed accuracy metrics, misleading performance assessments and reducing the effectiveness of classification tasks. Addressing data imbalance through techniques like resampling or synthetic data generation is essential to improve model robustness and fairness in predictive analytics.

Strategies to Address Data Sparsity in AI

Data sparsity in AI poses challenges such as reduced model accuracy and limited generalization capabilities due to insufficient and scattered data points. Strategies to address data sparsity include data augmentation techniques, synthetic data generation using generative adversarial networks (GANs), and transfer learning to leverage pre-trained models from related domains. Employing matrix factorization and embedding methods also helps mitigate sparsity by extracting latent features and enhancing data representation for improved AI model performance.

Techniques to Handle Data Imbalance in Machine Learning

Techniques to handle data imbalance in machine learning include resampling methods such as oversampling the minority class using SMOTE (Synthetic Minority Over-sampling Technique) or undersampling the majority class to balance the dataset. Cost-sensitive learning assigns higher misclassification penalties to minority classes, improving model focus on underrepresented data without altering the original distribution. Ensemble methods like Balanced Random Forest and EasyEnsemble combine multiple classifiers trained on balanced subsets, enhancing robustness against skewed class distributions.

Future Challenges in Managing Sparse and Imbalanced Data

Future challenges in managing sparse and imbalanced data in artificial intelligence include developing robust algorithms that effectively handle limited and skewed datasets without compromising model accuracy. Advances in few-shot learning and synthetic data generation are critical to overcoming these obstacles by augmenting scarce data and balancing class distributions. Ensuring fairness and reducing bias in AI models remain paramount as data sparsity and imbalance can exacerbate existing disparities.

Data Sparsity vs Data Imbalance Infographic

techiny.com

techiny.com