Monte Carlo Tree Search (MCTS) excels in complex decision-making scenarios with very large search spaces by using random simulations to evaluate moves, in contrast to alpha-beta pruning, which systematically eliminates branches of a game tree that cannot change the minimax result. MCTS produces statistical value estimates that improve as more simulations are run, while alpha-beta pruning relies on a deterministic evaluation function and benefits significantly from effective move ordering. In practice, MCTS is favored for games with very large branching factors or no reliable evaluation function, whereas alpha-beta pruning remains efficient for two-player zero-sum games with good evaluation heuristics, such as chess and checkers.

Table of Comparison

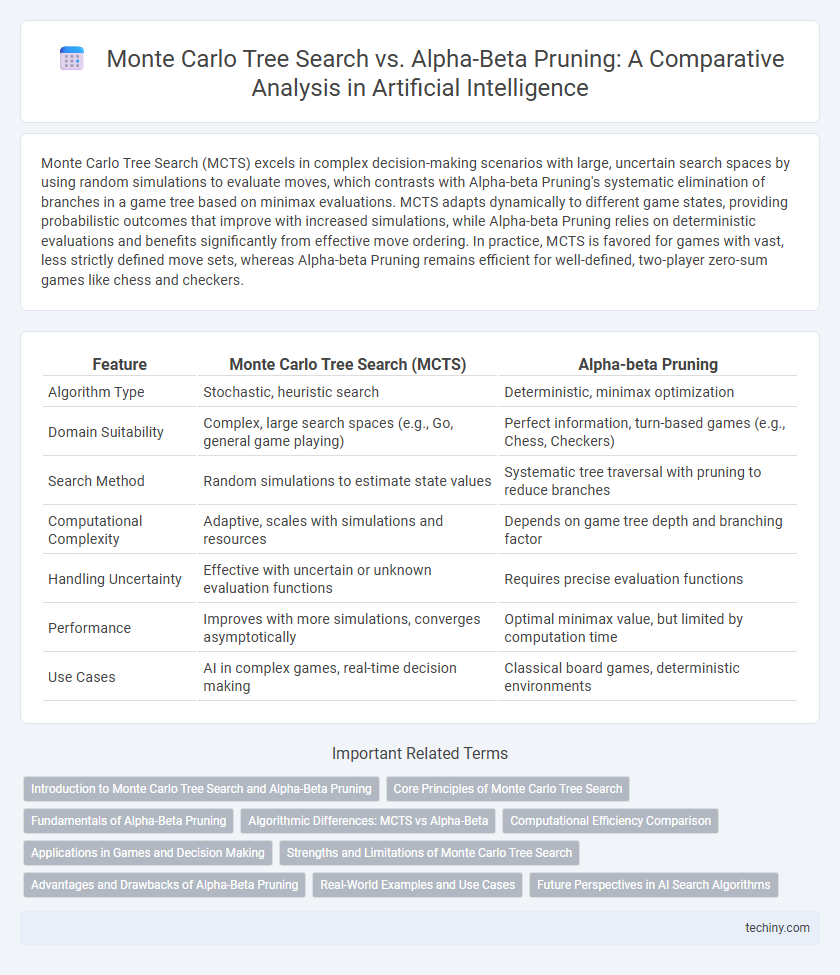

| Feature | Monte Carlo Tree Search (MCTS) | Alpha-beta Pruning |
|---|---|---|
| Algorithm Type | Stochastic, heuristic search | Deterministic, minimax optimization |
| Domain Suitability | Complex, large search spaces (e.g., Go, general game playing) | Perfect information, turn-based games (e.g., Chess, Checkers) |
| Search Method | Random simulations to estimate state values | Systematic tree traversal with pruning to reduce branches |
| Computational Complexity | Adaptive, scales with simulations and resources | Depends on game tree depth and branching factor |
| Handling Uncertainty | Effective with uncertain or unknown evaluation functions | Requires precise evaluation functions |
| Performance | Improves with more simulations, converges asymptotically | Optimal minimax value, but limited by computation time |
| Use Cases | AI in complex games, real-time decision making | Classical board games, deterministic environments |

Introduction to Monte Carlo Tree Search and Alpha-Beta Pruning

Monte Carlo Tree Search (MCTS) leverages randomized simulations to evaluate game states by balancing exploration and exploitation, making it highly effective in complex decision-making problems with large search spaces. Alpha-beta pruning enhances the classic minimax algorithm by eliminating branches in the search tree that cannot influence the final decision, significantly reducing computational overhead in deterministic, perfect-information games. While MCTS excels in games such as Go, where the branching factor is very large and positions are hard to evaluate heuristically, alpha-beta pruning remains a strong choice for turn-based, two-player games such as chess, where good evaluation functions exist.

Core Principles of Monte Carlo Tree Search

Monte Carlo Tree Search (MCTS) relies on iterative simulation and statistical sampling to evaluate game tree nodes, balancing exploration and exploitation through the Upper Confidence Bound for Trees (UCT) formula. It constructs search trees guided by random playouts, progressively refining value estimates based on actual outcomes rather than heuristic evaluations. This stochastic approach contrasts with Alpha-beta Pruning, which deterministically trims branches to optimize minimax search paths in deterministic, perfect-information games.
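The four MCTS phases (selection, expansion, simulation, backpropagation) can be sketched on a toy game. The example below is a minimal illustration, not a production implementation. It assumes a simple Nim variant chosen for this sketch: players alternately take 1 to 3 stones and whoever takes the last stone wins. The names `Node` and `mcts_best_move` are hypothetical, and the starting pile must contain at least one stone.

```python
import math
import random


class Node:
    """One game state in the search tree (toy Nim: take 1-3 stones)."""

    def __init__(self, stones, player_just_moved, parent=None, move=None):
        self.stones = stones
        self.player_just_moved = player_just_moved  # 0 or 1
        self.parent = parent
        self.move = move
        self.children = []
        self.untried = [m for m in (1, 2, 3) if m <= stones]
        self.visits = 0
        self.wins = 0.0  # counted for player_just_moved

    def uct_child(self, c):
        # Mean reward (exploitation) plus a bonus for rarely visited children.
        return max(
            self.children,
            key=lambda ch: ch.wins / ch.visits
            + c * math.sqrt(math.log(self.visits) / ch.visits),
        )


def mcts_best_move(stones, iterations=2000, c=math.sqrt(2), seed=0):
    rng = random.Random(seed)
    root = Node(stones, player_just_moved=1)  # player 0 moves at the root
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded nodes using UCT.
        while not node.untried and node.children:
            node = node.uct_child(c)
        # 2. Expansion: add one child for a move not yet tried.
        if node.untried:
            move = node.untried.pop(rng.randrange(len(node.untried)))
            child = Node(node.stones - move, 1 - node.player_just_moved, node, move)
            node.children.append(child)
            node = child
        # 3. Simulation: play uniformly random moves until the pile is empty.
        remaining, last = node.stones, node.player_just_moved
        while remaining > 0:
            remaining -= rng.randint(1, min(3, remaining))
            last = 1 - last
        winner = last  # whoever took the last stone wins
        # 4. Backpropagation: update statistics along the path to the root.
        while node is not None:
            node.visits += 1
            if node.player_just_moved == winner:
                node.wins += 1
            node = node.parent
    # The most visited root child is the usual "robust" choice.
    return max(root.children, key=lambda ch: ch.visits).move


print(mcts_best_move(5))
```

In this Nim variant the winning strategy is to leave the opponent a multiple of four stones, so from a pile of 5 the search should settle on taking 1. No evaluation function is used anywhere: the value estimates come only from playout results.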

Fundamentals of Alpha-Beta Pruning

Alpha-beta pruning improves the minimax algorithm by eliminating branches in the game tree that do not influence the final decision, significantly reducing the search space. It uses two parameters, alpha and beta, to track the best already explored options along the path for both players, allowing the algorithm to prune away moves that cannot possibly affect the outcome. This optimization enables faster decision-making in deterministic, perfect-information games like chess and checkers, where exhaustive search is computationally expensive.
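As a rough sketch of how the alpha and beta bounds trigger a cutoff, the following function runs alpha-beta over a game tree written as nested Python lists, where inner lists are positions and numbers are leaf evaluations. This tree representation and the `visited` argument are assumptions made for the illustration only.

```python
import math


def alphabeta(node, alpha=-math.inf, beta=math.inf, maximizing=True, visited=None):
    """Return the minimax value of `node`, skipping branches that cannot matter."""
    if not isinstance(node, list):  # leaf: a static evaluation
        if visited is not None:
            visited.append(node)
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, alpha, beta, False, visited))
            alpha = max(alpha, value)  # best score the maximizer can force so far
            if alpha >= beta:
                break  # the minimizer above will never allow this line
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, alpha, beta, True, visited))
        beta = min(beta, value)  # best score the minimizer can force so far
        if alpha >= beta:
            break  # the maximizer above already has a better option
    return value


tree = [[3, 5], [2, 9], [0, 1]]  # maximizer to move, then minimizer
seen = []
print(alphabeta(tree, visited=seen), seen)  # 3 [3, 5, 2, 0]
```

After the first subtree establishes that the maximizer can secure 3, the leaves 9 and 1 are never evaluated: once the minimizer finds a reply worth 2 (or 0), that subtree cannot beat 3.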

Algorithmic Differences: MCTS vs Alpha-Beta

Monte Carlo Tree Search (MCTS) utilizes randomized simulations to explore game states, balancing exploration and exploitation through the Upper Confidence Bound for Trees (UCT) formula. Alpha-beta pruning enhances minimax search by systematically eliminating branches that cannot affect the final decision, reducing the number of nodes evaluated and improving search efficiency in deterministic games. Unlike Alpha-beta's depth-limited, adversarial evaluation, MCTS adapts dynamically to large or complex search spaces using stochastic sampling, making it particularly effective in imperfect-information or high-branching scenarios.
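For reference, the UCT selection rule mentioned above is commonly written as follows. The symbols are introduced here for illustration: w_j is the total reward accumulated through child j, n_j is the number of visits to child j, N is the number of visits to the parent, and c is an exploration constant (a common choice is the square root of 2 when rewards lie in [0, 1]).

```latex
\mathrm{UCT}(j) = \frac{w_j}{n_j} + c \sqrt{\frac{\ln N}{n_j}}
```

The first term favors children with a high average reward (exploitation), while the second term grows for children that have been visited rarely relative to their parent (exploration). During selection, the child with the highest UCT value is chosen.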

Computational Efficiency Comparison

Monte Carlo Tree Search (MCTS) employs stochastic simulations to evaluate game states, offering a flexible approach adaptable to complex, high-branching problems but often requiring many simulations before its estimates converge. Alpha-beta pruning enhances minimax by systematically eliminating branches that cannot influence the final decision, resulting in fewer node evaluations and faster search in deterministic, perfect-information games. Computational efficiency in MCTS depends on the number of simulations and the cost of each playout, and its tree is held in memory as it grows. Alpha-beta pruning, as a depth-first search, needs memory proportional only to the search depth, and with ideal move ordering it evaluates roughly b^(d/2) leaves instead of minimax's b^d for branching factor b and depth d, which lets it search about twice as deep in the same time.
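To put numbers on the savings from pruning, the short calculation below compares the leaf count of plain minimax with the standard best-case count for alpha-beta on a uniform tree (the Knuth and Moore result for perfect move ordering). The function names are hypothetical, and the branching factor of 35 is only a commonly quoted rough figure for chess.

```python
def minimax_leaves(b, d):
    """Leaves evaluated by plain minimax on a uniform tree."""
    return b ** d


def alphabeta_best_case_leaves(b, d):
    """Leaves evaluated by alpha-beta when the best move is always tried first."""
    return b ** ((d + 1) // 2) + b ** (d // 2) - 1


b, d = 35, 4
print(minimax_leaves(b, d))              # 1500625
print(alphabeta_best_case_leaves(b, d))  # 2449
```

This is a best-case bound: real engines fall somewhere between the two figures, depending on how good their move ordering is.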

Applications in Games and Decision Making

Monte Carlo Tree Search (MCTS) excels in complex games with vast state spaces like Go and, with adaptations for hidden information, card games like poker, using random simulations to evaluate moves and enabling flexible decision-making under uncertainty. Alpha-beta pruning optimizes minimax search in deterministic, perfect-information games such as chess by efficiently eliminating suboptimal branches, speeding up decision time without changing the minimax result. Hybrid approaches that combine MCTS with alpha-beta-style search aim to improve AI performance in strategic games and real-time decision-making scenarios by balancing exploration with computational efficiency.

Strengths and Limitations of Monte Carlo Tree Search

Monte Carlo Tree Search (MCTS) excels in handling vast and complex decision spaces by using random simulations to evaluate moves, making it particularly effective in games with large branching factors like Go. Its primary strength lies in balancing exploration and exploitation without requiring an accurate heuristic, allowing it to adapt dynamically to unknown environments. However, MCTS suffers from computational intensity and slower convergence compared to Alpha-beta Pruning, especially in deterministic, low-branching games where heuristic-based pruning efficiently reduces the search space.

Advantages and Drawbacks of Alpha-Beta Pruning

Alpha-beta pruning significantly reduces the number of nodes evaluated in game trees by eliminating branches that cannot affect the final decision, enhancing search efficiency in deterministic, perfect-information games like chess. Its main drawback lies in its dependency on move ordering; inefficient ordering can result in minimal pruning and degraded performance. Unlike Monte Carlo Tree Search, alpha-beta pruning struggles with handling uncertainty and probabilistic outcomes, limiting its applicability in complex or stochastic environments.
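The dependence on move ordering can be seen in a small experiment. The sketch below runs alpha-beta over two copies of the same nested-list game tree (an assumed toy representation in which numbers are leaf evaluations), one with the strongest moves listed first and one with them listed last, and counts how many leaves each search evaluates. The name `alphabeta_count` is hypothetical.

```python
import math


def alphabeta_count(node, alpha=-math.inf, beta=math.inf, maximizing=True):
    """Return (minimax value, number of leaves evaluated)."""
    if not isinstance(node, list):
        return node, 1
    best = -math.inf if maximizing else math.inf
    leaves = 0
    for child in node:
        value, n = alphabeta_count(child, alpha, beta, not maximizing)
        leaves += n
        if maximizing:
            best = max(best, value)
            alpha = max(alpha, best)
        else:
            best = min(best, value)
            beta = min(beta, best)
        if alpha >= beta:
            break  # cutoff: remaining siblings cannot change the result
    return best, leaves


well_ordered = [[3, 5], [2, 9], [0, 1]]   # strongest replies listed first
badly_ordered = [[1, 0], [9, 2], [5, 3]]  # same tree, strongest replies last

print(alphabeta_count(well_ordered))   # (3, 4)
print(alphabeta_count(badly_ordered))  # (3, 6)
```

Both searches return the same value, but the badly ordered tree yields no cutoffs at all, so every leaf is evaluated, exactly as plain minimax would do.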

Real-World Examples and Use Cases

Monte Carlo Tree Search (MCTS) excels in complex decision-making environments like Go and real-time strategy games, where it evaluates vast possibilities through randomized simulations. Alpha-beta pruning is highly effective in deterministic, turn-based games such as chess and checkers, optimizing minimax searches by eliminating redundant branches. In robotics and automated planning, MCTS adapts to uncertain environments while alpha-beta pruning remains preferred for clearly defined, adversarial scenarios.

Future Perspectives in AI Search Algorithms

Monte Carlo Tree Search (MCTS) offers enhanced exploration capabilities in complex and uncertain environments, making it well suited to future AI applications in real-time decision-making and games with vast state spaces. Alpha-beta pruning remains efficient for adversarial search problems with well-defined heuristics, but it scales less well to very large branching factors than MCTS's sampling-based approach. The future of AI search algorithms likely involves hybrid models combining MCTS's adaptability with alpha-beta's pruning efficiency to optimize performance across diverse problem domains.

Monte Carlo Tree Search vs Alpha-beta Pruning Infographic

techiny.com
