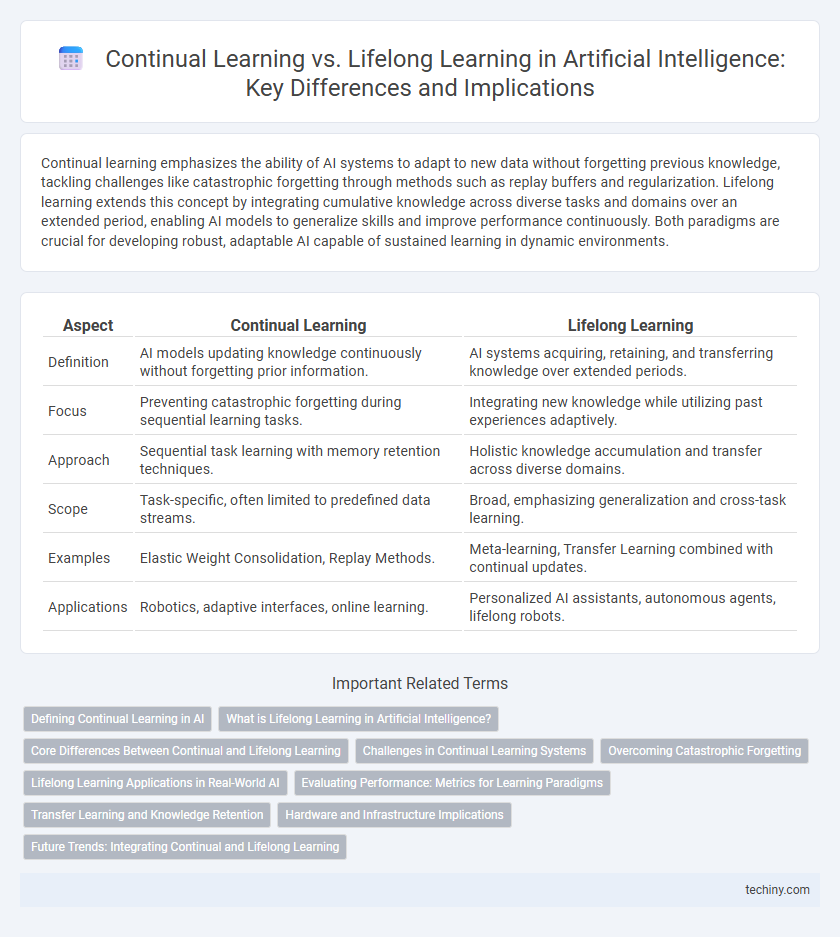

Continual learning emphasizes the ability of AI systems to adapt to new data without forgetting previous knowledge, tackling challenges like catastrophic forgetting through methods such as replay buffers and regularization. Lifelong learning extends this concept by integrating cumulative knowledge across diverse tasks and domains over an extended period, enabling AI models to generalize skills and improve performance continuously. Both paradigms are crucial for developing robust, adaptable AI capable of sustained learning in dynamic environments.

Table of Comparison

| Aspect | Continual Learning | Lifelong Learning |
|---|---|---|
| Definition | AI models updating knowledge continuously without forgetting prior information. | AI systems acquiring, retaining, and transferring knowledge over extended periods. |
| Focus | Preventing catastrophic forgetting during sequential learning tasks. | Integrating new knowledge while utilizing past experiences adaptively. |
| Approach | Sequential task learning with memory retention techniques. | Holistic knowledge accumulation and transfer across diverse domains. |
| Scope | Task-specific, often limited to predefined data streams. | Broad, emphasizing generalization and cross-task learning. |
| Example methods | Elastic Weight Consolidation, replay methods. | Meta-learning; transfer learning combined with continual updates. |
| Applications | Robotics, adaptive interfaces, online learning. | Personalized AI assistants, autonomous agents, lifelong robots. |

Defining Continual Learning in AI

Continual Learning in Artificial Intelligence refers to the capability of models to incrementally acquire, fine-tune, and transfer knowledge over time from a continuous stream of data without forgetting previously learned information, addressing the challenge of catastrophic forgetting. Unlike traditional static learning paradigms, Continual Learning enables AI systems to adapt dynamically to new tasks and environments, thereby improving their generalization and robustness. This concept is fundamental in developing autonomous systems capable of evolving through experience in real-world applications.
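
To make "incrementally acquire knowledge from a continuous stream" concrete, here is a minimal Python sketch of one very simple continual learner: a nearest-class-mean classifier. This is a swapped-in illustrative technique, not a method named in this article. It keeps a running mean per class, so new classes can be learned from the stream without revisiting old data and without overwriting what was stored for earlier classes. The class name `NearestClassMean` and the toy 2-D data are hypothetical.

```python
import math
from collections import defaultdict


class NearestClassMean:
    """Hypothetical streaming classifier that stores one running mean per class.

    Learning a new class only adds a new mean, so the means of earlier
    classes are never modified by later data.
    """

    def __init__(self):
        self.means = {}                 # label -> running mean vector (tuple)
        self.counts = defaultdict(int)  # label -> number of samples seen so far

    def learn_one(self, x, label):
        # Incremental mean update: mean_n = mean_(n-1) + (x - mean_(n-1)) / n
        self.counts[label] += 1
        n = self.counts[label]
        if label not in self.means:
            self.means[label] = tuple(x)
        else:
            m = self.means[label]
            self.means[label] = tuple(mi + (xi - mi) / n for mi, xi in zip(m, x))

    def predict(self, x):
        # Assign x to the class whose mean is closest in Euclidean distance.
        return min(self.means, key=lambda c: math.dist(x, self.means[c]))


model = NearestClassMean()
# First part of the stream: classes "a" and "b" (toy 2-D points).
for x, y in [((0.0, 0.0), "a"), ((0.2, 0.1), "a"), ((5.0, 5.0), "b"), ((5.2, 4.9), "b")]:
    model.learn_one(x, y)
# Later part of the stream: a brand-new class "c"; no earlier data is revisited.
for x, y in [((0.0, 9.0), "c"), ((0.3, 9.1), "c")]:
    model.learn_one(x, y)

print(model.predict((0.1, 0.0)), model.predict((5.1, 5.0)), model.predict((0.1, 9.0)))
# prints: a b c
```

Because each class keeps its own stored mean, this learner cannot catastrophically forget; the trade-off is that it is far less expressive than a neural network, which is why deep continual learning needs the techniques discussed later in this article.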

What is Lifelong Learning in Artificial Intelligence?

Lifelong learning in artificial intelligence refers to the ability of AI systems to acquire, retain, and transfer knowledge throughout their operational lifetime without forgetting previous information. Beyond avoiding forgetting, it stresses accumulating knowledge across many tasks and domains and reusing it, so that models adapt to new tasks and environments dynamically and improve performance across diverse domains. Like continual learning, it contrasts with traditional static learning, promoting more flexible and robust AI systems that keep evolving with sustained experience.

Core Differences Between Continual and Lifelong Learning

Continual learning in artificial intelligence focuses on enabling models to learn from a stream of data without forgetting previously acquired knowledge, addressing the problem of catastrophic forgetting. Lifelong learning encompasses continual learning but extends to adaptive knowledge accumulation and transfer across diverse tasks over an extended period. The core difference lies in continual learning's emphasis on incremental updates and memory retention, while lifelong learning prioritizes comprehensive knowledge integration and long-term adaptability.

Challenges in Continual Learning Systems

Continual Learning systems face several challenges. The most prominent is catastrophic forgetting, where a model loses previously acquired knowledge when it learns new tasks; memory constraints compound this by limiting how much past data can be retained. Balancing stability and plasticity remains difficult, because models must adapt to new information without overwriting what they learned earlier. Scalability also becomes an issue as diverse datasets arrive sequentially, demanding sophisticated algorithms to maintain performance over time.
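
Catastrophic forgetting is easy to reproduce even in a one-parameter model. The Python sketch below trains a linear model y_hat = w * x by gradient descent on a toy task A (y = 2x), then continues training on a toy task B (y = -x) with no access to task A's data. Both tasks and the helper names `mse` and `train` are hypothetical illustrations.

```python
# Two hypothetical toy regression tasks sharing the same inputs.
xs = [-1.0, -0.5, 0.5, 1.0]
task_a = [(x, 2.0 * x) for x in xs]   # task A: y = 2x
task_b = [(x, -1.0 * x) for x in xs]  # task B: y = -x


def mse(w, data):
    """Mean squared error of the one-parameter model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def train(w, data, lr=0.1, epochs=200):
    """Full-batch gradient descent on the mean squared error."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


w = train(0.0, task_a)            # learn task A
loss_a_before = mse(w, task_a)
w = train(w, task_b)              # then learn task B without any task A data
loss_a_after = mse(w, task_a)
print(f"task A loss after training on A: {loss_a_before:.4f}")
print(f"task A loss after training on B: {loss_a_after:.4f}")
```

The single shared parameter is pulled all the way to task B's optimum (w = -1), so task A's error rises from essentially zero to mean(x²) · (2 - (-1))² = 0.625 · 9 = 5.625. Larger networks forget for the same underlying reason: parameters shared between tasks get overwritten.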

Overcoming Catastrophic Forgetting

Both continual learning and lifelong learning in artificial intelligence must overcome catastrophic forgetting, in which a model loses previously acquired knowledge when it learns new information. Common techniques tackle it in different ways: elastic weight consolidation penalizes changes to parameters that were important for earlier tasks, memory replay rehearses stored past examples alongside new data, and dynamic architecture expansion adds capacity for new tasks instead of overwriting existing parameters. These approaches let AI systems keep adapting without sacrificing earlier competencies, improving robustness and knowledge retention over time.
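
Elastic weight consolidation can be sketched on a toy problem. EWC adds a quadratic penalty (lam / 2) · F · (w - w_A)² to the new task's loss, where w_A is the parameter learned on task A and F estimates how important that parameter was for task A (its Fisher information). In this one-parameter Python sketch the tasks (y = 2x, then y = -x), `lam = 2.0`, and the helper names are hypothetical; F is computed as mean(x²), which is the Fisher information of a linear model with unit-variance Gaussian noise.

```python
xs = [-1.0, -0.5, 0.5, 1.0]
task_a = [(x, 2.0 * x) for x in xs]   # hypothetical task A: y = 2x
task_b = [(x, -1.0 * x) for x in xs]  # hypothetical task B: y = -x


def mse(w, data):
    """Mean squared error of the one-parameter model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def mse_grad(w, data):
    """Derivative of mse with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


def train(w, data, lr=0.1, epochs=200, anchor=None, fisher=0.0, lam=0.0):
    """Gradient descent on the task loss; if anchor is given, also on the
    EWC penalty (lam / 2) * fisher * (w - anchor) ** 2."""
    for _ in range(epochs):
        grad = mse_grad(w, data)
        if anchor is not None:
            grad += lam * fisher * (w - anchor)  # gradient of the EWC penalty
        w -= lr * grad
    return w


w_a = train(0.0, task_a)
# Importance of w for task A: for y ~ N(w * x, 1) the Fisher information is mean(x^2).
fisher_a = sum(x * x for x, _ in task_a) / len(task_a)

w_plain = train(w_a, task_b)                                         # no protection
w_ewc = train(w_a, task_b, anchor=w_a, fisher=fisher_a, lam=2.0)     # EWC-regularized

for name, w in [("plain", w_plain), ("EWC", w_ewc)]:
    print(f"{name:5s} w={w:+.3f} loss A={mse(w, task_a):.3f} loss B={mse(w, task_b):.3f}")
```

Setting the gradient of the penalized loss to zero gives w = (w_A - 1) / 2 ≈ 0.5 for these settings, so EWC gives up some task B accuracy in exchange for much less task A forgetting (task A loss about 1.41 instead of 5.625). A larger `lam` keeps w closer to w_A; a smaller one favors the new task.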

Lifelong Learning Applications in Real-World AI

Lifelong learning in AI enables systems to continuously acquire, fine-tune, and transfer knowledge across tasks without forgetting previous experiences, an ability that is crucial for adapting to dynamic real-world environments. Applications include personalized recommendation systems that evolve with user preferences, autonomous vehicles that improve their decision-making through ongoing interaction with the environment, and adaptive robots capable of learning new skills over time. This capability enhances robustness and scalability in AI models deployed in healthcare diagnostics, financial fraud detection, and smart home automation.

Evaluating Performance: Metrics for Learning Paradigms

Evaluating performance in continual learning and lifelong learning involves distinct metrics tailored to each paradigm, such as accuracy retention, forgetting rate, and forward transfer. Continual learning metrics emphasize minimizing catastrophic forgetting and maximizing knowledge retention across sequential tasks, while lifelong learning evaluation often adds measures of adaptability and cumulative learning efficiency over extended periods. Choosing appropriate metrics allows a precise assessment of a model's ability to learn continuously without degrading previously acquired knowledge.
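
Metrics like these are often computed from an accuracy matrix. The Python sketch below assumes a convention common in the continual learning literature: R[i][j] is test accuracy on task j after training through task i; average accuracy (ACC) is the mean of the last row; backward transfer (BWT) compares final accuracy with accuracy right after each task was learned; forward transfer (FWT) compares accuracy on a task just before training on it with an untrained baseline; and average forgetting, one way to formalize a forgetting rate, compares final accuracy with the best earlier accuracy. The function name and all accuracy values are hypothetical, chosen only to show the arithmetic; they are not measured results.

```python
def continual_metrics(R, baseline):
    """Summary metrics from a T x T accuracy matrix (requires T >= 2).

    R[i][j]     : accuracy on task j after training through task i
    baseline[j] : accuracy on task j of the model before any training
    """
    T = len(R)
    final = R[T - 1]
    # Average accuracy over all tasks at the end of training.
    acc = sum(final) / T
    # Backward transfer: final accuracy minus accuracy right after learning each task.
    bwt = sum(final[j] - R[j][j] for j in range(T - 1)) / (T - 1)
    # Forward transfer: accuracy on task j just before training on it, minus baseline.
    fwt = sum(R[j - 1][j] - baseline[j] for j in range(1, T)) / (T - 1)
    # Average forgetting: best accuracy seen before the end minus final accuracy.
    forgetting = sum(
        max(R[i][j] for i in range(T - 1)) - final[j] for j in range(T - 1)
    ) / (T - 1)
    return {"ACC": acc, "BWT": bwt, "FWT": fwt, "forgetting": forgetting}


# Hypothetical accuracies for three sequential tasks (illustrative numbers only).
R = [
    [0.90, 0.20, 0.15],
    [0.70, 0.88, 0.25],
    [0.60, 0.75, 0.92],
]
baseline = [0.10, 0.10, 0.10]

m = continual_metrics(R, baseline)
for name, value in m.items():
    print(f"{name}: {value:.3f}")
```

For this made-up matrix, ACC ≈ 0.757, BWT = -0.215 (negative, so later training hurt earlier tasks), FWT = 0.125 (earlier training helped before a task was seen), and average forgetting = 0.215.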

Transfer Learning and Knowledge Retention

Continual Learning carries knowledge from previous tasks into new ones through incremental updates, making a model more adaptable without discarding prior information. Lifelong Learning focuses on preserving accumulated knowledge through robust memory mechanisms that prevent catastrophic forgetting while new experiences are integrated. Both approaches draw on transfer learning techniques to retain knowledge and improve overall AI system performance across evolving domains.
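
Transfer in its simplest form means starting a new task from parameters learned earlier rather than from scratch. This hypothetical Python sketch learns a toy task A (y = 2x), then fits a related toy task C (y = 2.2x) twice, once from w = 0 and once warm-started from task A's parameter, and counts gradient-descent steps until the error drops below a tolerance. The tasks, the tolerance, and the helper names are assumptions for illustration; real systems transfer far richer representations, such as pretrained layers.

```python
xs = [-1.0, -0.5, 0.5, 1.0]
task_a = [(x, 2.0 * x) for x in xs]  # previously learned task: y = 2x
task_c = [(x, 2.2 * x) for x in xs]  # new, closely related task: y = 2.2x


def mse(w, data):
    """Mean squared error of the one-parameter model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def fit(w, data, lr=0.1, tol=1e-4, max_steps=10_000):
    """Gradient descent from initial w; returns (w, number of steps taken)."""
    steps = 0
    while mse(w, data) >= tol and steps < max_steps:
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
        steps += 1
    return w, steps


w_a, _ = fit(0.0, task_a, tol=1e-12)   # knowledge acquired on task A
_, cold_steps = fit(0.0, task_c)        # learn task C from scratch
_, warm_steps = fit(w_a, task_c)        # learn task C starting from task A's parameter
print(f"steps from scratch: {cold_steps}, steps with transfer: {warm_steps}")
```

The warm start needs fewer steps here only because task C's optimum (2.2) is close to task A's (2.0); for unrelated tasks the same initialization could help less or even slow learning down, which is usually called negative transfer.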

Hardware and Infrastructure Implications

Continual Learning demands adaptive hardware architectures with efficient memory management and real-time data processing capabilities to support incremental model updates without retraining from scratch. Lifelong Learning necessitates scalable computing infrastructures that maintain long-term knowledge retention and facilitate integration of diverse data streams across extended periods. Both approaches require optimized energy consumption and robust storage solutions to handle evolving datasets and dynamic model evolution.
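
On the software side, one common way to keep replay memory bounded no matter how long the data stream runs is a reservoir-sampling buffer: after n examples have been seen, each of them has the same chance, capacity / n, of being stored. The Python sketch below is a hypothetical minimal version; the class name, the capacity, and the `(task_id, index)` stand-ins for real training examples are assumptions.

```python
import random


class ReservoirReplayBuffer:
    """Hypothetical fixed-capacity memory of past examples (reservoir sampling)."""

    def __init__(self, capacity, seed=None):
        self.capacity = capacity
        self.items = []
        self.seen = 0                  # total examples offered so far
        self.rng = random.Random(seed)

    def add(self, example):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(example)
        else:
            # Keep the new example with probability capacity / seen,
            # overwriting a uniformly chosen slot.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = example

    def sample(self, k):
        """Draw up to k stored examples (without replacement) for rehearsal."""
        return self.rng.sample(self.items, min(k, len(self.items)))


buffer = ReservoirReplayBuffer(capacity=100, seed=0)
for task_id in range(5):            # five hypothetical tasks arriving in sequence
    for i in range(1000):           # 1,000 examples per task
        buffer.add((task_id, i))
replay_batch = buffer.sample(8)     # would be mixed into the current task's batch
print(len(buffer.items), buffer.seen, len(replay_batch))
```

Memory use stays fixed at `capacity` items however many tasks arrive, which is the kind of bounded-storage behavior that efficient memory management is meant to support; the replayed samples would be mixed into each new training batch.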

Future Trends: Integrating Continual and Lifelong Learning

Future trends in artificial intelligence emphasize the integration of continual learning and lifelong learning to create adaptive systems capable of acquiring, retaining, and transferring knowledge over extended periods. This convergence enables AI models to overcome catastrophic forgetting by efficiently updating representations with new data while maintaining previously learned information. Advances in neural architecture, memory consolidation, and meta-learning algorithms will drive more robust, scalable solutions tailored for dynamic, real-world environments.

Continual Learning vs Lifelong Learning Infographic

techiny.com
