One-shot learning enables AI models to recognize new classes from a single example by leveraging prior knowledge, making it ideal for scenarios with minimal data. Few-shot learning extends this capability by using a small number of examples, improving accuracy and generalization across diverse tasks. Both approaches address data scarcity challenges, enhancing AI adaptability in real-world applications such as image recognition and natural language processing.

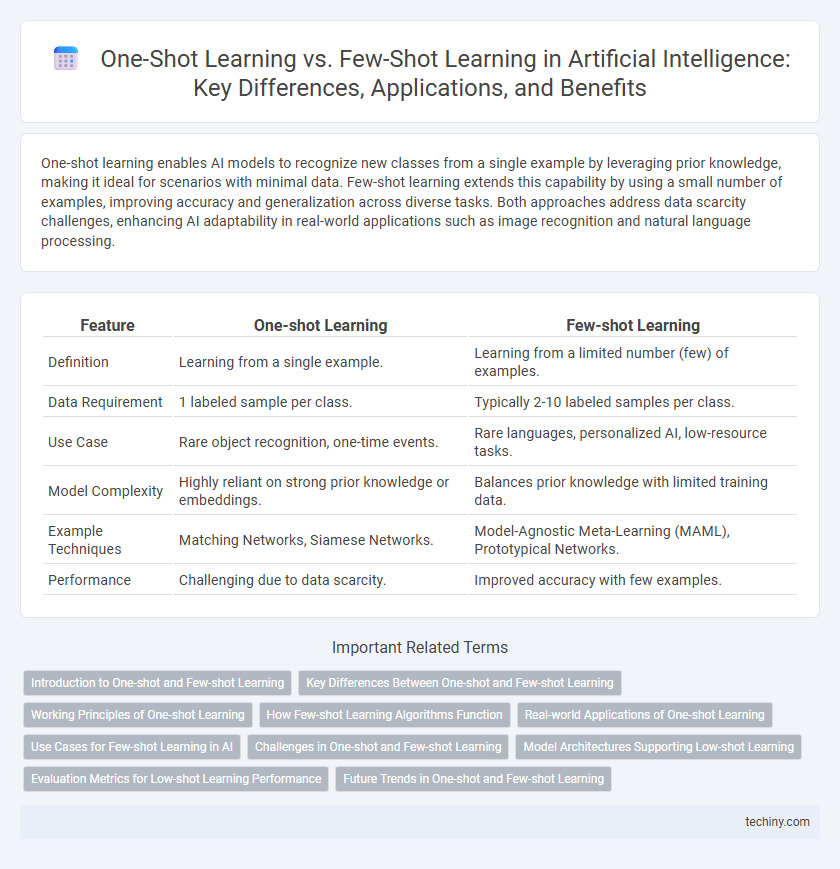

Table of Comparison

| Feature | One-shot Learning | Few-shot Learning |

|---|---|---|

| Definition | Learning from a single example. | Learning from a limited number (few) of examples. |

| Data Requirement | 1 labeled sample per class. | Typically 2-10 labeled samples per class. |

| Use Case | Rare object recognition, one-time events. | Rare languages, personalized AI, low-resource tasks. |

| Model Complexity | Highly reliant on strong prior knowledge or embeddings. | Balances prior knowledge with limited training data. |

| Example Techniques | Matching Networks, Siamese Networks. | Model-Agnostic Meta-Learning (MAML), Prototypical Networks. |

| Performance | Challenging due to data scarcity. | Improved accuracy with few examples. |

Introduction to One-shot and Few-shot Learning

One-shot learning enables models to recognize new classes from a single example, significantly reducing the need for extensive labeled data compared to traditional machine learning. Few-shot learning extends this concept by allowing models to learn from a small number of examples, typically ranging from two to ten, enhancing adaptability in low-data scenarios. Both techniques leverage meta-learning and transfer learning to improve performance on novel tasks with limited training samples.

Key Differences Between One-shot and Few-shot Learning

One-shot learning refers to the ability of artificial intelligence models to recognize or learn new concepts from a single example, whereas few-shot learning requires a limited number of examples, typically ranging from two to ten. Key differences include data efficiency, with one-shot learning demanding minimal data and heavily relying on prior knowledge or transfer learning, while few-shot learning benefits from slightly larger sample sizes to improve generalization. The application of advanced techniques like metric learning and meta-learning varies between the two, optimizing performance based on the scarcity of available training examples.

Working Principles of One-shot Learning

One-shot learning operates by leveraging prior knowledge through advanced feature extraction and metric-based approaches to recognize new classes from a single example. It utilizes a learned similarity function or embedding space, such as those generated by Siamese networks or prototypical networks, to compare the new instance with known categories effectively. This method contrasts with traditional learning models by emphasizing generalization from minimal data, making it particularly valuable in scenarios with scarce labeled samples.

How Few-shot Learning Algorithms Function

Few-shot learning algorithms function by leveraging prior knowledge encoded in pretrained models to generalize from a limited number of examples, often through meta-learning or transfer learning techniques. These algorithms optimize representation learning and employ task-specific adaptation to improve performance on new tasks with minimal labeled data. Techniques such as gradient-based optimization, memory-augmented neural networks, and attention mechanisms enable efficient knowledge transfer and rapid learning in few-shot scenarios.

Real-world Applications of One-shot Learning

One-shot learning enables AI models to recognize and classify new objects with a single example, significantly improving efficiency in real-world scenarios such as facial recognition, medical imaging, and personalized recommendation systems. This approach reduces the dependency on large datasets, making it ideal for applications with limited data availability or where rapid adaptation is crucial. Industries like healthcare, security, and autonomous vehicles benefit from one-shot learning's ability to generalize from minimal data, enhancing decision-making and user experience.

Use Cases for Few-shot Learning in AI

Few-shot learning excels in scenarios where labeled data is scarce, such as medical imaging diagnosis, allowing AI models to generalize from limited examples. It is widely applied in natural language processing tasks like sentiment analysis and language translation, where acquiring extensive annotated datasets is challenging. Robotics and autonomous systems benefit from few-shot learning by enabling rapid adaptation to new environments or tasks with minimal training data.

Challenges in One-shot and Few-shot Learning

One-shot learning faces significant challenges due to its reliance on extremely limited data, making it difficult for models to generalize from a single example and increasing susceptibility to overfitting. Few-shot learning, while allowing slightly more data, still struggles with issues like class imbalance and the need for effective knowledge transfer to accurately learn from a handful of instances. Both paradigms require advanced techniques such as meta-learning and data augmentation to overcome the scarcity of labeled data and enhance model robustness.

Model Architectures Supporting Low-shot Learning

Few-shot learning models often utilize meta-learning architectures such as Prototypical Networks and Model-Agnostic Meta-Learning (MAML) to generalize from limited examples by learning effective initialization parameters or embedding spaces. One-shot learning typically relies on Siamese Networks or Matching Networks, which compare input samples against a support set to classify new instances with minimal data. Both approaches emphasize leveraging prior knowledge through specialized neural network designs to enhance performance on low-shot tasks in artificial intelligence applications.

Evaluation Metrics for Low-shot Learning Performance

Evaluation metrics for low-shot learning performance prioritize accuracy, precision, recall, and F1-score to measure the effectiveness of one-shot and few-shot learning models. Confusion matrices and area under the receiver operating characteristic curve (AUC-ROC) help assess model robustness in scenarios with scarce labeled examples. Embedding quality and metric learning losses, such as triplet loss, are also used to evaluate how well models generalize from limited data.

Future Trends in One-shot and Few-shot Learning

Future trends in One-shot and Few-shot Learning emphasize the integration of meta-learning and transformer architectures to enhance model generalization with minimal data. Advances in unsupervised and self-supervised learning techniques are expected to reduce reliance on labeled datasets, improving adaptability across diverse AI applications. Continued research targets scalable solutions for real-time reasoning and personalization in low-data environments, driving innovation in robotics, healthcare, and natural language processing.

One-shot Learning vs Few-shot Learning Infographic

techiny.com

techiny.com