Centralized training consolidates data into a single location for model development, enabling comprehensive analysis but raising privacy and security concerns. Federated learning trains models across decentralized devices, preserving data privacy by keeping information local while aggregating updates to enhance performance. Balancing these approaches requires considering factors like data sensitivity, communication costs, and computational resources to optimize AI model efficiency and security.

Table of Comparison

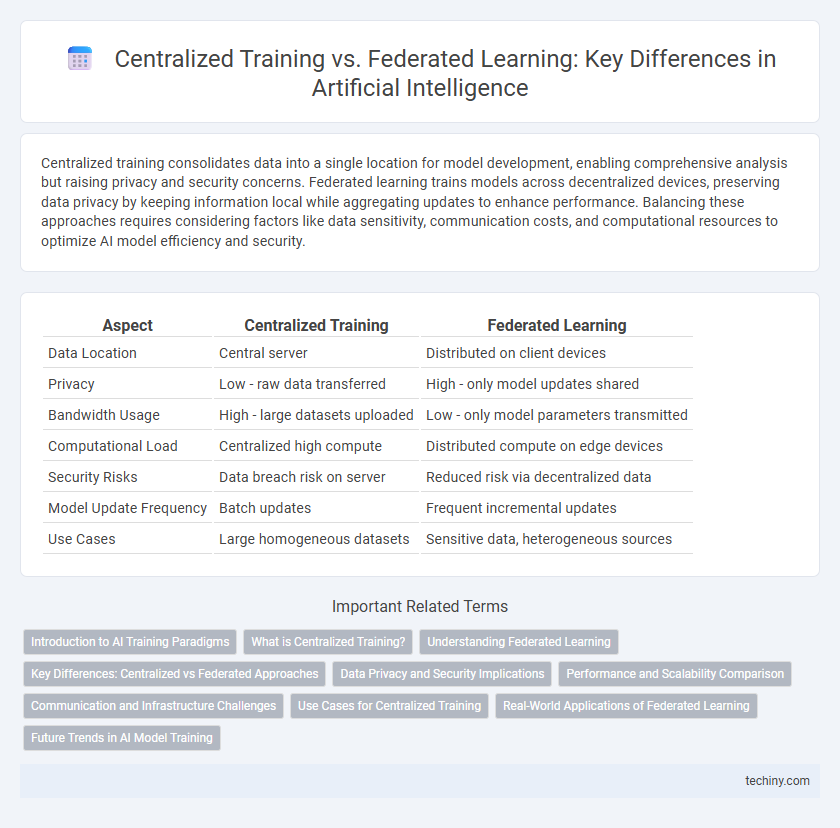

| Aspect | Centralized Training | Federated Learning |

|---|---|---|

| Data Location | Central server | Distributed on client devices |

| Privacy | Low - raw data transferred | High - only model updates shared |

| Bandwidth Usage | High - large datasets uploaded | Low - only model parameters transmitted |

| Computational Load | Centralized high compute | Distributed compute on edge devices |

| Security Risks | Data breach risk on server | Reduced risk via decentralized data |

| Model Update Frequency | Batch updates | Frequent incremental updates |

| Use Cases | Large homogeneous datasets | Sensitive data, heterogeneous sources |

Introduction to AI Training Paradigms

Centralized training in AI consolidates all data on a single server, enabling efficient model updates with extensive computational resources but raising privacy and data security concerns. Federated learning decentralizes the training process by allowing multiple devices or edge nodes to collaboratively train a shared model while keeping data localized, preserving user privacy and reducing communication overhead. This paradigm shift addresses data sovereignty issues and is increasingly adopted in applications such as healthcare, finance, and mobile device personalization.

What is Centralized Training?

Centralized training in artificial intelligence refers to a method where data from various sources is collected and stored on a single server or centralized location for model training. This approach allows for efficient use of computational resources and easier model updates due to the consolidated data. However, centralized training poses challenges related to data privacy, security concerns, and potential bottlenecks in data transfer.

Understanding Federated Learning

Federated learning enables multiple decentralized devices to collaboratively train a shared machine learning model while keeping data localized, enhancing privacy and reducing communication overhead. Unlike centralized training, where data is aggregated in a central server, federated learning performs model updates locally and only shares these updates, preserving sensitive information. This approach is particularly effective in scenarios involving diverse data sources and strict data privacy regulations, such as healthcare and finance.

Key Differences: Centralized vs Federated Approaches

Centralized training involves aggregating all data into a single, central server to build and fine-tune AI models, offering streamlined data management but raising privacy concerns. Federated learning trains AI models across multiple decentralized devices or servers, allowing data to remain local, which enhances user privacy and reduces data transfer costs. The key difference lies in data locality and privacy: centralized approaches collect data centrally for processing, while federated learning leverages distributed data sources without centralizing sensitive information.

Data Privacy and Security Implications

Centralized training involves aggregating data from multiple sources into a single repository, increasing risks of data breaches and unauthorized access. Federated learning enhances data privacy by enabling model training directly on decentralized devices, minimizing the need to share sensitive data. This paradigm reduces exposure of personal information and mitigates security vulnerabilities inherent in centralized data storage.

Performance and Scalability Comparison

Centralized training offers high computational power and efficient model updates due to aggregated data processing on a single server, resulting in faster convergence and generally higher accuracy. Federated learning improves scalability by distributing model training across multiple edge devices, reducing latency and bandwidth usage, but may face challenges with heterogeneous data and slower convergence rates. Performance in federated learning depends on network reliability and device capabilities, while centralized systems struggle with privacy concerns and single points of failure.

Communication and Infrastructure Challenges

Centralized training requires extensive data transfer to a central server, causing significant communication bottlenecks and high bandwidth consumption. Federated learning reduces data movement by processing updates locally but introduces infrastructure challenges related to coordinating heterogeneous devices with varying computing power and network reliability. Managing secure, efficient communication protocols and scalable infrastructure is critical for both approaches to ensure model convergence and data privacy.

Use Cases for Centralized Training

Centralized training is highly effective for applications requiring large-scale data aggregation, such as natural language processing models trained on vast text corpora and computer vision tasks utilizing extensive labeled image datasets. Industries like finance and healthcare benefit from centralized training by consolidating sensitive data in secure environments to develop predictive models with high accuracy and consistency. This approach is ideal when data privacy regulations are manageable and network bandwidth allows efficient data transfer to a central server.

Real-World Applications of Federated Learning

Federated learning enables edge devices such as smartphones, IoT sensors, and autonomous vehicles to collaboratively train AI models without sharing raw data, preserving privacy and reducing communication overhead. Healthcare applications leverage federated learning to aggregate patient data from multiple hospitals, enhancing diagnostic accuracy while complying with data protection regulations like HIPAA and GDPR. Financial institutions utilize this approach to detect fraud patterns across decentralized datasets, improving model robustness and security without exposing sensitive customer information.

Future Trends in AI Model Training

Emerging trends in AI model training emphasize a shift from centralized training toward federated learning to enhance data privacy and reduce latency. Federated learning enables decentralized data processing across edge devices while maintaining model accuracy, addressing regulatory demands such as GDPR and HIPAA. Advances in secure multi-party computation and differential privacy are accelerating the adoption of federated systems for scalable, real-time AI applications.

Centralized Training vs Federated Learning Infographic

techiny.com

techiny.com