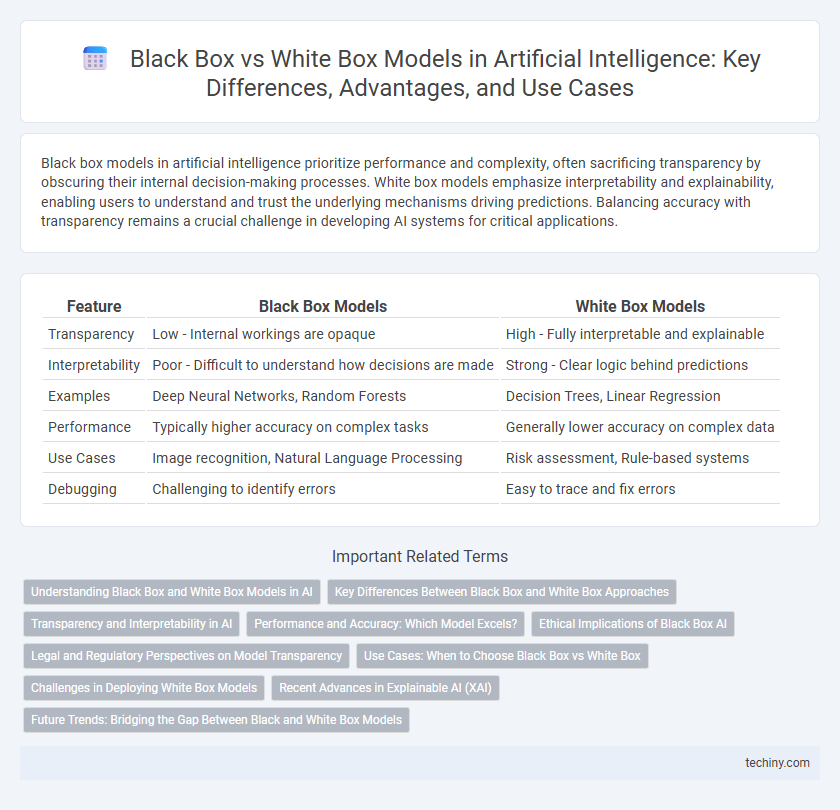

Black box models in artificial intelligence prioritize predictive performance, and their complexity often obscures the internal decision-making process at the cost of transparency. White box models emphasize interpretability and explainability, enabling users to understand and trust the mechanisms driving predictions. Balancing accuracy with transparency remains a crucial challenge in developing AI systems for critical applications.

Table of Comparison

| Feature | Black Box Models | White Box Models |
|---|---|---|
| Transparency | Low - Internal workings are opaque | High - Fully interpretable and explainable |
| Interpretability | Poor - Difficult to understand how decisions are made | Strong - Clear logic behind predictions |
| Examples | Deep Neural Networks, Random Forests | Decision Trees, Linear Regression |
| Performance | Typically higher accuracy on complex tasks | Generally lower accuracy on complex data |
| Use Cases | Image recognition, Natural Language Processing | Risk assessment, Rule-based systems |
| Debugging | Challenging to identify errors | Easy to trace and fix errors |

Understanding Black Box and White Box Models in AI

Black box models in AI, such as deep neural networks, operate with complex internal mechanisms that are not easily interpretable, making it challenging to understand how inputs are transformed into outputs. White box models, including decision trees and linear regression, offer transparent and interpretable processes that allow users to trace the decision-making steps clearly. Understanding the trade-off between the predictive power of black box models and the interpretability of white box models is crucial for applications requiring explainability and trust.
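To make "tracing the decision-making steps" concrete, here is a minimal sketch of why a linear model counts as white box: its prediction is just a bias plus one additive term per feature, so each feature's contribution can be read off directly. The `LinearModel` class, the feature names, and the coefficients are all hypothetical and chosen for illustration; they are not fitted to real data.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class LinearModel:
    """Hand-specified linear model: prediction = bias + sum(weight * value)."""
    bias: float
    weights: Dict[str, float]

    def explain(self, features: Dict[str, float]) -> Dict[str, float]:
        # Each feature's additive contribution to the final score.
        return {name: w * features[name] for name, w in self.weights.items()}

    def predict(self, features: Dict[str, float]) -> float:
        return self.bias + sum(self.explain(features).values())


# Hypothetical coefficients for a toy risk score (assumption, not real data).
model = LinearModel(
    bias=0.5,
    weights={"income": -0.25, "debt_ratio": 2.0, "late_payments": 0.5},
)
applicant = {"income": 4.0, "debt_ratio": 0.5, "late_payments": 2.0}

contributions = model.explain(applicant)
score = model.predict(applicant)

for name, value in contributions.items():
    print(f"{name:>14}: {value:+.2f}")
print(f"{'bias':>14}: {model.bias:+.2f}")
print(f"{'score':>14}: {score:+.2f}")
```

The bias and the per-feature contributions sum to the score, so the explanation is the model itself. A deep neural network offers no comparable decomposition without extra tooling.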

Key Differences Between Black Box and White Box Approaches

Black box models in artificial intelligence are complex enough that their internal mechanisms cannot be read directly, which makes them hard to interpret, whereas white box models are designed for transparency, allowing users to understand and validate the decision-making process. Black box approaches excel at handling large datasets and complex patterns using techniques like deep learning, while white box methods prioritize interpretability through simpler algorithms such as decision trees or linear regression. The key difference lies in explainability: white box models expose their logic and feature importance directly, whereas black box models require supplementary tools like SHAP or LIME to interpret outcomes.

Transparency and Interpretability in AI

Black box models in artificial intelligence offer high predictive accuracy but lack transparency, making it challenging to interpret their decision-making processes. White box models prioritize interpretability and transparency by providing clear, understandable rules or structures, enabling users to trace and explain outcomes. This transparency fosters trust and accountability, which is crucial for applications in healthcare, finance, and regulatory environments.

Performance and Accuracy: Which Model Excels?

Black box models, such as deep neural networks, often achieve superior performance and accuracy by capturing complex patterns in large datasets, making them well suited to tasks like image recognition and natural language processing. White box models, including decision trees and linear regression, provide transparent decision-making processes but may sacrifice some accuracy due to their simpler structures. In practice, black box models tend to lead on predictive accuracy for such complex tasks, while white box models offer interpretability, which is the core trade-off between performance and explainability in AI.

Ethical Implications of Black Box AI

Black box AI models present significant ethical challenges due to their lack of transparency and explainability, making it difficult to audit decisions or detect biases embedded within complex algorithms. This opacity raises concerns about accountability, especially in high-stakes domains like healthcare, finance, and criminal justice, where unjust outcomes can significantly impact lives. Ensuring ethical AI deployment requires prioritizing interpretable models or developing methods to explain black box decisions to uphold fairness and trustworthiness.
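Even when a model's internals are opaque, its decisions can still be audited from the outside. The sketch below computes per-group approval rates and the gap between them, a measure commonly called demographic parity difference. This metric is one illustrative choice and is not named in the text above; the decision log and group labels are invented for the example.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Fraction of positive decisions (1 = approved) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Largest difference in approval rate between any two groups."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit log of a black box model's outputs (assumption).
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)

print(rates)
print(f"demographic parity gap: {gap:.2f}")
```

A large gap does not prove the model is unfair, but it flags where a closer look is needed. Explaining why the gap exists usually requires either an interpretable model or a post-hoc explanation method.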

Legal and Regulatory Perspectives on Model Transparency

Black box models in artificial intelligence present significant challenges for legal compliance due to their lack of interpretability, making it difficult to ensure accountability and transparency in automated decision-making. Regulatory frameworks such as the EU's GDPR are often read as implying a right to explanation for automated decisions (see Article 22 and Recital 71), a requirement that white box models are well placed to meet because they provide clear, auditable decision pathways. Ensuring model transparency aligns with emerging standards in AI ethics and legal mandates, facilitating trust and adherence to data protection laws.

Use Cases: When to Choose Black Box vs White Box

Black box models excel in complex pattern recognition tasks like image and speech recognition, where interpretability is less critical, and their accuracy has also made them common in fields such as healthcare diagnostics and fraud detection. White box models provide transparency and explainability, making them a strong fit for regulated environments like finance and legal systems, where decision accountability is essential. Choosing between black box and white box models depends on the need for interpretability, accuracy, and compliance with domain-specific regulations.

Challenges in Deploying White Box Models

White box models offer transparency and interpretability by providing clear insights into their decision-making processes, but deploying them brings challenges, including difficulty modeling high-dimensional data and limited capacity to capture non-linear relationships. These models often require extensive domain expertise and considerable feature engineering effort to build and maintain, which can hinder scalability in real-world applications. Furthermore, balancing model accuracy with interpretability remains a significant obstacle when applying white box models in dynamic, data-intensive artificial intelligence systems.

Recent Advances in Explainable AI (XAI)

Recent advances in Explainable AI (XAI) have significantly improved the transparency of black box models such as deep neural networks by introducing techniques like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations). White box models, including decision trees and linear regression, inherently provide interpretability but often lack the predictive power of black box models, prompting hybrid approaches that combine accuracy with explainability. Emerging research focuses on developing inherently interpretable models and post-hoc explanation methods to balance model performance with user trust and regulatory compliance in critical AI applications.
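The idea behind SHAP can be illustrated without any library. The sketch below computes exact Shapley values for a tiny stand-in model by averaging each feature's marginal contribution over every ordering of the features. It is a brute-force illustration, not the `shap` package's API: real tools approximate this, because the number of orderings grows factorially. The `black_box` function, the instance, and the all-zero baseline are assumptions made for the example.

```python
from itertools import permutations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values via all feature orderings.

    Features not yet 'switched on' keep their baseline value.
    """
    names = list(instance)
    totals = {name: 0.0 for name in names}
    for order in permutations(names):
        current = dict(baseline)
        previous = predict(current)
        for name in order:
            current[name] = instance[name]
            updated = predict(current)
            totals[name] += updated - previous  # marginal contribution
            previous = updated
    n_orders = factorial(len(names))
    return {name: total / n_orders for name, total in totals.items()}


def black_box(x):
    # Stand-in for an opaque model; note the b*c interaction term.
    return 2.0 * x["a"] + x["b"] * x["c"]


instance = {"a": 1.0, "b": 2.0, "c": 3.0}
baseline = {"a": 0.0, "b": 0.0, "c": 0.0}

phi = shapley_values(black_box, instance, baseline)
print(phi)
```

The attributions sum to the difference between the prediction for the instance and the prediction for the baseline, and the interaction between `b` and `c` is split evenly between the two features.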

Future Trends: Bridging the Gap Between Black and White Box Models

Emerging trends in artificial intelligence emphasize the integration of black box and white box models to enhance interpretability without compromising performance. Explainable AI (XAI) techniques and hybrid modeling frameworks promote transparency while maintaining the predictive accuracy of complex neural networks. Future research focuses on developing algorithms that provide real-time interpretability and trustworthiness, addressing regulatory and ethical requirements in AI deployment.
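One way to pair the two model families, offered here as an illustration rather than as a method the text prescribes, is a global surrogate: fit a simple, readable rule to the black box's own predictions and report how often the two agree (the fidelity). The sketch below fits a single-threshold rule to a stand-in `black_box` function. Both the function and the input grid are hypothetical.

```python
def black_box(x: float) -> int:
    """Stand-in for an opaque classifier (assumption for illustration)."""
    return 1 if (x > 5 or x == 2) else 0


def fit_stump(xs, labels):
    """Find the rule 'predict 1 if x > threshold' that best matches labels.

    Returns (threshold, fidelity), where fidelity is the agreement rate.
    """
    points = sorted(set(xs))
    # Candidate thresholds: midpoints between consecutive distinct inputs.
    candidates = [(a + b) / 2 for a, b in zip(points, points[1:])]
    best_threshold, best_fidelity = None, -1.0
    for threshold in candidates:
        agree = sum((x > threshold) == bool(y) for x, y in zip(xs, labels))
        fidelity = agree / len(xs)
        if fidelity > best_fidelity:
            best_threshold, best_fidelity = threshold, fidelity
    return best_threshold, best_fidelity


xs = [float(i) for i in range(10)]
labels = [black_box(x) for x in xs]  # query the black box, not ground truth

threshold, fidelity = fit_stump(xs, labels)
print(f"surrogate rule: predict 1 if x > {threshold}")
print(f"fidelity to black box: {fidelity:.0%}")
```

The surrogate is easy to read but imperfect: it misses the black box's special case at `x == 2`. Reporting fidelity alongside the rule makes that limitation explicit.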

Black Box Models vs White Box Models Infographic

techiny.com