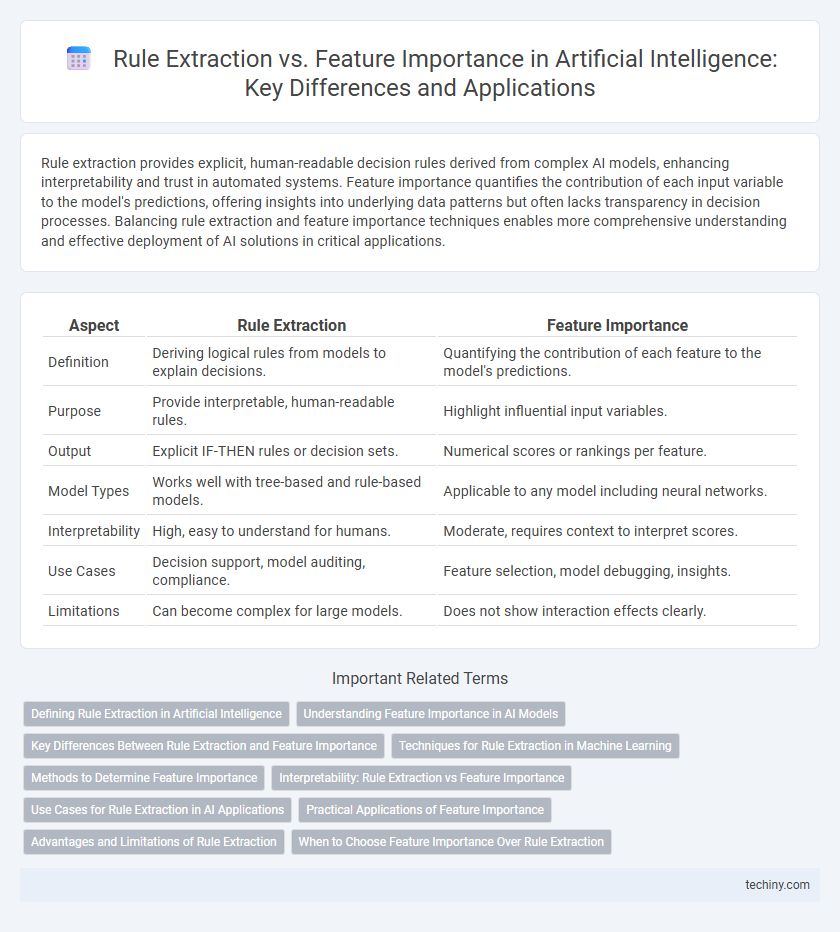

Rule extraction derives explicit, human-readable decision rules from complex AI models, which improves interpretability and trust in automated systems. Feature importance quantifies the contribution of each input variable to the model's predictions; it offers insight into underlying data patterns but does not reveal the decision logic itself. Using the two techniques together gives a more complete understanding of a model and supports its deployment in critical applications.

Table of Comparison

| Aspect | Rule Extraction | Feature Importance |
|---|---|---|
| Definition | Deriving logical rules from models to explain decisions. | Quantifying the contribution of each feature to the model's predictions. |
| Purpose | Provide interpretable, human-readable rules. | Highlight influential input variables. |
| Output | Explicit IF-THEN rules or decision sets. | Numerical scores or rankings per feature. |
| Model Types | Works well with tree-based and rule-based models. | Applicable to any model including neural networks. |
| Interpretability | High, easy to understand for humans. | Moderate, requires context to interpret scores. |
| Use Cases | Decision support, model auditing, compliance. | Feature selection, model debugging, insights. |
| Limitations | Can become complex for large models. | Does not show interaction effects clearly. |

Defining Rule Extraction in Artificial Intelligence

Rule extraction in artificial intelligence involves transforming complex machine learning models into human-readable rules that explain decision-making processes. This technique improves model interpretability by converting black-box models into transparent rule sets, enabling better understanding and trust. Unlike feature importance, which quantifies the influence of individual features, rule extraction provides explicit logical conditions that outline how input variables lead to specific outcomes.
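As a minimal sketch of this idea, the snippet below probes a black box on a grid of inputs and searches for the single IF-THEN rule that best reproduces its answers. The `black_box` function, the feature names, and the grid are all hypothetical and chosen only for illustration; real extraction methods search far richer rule spaces than one threshold.

```python
from itertools import product

def black_box(income, debt):
    """Hypothetical opaque model: approve (1) when a weighted score is high enough."""
    return 1 if 3 * income - 4 * debt > 50 else 0

# Probe the black box on a grid of inputs and record its answers.
samples = list(product(range(0, 101, 10), range(0, 51, 10)))
labels = [black_box(income, debt) for income, debt in samples]
feature_names = ["income", "debt"]

def best_rule(samples, labels):
    """Try every single-feature threshold rule and keep the one with the highest fidelity."""
    best = None
    for f in range(len(samples[0])):
        for t in sorted({s[f] for s in samples}):
            for above in (0, 1):  # class predicted when the feature exceeds t
                preds = [above if s[f] > t else 1 - above for s in samples]
                fidelity = sum(p == y for p, y in zip(preds, labels)) / len(labels)
                if best is None or fidelity > best[0]:
                    best = (fidelity, f, t, above)
    return best

fidelity, f, t, above = best_rule(samples, labels)
rule = f"IF {feature_names[f]} > {t} THEN class {above} ELSE class {1 - above}"
print(rule, f"(fidelity {fidelity:.2f})")
```

Fidelity here means the share of probed inputs on which the extracted rule agrees with the black box. A fidelity below 1.0 signals that the rule is only an approximation of the model's behavior.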

Understanding Feature Importance in AI Models

Feature importance quantifies the contribution of each input variable to a model's predictions, giving interpretable insight into the decision-making process. Techniques such as SHAP (SHapley Additive exPlanations) and permutation importance can be applied across many model types and highlight the features that most influence the output. Evaluating feature importance enhances transparency and guides model refinement by identifying the key drivers behind AI predictions.
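SHAP builds on Shapley values from cooperative game theory. The sketch below computes exact Shapley values by brute force for a tiny hypothetical model, to show what the resulting numbers mean. It is not the SHAP library's algorithm. It also assumes that an "absent" feature is replaced by a baseline value, which is one common convention among several.

```python
from itertools import permutations

def model(x):
    """Hypothetical model with one additive term and one interaction term."""
    return 2 * x[0] + x[1] * x[2]

def shapley_values(model, instance, baseline):
    """Average each feature's marginal contribution over all feature orderings."""
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)      # start with every feature "absent"
        previous = model(current)
        for i in order:
            current[i] = instance[i]  # switch feature i to its actual value
            value = model(current)
            totals[i] += value - previous
            previous = value
    return [t / len(orderings) for t in totals]

instance, baseline = [1, 2, 3], [0, 0, 0]
phi = shapley_values(model, instance, baseline)
print(phi)  # [2.0, 3.0, 3.0]
```

The values sum to `model(instance) - model(baseline)`, and the interaction term `x[1] * x[2]` is split evenly between the two features involved. This is also why per-feature scores alone do not show interaction effects clearly.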

Key Differences Between Rule Extraction and Feature Importance

Rule extraction generates human-readable logical conditions from black-box AI models, enabling transparent decision-making by outlining explicit if-then rules. Feature importance quantifies the contribution of each input variable to the model's predictions, highlighting which features drive the output without providing explicit decision logic. The key difference lies in rule extraction's focus on interpretability through symbolic representations, whereas feature importance emphasizes variable significance through numerical scores.

Techniques for Rule Extraction in Machine Learning

Techniques for rule extraction in machine learning include surrogate decision trees, which are trained to mimic a complex model and then read off as interpretable if-then rules, and association rule mining, which identifies relationships between variables using support and confidence metrics. Other methods pair a predictive model with symbolic rules: NeuroRule extracts rules from trained neural networks, while RuleFit derives candidate rules from tree ensembles and combines them in a sparse linear model. These techniques turn black-box models into transparent decision-making processes, facilitating explainability in artificial intelligence systems.
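The support and confidence metrics mentioned above can be computed directly. The following sketch uses a small, made-up set of loan records (the attribute names are hypothetical) to score the candidate rule "IF low_debt THEN approved".

```python
# Each record is the set of attributes that hold for one (made-up) loan application.
records = [
    {"high_income", "low_debt", "approved"},
    {"high_income", "approved"},
    {"low_debt", "approved"},
    {"low_debt"},
    {"high_income", "low_debt", "approved"},
]

def support(itemset, records):
    """Fraction of records that contain every item in the itemset."""
    return sum(itemset <= record for record in records) / len(records)

def confidence(antecedent, consequent, records):
    """Support of antecedent and consequent together, divided by support of the antecedent."""
    return support(antecedent | consequent, records) / support(antecedent, records)

rule_support = support({"low_debt", "approved"}, records)
rule_confidence = confidence({"low_debt"}, {"approved"}, records)
print(f"IF low_debt THEN approved: support={rule_support:.2f}, confidence={rule_confidence:.2f}")
```

Here three of five records contain both attributes (support 0.6), and three of the four low-debt records are approved (confidence 0.75). Mining algorithms typically keep only rules that clear user-chosen minimum thresholds for both metrics.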

Methods to Determine Feature Importance

Methods to determine feature importance include permutation importance, which measures how much model performance degrades when a feature's values are shuffled, and SHAP (SHapley Additive exPlanations), which assigns each feature an importance value based on Shapley values from cooperative game theory. Another technique is LIME (Local Interpretable Model-agnostic Explanations), which approximates the model around a single prediction with an interpretable linear model to explain feature effects locally. Rule extraction, by contrast, converts complex model decisions into interpretable if-then rules, focusing on transparent decision logic rather than numerical feature importance scores.
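Permutation importance is simple enough to sketch by hand. The example below assumes a hypothetical already-fitted `model` that uses only the first feature, and synthetic data labeled by that same model so that baseline accuracy is 1.0. The helper name `permutation_importance_score` is illustrative and not a library function.

```python
import random

def model(row):
    """Hypothetical fitted model: uses feature 0 only and ignores feature 1."""
    return 1 if row[0] > 0.5 else 0

rng = random.Random(0)
X = [[rng.random(), rng.random()] for _ in range(200)]
y = [model(row) for row in X]  # labels match the model, so baseline accuracy is 1.0

def accuracy(X, y):
    return sum(model(row) == target for row, target in zip(X, y)) / len(y)

def permutation_importance_score(X, y, feature, n_repeats=5):
    """Mean drop in accuracy after shuffling one feature column."""
    baseline = accuracy(X, y)
    drops = []
    for _ in range(n_repeats):
        column = [row[feature] for row in X]
        rng.shuffle(column)  # break the link between this feature and the target
        shuffled = [row[:feature] + [value] + row[feature + 1:]
                    for row, value in zip(X, column)]
        drops.append(baseline - accuracy(shuffled, y))
    return sum(drops) / n_repeats

importances = [permutation_importance_score(X, y, f) for f in range(2)]
print(importances)
```

Shuffling the feature the model ignores leaves accuracy unchanged, so its importance is exactly zero, while shuffling the feature it depends on produces a clear drop. In practice the score is usually computed on held-out data and averaged over several repeats, as done here.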

Interpretability: Rule Extraction vs Feature Importance

Rule extraction offers clear, human-readable decision paths that enhance interpretability by explicitly outlining the logic behind AI predictions. Feature importance provides a quantitative measure of each input variable's influence, but the scores alone do not show how the model combines those variables to reach a decision. Combining rule extraction with feature importance enables more comprehensive interpretability, bridging intuitive reasoning with statistical insights.

Use Cases for Rule Extraction in AI Applications

Rule extraction in AI applications is essential for enhancing transparency and interpretability in decision-making systems, particularly in regulated industries like finance and healthcare where explainability is critical. This technique converts complex model behaviors into human-readable rules, enabling domain experts to validate AI outputs and ensure compliance with legal standards. Use cases include credit scoring, medical diagnosis, and fraud detection, where precise reasoning and auditability improve trust and facilitate actionable insights.

Practical Applications of Feature Importance

Feature importance techniques provide critical insights into which variables most influence AI model predictions, enabling practitioners to enhance model interpretability and trust in sectors like finance and healthcare. Unlike rule extraction, which derives explicit decision rules, feature importance quantifies the contribution of input features, facilitating model debugging and feature selection to improve performance. Practical applications include identifying key risk factors in credit scoring, detecting relevant biomarkers in medical diagnosis, and optimizing customer targeting in marketing campaigns.

Advantages and Limitations of Rule Extraction

Rule extraction offers clear advantages in artificial intelligence by providing interpretable and human-readable models that enhance transparency and trustworthiness in decision-making processes. Its limitations include challenges with scalability and complexity when applied to large datasets or highly non-linear models, which can lead to overly simplified or incomplete rules. Despite these constraints, rule extraction remains valuable for domains requiring explicit explanations and regulatory compliance.
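The risk of oversimplified rules can be illustrated with a toy case. The sketch below assumes a hypothetical black box with XOR-like behavior and compares the fidelity of a one-condition rule against a two-rule set. Both rule sets are written by hand for illustration rather than produced by an extraction algorithm.

```python
from itertools import product

def black_box(x0, x1):
    """Hypothetical non-linear model: fires when exactly one input is high (XOR)."""
    return int((x0 > 0.5) != (x1 > 0.5))

grid = [i / 10 + 0.05 for i in range(10)]  # 0.05, 0.15, ..., 0.95
samples = list(product(grid, grid))
labels = [black_box(x0, x1) for x0, x1 in samples]

def fidelity(rule):
    """Share of samples on which the rule agrees with the black box."""
    return sum(rule(x0, x1) == y for (x0, x1), y in zip(samples, labels)) / len(samples)

def simple_rule(x0, x1):
    """One condition: too simple to capture the interaction between inputs."""
    return int(x0 > 0.5)

def full_rules(x0, x1):
    """Two rules, one for each region where the model fires."""
    return int((x0 > 0.5 and x1 <= 0.5) or (x0 <= 0.5 and x1 > 0.5))

print(fidelity(simple_rule), fidelity(full_rules))  # 0.5 1.0
```

The single-condition rule agrees with the model only half the time, no better than a coin flip, while the faithful rule set needs a condition on both inputs in every rule. As the number of interacting features grows, the rule set needed for high fidelity grows with it, which is the scalability problem described above.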

When to Choose Feature Importance Over Rule Extraction

Feature importance is preferable to rule extraction when working with complex models such as deep neural networks or ensemble methods, where knowing which features matter most can guide model tuning and dataset understanding. It excels in scenarios that require insight into which variables most influence predictions without reducing model behavior to discrete rules. Use feature importance to prioritize features efficiently for dimensionality reduction or feature engineering in high-dimensional datasets.

Rule Extraction vs Feature Importance Infographic

techiny.com
