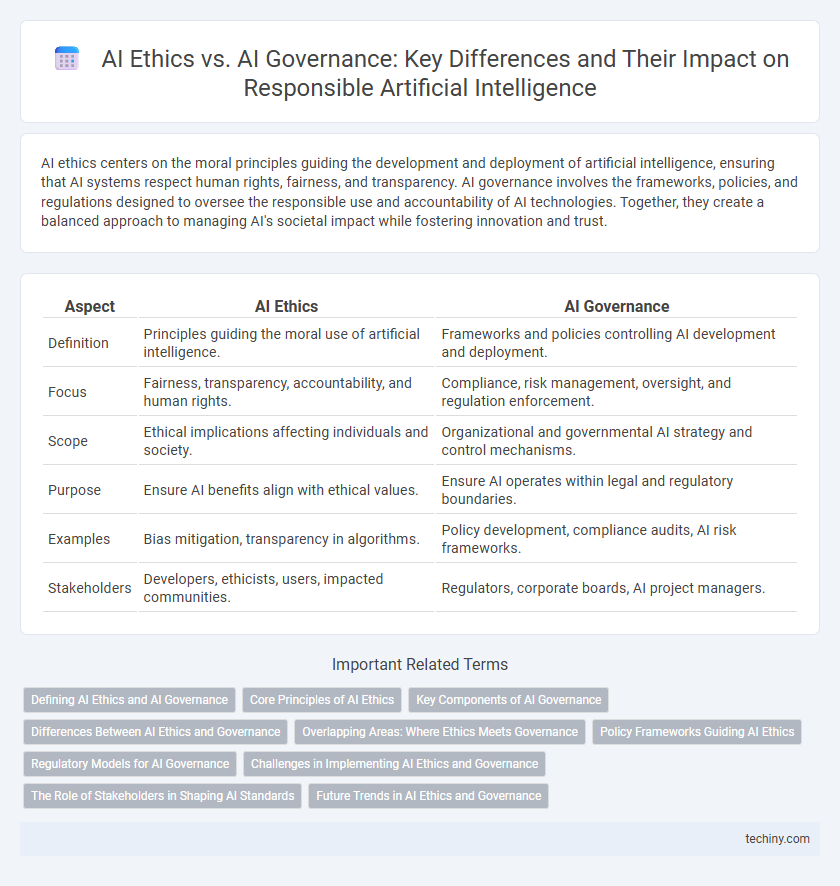

AI ethics centers on the moral principles guiding the development and deployment of artificial intelligence, ensuring that AI systems respect human rights, fairness, and transparency. AI governance involves the frameworks, policies, and regulations designed to oversee the responsible use and accountability of AI technologies. Together, they create a balanced approach to managing AI's societal impact while fostering innovation and trust.

Table of Comparison

| Aspect | AI Ethics | AI Governance |
|---|---|---|
| Definition | Principles guiding the moral use of artificial intelligence. | Frameworks and policies controlling AI development and deployment. |
| Focus | Fairness, transparency, accountability, and human rights. | Compliance, risk management, oversight, and regulation enforcement. |
| Scope | Ethical implications affecting individuals and society. | Organizational and governmental AI strategy and control mechanisms. |
| Purpose | Ensure AI benefits align with ethical values. | Ensure AI operates within legal and regulatory boundaries. |
| Examples | Bias mitigation, transparency in algorithms. | Policy development, compliance audits, AI risk frameworks. |
| Stakeholders | Developers, ethicists, users, impacted communities. | Regulators, corporate boards, AI project managers. |

Defining AI Ethics and AI Governance

AI ethics refers to the moral principles guiding the development and deployment of artificial intelligence, emphasizing fairness, transparency, accountability, and the prevention of harm. AI governance encompasses the frameworks, policies, and regulations designed to ensure compliance with ethical standards, manage risk, and promote responsible AI use across organizations and governments. In short, AI ethics sets normative values, while AI governance operationalizes those values through enforceable rules and oversight mechanisms.

Core Principles of AI Ethics

Core principles of AI ethics emphasize fairness, transparency, accountability, and respect for human rights, with the aim of minimizing bias in AI systems and upholding privacy standards. Ethical AI development prioritizes informed consent, equitable outcomes, and the prevention of harm to individuals and societies. These principles are normative guidelines, which distinguishes them from the enforceable regulatory frameworks of AI governance.

Key Components of AI Governance

Key components of AI governance include transparency, accountability, and regulatory compliance, which ensure AI systems operate fairly and responsibly. Effective AI governance frameworks integrate risk management, stakeholder engagement, and continuous monitoring to mitigate ethical and legal challenges. These elements collectively support the development of AI technologies that align with societal values and legal standards.

Differences Between AI Ethics and Governance

AI ethics centers on principles like fairness, transparency, and accountability to guide moral behavior in AI development and usage. AI governance involves establishing policies, frameworks, and regulatory structures to oversee AI deployment and ensure compliance with legal and ethical standards. The main difference is that ethics provides normative guidelines, while governance focuses on implementation and enforcement mechanisms.

Overlapping Areas: Where Ethics Meets Governance

AI ethics and AI governance intersect in establishing frameworks that ensure responsible AI development while complying with regulatory standards. Both prioritize transparency, accountability, and fairness by integrating ethical principles into governance policies and operational protocols. Overlapping areas include data privacy, algorithmic bias mitigation, and stakeholder engagement to align AI systems with societal values and legal requirements.
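As one illustration of how an ethical principle (fairness) can be turned into a governance check, the sketch below measures the gap in approval rates between groups and compares it with a policy threshold. The function names, the sample data, and the 0.2 threshold are assumptions made for illustration only; an actual policy would choose its own fairness metric and limit.

```python
from collections import defaultdict

# Hypothetical decision log: (group label, whether the request was approved).
SAMPLE_DECISIONS = [
    ("a", True), ("a", True), ("a", False), ("a", False),
    ("b", True), ("b", False), ("b", False), ("b", False),
]


def selection_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Difference between the highest and lowest group approval rates."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


def passes_policy(decisions, max_gap=0.2):
    """Governance-style check: is the gap within the assumed policy limit?"""
    return parity_gap(decisions) <= max_gap
```

Here the metric expresses the ethical goal, and the threshold with its pass/fail result is the part a governance process could audit and enforce.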

Policy Frameworks Guiding AI Ethics

AI ethics centers on principles such as fairness, transparency, accountability, and respect for human rights, providing moral guidelines for artificial intelligence development and deployment. AI governance establishes policy frameworks and regulatory standards to operationalize these ethical principles, ensuring compliance and mitigating risks associated with AI systems. Effective governance frameworks integrate stakeholder input and global best practices to create robust policies that guide ethical AI innovation and use.

Regulatory Models for AI Governance

Regulatory models for AI governance include risk-based, command-and-control, and self-regulatory approaches, each aiming to balance innovation with ethical accountability. Risk-based models categorize AI applications by their potential impact and scale oversight accordingly, so higher-risk uses face stricter requirements. Command-and-control mechanisms enforce compliance through legally binding rules, while self-regulation relies on industry standards and best practices to promote responsible AI development without stifling progress.
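A risk-based model can be pictured as a classification step followed by a lookup of obligations. The sketch below is a minimal illustration under stated assumptions: the tier names, the two trigger attributes, and the oversight lists are hypothetical and are not taken from any specific law or framework.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskTier(IntEnum):
    """Hypothetical tiers; real frameworks define their own categories."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3


@dataclass(frozen=True)
class AIApplication:
    name: str
    affects_legal_rights: bool   # e.g. hiring, credit, or benefits decisions
    interacts_with_public: bool  # e.g. chatbots or recommendation feeds


# Illustrative obligations per tier (assumed for this example).
OVERSIGHT = {
    RiskTier.MINIMAL: ["voluntary code of conduct"],
    RiskTier.LIMITED: ["disclose AI use to users"],
    RiskTier.HIGH: ["pre-deployment audit", "human review", "incident logging"],
}


def classify(app: AIApplication) -> RiskTier:
    """Assign the highest tier whose trigger condition the application meets."""
    if app.affects_legal_rights:
        return RiskTier.HIGH
    if app.interacts_with_public:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


def required_oversight(app: AIApplication) -> list:
    """Look up the oversight obligations attached to the application's tier."""
    return OVERSIGHT[classify(app)]
```

For example, `required_oversight(AIApplication("resume screener", True, False))` would return the high-tier obligations in this sketch, while an internal spam filter would fall into the minimal tier.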

Challenges in Implementing AI Ethics and Governance

Implementing AI ethics and governance faces significant challenges, such as balancing innovation with regulation, addressing algorithmic bias, and ensuring transparency in AI decision-making processes. Diverse stakeholder perspectives and rapidly evolving AI technologies complicate the establishment of standardized ethical frameworks and governance policies. The lack of global consensus and enforcement mechanisms further hinders effective oversight and accountability in AI deployment.

The Role of Stakeholders in Shaping AI Standards

Stakeholders, including policymakers, industry leaders, and civil society, play a crucial role in shaping AI ethics and governance by defining standards that promote transparency, accountability, and fairness. Ethical frameworks guide responsible AI development, while governance structures enforce compliance through regulations and oversight mechanisms. Collaboration among diverse stakeholders fosters consensus on best practices that balance innovation with societal values.

Future Trends in AI Ethics and Governance

Future trends in AI ethics emphasize the development of transparent, accountable algorithms designed to mitigate bias and ensure fairness across diverse applications. AI governance frameworks are increasingly adopting dynamic regulatory approaches that incorporate continuous stakeholder engagement and real-time monitoring to address emerging risks. Enhanced collaboration between international bodies and tech developers aims to standardize ethical guidelines while balancing innovation with societal well-being.

AI Ethics vs AI Governance Infographic

techiny.com