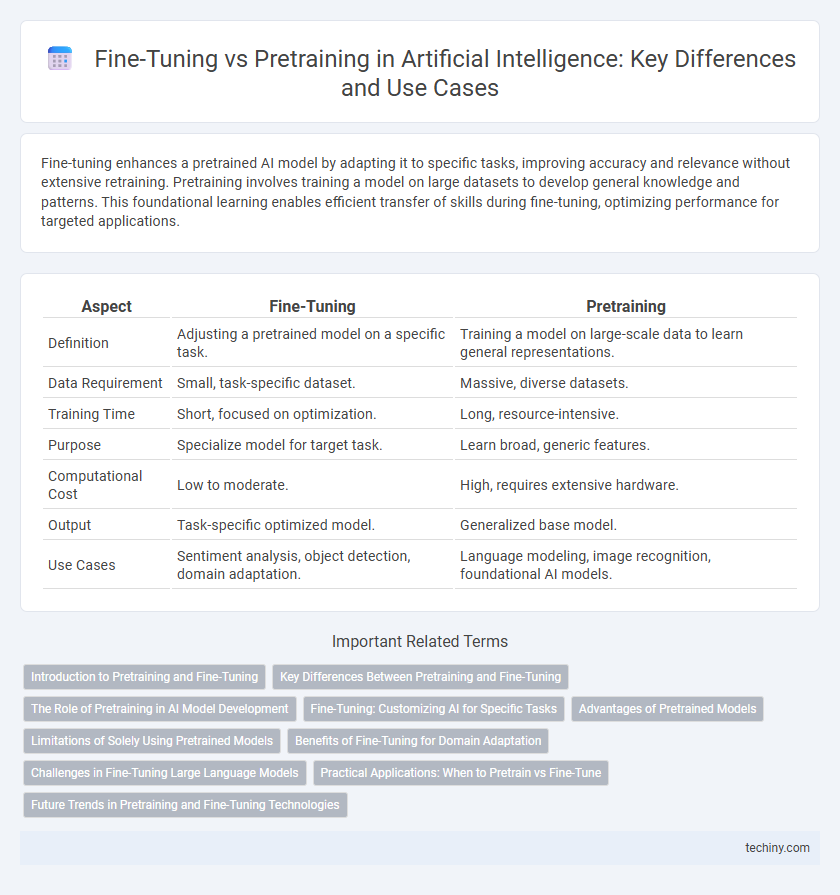

Fine-tuning adapts a pretrained AI model to a specific task, improving accuracy and relevance without retraining the model from scratch. Pretraining is the earlier stage, in which a model is trained on large datasets to learn general knowledge and patterns. This foundational learning is what makes fine-tuning efficient: skills acquired during pretraining transfer to the targeted application.

Comparison Table

| Aspect | Fine-Tuning | Pretraining |
|---|---|---|
| Definition | Adjusting a pretrained model on a specific task. | Training a model on large-scale data to learn general representations. |
| Data Requirement | Small, task-specific dataset. | Massive, diverse datasets. |
| Training Time | Short, focused on optimization. | Long, resource-intensive. |
| Purpose | Specialize model for target task. | Learn broad, generic features. |
| Computational Cost | Low to moderate. | High, requires extensive hardware. |
| Output | Task-specific optimized model. | Generalized base model. |
| Use Cases | Sentiment analysis, object detection, domain adaptation. | Language modeling, image recognition, foundational AI models. |

Introduction to Pretraining and Fine-Tuning

Pretraining in artificial intelligence involves training a model on a large, diverse dataset to learn general features and patterns, creating a robust foundation for various tasks. Fine-tuning refines this pretrained model by training it on a smaller, task-specific dataset to improve performance on specialized applications. This two-step approach enhances model accuracy and efficiency by leveraging broad knowledge before specializing.

Key Differences Between Pretraining and Fine-Tuning

Pretraining trains a model on a large, diverse dataset to learn general patterns and representations, whereas fine-tuning customizes that model on a smaller, task-specific dataset to improve performance on a particular application. Pretraining typically demands substantial computational resources and time, and it relies on self-supervised objectives, such as predicting the next or a masked word, that derive training signals from unlabeled data. Fine-tuning is far cheaper and usually supervised, adapting pre-learned features to specialized contexts with labeled examples. The key differences are therefore the scope of the data, the learning objective, and the resource intensity: pretraining establishes foundational knowledge, and fine-tuning provides targeted expertise.
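
To make the difference in supervision concrete, here is a minimal Python sketch of how training examples might be formed in each phase. It assumes a whitespace-tokenized toy corpus (real systems use subword tokenizers); the function names `next_token_pairs` and `labeled_pairs` and the sample data are hypothetical illustrations, not part of any library.

```python
def next_token_pairs(tokens, context_size):
    """Self-supervised pretraining: targets come from the text itself.

    Each example pairs up to `context_size` preceding tokens with the
    token that follows them, so no human labeling is needed.
    """
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        pairs.append((tuple(context), tokens[i]))
    return pairs


def labeled_pairs(texts, labels):
    """Supervised fine-tuning: every input needs an explicit, human-provided label."""
    if len(texts) != len(labels):
        raise ValueError("each text needs exactly one label")
    return list(zip(texts, labels))


corpus = "the model learns general patterns".split()
print(next_token_pairs(corpus, context_size=2))
# [(('the',), 'model'), (('the', 'model'), 'learns'),
#  (('model', 'learns'), 'general'), (('learns', 'general'), 'patterns')]

# Hypothetical sentiment-analysis examples: each label had to be assigned by a person.
reviews = ["great battery life", "screen cracked in a week"]
print(labeled_pairs(reviews, ["positive", "negative"]))
```

The pretraining pairs are generated mechanically from raw text, which is why pretraining can scale to massive corpora; each fine-tuning pair costs a human judgment, which is why task datasets stay comparatively small.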

The Role of Pretraining in AI Model Development

Pretraining forms the foundation of AI model development by enabling neural networks to learn general patterns from vast datasets before they are fine-tuned on specific tasks. Large-scale models like GPT-4 rely on extensive pretraining on diverse text corpora to capture language structure, semantics, and world knowledge. Models that start from this phase typically adapt faster and reach higher accuracy when later fine-tuned for specialized applications than models trained from scratch.
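
As a worked illustration, assuming an autoregressive text model such as those in the GPT family, pretraining is commonly framed as minimizing the next-token negative log-likelihood of each token sequence $x_1, \dots, x_T$, summed over the corpus. Fine-tuning then starts from the pretrained parameters $\theta_{\text{pre}}$ and minimizes a supervised loss on a task dataset $\mathcal{D}_{\text{task}}$ of input/label pairs $(x, y)$. The notation is illustrative rather than taken from a specific paper.

$$
\mathcal{L}_{\text{pre}}(\theta) = -\sum_{t=1}^{T} \log p_\theta(x_t \mid x_{<t}), \qquad \theta_{\text{pre}} = \arg\min_{\theta} \mathcal{L}_{\text{pre}}(\theta)
$$

$$
\mathcal{L}_{\text{ft}}(\theta) = -\sum_{(x, y) \in \mathcal{D}_{\text{task}}} \log p_\theta(y \mid x), \qquad \text{with optimization initialized at } \theta = \theta_{\text{pre}}
$$

The key link between the two stages is the initialization: fine-tuning uses the same optimization machinery, but it begins from $\theta_{\text{pre}}$ instead of from random weights.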

Fine-Tuning: Customizing AI for Specific Tasks

Fine-tuning enables customization of pretrained AI models by adjusting parameters on task-specific datasets, enhancing performance in specialized applications such as medical diagnosis or sentiment analysis. This approach leverages the general knowledge acquired during pretraining while adapting to nuances of particular domains, resulting in improved accuracy and efficiency. Fine-tuning reduces the need for extensive computational resources and large datasets compared to training models from scratch.
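
The mechanics can be sketched with a toy logistic-regression model in plain Python. The assumption here is that `pretrained_w` and `pretrained_b` stand in for weights learned on a related, larger task; both values and the six-example `task_data` set are hypothetical. Fine-tuning is then ordinary gradient descent that starts from those weights instead of from zeros.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of the positive class for feature vector x."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def log_loss(w, b, data):
    """Mean binary cross-entropy over (features, label) pairs."""
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for x, y in data:
        p = predict(w, b, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def fine_tune(w_init, b_init, data, lr=0.5, epochs=20):
    """Full-batch gradient descent on log_loss, starting from the given weights."""
    w, b = list(w_init), b_init  # copy so the pretrained weights are left untouched
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in data:
            err = predict(w, b, x) - y  # gradient of the loss w.r.t. the logit
            for j, xj in enumerate(x):
                grad_w[j] += err * xj / n
            grad_b += err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Hypothetical "pretrained" weights from a related task, and a tiny task dataset.
pretrained_w, pretrained_b = [0.8, -0.8, 0.0], 0.0
task_data = [
    ([1, 0, 1], 1), ([1, 0, 0], 1), ([1, 1, 1], 1),
    ([0, 1, 1], 0), ([0, 1, 0], 0), ([0, 0, 0], 0),
]

w, b = fine_tune(pretrained_w, pretrained_b, task_data)
print("loss before:", log_loss(pretrained_w, pretrained_b, task_data))
print("loss after: ", log_loss(w, b, task_data))
```

A pretrained starting point helps only when the earlier task is related to the new one; with unrelated weights, the head start can be neutral or even harmful.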

Advantages of Pretrained Models

Pretrained models leverage massive datasets and extensive computational resources, enabling them to capture generalizable features and patterns across diverse tasks. This broad knowledge base reduces the need for large labeled datasets in downstream applications, accelerating model deployment and improving performance in low-resource scenarios. Their versatility also allows seamless adaptation through fine-tuning, enhancing efficiency while maintaining robust accuracy across various AI domains.

Limitations of Solely Using Pretrained Models

Pretrained models often struggle with domain-specific tasks due to their generalist nature, leading to suboptimal performance when applied without fine-tuning. They may fail to capture unique patterns or nuances in specialized datasets, resulting in decreased accuracy and reliability. Relying solely on pretrained models can also cause issues with adaptability, as these models are not tailored to the specific requirements or evolving data distributions of particular applications.

Benefits of Fine-Tuning for Domain Adaptation

Fine-tuning builds on the foundational knowledge of pretrained AI models to improve performance on domain-specific tasks, allowing customized adaptation with far less compute and data than training from scratch. This approach can substantially improve accuracy and relevance in specialized fields such as healthcare, finance, and legal services, where domain-specific nuances are critical. By adjusting pretrained weights rather than learning new ones from random initialization, fine-tuning enables efficient transfer learning, accelerating the deployment of AI applications tailored to specific industry needs.

Challenges in Fine-Tuning Large Language Models

Fine-tuning large language models presents several challenges. Computational costs are high: updating millions or billions of parameters requires significant GPU memory and processing power. Fine-tuning on small datasets risks overfitting, which can degrade generalization and performance on diverse tasks. Finally, balancing task-specific adaptation against preserving pretrained knowledge demands careful hyperparameter tuning and regularization to avoid catastrophic forgetting, in which the model loses capabilities it acquired during pretraining.
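
One regularization option aimed at catastrophic forgetting is L2-SP, which penalizes the squared distance between the current weights and their pretrained starting point; freezing layers, lowering the learning rate, or elastic weight consolidation are common alternatives. The pure-Python sketch below assumes a hypothetical, constant task gradient so the effect is easy to see: without the penalty the weights drift steadily, while with it they settle at a bounded offset of `task_grad / (2 * strength)` from the pretrained values.

```python
def l2_sp_penalty(weights, pretrained, strength):
    """strength * squared Euclidean distance from the pretrained weights."""
    return strength * sum((w - w0) ** 2 for w, w0 in zip(weights, pretrained))


def regularized_step(weights, task_grad, pretrained, lr, strength):
    """One gradient-descent step on (task loss + l2_sp_penalty).

    The penalty contributes 2 * strength * (w - w0) to each weight's gradient,
    pulling the weight back toward its pretrained value w0.
    """
    return [
        w - lr * (g + 2 * strength * (w - w0))
        for w, g, w0 in zip(weights, task_grad, pretrained)
    ]


pretrained = [0.5, -1.2, 2.0]
task_grad = [1.0, 1.0, 1.0]  # hypothetical, held constant for illustration

plain, regularized = list(pretrained), list(pretrained)
for _ in range(100):
    plain = regularized_step(plain, task_grad, pretrained, lr=0.1, strength=0.0)
    regularized = regularized_step(regularized, task_grad, pretrained, lr=0.1, strength=0.5)

# plain ends about 10 below each pretrained value and would keep drifting;
# regularized settles about 1.0 (= task_grad / (2 * strength)) below them.
print([round(p - w0, 3) for p, w0 in zip(plain, pretrained)])
print([round(r - w0, 3) for r, w0 in zip(regularized, pretrained)])
```

The `strength` value is itself a hyperparameter: set it too low and forgetting returns, too high and the model cannot adapt to the new task.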

Practical Applications: When to Pretrain vs Fine-Tune

Pretraining is essential for building broad foundation models, which learn from large, diverse datasets to acquire the general understanding needed in areas like natural language processing or image recognition. Fine-tuning tailors these pretrained models to specific tasks or domains, improving accuracy and efficiency in targeted applications such as medical diagnosis or sentiment analysis. In practice, pretraining a new model is justified when abundant data and compute are available and no suitable base model exists (for example, for an unusual language or data modality), while fine-tuning is the usual choice when task-specific data is limited or when performance must be optimized for specialized, high-stakes tasks.

Future Trends in Pretraining and Fine-Tuning Technologies

Emerging trends in pretraining emphasize large-scale, multimodal models that integrate diverse data types to improve contextual understanding and adaptability. Fine-tuning techniques are advancing through parameter-efficient approaches such as prefix tuning and adapters, which train only a small set of added parameters while keeping the pretrained weights frozen, enabling rapid customization for specialized tasks with minimal compute. Looking further ahead, models may shift toward a hybrid paradigm in which continual learning and recurring pretraining cycles keep them optimized for evolving real-world applications.
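
To show why adapters count as parameter-efficient, here is a pure-Python sketch of a bottleneck adapter in the commonly used form: a down-projection to a small width, a nonlinearity (ReLU here), an up-projection back, and a residual connection. The hidden size 768 and bottleneck width 64 are assumptions chosen for illustration, and zero-initializing the up-projection is one common choice that makes the adapter start as an identity map. Only the adapter's weights would be trained; the pretrained model stays frozen.

```python
import random


def adapter_param_count(d_model, bottleneck):
    """Trainable parameters in one bottleneck adapter (two projections plus biases)."""
    return 2 * d_model * bottleneck + d_model + bottleneck


def dense_param_count(d_in, d_out):
    """Parameters in an ordinary fully connected layer, for comparison."""
    return d_in * d_out + d_out


def matvec(matrix, vec, bias):
    return [sum(m * v for m, v in zip(row, vec)) + b for row, b in zip(matrix, bias)]


def relu(vec):
    return [max(0.0, v) for v in vec]


class BottleneckAdapter:
    """Hypothetical minimal adapter: hidden + up(relu(down(hidden)))."""

    def __init__(self, d_model, bottleneck, seed=0):
        rng = random.Random(seed)
        self.down = [[rng.gauss(0.0, 0.02) for _ in range(d_model)] for _ in range(bottleneck)]
        self.down_b = [0.0] * bottleneck
        # Zero-initialized up-projection: the adapter starts as an identity map,
        # so inserting it does not change the frozen model's outputs.
        self.up = [[0.0] * bottleneck for _ in range(d_model)]
        self.up_b = [0.0] * d_model

    def __call__(self, hidden):
        h = relu(matvec(self.down, hidden, self.down_b))
        delta = matvec(self.up, h, self.up_b)
        return [x + dx for x, dx in zip(hidden, delta)]  # residual connection


print(adapter_param_count(768, 64))  # 99136
print(dense_param_count(768, 768))   # 590592

adapter = BottleneckAdapter(d_model=4, bottleneck=2)
x = [0.1, -0.2, 0.3, 0.4]
print(adapter(x))  # equals x at initialization
```

At these sizes one adapter adds 99,136 trainable parameters, roughly a sixth of a single 768×768 dense layer (590,592 parameters), so each new task needs only small adapters rather than a full copy of the model's weights.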

Fine-Tuning vs Pretraining Infographic

techiny.com
