Vanishing gradient and exploding gradient are critical challenges in training deep neural networks, affecting the stability and convergence of learning algorithms. Vanishing gradient occurs when gradients become too small, slowing down weight updates and preventing the network from learning long-range dependencies effectively. Exploding gradient, on the other hand, happens when gradients grow exponentially, causing unstable updates and potentially leading to numerical overflow or model divergence.

Table of Comparison

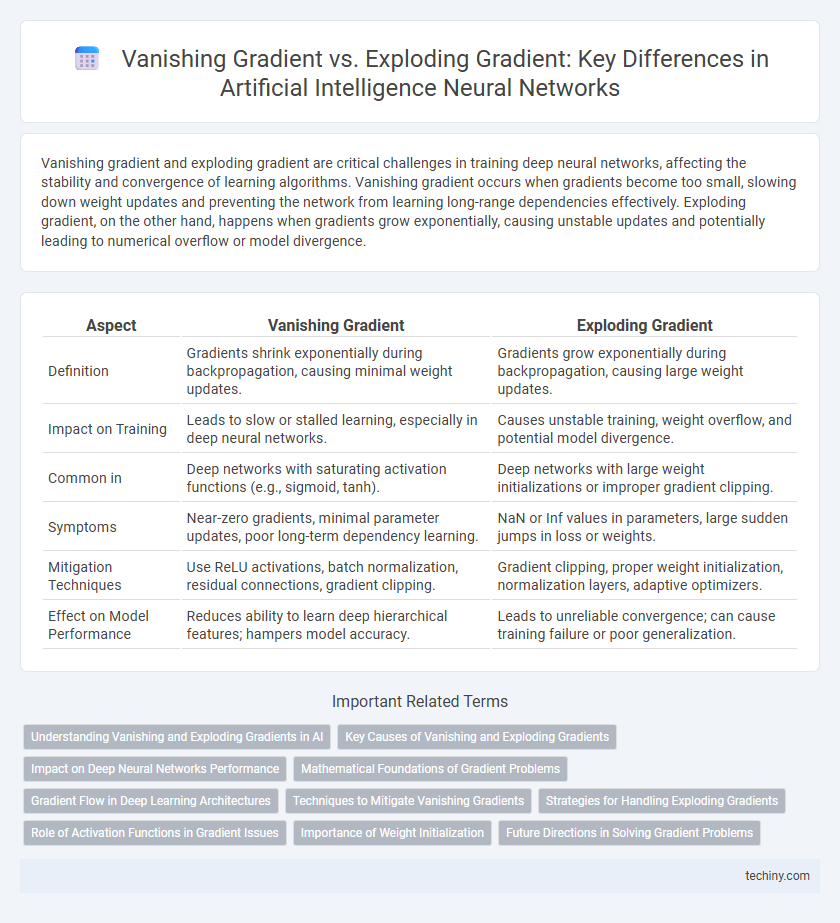

| Aspect | Vanishing Gradient | Exploding Gradient |

|---|---|---|

| Definition | Gradients shrink exponentially during backpropagation, causing minimal weight updates. | Gradients grow exponentially during backpropagation, causing large weight updates. |

| Impact on Training | Leads to slow or stalled learning, especially in deep neural networks. | Causes unstable training, weight overflow, and potential model divergence. |

| Common in | Deep networks with saturating activation functions (e.g., sigmoid, tanh). | Deep networks with large weight initializations or improper gradient clipping. |

| Symptoms | Near-zero gradients, minimal parameter updates, poor long-term dependency learning. | NaN or Inf values in parameters, large sudden jumps in loss or weights. |

| Mitigation Techniques | Use ReLU activations, batch normalization, residual connections, gradient clipping. | Gradient clipping, proper weight initialization, normalization layers, adaptive optimizers. |

| Effect on Model Performance | Reduces ability to learn deep hierarchical features; hampers model accuracy. | Leads to unreliable convergence; can cause training failure or poor generalization. |

Understanding Vanishing and Exploding Gradients in AI

Vanishing gradients occur when gradients shrink exponentially during backpropagation, causing early network layers to learn slowly or not at all, which hinders training deep neural networks. Exploding gradients cause gradients to grow uncontrollably, leading to unstable model weights and divergent training processes. Addressing these issues involves techniques like gradient clipping, careful weight initialization, and using architectures such as LSTM or ResNet to maintain gradient flow in artificial intelligence models.

Key Causes of Vanishing and Exploding Gradients

Vanishing gradients primarily occur due to the repeated multiplication of small derivatives in deep neural networks, particularly with activation functions like sigmoid or tanh, causing gradients to shrink exponentially during backpropagation. Exploding gradients arise from excessively large weight updates, often resulting from poor weight initialization or inappropriate learning rates, leading to unstable model training. Both issues are critical in training deep networks, affecting convergence and stability, and are addressed using techniques like gradient clipping, careful initialization, and alternative activation functions.

Impact on Deep Neural Networks Performance

Vanishing gradients hinder deep neural networks by causing weights to update minimally during backpropagation, leading to slow learning and poor performance in capturing long-term dependencies. Exploding gradients result in excessively large weight updates, destabilizing the training process and causing model divergence or overflow errors. Both phenomena critically impair convergence speed and accuracy, necessitating techniques like gradient clipping and advanced activation functions to maintain stable training dynamics.

Mathematical Foundations of Gradient Problems

Vanishing and exploding gradients occur due to the multiplicative nature of chain rule derivatives in deep neural networks, where repeated multiplication by values less than one leads to exponentially small gradients, while values greater than one cause exponential growth. Mathematically, this is explained by the eigenvalues of the weight matrices and their role in propagating gradients through layers, with norms significantly less than or greater than one creating instability. Understanding these foundational concepts is crucial for designing stable architectures and optimizing gradient-based learning algorithms.

Gradient Flow in Deep Learning Architectures

Vanishing gradient occurs when gradients shrink exponentially through layers, hindering effective weight updates in deep learning architectures, especially recurrent neural networks. Exploding gradient involves excessively large gradients causing unstable updates and divergence during training. Proper gradient flow management through techniques like gradient clipping and initialization strategies is essential for stable and efficient training of deep neural networks.

Techniques to Mitigate Vanishing Gradients

Techniques to mitigate vanishing gradients include the use of ReLU activation functions, which maintain stronger gradient signals compared to traditional sigmoid or tanh functions. Implementing batch normalization helps stabilize the learning process by normalizing inputs across layers, reducing gradient shrinkage. Additionally, architectures like Long Short-Term Memory (LSTM) networks and residual connections enable better gradient flow through deep networks, effectively addressing the vanishing gradient problem.

Strategies for Handling Exploding Gradients

Handling exploding gradients in artificial intelligence primarily involves gradient clipping, which constrains gradients within a predefined threshold to stabilize training. Techniques like weight regularization and adaptive learning rate methods, such as Adam or RMSprop, also mitigate gradient explosion by controlling parameter updates. Employing these strategies enhances neural network training efficiency and prevents numerical instability during backpropagation.

Role of Activation Functions in Gradient Issues

Activation functions critically influence vanishing and exploding gradient problems in deep neural networks by affecting the signal propagation during backpropagation. Functions like sigmoid and tanh often cause gradients to vanish due to their saturation regions, while ReLU and its variants help mitigate this by maintaining gradient flow through non-saturating outputs. Choosing or designing appropriate activation functions such as Leaky ReLU, ELU, or SELU is essential for stabilizing training and ensuring effective gradient dynamics.

Importance of Weight Initialization

Weight initialization plays a crucial role in mitigating vanishing and exploding gradient problems in deep neural networks by ensuring that initial weights maintain stable signal propagation across layers. Proper initialization methods, such as Xavier or He initialization, help preserve gradient magnitude, preventing gradients from diminishing to near zero or growing exponentially during backpropagation. This stability facilitates effective learning, faster convergence, and improved model performance.

Future Directions in Solving Gradient Problems

Future research in addressing vanishing and exploding gradient issues focuses on developing adaptive optimization algorithms that dynamically adjust learning rates and gradient norms to maintain stable training. Innovations in normalization techniques, such as advanced layer normalization and batch normalization variants, aim to preserve gradient flow in deep networks. Emerging architectures incorporating skip connections and attention mechanisms also contribute to mitigating gradient degradation, enhancing the scalability of deep learning models.

Vanishing Gradient vs Exploding Gradient Infographic

techiny.com

techiny.com