Embedding layers convert categorical data into dense vectors that capture semantic relationships, making them essential for natural language processing and recommendation systems. Dense layers, by contrast, perform learned weighted sums on inputs to extract features and enable complex pattern recognition in various neural networks. While embedding layers reduce dimensionality and preserve context, dense layers transform these embeddings or other data into actionable outputs for classification or regression tasks.

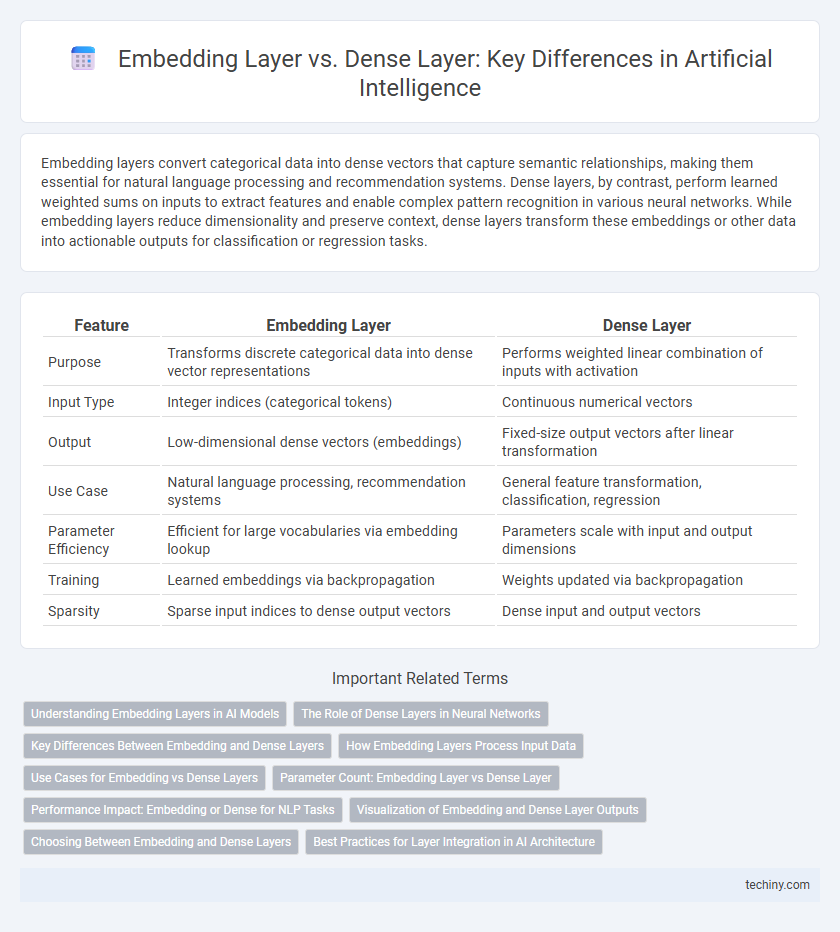

Table of Comparison

| Feature | Embedding Layer | Dense Layer |

|---|---|---|

| Purpose | Transforms discrete categorical data into dense vector representations | Performs weighted linear combination of inputs with activation |

| Input Type | Integer indices (categorical tokens) | Continuous numerical vectors |

| Output | Low-dimensional dense vectors (embeddings) | Fixed-size output vectors after linear transformation |

| Use Case | Natural language processing, recommendation systems | General feature transformation, classification, regression |

| Parameter Efficiency | Efficient for large vocabularies via embedding lookup | Parameters scale with input and output dimensions |

| Training | Learned embeddings via backpropagation | Weights updated via backpropagation |

| Sparsity | Sparse input indices to dense output vectors | Dense input and output vectors |

Understanding Embedding Layers in AI Models

Embedding layers transform categorical data into dense vector representations that capture semantic meanings, essential for natural language processing tasks. Unlike dense layers that perform linear transformations on continuous input features, embedding layers learn low-dimensional embeddings that preserve relationships between discrete tokens. This enables AI models to effectively handle large vocabularies by mapping words or categories into continuous vector spaces optimized during training.

The Role of Dense Layers in Neural Networks

Dense layers in neural networks perform the critical function of learning complex feature representations by applying weighted sums followed by nonlinear activations, which enables the model to capture intricate patterns in data. Unlike embedding layers that convert categorical variables into dense vectors, dense layers are fully connected layers that integrate and transform inputs from previous layers to contribute to decision-making or prediction tasks. Their ability to adjust weights during training facilitates the network's capacity to generalize from training data, making dense layers essential for deep learning architectures across image recognition, natural language processing, and other AI applications.

Key Differences Between Embedding and Dense Layers

Embedding layers transform sparse categorical data into dense vector representations by learning semantic relationships between categories, while dense layers process continuous numerical inputs through fully connected neurons. Embedding layers are specifically designed for natural language processing tasks involving discrete tokens, reducing dimensionality and capturing context, whereas dense layers generalize across various data types for regression or classification. The key difference lies in embeddings' ability to map high-dimensional sparse data to meaningful low-dimensional vectors, enabling parameter sharing, unlike dense layers that treat each input feature independently.

How Embedding Layers Process Input Data

Embedding layers transform discrete categorical data, such as words or tokens, into continuous vector representations by mapping each unique input to a dense, low-dimensional space. These embeddings capture semantic relationships and context by learning during training, enabling the model to understand similarities and patterns in the data. Unlike dense layers that process fixed-size numerical inputs through weighted sums and activations, embedding layers specifically handle sparse input indices and produce meaningful feature vectors crucial for natural language processing tasks.

Use Cases for Embedding vs Dense Layers

Embedding layers are essential for natural language processing tasks, transforming words into dense vectors that capture semantic meaning and relationships. Dense layers excel in structured data scenarios, performing feature extraction and classification by learning non-linear combinations of input features. Use embedding layers for text representation in sentiment analysis or recommendation systems, while dense layers suit tabular data in financial forecasting or image classification models.

Parameter Count: Embedding Layer vs Dense Layer

Embedding layers significantly reduce parameter count by mapping discrete input tokens to dense vectors, often requiring parameters proportional to vocabulary size times embedding dimension. Dense layers, in contrast, involve parameters equal to the product of input and output dimensions, which can become computationally expensive for high-dimensional input data. Choosing embedding layers over dense layers is crucial for managing memory efficiency and enabling scalable training in natural language processing tasks.

Performance Impact: Embedding or Dense for NLP Tasks

Embedding layers significantly improve performance in NLP tasks by efficiently converting sparse, high-dimensional categorical data into dense vectors, enabling models to capture semantic relationships between words. Dense layers, while powerful for general feature transformations, often require more parameters and computational resources when applied directly to high-dimensional text inputs, leading to slower training and inference. Utilizing embedding layers before dense layers optimizes both accuracy and computational efficiency, making embeddings the preferred choice for handling large-scale natural language datasets.

Visualization of Embedding and Dense Layer Outputs

The visualization of Embedding layer outputs reveals dense, low-dimensional vectors that represent discrete categorical variables, capturing semantic relationships and enabling clear identification of clusters in natural language processing tasks. Dense layer visualizations produce high-dimensional activations often requiring dimensionality reduction techniques such as t-SNE or PCA to interpret feature importance and neuron activations, reflecting complex hierarchical feature transformations. Comparing both, Embedding layers provide interpretable, meaningful spatial patterns directly linked to input semantics, whereas Dense layers encode abstract representations that depend heavily on network depth and task-specific training.

Choosing Between Embedding and Dense Layers

Choosing between Embedding and Dense layers depends on the type of input data and task requirements; Embedding layers are optimized for transforming categorical or sparse input data, such as words in natural language processing, into dense vector representations that capture semantic relationships. Dense layers, on the other hand, are fully connected layers designed for numerical feature inputs and are ideal for learning complex patterns after initial feature extraction. Embedding layers reduce dimensionality and computational overhead in sequence models, whereas Dense layers provide flexibility for nonlinear transformation and classification tasks.

Best Practices for Layer Integration in AI Architecture

Embedding layers convert categorical data into dense vector representations, essential for natural language processing and categorical feature handling, while Dense layers perform weighted sum operations for feature transformation and output prediction. Best practices for integrating these layers involve using Embedding layers as the initial step for categorical inputs to capture semantic relationships, followed by Dense layers for nonlinear feature extraction and decision-making tasks. Proper normalization, dimensionality tuning, and dropout regularization between these layers enhance model generalization and training efficiency in AI architectures.

Embedding layer vs Dense layer Infographic

techiny.com

techiny.com