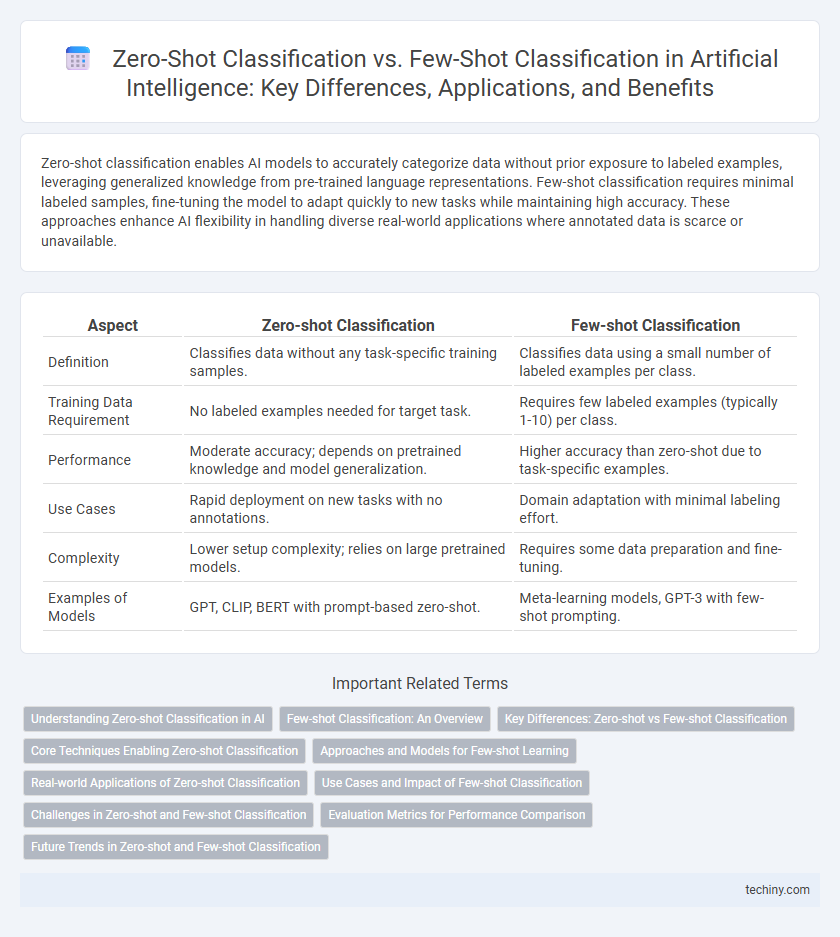

Zero-shot classification enables AI models to accurately categorize data without prior exposure to labeled examples, leveraging generalized knowledge from pre-trained language representations. Few-shot classification requires minimal labeled samples, fine-tuning the model to adapt quickly to new tasks while maintaining high accuracy. These approaches enhance AI flexibility in handling diverse real-world applications where annotated data is scarce or unavailable.

Table of Comparison

| Aspect | Zero-shot Classification | Few-shot Classification |

|---|---|---|

| Definition | Classifies data without any task-specific training samples. | Classifies data using a small number of labeled examples per class. |

| Training Data Requirement | No labeled examples needed for target task. | Requires few labeled examples (typically 1-10) per class. |

| Performance | Moderate accuracy; depends on pretrained knowledge and model generalization. | Higher accuracy than zero-shot due to task-specific examples. |

| Use Cases | Rapid deployment on new tasks with no annotations. | Domain adaptation with minimal labeling effort. |

| Complexity | Lower setup complexity; relies on large pretrained models. | Requires some data preparation and fine-tuning. |

| Examples of Models | GPT, CLIP, BERT with prompt-based zero-shot. | Meta-learning models, GPT-3 with few-shot prompting. |

Understanding Zero-shot Classification in AI

Zero-shot classification in AI enables models to categorize data into classes they have never encountered during training by leveraging semantic information and pre-trained knowledge representations like word embeddings. This approach relies on transferring learned concepts from known classes to unseen ones, eliminating the need for labeled examples in new categories. Zero-shot classification enhances scalability and adaptability in AI systems by allowing them to generalize beyond specific training datasets.

Few-shot Classification: An Overview

Few-shot classification enables AI models to accurately categorize new data with only a handful of labeled examples, leveraging prior knowledge from large pre-trained models. This approach reduces the dependency on extensive annotated datasets by utilizing meta-learning and transfer learning techniques to generalize from limited samples. Few-shot classification is pivotal in applications where data scarcity or high annotation costs make traditional supervised learning impractical.

Key Differences: Zero-shot vs Few-shot Classification

Zero-shot classification enables AI models to categorize data without prior examples of the target class by leveraging semantic knowledge and pre-trained embeddings, while few-shot classification relies on a limited number of labeled samples to adapt the model for new categories. Zero-shot approaches excel in scenarios with no available training instances, using external knowledge bases or natural language descriptions to infer class membership. Few-shot methods improve accuracy by fine-tuning on scarce labeled data, making them ideal when minimal but representative examples exist for fast model adaptation.

Core Techniques Enabling Zero-shot Classification

Zero-shot classification relies on semantic embeddings and natural language descriptions to interpret unseen classes without explicit training data, leveraging pre-trained transformer models like GPT or CLIP to bridge the gap between visual or textual inputs and class labels. Core techniques involve learning generalized representations through large-scale unsupervised pre-training and aligning multimodal data in a shared embedding space, enabling the model to infer class membership by measuring similarity scores. Contrastively, few-shot classification depends on fine-tuning with a small number of labeled examples, whereas zero-shot classification eliminates this dependency by exploiting semantic relationships and transfer learning capabilities.

Approaches and Models for Few-shot Learning

Few-shot classification leverages meta-learning algorithms such as Model-Agnostic Meta-Learning (MAML) and Prototypical Networks, enabling models to rapidly adapt to new tasks with minimal labeled data. These approaches utilize task-specific embeddings and optimization strategies to generalize from a small number of examples, enhancing performance in data-scarce environments. Contrastively, zero-shot classification relies on pretrained language models and semantic embeddings without any task-specific supervision, highlighting the distinct use of training paradigms between few-shot and zero-shot frameworks.

Real-world Applications of Zero-shot Classification

Zero-shot classification enables AI models to categorize data from unseen classes without any prior training examples, making it highly valuable for real-world applications such as content moderation, medical diagnosis, and natural language processing. This capability significantly reduces the need for extensive labeled datasets, accelerating deployment in dynamic environments like social media monitoring and emerging disease detection. By leveraging semantic embeddings and large pretrained models, zero-shot classification streamlines adaptation to evolving tasks with minimal human intervention.

Use Cases and Impact of Few-shot Classification

Few-shot classification enables AI systems to recognize new categories with minimal labeled examples, making it ideal for applications in personalized medicine, rare disease detection, and bespoke customer service. Unlike zero-shot classification, which relies on pre-existing knowledge and generalized models, few-shot methods improve accuracy by adapting quickly to specific, data-scarce environments. This capability significantly reduces the time and cost of training models for niche or emerging use cases, accelerating innovation across industries such as healthcare, finance, and cybersecurity.

Challenges in Zero-shot and Few-shot Classification

Zero-shot classification faces challenges such as limited generalization to unseen classes and reliance on accurate semantic embeddings for effective transfer learning. Few-shot classification struggles with data scarcity, making it difficult to capture class variability and preventing robust model adaptation from minimal examples. Both methods require advanced techniques to address sample efficiency and reduce model bias for improved accuracy in low-data scenarios.

Evaluation Metrics for Performance Comparison

Zero-shot classification performance is typically evaluated using accuracy, precision, recall, and F1-score on unseen classes without any labeled examples, emphasizing the model's ability to generalize from prior knowledge. Few-shot classification metrics also include these standard measures but are assessed on a small number of labeled examples per class, highlighting adaptability with limited data. Comparing these metrics reveals zero-shot methods excel in scalability across diverse classes, while few-shot methods achieve higher precision and recall with minimal supervision.

Future Trends in Zero-shot and Few-shot Classification

Future trends in zero-shot and few-shot classification emphasize the integration of large-scale pre-trained language models with adaptive learning techniques to enhance generalization across unseen classes. Advances in meta-learning and transfer learning aim to minimize labeled data dependence while improving model robustness and efficiency in diverse applications such as natural language processing and computer vision. Emerging research focuses on hybrid frameworks combining zero-shot and few-shot methods to optimize performance in real-world scenarios with limited annotated data.

Zero-shot Classification vs Few-shot Classification Infographic

techiny.com

techiny.com