Ensemble learning combines multiple models to improve overall prediction accuracy. Bagging (bootstrap aggregating) is one ensemble technique: it reduces variance by training the same model type on different bootstrap samples of the data. Bagging enhances stability and helps prevent overfitting, making it effective for high-variance models like decision trees. Ensemble learning as a whole, including bagging, boosting, and stacking, offers flexible strategies to optimize performance across various machine learning tasks.

Table of Comparison

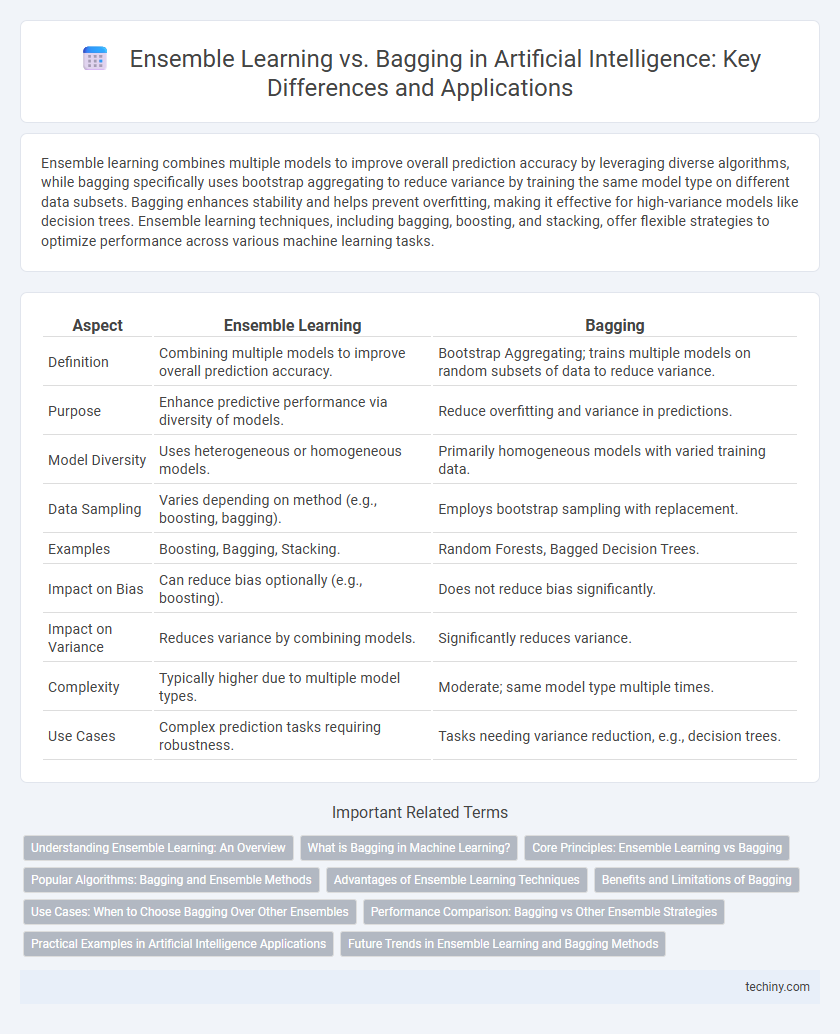

| Aspect | Ensemble Learning | Bagging |
|---|---|---|
| Definition | Combining multiple models to improve overall prediction accuracy. | Bootstrap Aggregating; trains multiple models on random subsets of data to reduce variance. |
| Purpose | Enhance predictive performance via diversity of models. | Reduce overfitting and variance in predictions. |
| Model Diversity | Uses heterogeneous or homogeneous models. | Primarily homogeneous models with varied training data. |
| Data Sampling | Varies depending on method (e.g., boosting, bagging). | Employs bootstrap sampling with replacement. |
| Examples | Boosting, Bagging, Stacking. | Random Forests, Bagged Decision Trees. |
| Impact on Bias | Can reduce bias, depending on the method (e.g., boosting). | Does not reduce bias significantly. |
| Impact on Variance | Reduces variance by combining models. | Significantly reduces variance. |
| Complexity | Typically higher due to multiple model types. | Moderate; same model type multiple times. |
| Use Cases | Complex prediction tasks requiring robustness. | Tasks needing variance reduction, e.g., decision trees. |

Understanding Ensemble Learning: An Overview

Ensemble learning combines multiple models to improve predictive performance and reduce overfitting by leveraging diverse algorithms or data subsets. Bagging, a key ensemble technique, builds several versions of a predictor by training each on different bootstrap samples and averages their outputs to enhance stability and accuracy. Understanding these methods highlights how ensemble approaches boost model robustness and generalization in artificial intelligence applications.

What is Bagging in Machine Learning?

Bagging, or Bootstrap Aggregating, is a machine learning ensemble technique designed to improve model stability and accuracy by training multiple versions of a predictor on different random subsets of the original dataset. Each subset is generated through bootstrap sampling, that is, sampling with replacement, so every model sees a slightly different dataset. Aggregating the models' predictions, by voting for classification or averaging for regression, reduces variance and curbs overfitting in models such as decision trees. Bagging is particularly effective for high-variance, low-bias models.
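The procedure described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a production implementation: the toy dataset, the helper names (`bootstrap_sample`, `train_stump`, `bagged_predict`), and the choice of a one-feature threshold classifier as the base learner are all hypothetical and chosen only to keep the code short. In practice the base learner would usually be a deeper decision tree.

```python
import random
from collections import Counter


def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]


def train_stump(sample):
    """Fit a one-feature threshold classifier (a 'decision stump').

    Tries each observed x as a threshold and keeps the one with the
    fewest training errors for the rule: predict 1 if x >= threshold.
    """
    best_threshold, best_errors = None, None
    for threshold, _ in sample:
        errors = sum((x >= threshold) != bool(y) for x, y in sample)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def bagged_predict(thresholds, x):
    """Aggregate the stumps' predictions by majority vote."""
    votes = Counter(int(x >= t) for t in thresholds)
    return votes.most_common(1)[0][0]


rng = random.Random(0)  # fixed seed so the run is repeatable

# Toy data (illustrative only): label is 1 when x >= 5, with two labels flipped as noise.
data = [(x, int(x >= 5)) for x in range(10)]
data[2] = (2, 1)
data[7] = (7, 0)

# Train 25 stumps, each on its own bootstrap sample of the data.
thresholds = [train_stump(bootstrap_sample(data, rng)) for _ in range(25)]

print("learned thresholds:", sorted(thresholds))
print("vote at x=9:", bagged_predict(thresholds, 9))
```

Each stump may learn a different threshold because its bootstrap sample over- or under-represents the noisy points; the majority vote smooths those differences out.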

Core Principles: Ensemble Learning vs Bagging

Ensemble learning combines multiple models to improve predictive performance by capturing diverse patterns and reducing errors. Bagging, a specific ensemble technique, trains multiple base models independently on bootstrapped data samples, emphasizing variance reduction and model stability. Both approaches leverage the strengths of combining models, but bagging specifically targets overfitting through random data subsets to enhance overall accuracy.
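One reason bootstrapped samples produce different base models is that each sample omits a sizeable share of the original points. The sketch below checks this empirically, assuming a dataset of `n` points identified by their indices (the function name `unique_fraction` and the value of `n` are illustrative). A given point is missed by one bootstrap sample with probability (1 - 1/n)^n, which approaches 1/e, so roughly 63% of distinct points appear in each sample.

```python
import random


def unique_fraction(n, rng):
    """Fraction of the n original indices that appear in one bootstrap sample."""
    sample = [rng.randrange(n) for _ in range(n)]  # n draws with replacement
    return len(set(sample)) / n


n = 10_000
rng = random.Random(42)  # fixed seed for repeatability

observed = unique_fraction(n, rng)
expected = 1 - (1 - 1 / n) ** n  # probability a given point is drawn at least once

print(f"observed {observed:.3f}, expected {expected:.3f}")
```

The points left out of a sample are often called out-of-bag points and can serve as a held-out set for the model trained on that sample.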

Popular Algorithms: Bagging and Ensemble Methods

Bagging, a popular ensemble method, improves model stability by training multiple versions of a predictor on different subsets of the data and aggregating their outputs; Random Forests are a common implementation. Ensemble learning extends beyond bagging: boosting algorithms such as Gradient Boosting Machines (GBM) and AdaBoost train models sequentially, with each new model focusing on the errors of its predecessors. Both approaches use multiple learners to reduce variance or bias and to improve accuracy in tasks like classification and regression.

Advantages of Ensemble Learning Techniques

Ensemble learning techniques, including bagging, enhance model accuracy by combining predictions from multiple base learners, reducing overfitting and improving generalization. Unlike a single model, an ensemble draws on diverse models to capture varied data patterns, boosting robustness and predictive performance in complex tasks. Depending on the method, this approach can mitigate bias, variance, or both, leading to more reliable outcomes in artificial intelligence applications.

Benefits and Limitations of Bagging

Bagging, or Bootstrap Aggregating, improves model accuracy by reducing variance through training multiple base learners on different subsets of data, enhancing stability and robustness in predictions. It effectively mitigates overfitting in high-variance models such as decision trees but may suffer from increased computational complexity and reduced interpretability compared to single models. Despite its benefits in variance reduction, bagging does not address bias, limiting its effectiveness on models with inherent high bias.
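The variance and bias claims can be made concrete with a standard identity. As a sketch, assume $B$ base learners $\hat{f}_1, \dots, \hat{f}_B$ whose predictions at a point $x$ are identically distributed with variance $\sigma^2$ and pairwise correlation $\rho$ (these symbols are introduced here for illustration and do not appear elsewhere in the article). Then:

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat{f}_b(x)\right)
= \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2,
\qquad
\mathbb{E}\!\left[\frac{1}{B}\sum_{b=1}^{B}\hat{f}_b(x)\right]
= \mathbb{E}\big[\hat{f}_1(x)\big].
$$

The second variance term shrinks as $B$ grows, which is the variance reduction bagging provides; the first term remains, so highly correlated learners gain less. The expectation of the average equals that of a single learner, which is why averaging alone leaves bias unchanged.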

Use Cases: When to Choose Bagging Over Other Ensembles

Bagging excels in reducing variance and preventing overfitting, making it ideal for high-variance models like decision trees when dealing with noisy datasets or small sample sizes. Use bagging ensemble techniques such as Random Forests for tasks requiring stability and robustness in predictive performance, especially in classification and regression problems with complex feature interactions. Bagging is preferable to boosting when model simplicity and parallel training matter more than incremental error correction, because its base learners are trained independently of one another.
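The parallel-training point follows from the fact that no bagged learner needs another learner's output. The sketch below illustrates that structure using Python's standard `concurrent.futures` module. The base learner here (`fit_mean_model`, which simply averages the targets in its bootstrap sample) and the toy targets are hypothetical stand-ins chosen for brevity, not a recommended model.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def fit_mean_model(job):
    """Hypothetical base learner: 'fit' by averaging one bootstrap sample's targets."""
    targets, seed = job
    rng = random.Random(seed)  # per-learner seed keeps every fit repeatable
    sample = rng.choices(targets, k=len(targets))  # bootstrap sample
    return mean(sample)


targets = [2.0, 4.0, 4.0, 5.0, 7.0, 9.0]  # toy regression targets (illustrative)
jobs = [(targets, seed) for seed in range(8)]

# No learner depends on another's result, so the fits can be dispatched concurrently.
with ThreadPoolExecutor(max_workers=4) as pool:
    fitted = list(pool.map(fit_mean_model, jobs))

bagged_estimate = mean(fitted)  # aggregate by averaging
print("bagged estimate:", bagged_estimate)
```

For CPU-bound learners in CPython, a process pool would typically be used instead of threads. A boosting loop cannot be restructured this way, since each round depends on the errors of the previous one.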

Performance Comparison: Bagging vs Other Ensemble Strategies

Bagging, or Bootstrap Aggregating, enhances model stability and accuracy by training multiple base learners on different subsets of data, effectively reducing variance compared to single models. Compared with boosting, bagging often holds up better on noisy datasets, because boosting can end up fitting noisy or mislabeled points as it concentrates on hard examples. However, boosting algorithms generally outperform bagging in terms of bias reduction and predictive accuracy on cleaner, well-structured data.

Practical Examples in Artificial Intelligence Applications

Ensemble learning leverages multiple models to improve predictive performance, with bagging as a popular technique that builds diverse classifiers by training on bootstrap samples of the dataset. In practical AI applications, bagging underlies random forests, which are used for tasks such as image recognition and fraud detection, where it enhances robustness by reducing variance. Its main advantage over other ensemble methods lies in domains that require accuracy and stability under noisy data conditions.

Future Trends in Ensemble Learning and Bagging Methods

Future trends in ensemble learning and bagging methods emphasize the integration of deep learning architectures and automated machine learning (AutoML) techniques to enhance model robustness and predictive accuracy. Research is increasingly exploring hybrid ensembles that combine diverse base learners and optimize weight allocations dynamically using reinforcement learning. Advances in scalable distributed computing frameworks enable more efficient training of large bagging ensembles on high-dimensional datasets, driving real-time AI applications forward.

Ensemble learning vs Bagging Infographic

techiny.com
