Transformers outperform RNNs by processing entire sequences simultaneously, enabling better handling of long-range dependencies and parallelization. Unlike RNNs, which suffer from vanishing gradients and sequential bottlenecks, Transformers use self-attention mechanisms to capture contextual relationships more effectively. This architectural advantage leads to significant improvements in tasks such as language modeling, translation, and text generation.

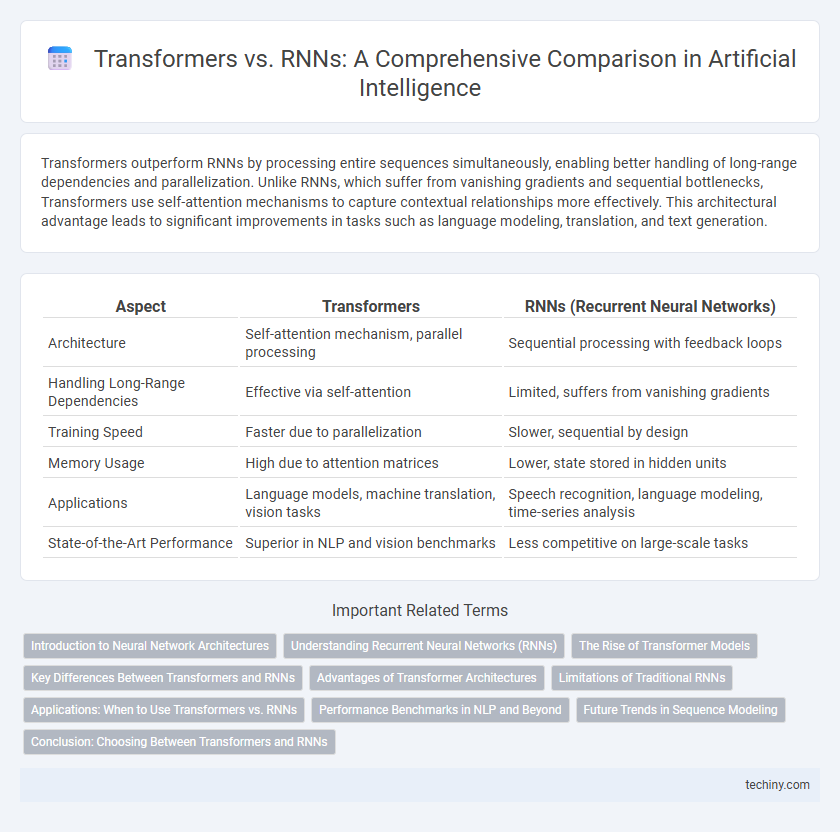

Table of Comparison

| Aspect | Transformers | RNNs (Recurrent Neural Networks) |

|---|---|---|

| Architecture | Self-attention mechanism, parallel processing | Sequential processing with feedback loops |

| Handling Long-Range Dependencies | Effective via self-attention | Limited, suffers from vanishing gradients |

| Training Speed | Faster due to parallelization | Slower, sequential by design |

| Memory Usage | High due to attention matrices | Lower, state stored in hidden units |

| Applications | Language models, machine translation, vision tasks | Speech recognition, language modeling, time-series analysis |

| State-of-the-Art Performance | Superior in NLP and vision benchmarks | Less competitive on large-scale tasks |

Introduction to Neural Network Architectures

Transformers revolutionize neural network architectures by leveraging self-attention mechanisms to process input sequences in parallel, enhancing efficiency and scalability. Recurrent Neural Networks (RNNs) handle sequential data through iterative state updates but suffer from vanishing gradients in long sequences, limiting their effectiveness. The Transformer architecture overcomes these limitations, enabling superior performance in tasks like language modeling and machine translation by capturing long-range dependencies more effectively.

Understanding Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are designed to process sequential data by maintaining a hidden state that captures information from previous inputs, enabling the model to exhibit temporal dynamic behavior. Unlike traditional feedforward networks, RNNs use loops in their architecture to retain context, making them effective for tasks like language modeling and speech recognition. However, their inability to efficiently handle long-range dependencies often leads to challenges such as vanishing gradients, which Transformers overcome with self-attention mechanisms.

The Rise of Transformer Models

Transformer models have revolutionized natural language processing by enabling parallel computation and effectively capturing long-range dependencies, overcoming the sequential limitations of Recurrent Neural Networks (RNNs). Their self-attention mechanism allows transformers to handle vast amounts of data with higher efficiency and accuracy, leading to breakthroughs in machine translation, text generation, and speech recognition. The scalability and adaptability of transformers have driven widespread adoption across AI research and industry applications, outperforming traditional RNN architectures in both performance and training speed.

Key Differences Between Transformers and RNNs

Transformers leverage self-attention mechanisms enabling parallel processing of input data, contrasting with RNNs' sequential data handling and inherent difficulty with long-range dependencies. The architecture of Transformers allows for better scalability and efficiency in training large models, while RNNs often suffer from vanishing gradient problems that limit their performance on complex tasks. Transformers have become the foundation for state-of-the-art models in natural language processing, surpassing traditional RNN-based approaches in accuracy and speed.

Advantages of Transformer Architectures

Transformer architectures provide significant advantages over Recurrent Neural Networks (RNNs) due to their ability to process entire input sequences simultaneously, resulting in faster training and improved scalability. The self-attention mechanism in transformers enhances the modeling of long-range dependencies, overcoming the vanishing gradient problem commonly seen in RNNs. Furthermore, transformers enable parallelization, making them highly efficient for large-scale natural language processing tasks and leading to state-of-the-art performance in machine translation and language modeling.

Limitations of Traditional RNNs

Traditional Recurrent Neural Networks (RNNs) suffer from vanishing and exploding gradient problems, which hinder their ability to learn long-range dependencies in sequential data. Their sequential processing nature limits parallelization, resulting in slower training times compared to Transformer models. Furthermore, RNNs struggle with capturing complex contextual relationships across entire sequences, a challenge mitigated by the self-attention mechanisms in Transformers.

Applications: When to Use Transformers vs. RNNs

Transformers excel in handling long-range dependencies and large-scale data, making them ideal for applications like language translation, text summarization, and speech recognition where context preservation is crucial. RNNs, particularly LSTMs and GRUs, are suitable for sequential data with shorter dependencies, such as time series forecasting, anomaly detection, and real-time signal processing. Choosing between Transformers and RNNs depends on the complexity of the sequence, data size, and performance requirements of the specific AI task.

Performance Benchmarks in NLP and Beyond

Transformers outperform RNNs in NLP benchmarks such as GLUE and SuperGLUE due to their superior parallel processing and ability to capture long-range dependencies. Their architecture facilitates faster training times and higher accuracy in tasks like machine translation, text generation, and sentiment analysis. Beyond NLP, Transformers show promise in areas including computer vision and reinforcement learning, where RNNs struggle with scalability and sequence modeling.

Future Trends in Sequence Modeling

Transformers are rapidly surpassing RNNs in sequence modeling due to their superior parallel processing capabilities and scalability, enabling more efficient handling of long-range dependencies in data. Emerging developments like sparse attention mechanisms and adaptive computation are enhancing Transformers' efficiency and reducing computational costs, driving broader adoption in natural language processing and time-series analysis. Future trends emphasize integrating multimodal data and leveraging self-supervised learning to improve model generalization and contextual understanding in complex sequential tasks.

Conclusion: Choosing Between Transformers and RNNs

Transformers outperform RNNs in handling long-range dependencies and parallel processing, making them ideal for complex natural language processing tasks. RNNs, with their sequential data handling and lower computational cost, remain suitable for simpler time-series predictions and real-time applications. Selecting between Transformers and RNNs depends on task complexity, data size, and computational resources.

Transformers vs RNNs Infographic

techiny.com

techiny.com