Explainability in artificial intelligence refers to the ability to make the outputs of complex models understandable to humans by providing clear, detailed justifications for decisions. Interpretability focuses on the extent to which a human can comprehend the internal mechanics of a model without external explanations. Both concepts enhance trust and transparency but serve different purposes: explainability is about clarifying outputs, while interpretability involves understanding the model's inner workings.

Table of Comparison

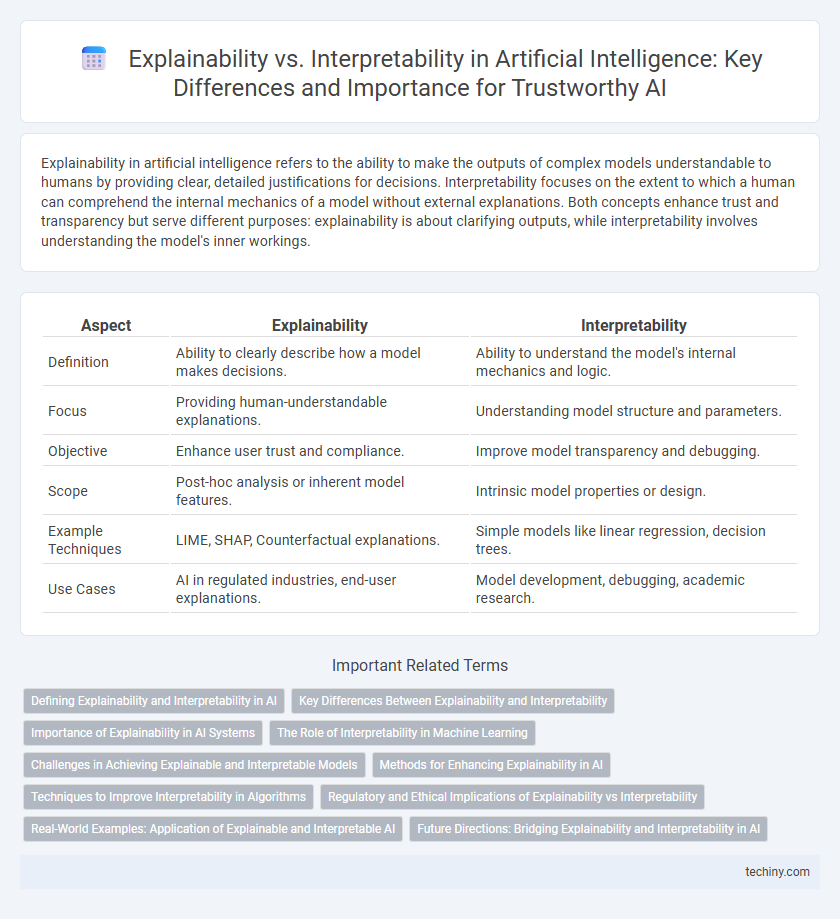

| Aspect | Explainability | Interpretability |
|---|---|---|
| Definition | Ability to clearly describe how a model makes decisions. | Ability to understand the model's internal mechanics and logic. |
| Focus | Providing human-understandable explanations. | Understanding model structure and parameters. |
| Objective | Enhance user trust and compliance. | Improve model transparency and debugging. |
| Scope | Post-hoc analysis or inherent model features. | Intrinsic model properties or design. |
| Example Techniques | LIME, SHAP, Counterfactual explanations. | Simple models like linear regression, decision trees. |
| Use Cases | AI in regulated industries, end-user explanations. | Model development, debugging, academic research. |

Defining Explainability and Interpretability in AI

Explainability in AI refers to the ability of a model to provide clear, comprehensible reasons for its outputs, enabling users to understand the decision-making process in human terms. Interpretability involves the extent to which a human can consistently predict the model's behavior and infer relationships within the data based on the model's structure or parameters. Both explainability and interpretability are crucial for transparency, trust, and accountability in AI systems, especially in high-stakes applications like healthcare and finance.

Key Differences Between Explainability and Interpretability

Explainability in artificial intelligence refers to the extent to which a model's decisions can be presented in human-understandable terms, often through post-hoc methods that are applied to an already trained model. Interpretability emphasizes the model's inherent transparency, enabling users to directly comprehend how input features influence outputs without external explanation tools. The key difference is that explainability is typically layered on after training to describe what the model did, while interpretability is an intrinsic property of the model's design and structure.

Importance of Explainability in AI Systems

Explainability in AI systems is crucial for building user trust and ensuring accountability by providing clear and understandable reasons behind model decisions. Unlike interpretability, which focuses on the internal mechanics of models, explainability emphasizes actionable insights that stakeholders can comprehend and validate. Regulatory compliance, ethical considerations, and enhanced decision-making processes heavily rely on the explainability of AI algorithms, particularly in high-stakes domains like healthcare, finance, and autonomous systems.

The Role of Interpretability in Machine Learning

Interpretability in machine learning provides clear insights into how models make decisions by mapping input features directly to outcomes, enhancing trust and transparency. Explainability, by contrast, often relies on post-hoc analysis: it generates human-understandable explanations but does not make the underlying model any simpler. Prioritizing interpretability supports regulatory compliance and facilitates debugging, both of which are essential for deploying AI systems in critical domains like healthcare and finance.
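A linear model is a simple case of features mapping directly to an outcome: the prediction is a bias plus one additive term per feature, so each term can be read off as that feature's contribution. The sketch below is only illustrative; the feature names, weights, and applicant values are hypothetical and do not come from any real system.

```python
# Hypothetical linear scoring model: every name and number here is illustrative.
WEIGHTS = {"age": 0.5, "income": 2.0, "debt": -3.0}
BIAS = 1.0


def predict(features):
    """Return the model output: bias plus the weighted sum of the features."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in features.items())


def contributions(features):
    """Split a prediction into one additive term per feature."""
    return {name: WEIGHTS[name] * value for name, value in features.items()}


applicant = {"age": 4.0, "income": 3.0, "debt": 2.0}
parts = contributions(applicant)
score = predict(applicant)

# Print the per-feature terms, largest magnitude first, then the total.
for name, part in sorted(parts.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>6}: {part:+.1f}")
print(f"  bias: {BIAS:+.1f}")
print(f" score: {score:+.1f}")
```

Because the terms sum exactly to the prediction, no separate explanation tool is needed; the model's parameters are the explanation.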

Challenges in Achieving Explainable and Interpretable Models

Achieving explainable and interpretable AI models is challenged by the complexity of deep learning architectures, which often function as opaque "black boxes" with millions of parameters. Balancing model accuracy with transparency requires sophisticated techniques to translate internal model logic into human-understandable explanations without sacrificing performance. Data heterogeneity and high dimensionality further complicate the extraction of meaningful insights, impeding the development of universally interpretable frameworks.

Methods for Enhancing Explainability in AI

Techniques for enhancing explainability in AI include model-agnostic approaches like LIME and SHAP, which explain individual predictions of a complex model: LIME fits a simple surrogate model around the prediction of interest, while SHAP attributes the prediction to the input features using Shapley values. Intrinsic methods involve designing inherently interpretable models such as decision trees; attention mechanisms are sometimes inspected for a similar purpose, although attention weights are not guaranteed to reflect how the model actually reached its output. Visualization tools and rule extraction techniques further contribute to explainability by translating abstract model behaviors into human-understandable formats, facilitating trust and accountability in AI systems.
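To make the idea behind SHAP concrete, the following sketch computes exact Shapley values for a tiny model by brute force. It does not use the SHAP library; `black_box`, the instance, and the all-zeros baseline are hypothetical choices made for illustration, and treating an "absent" feature as its baseline value is one common assumption among several.

```python
from itertools import combinations
from math import factorial


def black_box(x):
    """Hypothetical opaque model with an interaction between features 0 and 1."""
    return 2.0 * x[0] + x[0] * x[1] + 3.0 * x[2]


def shapley_values(model, instance, baseline):
    """Exact Shapley attributions; 'absent' features take their baseline value."""
    n = len(instance)

    def value(coalition):
        # Evaluate the model with only the coalition's features set to the instance.
        mixed = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


instance = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phis = shapley_values(black_box, instance, baseline)
for index, phi in enumerate(phis):
    print(f"feature {index}: {phi:+.2f}")
```

The attributions sum to the difference between the prediction for the instance and the prediction for the baseline, and the interaction term is split evenly between the two features involved. The enumeration grows exponentially with the number of features, which is why practical tools rely on approximations.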

Techniques to Improve Interpretability in Algorithms

Techniques to improve interpretability in artificial intelligence algorithms start with inherently interpretable models such as decision trees, rule-based learners, and linear models, which are understandable by design. Feature importance ranking and partial dependence plots help visualize the influence of individual features on model predictions, enhancing transparency. Where a complex model is still required, model-agnostic methods like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) provide local explanations of individual predictions, though they describe the model after the fact rather than making the model itself interpretable.
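A partial dependence curve can be sketched in a few lines: force one feature to each value on a grid, keep the other features as observed, and average the model's predictions. The model, dataset, and grid below are hypothetical and exist only to show the mechanics.

```python
def black_box(row):
    """Hypothetical opaque model: nonlinear in feature 0, linear in feature 1."""
    return row[0] ** 2 + 0.5 * row[1]


def partial_dependence(model, data, feature_index, grid):
    """Average prediction over the data with one feature forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in data:
            modified = list(row)  # copy so the original dataset is left untouched
            modified[feature_index] = value
            total += model(modified)
        curve.append(total / len(data))
    return curve


data = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
grid = [0.0, 1.0, 2.0]
pd_curve = partial_dependence(black_box, data, 0, grid)
for value, avg in zip(grid, pd_curve):
    print(f"feature_0={value:.1f} -> average prediction {avg:.2f}")
```

Plotting `pd_curve` against `grid` would show the quadratic effect of feature 0. Note that the averaging assumes the feature can be varied independently of the others, which can mislead when features are strongly correlated.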

Regulatory and Ethical Implications of Explainability vs Interpretability

Explainability in artificial intelligence supports transparent decision-making and helps organizations comply with regulations such as the EU's GDPR and AI Act, which impose transparency obligations on certain automated decisions. Interpretability facilitates ethical accountability by allowing stakeholders to understand model behavior and identify biases, reducing risks of discrimination and promoting fairness. Balancing explainability and interpretability is crucial for building trustworthy AI systems that meet legal standards and uphold ethical principles in deployment.

Real-World Examples: Application of Explainable and Interpretable AI

Explainable AI (XAI) provides transparent insights into model decisions, which is crucial in healthcare diagnostics, where understanding why a model predicts a condition can improve trust and patient outcomes. Interpretable AI models, such as decision trees in credit scoring, allow financial institutions to clearly communicate approval reasons to clients while complying with regulations. Autonomous driving systems can combine both approaches, explaining sensor-based decisions while keeping the operational logic human-understandable.
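As a minimal sketch of the credit scoring case, a decision tree can be written as nested rules that return the decision together with the rules that fired along the way. The field names and thresholds here are hypothetical and are not taken from any real lender or regulation.

```python
def credit_decision(applicant):
    """Hand-written decision tree; returns the decision and the rules that fired."""
    reasons = []

    # First split: payment history (hypothetical threshold).
    if applicant["missed_payments"] > 2:
        reasons.append("missed_payments > 2")
        return "decline", reasons
    reasons.append("missed_payments <= 2")

    # Second split: debt-to-income ratio (hypothetical threshold).
    if applicant["debt_to_income"] > 0.4:
        reasons.append("debt_to_income > 0.4")
        return "decline", reasons
    reasons.append("debt_to_income <= 0.4")

    return "approve", reasons


decision, reasons = credit_decision({"missed_payments": 0, "debt_to_income": 0.55})
print(decision)
for rule in reasons:
    print(" -", rule)
```

The list of fired rules is the path from the root of the tree to the leaf, so the same structure that makes the decision also supplies the reasons that can be communicated to the client.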

Future Directions: Bridging Explainability and Interpretability in AI

Future directions in AI emphasize bridging explainability and interpretability by developing hybrid models that combine transparent mechanisms with post-hoc explanation techniques. Advances in causal inference and symbolic reasoning are expected to enhance both model transparency and user-centric interpretability. Integrating human-in-the-loop approaches will further align AI system outputs with stakeholder comprehension and trust, facilitating more accountable and reliable AI deployment.

Explainability vs Interpretability Infographic

techiny.com
