Forward propagation passes input data through a neural network's layers to generate predictions, while backpropagation computes the gradients of the loss with respect to the network's weights from the prediction error. An optimizer such as gradient descent then uses these gradients to update the weights and reduce the loss, improving the model's accuracy over time. Efficient training of deep learning models relies on these two passes working together.

Table of Comparison

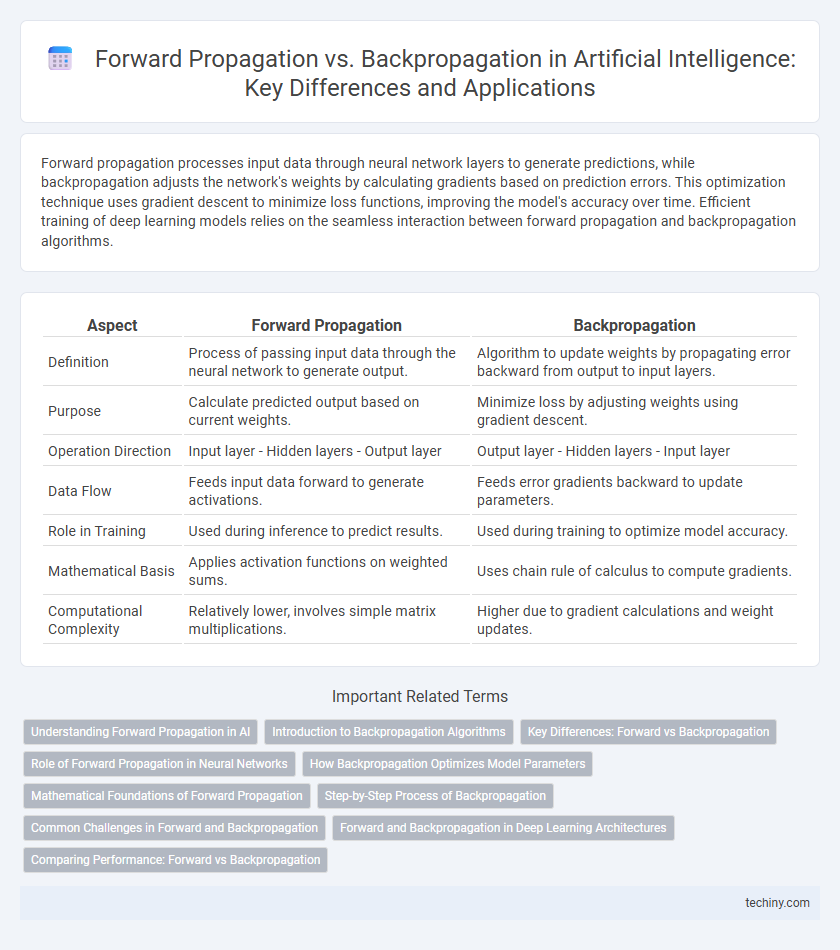

| Aspect | Forward Propagation | Backpropagation |
|---|---|---|
| Definition | Process of passing input data through the neural network to generate output. | Algorithm that computes weight gradients by propagating the error backward from the output layer toward the input layer. |
| Purpose | Calculate predicted output based on current weights. | Supply the gradients that gradient descent uses to adjust weights and minimize loss. |
| Operation Direction | Input layer → Hidden layers → Output layer | Output layer → Hidden layers → Input layer |
| Data Flow | Feeds input data forward to generate activations. | Feeds error gradients backward so parameters can be updated. |
| Role in Training | Used during both training and inference to compute predictions. | Used only during training to optimize model accuracy. |
| Mathematical Basis | Applies activation functions on weighted sums. | Uses chain rule of calculus to compute gradients. |
| Computational Complexity | Relatively lower; dominated by matrix multiplications and activation functions. | Higher, because it adds gradient calculations and needs the stored forward activations. |

Understanding Forward Propagation in AI

Forward propagation in AI involves passing input data through each layer of a neural network to generate an output, using weighted sums and activation functions to transform the data. This process enables the model to make predictions by mapping inputs to outputs based on learned parameters. Understanding forward propagation is crucial for grasping how neural networks process information before adjustments are made through backpropagation.
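
As a minimal sketch of the layer-by-layer computation described above, the following pure-Python example runs a forward pass through a small fully connected network. The 2-3-1 layer sizes, the weight values, the sigmoid activation, and the function names `dense_forward` and `forward` are illustrative assumptions, not something this article specifies.

```python
import math

def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_forward(inputs, weights, biases, activation):
    """One layer: weighted sum per neuron, plus bias, then activation.

    weights[j] holds the incoming weights of neuron j.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

def forward(inputs, layers):
    """Pass the input through every layer in order and return the output."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_forward(activations, weights, biases, sigmoid)
    return activations

# Hypothetical 2-3-1 network with arbitrary illustrative weights.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.5, 0.2]], [0.05]),
]
prediction = forward([1.0, 2.0], layers)
print(prediction)
```

The output is a one-element list holding a value between 0 and 1, because the final layer has a single sigmoid neuron. Real frameworks express the same computation as matrix multiplications rather than Python loops.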

Introduction to Backpropagation Algorithms

Backpropagation is essential for training artificial neural networks. It propagates the loss gradient from the output layer back toward the input layer, and gradient descent uses that gradient to adjust the network weights iteratively so that the error shrinks. This combination enables efficient learning and improves model accuracy in diverse AI applications.
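
The weight adjustment mentioned above is commonly written as the plain gradient descent update rule. The symbols here are assumptions introduced for illustration: $w$ is any single weight, $L$ is the loss, and $\eta$ is the learning rate.

$$
w \leftarrow w - \eta \, \frac{\partial L}{\partial w}
$$

Backpropagation supplies the derivative $\partial L / \partial w$ for every weight; the update rule then decides how far to move in the opposite direction.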

Key Differences: Forward vs Backpropagation

Forward propagation processes input data through neural network layers to generate predictions by applying weights and activation functions, while backpropagation calculates gradients by propagating the error backward so that these weights can be updated. Forward propagation is responsible for the network's output generation, whereas backpropagation supports optimization by supplying the gradients that gradient descent uses to minimize loss. The primary distinction lies in their roles: forward propagation runs during both training and inference, while backpropagation runs only during training, where it follows each forward pass.

Role of Forward Propagation in Neural Networks

Forward propagation in neural networks involves transmitting input data through successive layers, applying weights and activation functions to generate output predictions. This process transforms raw data into meaningful representations by calculating weighted sums and activations at each neuron, enabling the model to approximate complex functions. Accurate forward propagation is crucial for establishing predictions that backpropagation later refines by adjusting weights to minimize error.

How Backpropagation Optimizes Model Parameters

Backpropagation improves model parameters by calculating the gradient of the loss function with respect to each weight through the chain rule, enabling precise adjustments that minimize prediction errors. This process systematically propagates errors backward from the output layer to the input layer, ensuring efficient parameter updates across multiple layers. By optimizing weights iteratively, backpropagation enhances the neural network's accuracy and generalization in tasks such as image recognition and natural language processing.
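
To make the chain rule step concrete, here is a small sketch for a single sigmoid neuron with a squared-error loss, checked against a finite-difference estimate. The neuron, the loss, the numeric values, and the function names are all assumptions chosen for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Squared error of a single sigmoid neuron."""
    a = sigmoid(w * x + b)
    return 0.5 * (a - y) ** 2

def analytic_grad_w(w, b, x, y):
    """dL/dw = dL/da * da/dz * dz/dw (chain rule)."""
    a = sigmoid(w * x + b)
    dL_da = a - y            # derivative of 0.5 * (a - y)^2
    da_dz = a * (1.0 - a)    # derivative of the sigmoid
    dz_dw = x                # derivative of w * x + b with respect to w
    return dL_da * da_dz * dz_dw

def numeric_grad_w(w, b, x, y, eps=1e-6):
    """Central finite difference, used only as a sanity check."""
    return (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)

# Arbitrary illustrative values.
w, b, x, y = 0.8, -0.1, 1.5, 1.0
print(analytic_grad_w(w, b, x, y), numeric_grad_w(w, b, x, y))
```

The two printed numbers should agree to several decimal places. In a multi-layer network the same product of local derivatives is extended one layer at a time, which is what lets backpropagation reuse intermediate results instead of recomputing them.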

Mathematical Foundations of Forward Propagation

In forward propagation, the input data is multiplied by weights and summed with biases before being passed through an activation function, facilitating non-linear transformations essential for model learning. This process is mathematically represented by matrix multiplications and nonlinear functions such as sigmoid, ReLU, or tanh, enabling the network to approximate complex functions. The efficient computation of these linear and nonlinear operations underpins the accuracy and speed of neural network predictions.
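
In one common notation, the computation described above can be written per layer as follows. The symbols are assumptions introduced for illustration: $x$ is the input vector, $W^{(l)}$ and $b^{(l)}$ are the weight matrix and bias vector of layer $l$, $\sigma$ is the activation function, and $a^{(l)}$ is the layer's output.

$$
a^{(0)} = x, \qquad
z^{(l)} = W^{(l)} a^{(l-1)} + b^{(l)}, \qquad
a^{(l)} = \sigma\!\left(z^{(l)}\right), \qquad l = 1, \dots, N
$$

The prediction is $a^{(N)}$, the activation of the last of the $N$ layers.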

Step-by-Step Process of Backpropagation

Backpropagation starts by calculating the error between the predicted output and the actual target, then propagates this error backward through the network layer by layer, computing the partial derivative of the loss function with respect to each weight along the way. Gradient descent then uses these derivatives to update the weights. Repeating this adjustment reduces the loss and typically improves the neural network's accuracy over successive epochs.
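
The steps above can be sketched as a short training loop. This is a minimal illustration under stated assumptions, not a reference implementation: the 2-2-1 network, the fixed starting weights, the logical OR dataset, the squared-error loss, and the learning rate are all hypothetical choices.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical 2-2-1 network with fixed illustrative starting weights.
W1 = [[0.1, -0.2], [0.3, 0.4]]   # W1[j][i]: input i -> hidden neuron j
b1 = [0.0, 0.0]
W2 = [0.2, -0.3]                 # hidden neuron j -> output
b2 = 0.0

# Toy dataset: logical OR.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
        ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

def forward(x):
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    out = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, out

def total_loss():
    return sum(0.5 * (forward(x)[1] - y) ** 2 for x, y in data)

def train(epochs=2000, lr=0.5):
    global b2
    for _ in range(epochs):
        for x, y in data:
            # 1. Forward pass, keeping the hidden activations.
            h, out = forward(x)
            # 2. Error signal at the output (squared error, sigmoid).
            delta_out = (out - y) * out * (1.0 - out)
            # 3. Propagate the error back to each hidden neuron,
            #    using the output weights before they are updated.
            delta_h = [delta_out * W2[j] * h[j] * (1.0 - h[j])
                       for j in range(len(h))]
            # 4. Gradient descent step on every weight and bias.
            for j in range(len(h)):
                W2[j] -= lr * delta_out * h[j]
                b1[j] -= lr * delta_h[j]
                for i in range(len(x)):
                    W1[j][i] -= lr * delta_h[j] * x[i]
            b2 -= lr * delta_out

initial_loss = total_loss()
train()
final_loss = total_loss()
print(initial_loss, final_loss)
```

The loss after training should be lower than the loss before it. Updating after every sample, as done here, is a stochastic variant; averaging the gradients over the whole dataset before each update is an equally valid choice.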

Common Challenges in Forward and Backpropagation

Training with forward propagation and backpropagation faces several common challenges. The best known are vanishing and exploding gradients, which arise during backpropagation and hinder effective learning in deep neural networks; the forward pass has a related problem when activations saturate or grow without bound. Careful weight initialization and tuning of hyperparameters like learning rate help ensure stable convergence. Computational cost and memory limitations, notably the need to store forward activations for the backward pass, also affect the efficiency and scalability of training.
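
A rough way to see why gradients vanish or explode is to multiply the per-layer factors that the chain rule accumulates. The sketch below makes strong simplifying assumptions: one sigmoid neuron per layer, and the same pre-activation `z` and weight in every layer. The function name `gradient_scale` is hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_derivative(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def gradient_scale(depth, z=0.0, weight=1.0):
    """Factor by which a gradient is scaled after `depth` sigmoid layers,
    assuming one neuron per layer with identical z and weight."""
    return (weight * sigmoid_derivative(z)) ** depth

for depth in (1, 5, 10, 20):
    print(depth, gradient_scale(depth))
```

The sigmoid derivative never exceeds 0.25, so with unit weights the factor shrinks at least fourfold per layer. Under the same assumptions, weights larger than 4 push the per-layer factor above 1 and the product grows instead, which is the exploding case.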

Forward and Backpropagation in Deep Learning Architectures

Forward propagation in deep learning involves passing input data through multiple network layers to generate output predictions by applying weighted sums and activation functions. Backpropagation calculates error derivatives layer by layer, and an optimizer such as stochastic gradient descent uses them to update the weights. The two passes alternate throughout training in deep neural networks to minimize the loss function and improve model accuracy.

Comparing Performance: Forward vs Backpropagation

Forward propagation computes output predictions by passing input data through network layers using weighted sums and activation functions. Backpropagation enables learning from data by propagating errors backward to obtain the gradients that gradient descent uses to update weights. Forward propagation alone determines inference speed, while the combined cost of the forward and backward passes determines training time, and the quality of the computed gradients affects training accuracy and convergence rates.

Forward Propagation vs Backpropagation Infographic

techiny.com
