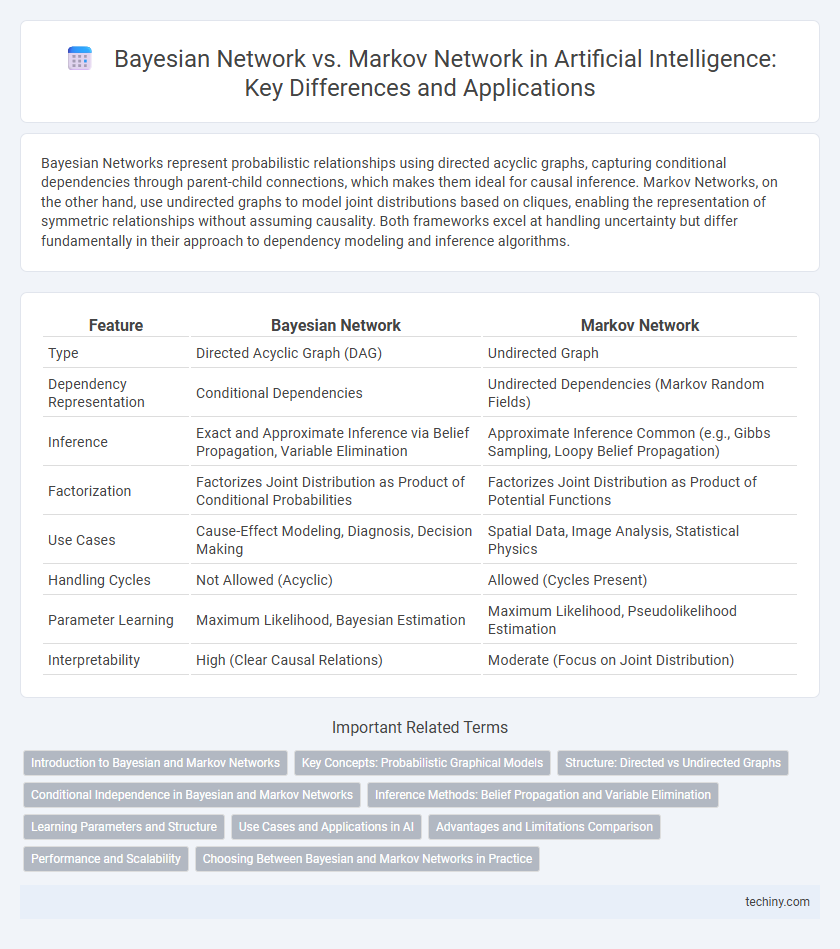

Bayesian Networks represent probabilistic relationships using directed acyclic graphs, capturing conditional dependencies through parent-child connections, which makes them a natural fit for causal modeling when the edges are given a causal interpretation. Markov Networks, on the other hand, use undirected graphs to model joint distributions as products of potentials over cliques, representing symmetric relationships without assuming any direction of influence. Both frameworks handle uncertainty well but differ fundamentally in how they represent dependencies, which in turn shapes how their parameters are learned and how inference is carried out.

Table of Comparison

| Feature | Bayesian Network | Markov Network |
|---|---|---|
| Graph Type | Directed Acyclic Graph (DAG) | Undirected Graph |
| Dependency Representation | Directed conditional dependencies (each variable given its parents) | Symmetric dependencies via clique potentials (also called Markov Random Fields) |
| Inference | Exact (Variable Elimination, Belief Propagation on polytrees) and approximate methods | Same exact methods on sparsely connected graphs; approximate methods (Gibbs Sampling, Loopy Belief Propagation) common on loopy graphs |
| Factorization | Joint distribution as a product of conditional probabilities, one per variable given its parents | Joint distribution as a product of clique potential functions, normalized by a partition function Z |
| Use Cases | Cause-Effect Modeling, Diagnosis, Decision Making | Spatial Data, Image Analysis, Statistical Physics |
| Handling Cycles | Directed cycles not allowed | Undirected loops allowed |
| Parameter Learning | Maximum Likelihood (closed-form counts with complete data), Bayesian Estimation | Maximum Likelihood (iterative, requires Z), Pseudolikelihood Estimation |
| Interpretability | High (edges can be read causally when the model is built causally) | Moderate (focus on the joint distribution) |

Introduction to Bayesian and Markov Networks

Bayesian Networks represent probabilistic relationships using directed acyclic graphs, where nodes denote random variables and edges signify conditional dependencies. Markov Networks use undirected graphs to model joint distributions, factorizing them over cliques and reading conditional independencies from graph separation. Both methods are foundational probabilistic graphical models, enabling efficient reasoning under uncertainty in artificial intelligence applications.
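For reference, the two factorizations can be written in standard notation, where Pa(X_i) denotes the parents of X_i, ψ_C is a nonnegative potential on clique C, 𝒞 is the set of cliques, and Z is the normalizing constant (partition function). These symbols are introduced here for illustration:

```latex
% Bayesian network: one conditional probability per variable given its parents
P(X_1, \dots, X_n) = \prod_{i=1}^{n} P\bigl(X_i \mid \mathrm{Pa}(X_i)\bigr)

% Markov network: product of nonnegative clique potentials, normalized by Z
P(X_1, \dots, X_n) = \frac{1}{Z} \prod_{C \in \mathcal{C}} \psi_C(X_C),
\qquad
Z = \sum_{x} \prod_{C \in \mathcal{C}} \psi_C(x_C)
```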

Key Concepts: Probabilistic Graphical Models

Bayesian Networks utilize directed acyclic graphs to model conditional dependencies among random variables, allowing efficient representation of joint probability distributions through local conditional probabilities. Markov Networks employ undirected graphs, combining Markov properties with clique potentials to define the global joint distribution without requiring a directed structure. Both models serve as fundamental probabilistic graphical models in artificial intelligence for reasoning under uncertainty, with Bayesian Networks emphasizing directional (often causal) relationships and Markov Networks excelling at representing symmetric dependencies.
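The difference between local conditional probabilities and clique potentials is easiest to see on a toy model. The sketch below assumes a hypothetical two-variable Bayesian network A → B and a hypothetical Markov network with a single clique {A, B}; all numbers are made up for illustration.

```python
from itertools import product

# Hypothetical Bayesian network A -> B over binary variables.
p_a = {0: 0.7, 1: 0.3}                       # P(A)
p_b_given_a = {(0, 0): 0.9, (0, 1): 0.1,     # P(B=b | A=a), keyed by (a, b)
               (1, 0): 0.2, (1, 1): 0.8}

def bn_joint(a, b):
    """Joint probability as a product of local conditional probabilities."""
    return p_a[a] * p_b_given_a[(a, b)]

# Hypothetical Markov network with a single clique {A, B}.
# Potentials are nonnegative compatibility scores, not probabilities.
psi_ab = {(0, 0): 3.0, (0, 1): 1.0, (1, 0): 1.0, (1, 1): 3.0}

# Partition function Z: the unnormalized product summed over every assignment.
Z = sum(psi_ab[(a, b)] for a, b in product([0, 1], repeat=2))

def mn_joint(a, b):
    """Joint probability as a clique-potential product divided by Z."""
    return psi_ab[(a, b)] / Z

for a, b in product([0, 1], repeat=2):
    print(a, b, round(bn_joint(a, b), 3), round(mn_joint(a, b), 3))
```

The Bayesian network's joint sums to 1 with no extra step, while the Markov network only becomes a distribution after dividing by Z. For n binary variables that sum has 2^n terms, which is why computing Z dominates the cost of working with larger Markov networks.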

Structure: Directed vs Undirected Graphs

Bayesian networks use directed acyclic graphs (DAGs) to represent probabilistic relationships, where a directed edge indicates that one variable directly influences another; that influence can be read as causal when the network is built from causal knowledge. Markov networks utilize undirected graphs, capturing dependencies through cliques without implying any direction, which suits mutual or symmetric relationships. The choice between directed and undirected structures affects both the inference methods used and how conditional independencies are interpreted in artificial intelligence models.
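Because a Bayesian network's edges are directed, a candidate structure must be free of directed cycles, whereas an undirected Markov network has no such constraint. A minimal sketch of that check, using Kahn's topological-sort algorithm on a hypothetical three-node graph (the node names are illustrative):

```python
from collections import deque

def is_acyclic(edges, nodes):
    """Return True if the directed graph has no directed cycle (Kahn's algorithm)."""
    indegree = {n: 0 for n in nodes}
    children = {n: [] for n in nodes}
    for parent, child in edges:
        children[parent].append(child)
        indegree[child] += 1
    # Start from nodes with no incoming edges and peel them off one by one.
    queue = deque(n for n in nodes if indegree[n] == 0)
    visited = 0
    while queue:
        node = queue.popleft()
        visited += 1
        for c in children[node]:
            indegree[c] -= 1
            if indegree[c] == 0:
                queue.append(c)
    # Every node is removed only when no directed cycle blocks the process.
    return visited == len(nodes)

nodes = ["Rain", "Sprinkler", "WetGrass"]
dag_edges = [("Rain", "Sprinkler"), ("Rain", "WetGrass"), ("Sprinkler", "WetGrass")]
print(is_acyclic(dag_edges, nodes))     # True: a valid Bayesian network structure

cyclic_edges = [("Rain", "Sprinkler"), ("Sprinkler", "WetGrass"), ("WetGrass", "Rain")]
print(is_acyclic(cyclic_edges, nodes))  # False: contains a directed cycle
```

Note that the first graph still contains an undirected loop (Rain, Sprinkler, WetGrass); only directed cycles are ruled out.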

Conditional Independence in Bayesian and Markov Networks

Bayesian Networks encode conditional independence through directed acyclic graphs, where a node is independent of its non-descendants given its parents, enabling efficient probabilistic inference. Markov Networks represent conditional independence via undirected graphs, relying on the concept of Markov blankets whereby a node is conditionally independent of all other nodes given its neighbors. These differences in graph structure directly influence how conditional independence is modeled and exploited for reasoning in uncertain environments.
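The two local independence statements can be read directly off the graph. This sketch uses a hypothetical four-variable DAG and a hypothetical undirected four-cycle; it only inspects graph structure and does not test independence in data.

```python
def parents(node, edges):
    """Direct parents of `node` in a DAG given as (parent, child) pairs."""
    return {p for p, c in edges if c == node}

def descendants(node, edges):
    """All nodes reachable from `node` by following directed edges."""
    found, stack = set(), [node]
    while stack:
        current = stack.pop()
        for p, c in edges:
            if p == current and c not in found:
                found.add(c)
                stack.append(c)
    return found

def bn_local_independence(node, nodes, edges):
    """Bayesian network: node is independent of its non-descendants given its parents.
    Returns (conditioning set, set the node is independent of)."""
    pa = parents(node, edges)
    others = set(nodes) - descendants(node, edges) - pa - {node}
    return pa, others

def mn_local_independence(node, nodes, edges):
    """Markov network: node is independent of all other nodes given its neighbors.
    Returns (neighbors, set the node is independent of)."""
    nbrs = {b for a, b in edges if a == node} | {a for a, b in edges if b == node}
    return nbrs, set(nodes) - nbrs - {node}

# Hypothetical DAG: Cloudy -> Sprinkler, Cloudy -> Rain, both -> WetGrass.
bn_nodes = ["Cloudy", "Sprinkler", "Rain", "WetGrass"]
bn_edges = [("Cloudy", "Sprinkler"), ("Cloudy", "Rain"),
            ("Sprinkler", "WetGrass"), ("Rain", "WetGrass")]
print(bn_local_independence("Sprinkler", bn_nodes, bn_edges))
# Sprinkler is independent of Rain given Cloudy

# Hypothetical undirected 4-cycle A - B - C - D - A (loops are allowed).
mn_nodes = ["A", "B", "C", "D"]
mn_edges = [("A", "B"), ("B", "C"), ("C", "D"), ("D", "A")]
print(mn_local_independence("A", mn_nodes, mn_edges))
# A is independent of C given {B, D}
```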

Inference Methods: Belief Propagation and Variable Elimination

Bayesian Networks use variable elimination and belief propagation for probabilistic inference, exploiting the factorization encoded in their directed acyclic graph to compute posterior probabilities; belief propagation is exact on tree-structured (polytree) networks. Markov Networks use the same algorithms, because both operate on products of factors regardless of edge direction. When the graph contains many loops, as grid-structured Markov Networks usually do, exact inference can become intractable, and approximate methods such as loopy belief propagation or Gibbs sampling are used to estimate marginal distributions. The main practical difference is normalization: the conditional probability tables of a Bayesian Network are already normalized, whereas the potentials of a Markov Network must be divided by the partition function Z, which is itself expensive to compute.
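Variable elimination can be illustrated on a hypothetical chain A → B → C with made-up probabilities. The sketch computes P(C) twice: once by brute-force enumeration of the full joint, and once by summing out A and then B, so only small intermediate factors are ever built.

```python
from itertools import product

# Hypothetical chain A -> B -> C over binary variables (illustrative numbers).
p_a = {0: 0.6, 1: 0.4}
p_b_a = {(0, 0): 0.7, (0, 1): 0.3, (1, 0): 0.2, (1, 1): 0.8}  # P(B=b | A=a), keyed (a, b)
p_c_b = {(0, 0): 0.9, (0, 1): 0.1, (1, 0): 0.4, (1, 1): 0.6}  # P(C=c | B=b), keyed (b, c)

def marginal_c_enumeration():
    """Brute force: sum the full joint over A and B (exponential in general)."""
    return {c: sum(p_a[a] * p_b_a[(a, b)] * p_c_b[(b, c)]
                   for a, b in product([0, 1], repeat=2))
            for c in (0, 1)}

def marginal_c_variable_elimination():
    """Eliminate A, then B, keeping only one-variable intermediate factors."""
    # Eliminate A: tau(b) = sum_a P(a) * P(b | a)
    tau = {b: sum(p_a[a] * p_b_a[(a, b)] for a in (0, 1)) for b in (0, 1)}
    # Eliminate B: P(c) = sum_b tau(b) * P(c | b)
    return {c: sum(tau[b] * p_c_b[(b, c)] for b in (0, 1)) for c in (0, 1)}

ve = marginal_c_variable_elimination()
brute = marginal_c_enumeration()
print(ve, brute)
```

The same elimination steps apply unchanged if the tables are treated as Markov network potentials; with unnormalized potentials, the final factor must additionally be divided by its sum, which equals Z.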

Learning Parameters and Structure

The parameters of a Bayesian Network are the entries of its conditional probability tables, which can be estimated by counting when the data are complete and with algorithms such as Expectation-Maximization when data are missing; the structure is often determined using score-based or constraint-based methods that search over directed acyclic graphs. Markov Networks estimate the parameters of their clique potentials using Maximum Likelihood Estimation or pseudo-likelihood; exact maximum likelihood has no closed form because the partition function couples all parameters, so it is usually solved iteratively. Structure learning for Markov Networks focuses on recovering the undirected graph through methods like L1-regularization or neighborhood selection. Both frameworks face computational challenges in structure learning but offer distinct advantages: Bayesian Networks capture causal relationships with directed edges, whereas Markov Networks excel at modeling symmetric dependencies.
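With complete data, the Bayesian network likelihood decomposes per variable, so each conditional probability table entry is a normalized count. A minimal sketch of that maximum-likelihood estimate for a single parent-child pair, on a hypothetical dataset (EM for missing data and Markov network learning are not shown):

```python
from collections import Counter

def mle_cpt(samples, parent, child):
    """Maximum-likelihood estimate of P(child | parent) from complete data.

    Each entry is a normalized count: P(child=c | parent=p) = N(p, c) / N(p).
    Combinations never observed get no entry (an implicit estimate of zero).
    """
    joint = Counter((s[parent], s[child]) for s in samples)
    parent_counts = Counter(s[parent] for s in samples)
    return {(p, c): n / parent_counts[p] for (p, c), n in joint.items()}

# Hypothetical, fully observed dataset over two binary variables.
data = [
    {"Rain": 1, "WetGrass": 1},
    {"Rain": 1, "WetGrass": 1},
    {"Rain": 1, "WetGrass": 0},
    {"Rain": 0, "WetGrass": 0},
    {"Rain": 0, "WetGrass": 0},
    {"Rain": 0, "WetGrass": 1},
    {"Rain": 0, "WetGrass": 0},
]
cpt = mle_cpt(data, "Rain", "WetGrass")
print(cpt)  # keys are (Rain, WetGrass) pairs
```

No comparable one-line estimate exists for a Markov network, because changing any potential changes Z and therefore every probability at once.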

Use Cases and Applications in AI

Bayesian Networks excel in applications involving causal inference, diagnosis, and decision-making where directional probabilistic dependencies are essential, such as in medical diagnosis and risk assessment systems. Markov Networks are preferred in scenarios requiring modeling of undirected dependencies and spatial relationships, like image analysis, natural language processing, and social network modeling. Both frameworks support AI tasks involving uncertainty, but Bayesian Networks are typically used for problems with clear causal structures, while Markov Networks suit contexts with symmetric relationships and complex joint distributions.

Advantages and Limitations Comparison

Bayesian Networks excel in modeling causal relationships through directed acyclic graphs, enabling efficient reasoning under uncertainty, but they cannot represent cyclic (feedback) dependencies directly, and searching over possible structures becomes expensive as the number of variables grows. Markov Networks, represented as undirected graphs, handle symmetric relationships and complex dependencies naturally, yet they often require more computational resources for inference and parameter estimation because of the partition function. The choice between the two depends on the specific application domain, with Bayesian Networks preferred for causal inference and Markov Networks favored in spatial or relational data contexts.

Performance and Scalability

Bayesian Networks excel in representing asymmetric relationships with directed acyclic graphs, and exact inference is efficient when the network is small or sparsely connected; as connectivity grows, its cost rises exponentially with the graph's treewidth, and the same limit applies to exact inference in Markov Networks. Markov Networks, utilizing undirected graphs, handle symmetric relationships and are commonly applied to large, regularly structured systems such as image grids, where approximate methods that rely on local Markov properties keep inference tractable in high-dimensional spaces. Scalability in Markov Networks benefits from these parallelizable, localized computations, whereas Bayesian Networks may encounter bottlenecks during exact inference as network size grows.
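Localized computation is visible in Gibbs sampling on a grid-shaped Markov network. The sketch below assumes a hypothetical Ising-style model (spins of +1 or -1, pairwise potential exp(coupling · s_i · s_j) between grid neighbors); each update reads only the four neighbors of a site, never Z. The sweep here is sequential; parallel variants update sites that are not neighbors at the same time.

```python
import math
import random

def gibbs_ising(size, coupling, sweeps, seed=0):
    """Gibbs sampling for a hypothetical Ising-style Markov network on a grid.

    Resampling one spin needs only its neighbors (its Markov blanket),
    so the partition function Z is never computed.
    """
    rng = random.Random(seed)
    grid = [[rng.choice([-1, 1]) for _ in range(size)] for _ in range(size)]
    for _ in range(sweeps):
        for i in range(size):
            for j in range(size):
                # Sum of neighboring spins (free boundary: off-grid neighbors skipped).
                field = sum(grid[x][y]
                            for x, y in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1))
                            if 0 <= x < size and 0 <= y < size)
                # P(s_ij = +1 | neighbors) = 1 / (1 + exp(-2 * coupling * field))
                p_up = 1.0 / (1.0 + math.exp(-2.0 * coupling * field))
                grid[i][j] = 1 if rng.random() < p_up else -1
    return grid

grid = gibbs_ising(size=8, coupling=0.4, sweeps=50)
mean_spin = sum(map(sum, grid)) / 64
print(f"mean spin: {mean_spin:.2f}")
```

The cost of one sweep grows linearly with the number of sites, which is what makes this kind of local approximate inference attractive for large grids.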

Choosing Between Bayesian and Markov Networks in Practice

Choosing between Bayesian networks and Markov networks depends on the nature of dependencies and available data in the problem domain. Bayesian networks excel in scenarios with directed causal relationships and conditional independencies, making them suitable for expert systems and diagnosis tasks. Markov networks are preferred when modeling undirected dependencies and symmetric relationships, often applied in image processing and spatial data analysis.

Bayesian Network vs Markov Network Infographic

techiny.com