Skip connections let a network route activations around one or more layers, which supports feature reuse and better information flow. Residual connections are the additive form of this idea: the input of a block is added to its output, giving gradients a direct path through the network and mitigating the vanishing gradient problem in deep networks. Both improve model performance, but they differ in how features are merged and in where they are typically applied, as in ResNet and U-Net.

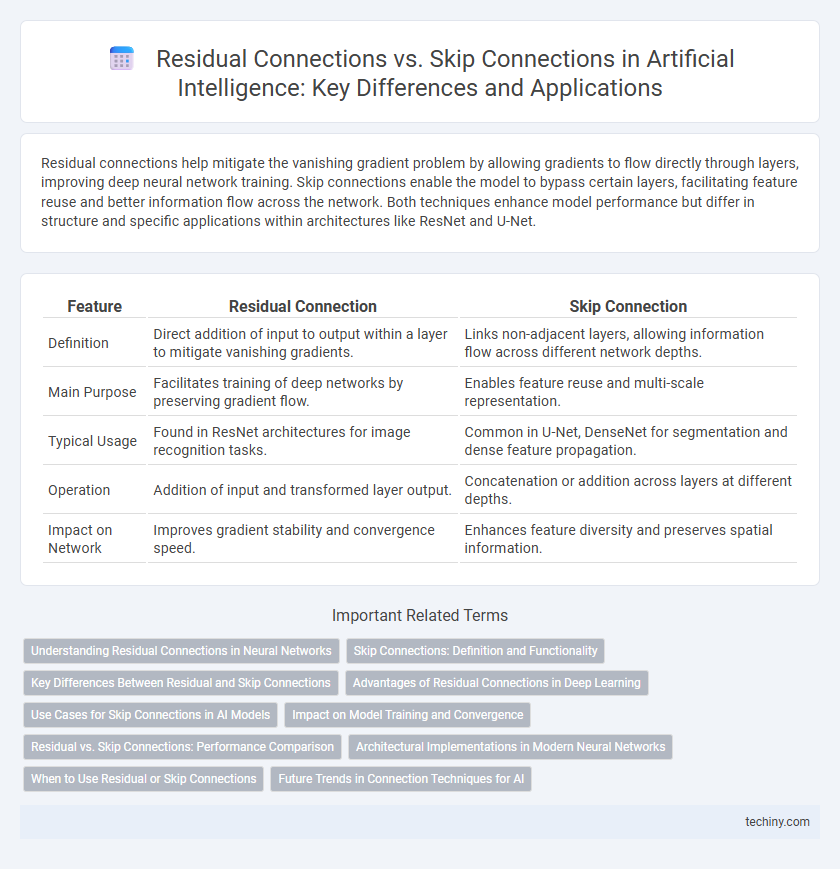

Table of Comparison

| Feature | Residual Connection | Skip Connection |
|---|---|---|
| Definition | Direct addition of input to output within a layer to mitigate vanishing gradients. | Links non-adjacent layers, allowing information flow across different network depths. |
| Main Purpose | Facilitates training of deep networks by preserving gradient flow. | Enables feature reuse and multi-scale representation. |
| Typical Usage | Found in ResNet architectures for image recognition tasks. | Common in U-Net, DenseNet for segmentation and dense feature propagation. |
| Operation | Addition of input and transformed layer output. | Concatenation or addition across layers at different depths. |
| Impact on Network | Improves gradient stability and convergence speed. | Enhances feature diversity and preserves spatial information. |

Understanding Residual Connections in Neural Networks

Residual connections help mitigate the vanishing gradient problem in deep architectures by giving gradients an identity path around each block. A residual connection is a skip connection of a specific form: the input x of a small stack of layers is added to the stack's output F(x), so the block computes F(x) + x and the layers only need to learn the residual F(x). Because the operation is an element-wise addition, the original feature information is preserved, and the two terms must have matching shapes (or the input is projected to match). This improves convergence and stability, and it made residual networks (ResNets) a foundational architecture in deep learning for tasks like image recognition.
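The additive shortcut can be sketched in a few lines of plain Python. This is a minimal illustration rather than ResNet's actual implementation: the helper names (`linear`, `relu`, `residual_block`) and the tiny dense layers are assumptions made for the example, and real ResNet blocks use convolutions, normalization, and a further activation after the addition.

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, a) for a in v]


def linear(v, weights, bias):
    """Dense layer: `weights` holds one row per output unit."""
    return [sum(w * a for w, a in zip(row, v)) + b
            for row, b in zip(weights, bias)]


def residual_block(x, w1, b1, w2, b2):
    """Compute y = x + F(x), where F is two dense layers with a ReLU between."""
    hidden = relu(linear(x, w1, b1))
    fx = linear(hidden, w2, b2)
    # The shortcut is an element-wise addition, so F(x) must match x in size.
    return [a + b for a, b in zip(x, fx)]


x = [1.0, -2.0, 0.5]
zero_w = [[0.0] * 3 for _ in range(3)]
zero_b = [0.0] * 3

# With all-zero weights F(x) is zero, so the block reduces to the identity.
y = residual_block(x, zero_w, zero_b, zero_w, zero_b)
print(y)
```

With zero weights the residual branch contributes nothing and the block returns its input unchanged. That is one intuition for why stacking more residual blocks need not make a network harder to fit than a shallower one.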

Skip Connections: Definition and Functionality

Skip connections, also known as shortcut connections, allow the output of a previous layer to be fed directly into a later layer, bypassing intermediate layers. This architectural feature addresses the vanishing gradient problem in deep neural networks by preserving gradient flow and enabling better information propagation. Skip connections are fundamental in models like ResNet, improving training efficiency and enabling deeper network architectures.

Key Differences Between Residual and Skip Connections

Residual connections integrate input features directly with output from subsequent layers using element-wise addition, enhancing gradient flow and mitigating vanishing gradient issues. Skip connections typically bypass one or more layers by concatenating or adding features, allowing the model to preserve and reuse information from earlier layers. The key difference lies in residual connections focusing on identity mapping to facilitate training of very deep networks, whereas skip connections emphasize feature reuse across various network depths for richer representations.
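The two merge operations mentioned above behave differently with respect to feature size, which a small sketch makes concrete. The function names `add_skip` and `concat_skip` are hypothetical, and flat Python lists stand in for feature vectors.

```python
def add_skip(earlier, later):
    """Additive merge (residual style): sizes must match, output size is unchanged."""
    if len(earlier) != len(later):
        raise ValueError("element-wise addition needs equal-length features")
    return [a + b for a, b in zip(earlier, later)]


def concat_skip(earlier, later):
    """Concatenating merge (U-Net / DenseNet style): output size is the sum of both."""
    return list(earlier) + list(later)


earlier = [1.0, 2.0]   # features from an earlier layer
later = [0.5, -1.0]    # features produced by a later layer

added = add_skip(earlier, later)
joined = concat_skip(earlier, later)
print(added, len(added))    # same length as the inputs
print(joined, len(joined))  # twice as long: both feature sets are kept separately
```

Addition blends the two feature sets into one vector of the same size, while concatenation keeps both intact and leaves it to the following layer to combine them, at the cost of a wider input.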

Advantages of Residual Connections in Deep Learning

Residual connections make it practical to train much deeper neural networks than plain stacked layers allow, because the identity path keeps gradients from vanishing as depth grows. The identity mapping also improves convergence speed in architectures like ResNet. Compared with plain networks of the same depth that have no shortcuts, this typically leads to better accuracy in image recognition and other AI tasks.

Use Cases for Skip Connections in AI Models

Skip connections are widely used in deep architectures because they give gradients a short path back to earlier layers, which makes training more efficient. They are prevalent in convolutional neural networks (CNNs) for image segmentation, such as the U-Net architecture, where they carry high-resolution encoder features to the decoder and so help preserve spatial information. Transformer models also rely on them: each attention and feed-forward sublayer is wrapped in an additive shortcut, which keeps deep stacks of layers trainable.
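In transformer models the shortcut is usually placed around each sublayer. The sketch below shows only that wiring and rests on loud assumptions: `toy_mixing` and `toy_feed_forward` are hypothetical stand-ins, not real attention or feed-forward layers, and layer normalization is omitted.

```python
def with_residual(sublayer):
    """Wrap a sublayer so that it computes x + sublayer(x)."""
    def wrapped(x):
        out = sublayer(x)
        return [a + b for a, b in zip(x, out)]
    return wrapped


def toy_mixing(x):
    """Stand-in for attention: every position receives the mean of all positions."""
    mean = sum(x) / len(x)
    return [mean for _ in x]


def toy_feed_forward(x):
    """Stand-in for the position-wise feed-forward sublayer."""
    return [0.5 * max(0.0, a) for a in x]


def transformer_style_layer(x):
    """One layer: two sublayers, each with its own additive shortcut."""
    x = with_residual(toy_mixing)(x)
    x = with_residual(toy_feed_forward)(x)
    return x


layer_out = transformer_style_layer([1.0, -1.0, 3.0])
print(layer_out)
```

Because every sublayer adds to its input instead of replacing it, the original token representation remains reachable through the whole stack.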

Impact on Model Training and Convergence

Residual connections improve model training by enabling gradients to flow directly through layers, mitigating the vanishing gradient problem and accelerating convergence in deep neural networks. Skip connections, while similarly facilitating gradient propagation, primarily allow the model to reuse features from earlier layers, enhancing feature representation and thus improving training stability. Both techniques significantly enhance convergence rates, but residual connections are particularly effective in very deep architectures by explicitly learning the residual mapping.
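The effect on gradients can be illustrated with a scalar chain-rule calculation. For a plain layer h -> F(h) the local derivative is F'(h), while for a residual layer h -> h + F(h) it is 1 + F'(h). The sketch below assumes, purely for illustration, that F'(h) equals 0.1 at every one of 20 layers; the function names are hypothetical.

```python
def plain_gradient(local_grads):
    """d(output)/d(input) for a stack of plain layers h -> F(h)."""
    grad = 1.0
    for g in local_grads:
        grad *= g
    return grad


def residual_gradient(local_grads):
    """Same quantity for layers h -> h + F(h): the identity path adds 1 to each factor."""
    grad = 1.0
    for g in local_grads:
        grad *= 1.0 + g
    return grad


# Assumed value of F'(h) at each of 20 layers (illustrative, not measured).
local_grads = [0.1] * 20

plain = plain_gradient(local_grads)
residual = residual_gradient(local_grads)
print(f"plain:    {plain:.3e}")
print(f"residual: {residual:.3e}")
```

Under this assumption the plain product shrinks to roughly 1e-20, while the residual product stays above 1, because each factor contains the constant 1 contributed by the shortcut.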

Residual vs. Skip Connections: Performance Comparison

Residual connections improve deep neural network training through identity mapping and better gradient flow, and the gain is measured against plain networks without shortcuts: the original ResNet experiments reported that deep residual networks were easier to optimize and more accurate on image recognition benchmarks than plain networks of the same depth. A head-to-head ranking of residual connections against skip connections is less meaningful, because residual connections are themselves additive skip connections. Concatenating skip connections, as in U-Net and DenseNet, trade extra channels and memory for explicit feature reuse, so which form performs better depends on the architecture and the task.

Architectural Implementations in Modern Neural Networks

Residual connections, integral to architectures like ResNet, ease the training of deep neural networks by allowing identity mappings that directly pass input features to deeper layers, mitigating the vanishing gradient problem. Skip connections broadly refer to pathways that bypass intermediate layers, enabling multi-scale feature fusion critical in models such as U-Net for image segmentation. Modern neural network designs implement residual and skip connections strategically to enhance gradient flow and feature reuse, boosting overall performance and training stability.
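The multi-scale fusion used in encoder-decoder models can be sketched on a one-dimensional signal. This is a toy under stated assumptions, not U-Net itself: `downsample`, `upsample`, and `tiny_encoder_decoder` are hypothetical names, there are no learned layers, and the input length is assumed to be even.

```python
def downsample(signal):
    """Halve the length by averaging neighbouring pairs (stand-in for pooling)."""
    return [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal) - 1, 2)]


def upsample(signal):
    """Double the length by repeating each value (nearest-neighbour upsampling)."""
    return [v for v in signal for _ in range(2)]


def tiny_encoder_decoder(signal):
    """Encode to half resolution, decode back, then fuse with the skipped features."""
    skip = signal                    # encoder features kept at full resolution
    bottleneck = downsample(signal)  # coarse features: fine detail is averaged away
    decoded = upsample(bottleneck)   # full length again, but blurred
    # Skip connection by concatenation: each position carries (decoded, original).
    return [(d, s) for d, s in zip(decoded, skip)]


fused = tiny_encoder_decoder([1.0, 3.0, 5.0, 9.0])
print(fused)
```

The decoded values alone cannot distinguish 1.0 from 3.0, since both were averaged to 2.0. The skipped encoder features restore that detail, which is the spatial information the concatenating skip connection is meant to preserve.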

When to Use Residual or Skip Connections

Residual connections are ideal in deep neural networks where gradient flow and training stability are critical, particularly in architectures like ResNet to prevent vanishing gradients. Skip connections are more flexible, used to directly pass information between non-adjacent layers, commonly in encoder-decoder structures such as U-Net for preserving spatial information. Choose residual connections when improving convergence in deep layers, and skip connections when merging features across layers with different resolutions or semantics.

Future Trends in Connection Techniques for AI

Future trends in connection techniques for AI emphasize the evolution of residual connections and skip connections to enhance deep neural network training efficiency and model expressiveness. Emerging architectures integrate adaptive residual pathways that dynamically adjust connection weights, improving gradient flow and reducing vanishing gradient problems in ultra-deep networks. Research is also exploring hybrid skip-residual frameworks that combine the strengths of both to optimize feature reuse and modular learning across diverse AI applications.
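One simple way to picture an adjustable residual pathway is a scalar weight on the residual branch, y = x + alpha * F(x). The sketch below is a hypothetical illustration of that idea only: `gated_residual` and `alpha` are names chosen for the example, and in a real model alpha would be learned during training rather than set by hand.

```python
def gated_residual(x, fx, alpha):
    """Return x + alpha * F(x), where `fx` is the already-computed branch output."""
    return [a + alpha * b for a, b in zip(x, fx)]


x = [1.0, 2.0]
fx = [4.0, -2.0]  # assumed output of the residual branch F(x)

identity_only = gated_residual(x, fx, alpha=0.0)  # branch switched off
half_weight = gated_residual(x, fx, alpha=0.5)    # branch partly mixed in
full_weight = gated_residual(x, fx, alpha=1.0)    # ordinary residual connection
print(identity_only, half_weight, full_weight)
```

Setting alpha to 0 reduces the block to the identity and setting it to 1 recovers the standard residual connection, so a single parameter moves between the two behaviours.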

Residual connection vs Skip connection Infographic

techiny.com
